Re: [REVIEW] Re: Compression of full-page-writes

| Lists: | pgsql-hackers |

|---|

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Compression of full-page-writes |

| Date: | 2013-08-30 02:55:54 |

| Message-ID: | CAHGQGwGqG8e9YN0fNCUZqTTT=hNr7Ly516kfT5ffqf4pp1qnHg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

Attached patch adds new GUC parameter 'compress_backup_block'.

When this parameter is enabled, the server just compresses FPW

(full-page-writes) in WAL by using pglz_compress() before inserting it

to the WAL buffers. Then, the compressed FPW is decompressed

in recovery. This is very simple patch.

The purpose of this patch is the reduction of WAL size.

Under heavy write load, the server needs to write a large amount of

WAL and this is likely to be a bottleneck. What's the worse is,

in replication, a large amount of WAL would have harmful effect on

not only WAL writing in the master, but also WAL streaming and

WAL writing in the standby. Also we would need to spend more

money on the storage to store such a large data.

I'd like to alleviate such harmful situations by reducing WAL size.

My idea is very simple, just compress FPW because FPW is

a big part of WAL. I used pglz_compress() as a compression method,

but you might think that other method is better. We can add

something like FPW-compression-hook for that later. The patch

adds new GUC parameter, but I'm thinking to merge it to full_page_writes

parameter to avoid increasing the number of GUC. That is,

I'm thinking to change full_page_writes so that it can accept new value

'compress'.

I measured how much WAL this patch can reduce, by using pgbench.

* Server spec

CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

Mem: 16GB

Disk: 500GB SSD Samsung 840

* Benchmark

pgbench -c 32 -j 4 -T 900 -M prepared

scaling factor: 100

checkpoint_segments = 1024

checkpoint_timeout = 5min

(every checkpoint during benchmark were triggered by checkpoint_timeout)

* Result

[tps]

1386.8 (compress_backup_block = off)

1627.7 (compress_backup_block = on)

[the amount of WAL generated during running pgbench]

4302 MB (compress_backup_block = off)

1521 MB (compress_backup_block = on)

At least in my test, the patch could reduce the WAL size to one-third!

The patch is WIP yet. But I'd like to hear the opinions about this idea

before completing it, and then add the patch to next CF if okay.

Regards,

--

Fujii Masao

| Attachment | Content-Type | Size |

|---|---|---|

| compress_fpw_v1.patch | application/octet-stream | 4.4 KB |

| From: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 03:07:45 |

| Message-ID: | 52200C81.4000108@uptime.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/08/30 11:55), Fujii Masao wrote:

> Hi,

>

> Attached patch adds new GUC parameter 'compress_backup_block'.

> When this parameter is enabled, the server just compresses FPW

> (full-page-writes) in WAL by using pglz_compress() before inserting it

> to the WAL buffers. Then, the compressed FPW is decompressed

> in recovery. This is very simple patch.

>

> The purpose of this patch is the reduction of WAL size.

> Under heavy write load, the server needs to write a large amount of

> WAL and this is likely to be a bottleneck. What's the worse is,

> in replication, a large amount of WAL would have harmful effect on

> not only WAL writing in the master, but also WAL streaming and

> WAL writing in the standby. Also we would need to spend more

> money on the storage to store such a large data.

> I'd like to alleviate such harmful situations by reducing WAL size.

>

> My idea is very simple, just compress FPW because FPW is

> a big part of WAL. I used pglz_compress() as a compression method,

> but you might think that other method is better. We can add

> something like FPW-compression-hook for that later. The patch

> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

> parameter to avoid increasing the number of GUC. That is,

> I'm thinking to change full_page_writes so that it can accept new value

> 'compress'.

>

> I measured how much WAL this patch can reduce, by using pgbench.

>

> * Server spec

> CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

> Mem: 16GB

> Disk: 500GB SSD Samsung 840

>

> * Benchmark

> pgbench -c 32 -j 4 -T 900 -M prepared

> scaling factor: 100

>

> checkpoint_segments = 1024

> checkpoint_timeout = 5min

> (every checkpoint during benchmark were triggered by checkpoint_timeout)

I believe that the amount of backup blocks in WAL files is affected

by how often the checkpoints are occurring, particularly under such

update-intensive workload.

Under your configuration, checkpoint should occur so often.

So, you need to change checkpoint_timeout larger in order to

determine whether the patch is realistic.

Regards,

>

> * Result

> [tps]

> 1386.8 (compress_backup_block = off)

> 1627.7 (compress_backup_block = on)

>

> [the amount of WAL generated during running pgbench]

> 4302 MB (compress_backup_block = off)

> 1521 MB (compress_backup_block = on)

>

> At least in my test, the patch could reduce the WAL size to one-third!

>

> The patch is WIP yet. But I'd like to hear the opinions about this idea

> before completing it, and then add the patch to next CF if okay.

>

> Regards,

>

>

>

>

--

Satoshi Nagayasu <snaga(at)uptime(dot)jp>

Uptime Technologies, LLC. http://www.uptime.jp

| From: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 03:20:59 |

| Message-ID: | 52200F9B.6090609@uptime.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/08/30 12:07), Satoshi Nagayasu wrote:

>

>

> (2013/08/30 11:55), Fujii Masao wrote:

>> Hi,

>>

>> Attached patch adds new GUC parameter 'compress_backup_block'.

>> When this parameter is enabled, the server just compresses FPW

>> (full-page-writes) in WAL by using pglz_compress() before inserting it

>> to the WAL buffers. Then, the compressed FPW is decompressed

>> in recovery. This is very simple patch.

>>

>> The purpose of this patch is the reduction of WAL size.

>> Under heavy write load, the server needs to write a large amount of

>> WAL and this is likely to be a bottleneck. What's the worse is,

>> in replication, a large amount of WAL would have harmful effect on

>> not only WAL writing in the master, but also WAL streaming and

>> WAL writing in the standby. Also we would need to spend more

>> money on the storage to store such a large data.

>> I'd like to alleviate such harmful situations by reducing WAL size.

>>

>> My idea is very simple, just compress FPW because FPW is

>> a big part of WAL. I used pglz_compress() as a compression method,

>> but you might think that other method is better. We can add

>> something like FPW-compression-hook for that later. The patch

>> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

>> parameter to avoid increasing the number of GUC. That is,

>> I'm thinking to change full_page_writes so that it can accept new value

>> 'compress'.

>>

>> I measured how much WAL this patch can reduce, by using pgbench.

>>

>> * Server spec

>> CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

>> Mem: 16GB

>> Disk: 500GB SSD Samsung 840

>>

>> * Benchmark

>> pgbench -c 32 -j 4 -T 900 -M prepared

>> scaling factor: 100

>>

>> checkpoint_segments = 1024

>> checkpoint_timeout = 5min

>> (every checkpoint during benchmark were triggered by

>> checkpoint_timeout)

>

> I believe that the amount of backup blocks in WAL files is affected

> by how often the checkpoints are occurring, particularly under such

> update-intensive workload.

>

> Under your configuration, checkpoint should occur so often.

> So, you need to change checkpoint_timeout larger in order to

> determine whether the patch is realistic.

In fact, the following chart shows that checkpoint_timeout=30min

also reduces WAL size to one-third, compared with 5min timeout,

in the pgbench experimentation.

https://www.oss.ecl.ntt.co.jp/ossc/oss/img/pglesslog_img02.jpg

Regards,

>

> Regards,

>

>>

>> * Result

>> [tps]

>> 1386.8 (compress_backup_block = off)

>> 1627.7 (compress_backup_block = on)

>>

>> [the amount of WAL generated during running pgbench]

>> 4302 MB (compress_backup_block = off)

>> 1521 MB (compress_backup_block = on)

>>

>> At least in my test, the patch could reduce the WAL size to one-third!

>>

>> The patch is WIP yet. But I'd like to hear the opinions about this idea

>> before completing it, and then add the patch to next CF if okay.

>>

>> Regards,

>>

>>

>>

>>

>

--

Satoshi Nagayasu <snaga(at)uptime(dot)jp>

Uptime Technologies, LLC. http://www.uptime.jp

| From: | Peter Geoghegan <pg(at)heroku(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 03:43:33 |

| Message-ID: | CAM3SWZQGGgKUKt_P+m3jpce7um=MbPKHwOrhez_O_8ncgG8NFA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Aug 29, 2013 at 7:55 PM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> [the amount of WAL generated during running pgbench]

> 4302 MB (compress_backup_block = off)

> 1521 MB (compress_backup_block = on)

Interesting.

I wonder, what is the impact on recovery time under the same

conditions? I suppose that the cost of the random I/O involved would

probably dominate just as with compress_backup_block = off. That said,

you've used an SSD here, so perhaps not.

--

Peter Geoghegan

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 04:43:53 |

| Message-ID: | CAA4eK1Lz_6hw0a1SVred-g5iq6uYxU2LPoP6JcuKKye5dorHxQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 8:25 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> Hi,

>

> Attached patch adds new GUC parameter 'compress_backup_block'.

> When this parameter is enabled, the server just compresses FPW

> (full-page-writes) in WAL by using pglz_compress() before inserting it

> to the WAL buffers. Then, the compressed FPW is decompressed

> in recovery. This is very simple patch.

>

> The purpose of this patch is the reduction of WAL size.

> Under heavy write load, the server needs to write a large amount of

> WAL and this is likely to be a bottleneck. What's the worse is,

> in replication, a large amount of WAL would have harmful effect on

> not only WAL writing in the master, but also WAL streaming and

> WAL writing in the standby. Also we would need to spend more

> money on the storage to store such a large data.

> I'd like to alleviate such harmful situations by reducing WAL size.

>

> My idea is very simple, just compress FPW because FPW is

> a big part of WAL. I used pglz_compress() as a compression method,

> but you might think that other method is better. We can add

> something like FPW-compression-hook for that later. The patch

> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

> parameter to avoid increasing the number of GUC. That is,

> I'm thinking to change full_page_writes so that it can accept new value

> 'compress'.

>

> I measured how much WAL this patch can reduce, by using pgbench.

>

> * Server spec

> CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

> Mem: 16GB

> Disk: 500GB SSD Samsung 840

>

> * Benchmark

> pgbench -c 32 -j 4 -T 900 -M prepared

> scaling factor: 100

>

> checkpoint_segments = 1024

> checkpoint_timeout = 5min

> (every checkpoint during benchmark were triggered by checkpoint_timeout)

>

> * Result

> [tps]

> 1386.8 (compress_backup_block = off)

> 1627.7 (compress_backup_block = on)

>

> [the amount of WAL generated during running pgbench]

> 4302 MB (compress_backup_block = off)

> 1521 MB (compress_backup_block = on)

This is really nice data.

I think if you want, you can once try with one of the tests Heikki has

posted for one of my other patch which is here:

http://www.postgresql.org/message-id/51366323.8070606@vmware.com

Also if possible, for with lesser clients (1,2,4) and may be with more

frequency of checkpoint.

This is just to show benefits of this idea with other kind of workload.

I think we can do these tests later as well, I had mentioned because

sometime back (probably 6 months), one of my colleagues have tried

exactly the same idea of using compression method (LZ and few others)

for FPW, but it turned out that even though the WAL size is reduced

but performance went down which is not the case in the data you have

shown even though you have used SSD, might be he has done some mistake

as he was not as experienced, but I think still it's good to check on

various workloads.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 05:32:58 |

| Message-ID: | 52202E8A.5030707@lab.ntt.co.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/08/30 11:55), Fujii Masao wrote:

> * Benchmark

> pgbench -c 32 -j 4 -T 900 -M prepared

> scaling factor: 100

>

> checkpoint_segments = 1024

> checkpoint_timeout = 5min

> (every checkpoint during benchmark were triggered by checkpoint_timeout)

Did you execute munual checkpoint before starting benchmark?

We read only your message, it occuered three times checkpoint during benchmark.

But if you did not executed manual checkpoint, it would be different.

You had better clear this point for more transparent evaluation.

Regards,

--

Mitsumasa KONDO

NTT Open Software Center

| From: | Nikhil Sontakke <nikkhils(at)gmail(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 05:37:40 |

| Message-ID: | CANgU5ZdVPxztiN5cmyq8Qxwt60+Dg7J4V4HTvcTduxSUs11mSQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi Fujii-san,

I must be missing something really trivial, but why not try to compress all

types of WAL blocks and not just FPW?

Regards,

Nikhils

On Fri, Aug 30, 2013 at 8:25 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> Hi,

>

> Attached patch adds new GUC parameter 'compress_backup_block'.

> When this parameter is enabled, the server just compresses FPW

> (full-page-writes) in WAL by using pglz_compress() before inserting it

> to the WAL buffers. Then, the compressed FPW is decompressed

> in recovery. This is very simple patch.

>

> The purpose of this patch is the reduction of WAL size.

> Under heavy write load, the server needs to write a large amount of

> WAL and this is likely to be a bottleneck. What's the worse is,

> in replication, a large amount of WAL would have harmful effect on

> not only WAL writing in the master, but also WAL streaming and

> WAL writing in the standby. Also we would need to spend more

> money on the storage to store such a large data.

> I'd like to alleviate such harmful situations by reducing WAL size.

>

> My idea is very simple, just compress FPW because FPW is

> a big part of WAL. I used pglz_compress() as a compression method,

> but you might think that other method is better. We can add

> something like FPW-compression-hook for that later. The patch

> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

> parameter to avoid increasing the number of GUC. That is,

> I'm thinking to change full_page_writes so that it can accept new value

> 'compress'.

>

> I measured how much WAL this patch can reduce, by using pgbench.

>

> * Server spec

> CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

> Mem: 16GB

> Disk: 500GB SSD Samsung 840

>

> * Benchmark

> pgbench -c 32 -j 4 -T 900 -M prepared

> scaling factor: 100

>

> checkpoint_segments = 1024

> checkpoint_timeout = 5min

> (every checkpoint during benchmark were triggered by checkpoint_timeout)

>

> * Result

> [tps]

> 1386.8 (compress_backup_block = off)

> 1627.7 (compress_backup_block = on)

>

> [the amount of WAL generated during running pgbench]

> 4302 MB (compress_backup_block = off)

> 1521 MB (compress_backup_block = on)

>

> At least in my test, the patch could reduce the WAL size to one-third!

>

> The patch is WIP yet. But I'd like to hear the opinions about this idea

> before completing it, and then add the patch to next CF if okay.

>

> Regards,

>

> --

> Fujii Masao

>

>

> --

> Sent via pgsql-hackers mailing list (pgsql-hackers(at)postgresql(dot)org)

> To make changes to your subscription:

> http://www.postgresql.org/mailpref/pgsql-hackers

>

>

| From: | Michael Paquier <michael(dot)paquier(at)gmail(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 05:55:23 |

| Message-ID: | CAB7nPqRZxh6NrLYGr5B3eSmdE=O_VYowKtzBOkStxDA_yAy2rQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 11:55 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> My idea is very simple, just compress FPW because FPW is

> a big part of WAL. I used pglz_compress() as a compression method,

> but you might think that other method is better. We can add

> something like FPW-compression-hook for that later. The patch

> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

> parameter to avoid increasing the number of GUC. That is,

> I'm thinking to change full_page_writes so that it can accept new value

> 'compress'.

Instead of a generic 'compress', what about using the name of the

compression method as parameter value? Just to keep the door open to

new types of compression methods.

> * Result

> [tps]

> 1386.8 (compress_backup_block = off)

> 1627.7 (compress_backup_block = on)

>

> [the amount of WAL generated during running pgbench]

> 4302 MB (compress_backup_block = off)

> 1521 MB (compress_backup_block = on)

>

> At least in my test, the patch could reduce the WAL size to one-third!

Nice numbers! Testing this patch with other benchmarks than pgbench

would be interesting as well.

--

Michael

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | Peter Geoghegan <pg(at)heroku(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 05:55:31 |

| Message-ID: | CAHGQGwFs0b95FZO9tPNOxTnKLSWHytjJrMYK8tqMGWxKOykXcQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 12:43 PM, Peter Geoghegan <pg(at)heroku(dot)com> wrote:

> On Thu, Aug 29, 2013 at 7:55 PM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> [the amount of WAL generated during running pgbench]

>> 4302 MB (compress_backup_block = off)

>> 1521 MB (compress_backup_block = on)

>

> Interesting.

>

> I wonder, what is the impact on recovery time under the same

> conditions?

Will test! I can imagine that the recovery time would be a bit

longer with compress_backup_block=on because compressed

FPW needs to be decompressed.

> I suppose that the cost of the random I/O involved would

> probably dominate just as with compress_backup_block = off. That said,

> you've used an SSD here, so perhaps not.

Oh, maybe my description was confusing. full_page_writes was enabled

while running the benchmark even if compress_backup_block = off.

I've not merged those two parameters yet. So even in

compress_backup_block = off, random I/O would not be increased in recovery.

Regards,

--

Fujii Masao

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 06:02:09 |

| Message-ID: | CAHGQGwFuWdA-Y7OWScBPKM17xDVDtdjBYRqxANLMVNhbPj5g4Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 1:43 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> On Fri, Aug 30, 2013 at 8:25 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> Hi,

>>

>> Attached patch adds new GUC parameter 'compress_backup_block'.

>> When this parameter is enabled, the server just compresses FPW

>> (full-page-writes) in WAL by using pglz_compress() before inserting it

>> to the WAL buffers. Then, the compressed FPW is decompressed

>> in recovery. This is very simple patch.

>>

>> The purpose of this patch is the reduction of WAL size.

>> Under heavy write load, the server needs to write a large amount of

>> WAL and this is likely to be a bottleneck. What's the worse is,

>> in replication, a large amount of WAL would have harmful effect on

>> not only WAL writing in the master, but also WAL streaming and

>> WAL writing in the standby. Also we would need to spend more

>> money on the storage to store such a large data.

>> I'd like to alleviate such harmful situations by reducing WAL size.

>>

>> My idea is very simple, just compress FPW because FPW is

>> a big part of WAL. I used pglz_compress() as a compression method,

>> but you might think that other method is better. We can add

>> something like FPW-compression-hook for that later. The patch

>> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

>> parameter to avoid increasing the number of GUC. That is,

>> I'm thinking to change full_page_writes so that it can accept new value

>> 'compress'.

>>

>> I measured how much WAL this patch can reduce, by using pgbench.

>>

>> * Server spec

>> CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

>> Mem: 16GB

>> Disk: 500GB SSD Samsung 840

>>

>> * Benchmark

>> pgbench -c 32 -j 4 -T 900 -M prepared

>> scaling factor: 100

>>

>> checkpoint_segments = 1024

>> checkpoint_timeout = 5min

>> (every checkpoint during benchmark were triggered by checkpoint_timeout)

>>

>> * Result

>> [tps]

>> 1386.8 (compress_backup_block = off)

>> 1627.7 (compress_backup_block = on)

>>

>> [the amount of WAL generated during running pgbench]

>> 4302 MB (compress_backup_block = off)

>> 1521 MB (compress_backup_block = on)

>

> This is really nice data.

>

> I think if you want, you can once try with one of the tests Heikki has

> posted for one of my other patch which is here:

> http://www.postgresql.org/message-id/51366323.8070606@vmware.com

>

> Also if possible, for with lesser clients (1,2,4) and may be with more

> frequency of checkpoint.

>

> This is just to show benefits of this idea with other kind of workload.

Yep, I will do more tests.

> I think we can do these tests later as well, I had mentioned because

> sometime back (probably 6 months), one of my colleagues have tried

> exactly the same idea of using compression method (LZ and few others)

> for FPW, but it turned out that even though the WAL size is reduced

> but performance went down which is not the case in the data you have

> shown even though you have used SSD, might be he has done some mistake

> as he was not as experienced, but I think still it's good to check on

> various workloads.

I'd appreciate if you test the patch with HDD. Now I have no machine with HDD.

Regards,

--

Fujii Masao

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 06:03:39 |

| Message-ID: | CAHGQGwGn8qtJXAmSVveupvHTaPhTj=FJnWGofHS4pn5h-CG9aQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 2:32 PM, KONDO Mitsumasa

<kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

> (2013/08/30 11:55), Fujii Masao wrote:

>>

>> * Benchmark

>> pgbench -c 32 -j 4 -T 900 -M prepared

>> scaling factor: 100

>>

>> checkpoint_segments = 1024

>> checkpoint_timeout = 5min

>> (every checkpoint during benchmark were triggered by

>> checkpoint_timeout)

>

> Did you execute munual checkpoint before starting benchmark?

Yes.

> We read only your message, it occuered three times checkpoint during

> benchmark.

> But if you did not executed manual checkpoint, it would be different.

>

> You had better clear this point for more transparent evaluation.

What I executed was:

-------------------------------------

CHECKPOINT

SELECT pg_current_xlog_location()

pgbench -c 32 -j 4 -T 900 -M prepared -r -P 10

SELECT pg_current_xlog_location()

SELECT pg_xlog_location_diff() -- calculate the diff of the above locations

-------------------------------------

I repeated this several times to eliminate the noise.

Regards,

--

Fujii Masao

| From: | Peter Geoghegan <pg(at)heroku(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 06:05:38 |

| Message-ID: | CAM3SWZSWV7km5AMqtDMXOA8vWnfrCTX2XA6fr5wwnW2PTQLw0Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Aug 29, 2013 at 10:55 PM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> I suppose that the cost of the random I/O involved would

>> probably dominate just as with compress_backup_block = off. That said,

>> you've used an SSD here, so perhaps not.

>

> Oh, maybe my description was confusing. full_page_writes was enabled

> while running the benchmark even if compress_backup_block = off.

> I've not merged those two parameters yet. So even in

> compress_backup_block = off, random I/O would not be increased in recovery.

I understood it that way. I just meant that it could be that the

random I/O was so expensive that the additional cost of decompressing

the FPIs looked insignificant in comparison. If that was the case, the

increase in recovery time would be modest.

--

Peter Geoghegan

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | Nikhil Sontakke <nikkhils(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 06:15:12 |

| Message-ID: | CAHGQGwFBqDP=D=uyG4dnQ1r6Wrf2zgtuDDDEj2A-Jbrc3wdPvg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 2:37 PM, Nikhil Sontakke <nikkhils(at)gmail(dot)com> wrote:

> Hi Fujii-san,

>

> I must be missing something really trivial, but why not try to compress all

> types of WAL blocks and not just FPW?

The size of non-FPW WAL is small, compared to that of FPW.

I thought that compression of such a small WAL would not have

big effect on the reduction of WAL size. Rather, compression of

every WAL records might cause large performance overhead.

Also, focusing on FPW makes the patch very simple. We can

add the compression of other WAL later if we want.

Regards,

--

Fujii Masao

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 06:57:10 |

| Message-ID: | 52204246.6030600@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 30.08.2013 05:55, Fujii Masao wrote:

> * Result

> [tps]

> 1386.8 (compress_backup_block = off)

> 1627.7 (compress_backup_block = on)

It would be good to check how much of this effect comes from reducing

the amount of data that needs to be CRC'd, because there has been some

talk of replacing the current CRC-32 algorithm with something faster.

See

http://www.postgresql.org/message-id/20130829223004.GD4283@awork2.anarazel.de.

It might even be beneficial to use one routine for full-page-writes,

which are generally much larger than other WAL records, and another

routine for smaller records. As long as they both produce the same CRC,

of course.

Speeding up the CRC calculation obviously won't help with the WAL volume

per se, ie. you still generate the same amount of WAL that needs to be

shipped in replication. But then again, if all you want to do is to

reduce the volume, you could just compress the whole WAL stream.

- Heikki

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-08-30 22:34:22 |

| Message-ID: | CA+TgmoZZbjA3Ht6iNXOBwjwdHo-3aGKd6oO1nFUM5mODE=-o+w@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Aug 29, 2013 at 10:55 PM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> Attached patch adds new GUC parameter 'compress_backup_block'.

I think this is a great idea.

(This is not to disagree with any of the suggestions made on this

thread for further investigation, all of which I think I basically

agree with. But I just wanted to voice general support for the

general idea, regardless of what specifically we end up with.)

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-11 10:39:14 |

| Message-ID: | CAHGQGwFZgpd8kYe-H5GSQgXx7BC-w4o6FqUaB5TMxV9Wfh-hmw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Aug 30, 2013 at 11:55 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> Hi,

>

> Attached patch adds new GUC parameter 'compress_backup_block'.

> When this parameter is enabled, the server just compresses FPW

> (full-page-writes) in WAL by using pglz_compress() before inserting it

> to the WAL buffers. Then, the compressed FPW is decompressed

> in recovery. This is very simple patch.

>

> The purpose of this patch is the reduction of WAL size.

> Under heavy write load, the server needs to write a large amount of

> WAL and this is likely to be a bottleneck. What's the worse is,

> in replication, a large amount of WAL would have harmful effect on

> not only WAL writing in the master, but also WAL streaming and

> WAL writing in the standby. Also we would need to spend more

> money on the storage to store such a large data.

> I'd like to alleviate such harmful situations by reducing WAL size.

>

> My idea is very simple, just compress FPW because FPW is

> a big part of WAL. I used pglz_compress() as a compression method,

> but you might think that other method is better. We can add

> something like FPW-compression-hook for that later. The patch

> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

> parameter to avoid increasing the number of GUC. That is,

> I'm thinking to change full_page_writes so that it can accept new value

> 'compress'.

Done. Attached is the updated version of the patch.

In this patch, full_page_writes accepts three values: on, compress, and off.

When it's set to compress, the full page image is compressed before it's

inserted into the WAL buffers.

I measured how much this patch affects the performance and the WAL

volume again, and I also measured how much this patch affects the

recovery time.

* Server spec

CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

Mem: 16GB

Disk: 500GB SSD Samsung 840

* Benchmark

pgbench -c 32 -j 4 -T 900 -M prepared

scaling factor: 100

checkpoint_segments = 1024

checkpoint_timeout = 5min

(every checkpoint during benchmark were triggered by checkpoint_timeout)

* Result

[tps]

1344.2 (full_page_writes = on)

1605.9 (compress)

1810.1 (off)

[the amount of WAL generated during running pgbench]

4422 MB (on)

1517 MB (compress)

885 MB (off)

[time required to replay WAL generated during running pgbench]

61s (on) .... 1209911 transactions were replayed,

recovery speed: 19834.6 transactions/sec

39s (compress) .... 1445446 transactions were replayed,

recovery speed: 37062.7 transactions/sec

37s (off) .... 1629235 transactions were replayed,

recovery speed: 44033.3 transactions/sec

When full_page_writes is disabled, the recovery speed is basically very low

because of random I/O. But, ISTM that, since I was using SSD in my box,

the recovery with full_page_writse=off was fastest.

Regards,

--

Fujii Masao

| Attachment | Content-Type | Size |

|---|---|---|

| compress_fpw_v2.patch | application/octet-stream | 24.1 KB |

| From: | Andres Freund <andres(at)2ndquadrant(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-11 10:43:21 |

| Message-ID: | 20130911104321.GB9411@awork2.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2013-09-11 19:39:14 +0900, Fujii Masao wrote:

> * Benchmark

> pgbench -c 32 -j 4 -T 900 -M prepared

> scaling factor: 100

>

> checkpoint_segments = 1024

> checkpoint_timeout = 5min

> (every checkpoint during benchmark were triggered by checkpoint_timeout)

>

> * Result

> [tps]

> 1344.2 (full_page_writes = on)

> 1605.9 (compress)

> 1810.1 (off)

>

> [the amount of WAL generated during running pgbench]

> 4422 MB (on)

> 1517 MB (compress)

> 885 MB (off)

>

> [time required to replay WAL generated during running pgbench]

> 61s (on) .... 1209911 transactions were replayed,

> recovery speed: 19834.6 transactions/sec

> 39s (compress) .... 1445446 transactions were replayed,

> recovery speed: 37062.7 transactions/sec

> 37s (off) .... 1629235 transactions were replayed,

> recovery speed: 44033.3 transactions/sec

ISTM for those benchmarks you should use an absolute number of

transactions, not one based on elapsed time. Otherwise the comparison

isn't really meaningful.

Greetings,

Andres Freund

--

Andres Freund http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-30 03:49:42 |

| Message-ID: | CAHGQGwF+KcJfzHmvK=_aD7PecVDsP1OA2sEz6-JuYeJtcVq1hA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Sep 11, 2013 at 7:39 PM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> On Fri, Aug 30, 2013 at 11:55 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> Hi,

>>

>> Attached patch adds new GUC parameter 'compress_backup_block'.

>> When this parameter is enabled, the server just compresses FPW

>> (full-page-writes) in WAL by using pglz_compress() before inserting it

>> to the WAL buffers. Then, the compressed FPW is decompressed

>> in recovery. This is very simple patch.

>>

>> The purpose of this patch is the reduction of WAL size.

>> Under heavy write load, the server needs to write a large amount of

>> WAL and this is likely to be a bottleneck. What's the worse is,

>> in replication, a large amount of WAL would have harmful effect on

>> not only WAL writing in the master, but also WAL streaming and

>> WAL writing in the standby. Also we would need to spend more

>> money on the storage to store such a large data.

>> I'd like to alleviate such harmful situations by reducing WAL size.

>>

>> My idea is very simple, just compress FPW because FPW is

>> a big part of WAL. I used pglz_compress() as a compression method,

>> but you might think that other method is better. We can add

>> something like FPW-compression-hook for that later. The patch

>> adds new GUC parameter, but I'm thinking to merge it to full_page_writes

>> parameter to avoid increasing the number of GUC. That is,

>> I'm thinking to change full_page_writes so that it can accept new value

>> 'compress'.

>

> Done. Attached is the updated version of the patch.

>

> In this patch, full_page_writes accepts three values: on, compress, and off.

> When it's set to compress, the full page image is compressed before it's

> inserted into the WAL buffers.

>

> I measured how much this patch affects the performance and the WAL

> volume again, and I also measured how much this patch affects the

> recovery time.

>

> * Server spec

> CPU: 8core, Intel(R) Core(TM) i7-3630QM CPU @ 2.40GHz

> Mem: 16GB

> Disk: 500GB SSD Samsung 840

>

> * Benchmark

> pgbench -c 32 -j 4 -T 900 -M prepared

> scaling factor: 100

>

> checkpoint_segments = 1024

> checkpoint_timeout = 5min

> (every checkpoint during benchmark were triggered by checkpoint_timeout)

>

> * Result

> [tps]

> 1344.2 (full_page_writes = on)

> 1605.9 (compress)

> 1810.1 (off)

>

> [the amount of WAL generated during running pgbench]

> 4422 MB (on)

> 1517 MB (compress)

> 885 MB (off)

On second thought, the patch could compress WAL very much because I

used pgbench.

Most of data in pgbench are pgbench_accounts table's "filler" columns, i.e.,

blank-padded empty strings. So, the compression ratio of WAL was very high.

I will do the same measurement by using another benchmark.

Regards,

--

Fujii Masao

| From: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-30 04:27:39 |

| Message-ID: | 5248FDBB.1090809@lab.ntt.co.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi Fujii-san,

(2013/09/30 12:49), Fujii Masao wrote:

> On second thought, the patch could compress WAL very much because I used pgbench.

> I will do the same measurement by using another benchmark.

If you hope, I can test this patch in DBT-2 benchmark in end of this week.

I will use under following test server.

* Test server

Server: HP Proliant DL360 G7

CPU: Xeon E5640 2.66GHz (1P/4C)

Memory: 18GB(PC3-10600R-9)

Disk: 146GB(15k)*4 RAID1+0

RAID controller: P410i/256MB

This is PG-REX test server as you know.

Regards,

--

Mitsumasa KONDO

NTT Open Source Software Center

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-30 04:34:14 |

| Message-ID: | CAHGQGwFdXoGDvVj=ozML0VK6PkBSTF_TOsrk=7YC-PWoA2Fv9A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Sep 30, 2013 at 1:27 PM, KONDO Mitsumasa

<kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

> Hi Fujii-san,

>

>

> (2013/09/30 12:49), Fujii Masao wrote:

>> On second thought, the patch could compress WAL very much because I used

>> pgbench.

>>

>> I will do the same measurement by using another benchmark.

>

> If you hope, I can test this patch in DBT-2 benchmark in end of this week.

> I will use under following test server.

>

> * Test server

> Server: HP Proliant DL360 G7

> CPU: Xeon E5640 2.66GHz (1P/4C)

> Memory: 18GB(PC3-10600R-9)

> Disk: 146GB(15k)*4 RAID1+0

> RAID controller: P410i/256MB

Yep, please! It's really helpful!

Regards,

--

Fujii Masao

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-30 04:55:46 |

| Message-ID: | CAA4eK1+wkH5ZqwkhwT10nyMT-c5JFJZfZQE+Wg_kmK6My9qa0Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Sep 30, 2013 at 10:04 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> On Mon, Sep 30, 2013 at 1:27 PM, KONDO Mitsumasa

> <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

>> Hi Fujii-san,

>>

>>

>> (2013/09/30 12:49), Fujii Masao wrote:

>>> On second thought, the patch could compress WAL very much because I used

>>> pgbench.

>>>

>>> I will do the same measurement by using another benchmark.

>>

>> If you hope, I can test this patch in DBT-2 benchmark in end of this week.

>> I will use under following test server.

>>

>> * Test server

>> Server: HP Proliant DL360 G7

>> CPU: Xeon E5640 2.66GHz (1P/4C)

>> Memory: 18GB(PC3-10600R-9)

>> Disk: 146GB(15k)*4 RAID1+0

>> RAID controller: P410i/256MB

>

> Yep, please! It's really helpful!

I think it will be useful if you can get the data for 1 and 2 threads

(may be with pgbench itself) as well, because the WAL reduction is

almost sure, but the only thing is that it should not dip tps in some

of the scenarios.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-09-30 10:11:23 |

| Message-ID: | 52494E4B.20604@lab.ntt.co.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/09/30 13:55), Amit Kapila wrote:

> On Mon, Sep 30, 2013 at 10:04 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> Yep, please! It's really helpful!

OK! I test with single instance and synchronous replication constitution.

By the way, you posted patch which is sync_file_range() WAL writing method in 3

years ago. I think it is also good for performance. As the reason, I read

sync_file_range() and fdatasync() in latest linux kernel code(3.9.11),

fdatasync() writes in dirty buffers of the whole file, on the other hand,

sync_file_range() writes in partial dirty buffers. In more detail, these

functions use the same function in kernel source code, fdatasync() is

vfs_fsync_range(file, 0, LLONG_MAX, 1), and sync_file_range() is

vfs_fsync_range(file, offset, amount, 1).

It is obvious that which is more efficiently in WAL writing.

You had better confirm it in linux kernel's git. I think your conviction will be

more deeply.

https://git.kernel.org/cgit/linux/kernel/git/stable/linux-stable.git/tree/fs/sync.c?id=refs/tags/v3.11.2

> I think it will be useful if you can get the data for 1 and 2 threads

> (may be with pgbench itself) as well, because the WAL reduction is

> almost sure, but the only thing is that it should not dip tps in some

> of the scenarios.

That's right. I also want to know about this patch in MD environment, because

MD has strong point in sequential write which like WAL writing.

Regards,

--

Mitsumasa KONDO

NTT Open Source Software Center

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-04 05:19:27 |

| Message-ID: | CAHGQGwF-0eae9pkYAAKk8LoYkWrfHEs8RjR8g=ok0yH7Z0evww@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Sep 30, 2013 at 1:55 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> On Mon, Sep 30, 2013 at 10:04 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> On Mon, Sep 30, 2013 at 1:27 PM, KONDO Mitsumasa

>> <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

>>> Hi Fujii-san,

>>>

>>>

>>> (2013/09/30 12:49), Fujii Masao wrote:

>>>> On second thought, the patch could compress WAL very much because I used

>>>> pgbench.

>>>>

>>>> I will do the same measurement by using another benchmark.

>>>

>>> If you hope, I can test this patch in DBT-2 benchmark in end of this week.

>>> I will use under following test server.

>>>

>>> * Test server

>>> Server: HP Proliant DL360 G7

>>> CPU: Xeon E5640 2.66GHz (1P/4C)

>>> Memory: 18GB(PC3-10600R-9)

>>> Disk: 146GB(15k)*4 RAID1+0

>>> RAID controller: P410i/256MB

>>

>> Yep, please! It's really helpful!

>

> I think it will be useful if you can get the data for 1 and 2 threads

> (may be with pgbench itself) as well, because the WAL reduction is

> almost sure, but the only thing is that it should not dip tps in some

> of the scenarios.

Here is the measurement result of pgbench with 1 thread.

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 900 s

WAL Volume

- 1344 MB (full_page_writes = on)

- 349 MB (compress)

- 78 MB (off)

TPS

117.369221 (on)

143.908024 (compress)

163.722063 (off)

Regards,

--

Fujii Masao

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-05 11:42:13 |

| Message-ID: | CAA4eK1L91SffEaDjDajLrOAfxuzNMv5YGkez+rAO3yEBQa8yxw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Oct 4, 2013 at 10:49 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

> On Mon, Sep 30, 2013 at 1:55 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>> On Mon, Sep 30, 2013 at 10:04 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>>> On Mon, Sep 30, 2013 at 1:27 PM, KONDO Mitsumasa

>>> <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

>>>> Hi Fujii-san,

>>>>

>>>>

>>>> (2013/09/30 12:49), Fujii Masao wrote:

>>>>> On second thought, the patch could compress WAL very much because I used

>>>>> pgbench.

>>>>>

>>>>> I will do the same measurement by using another benchmark.

>>>>

>>>> If you hope, I can test this patch in DBT-2 benchmark in end of this week.

>>>> I will use under following test server.

>>>>

>>>> * Test server

>>>> Server: HP Proliant DL360 G7

>>>> CPU: Xeon E5640 2.66GHz (1P/4C)

>>>> Memory: 18GB(PC3-10600R-9)

>>>> Disk: 146GB(15k)*4 RAID1+0

>>>> RAID controller: P410i/256MB

>>>

>>> Yep, please! It's really helpful!

>>

>> I think it will be useful if you can get the data for 1 and 2 threads

>> (may be with pgbench itself) as well, because the WAL reduction is

>> almost sure, but the only thing is that it should not dip tps in some

>> of the scenarios.

>

> Here is the measurement result of pgbench with 1 thread.

>

> scaling factor: 100

> query mode: prepared

> number of clients: 1

> number of threads: 1

> duration: 900 s

>

> WAL Volume

> - 1344 MB (full_page_writes = on)

> - 349 MB (compress)

> - 78 MB (off)

>

> TPS

> 117.369221 (on)

> 143.908024 (compress)

> 163.722063 (off)

This data is good.

I will check if with the help of my old colleagues, I can get the

performance data on m/c where we have tried similar idea.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Haribabu kommi <haribabu(dot)kommi(at)huawei(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-08 08:33:11 |

| Message-ID: | 8977CB36860C5843884E0A18D8747B0372BC7888@szxeml558-mbs.china.huawei.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 05 October 2013 17:12 Amit Kapila wrote:

>On Fri, Oct 4, 2013 at 10:49 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>> On Mon, Sep 30, 2013 at 1:55 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>>> On Mon, Sep 30, 2013 at 10:04 AM, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> wrote:

>>>> On Mon, Sep 30, 2013 at 1:27 PM, KONDO Mitsumasa

>>>> <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

>>>>> Hi Fujii-san,

>>>>>

>>>>>

>>>>> (2013/09/30 12:49), Fujii Masao wrote:

>>>>>> On second thought, the patch could compress WAL very much because

>>>>>> I used pgbench.

>>>>>>

>>>>>> I will do the same measurement by using another benchmark.

>>>>>

>>>>> If you hope, I can test this patch in DBT-2 benchmark in end of this week.

>>>>> I will use under following test server.

>>>>>

>>>>> * Test server

>>>>> Server: HP Proliant DL360 G7

>>>>> CPU: Xeon E5640 2.66GHz (1P/4C)

>>>>> Memory: 18GB(PC3-10600R-9)

>>>>> Disk: 146GB(15k)*4 RAID1+0

>>>>> RAID controller: P410i/256MB

>>>>

>>>> Yep, please! It's really helpful!

>>>

>>> I think it will be useful if you can get the data for 1 and 2 threads

>>> (may be with pgbench itself) as well, because the WAL reduction is

>>> almost sure, but the only thing is that it should not dip tps in some

>>> of the scenarios.

>>

>> Here is the measurement result of pgbench with 1 thread.

>>

>> scaling factor: 100

>> query mode: prepared

>> number of clients: 1

>> number of threads: 1

>> duration: 900 s

>>

>> WAL Volume

>> - 1344 MB (full_page_writes = on)

>> - 349 MB (compress)

>> - 78 MB (off)

>>

>> TPS

>> 117.369221 (on)

>> 143.908024 (compress)

>> 163.722063 (off)

>This data is good.

>I will check if with the help of my old colleagues, I can get the performance data on m/c where we have tried similar idea.

Thread-1 Threads-2

Head code FPW compress Head code FPW compress

Pgbench-org 5min 1011(0.96GB) 815(0.20GB) 2083(1.24GB) 1843(0.40GB)

Pgbench-1000 5min 958(1.16GB) 778(0.24GB) 1937(2.80GB) 1659(0.73GB)

Pgbench-org 15min 1065(1.43GB) 983(0.56GB) 2094(1.93GB) 2025(1.09GB)

Pgbench-1000 15min 1020(3.70GB) 898(1.05GB) 1383(5.31GB) 1908(2.49GB)

Pgbench-org - original pgbench

Pgbench-1000 - changed pgbench with a record size of 1000.

5 min - pgbench test carried out for 5 min.

15 min - pgbench test carried out for 15 min.

The checkpoint_timeout and checkpoint_segments are increased to make sure no checkpoint happens during the test run.

From the above readings it is observed that,

1. There a performance dip in one or two threads test, the amount of dip reduces with the test run time.

2. For two threads pgbench-1000 record size test, the fpw compress performance is good in 15min run.

3. More than 50% WAL reduction in all scenarios.

All these readings are measured with pgbench query mode as simple.

Please find the attached sheet for more details regarding machine and test configuration.

Regards,

Hari babu.

| Attachment | Content-Type | Size |

|---|---|---|

| compress_fpw.htm | text/html | 78.4 KB |

| From: | Andres Freund <andres(at)2ndquadrant(dot)com> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-08 09:49:11 |

| Message-ID: | 20131008094911.GB3698093@alap2.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2013-09-11 12:43:21 +0200, Andres Freund wrote:

> On 2013-09-11 19:39:14 +0900, Fujii Masao wrote:

> > * Benchmark

> > pgbench -c 32 -j 4 -T 900 -M prepared

> > scaling factor: 100

> >

> > checkpoint_segments = 1024

> > checkpoint_timeout = 5min

> > (every checkpoint during benchmark were triggered by checkpoint_timeout)

> >

> > * Result

> > [tps]

> > 1344.2 (full_page_writes = on)

> > 1605.9 (compress)

> > 1810.1 (off)

> >

> > [the amount of WAL generated during running pgbench]

> > 4422 MB (on)

> > 1517 MB (compress)

> > 885 MB (off)

> >

> > [time required to replay WAL generated during running pgbench]

> > 61s (on) .... 1209911 transactions were replayed,

> > recovery speed: 19834.6 transactions/sec

> > 39s (compress) .... 1445446 transactions were replayed,

> > recovery speed: 37062.7 transactions/sec

> > 37s (off) .... 1629235 transactions were replayed,

> > recovery speed: 44033.3 transactions/sec

>

> ISTM for those benchmarks you should use an absolute number of

> transactions, not one based on elapsed time. Otherwise the comparison

> isn't really meaningful.

I really think we need to see recovery time benchmarks with a constant

amount of transactions to judge this properly.

Greetings,

Andres Freund

--

Andres Freund http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

|---|---|

| To: | Haribabu kommi <haribabu(dot)kommi(at)huawei(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Fujii Masao <masao(dot)fujii(at)gmail(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-08 09:51:34 |

| Message-ID: | 5253D5A6.7080409@lab.ntt.co.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/10/08 17:33), Haribabu kommi wrote:

> The checkpoint_timeout and checkpoint_segments are increased to make sure no checkpoint happens during the test run.

Your setting is easy occurred checkpoint in checkpoint_segments = 256. I don't

know number of disks in your test server, in my test server which has 4 magnetic

disk(1.5k rpm), postgres generates 50 - 100 WALs per minutes.

And I cannot understand your setting which is sync_commit = off. This setting

tend to cause cpu bottle-neck and data-loss. It is not general in database usage.

Therefore, your test is not fair comparison for Fujii's patch.

Going back to my DBT-2 benchmark, I have not got good performance (almost same

performance). So I am checking hunk, my setting, or something wrong in Fujii's

patch now. I am going to try to send test result tonight.

Regards,

--

Mitsumasa KONDO

NTT Open Source Software Center

| From: | Haribabu kommi <haribabu(dot)kommi(at)huawei(dot)com> |

|---|---|

| To: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Fujii Masao <masao(dot)fujii(at)gmail(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-08 11:13:04 |

| Message-ID: | 8977CB36860C5843884E0A18D8747B0372BC790D@szxeml558-mbs.china.huawei.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 08 October 2013 15:22 KONDO Mitsumasa wrote:

> (2013/10/08 17:33), Haribabu kommi wrote:

>> The checkpoint_timeout and checkpoint_segments are increased to make sure no checkpoint happens during the test run.

>Your setting is easy occurred checkpoint in checkpoint_segments = 256. I don't know number of disks in your test server, in my test server which has 4 magnetic disk(1.5k rpm), postgres generates 50 - 100 WALs per minutes.

A manual checkpoint is executed before starting of the test and verified as no checkpoint happened during the run by increasing the "checkpoint_warning".

>And I cannot understand your setting which is sync_commit = off. This setting tend to cause cpu bottle-neck and data-loss. It is not general in database usage.

Therefore, your test is not fair comparison for Fujii's patch.

I chosen the sync_commit=off mode because it generates more tps, thus it increases the volume of WAL.

I will test with sync_commit=on mode and provide the test results.

Regards,

Hari babu.

| From: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-08 13:07:47 |

| Message-ID: | 525403A3.7000606@lab.ntt.co.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

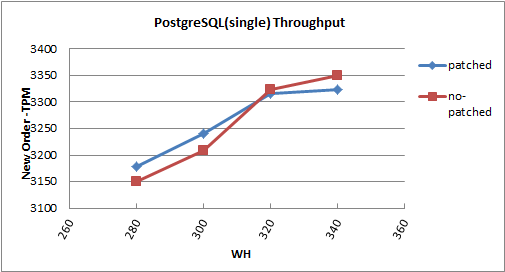

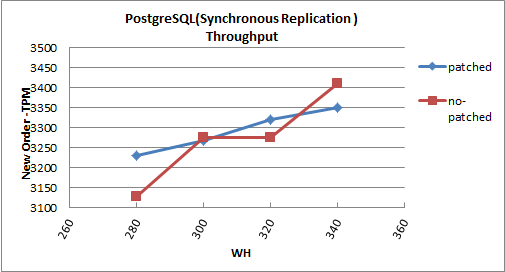

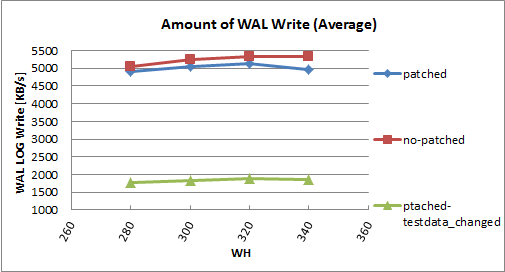

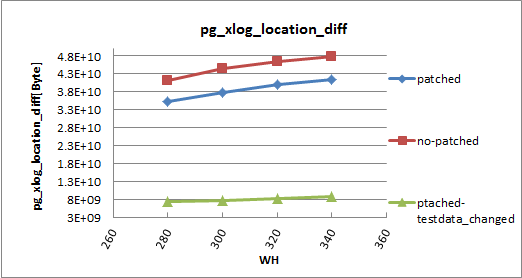

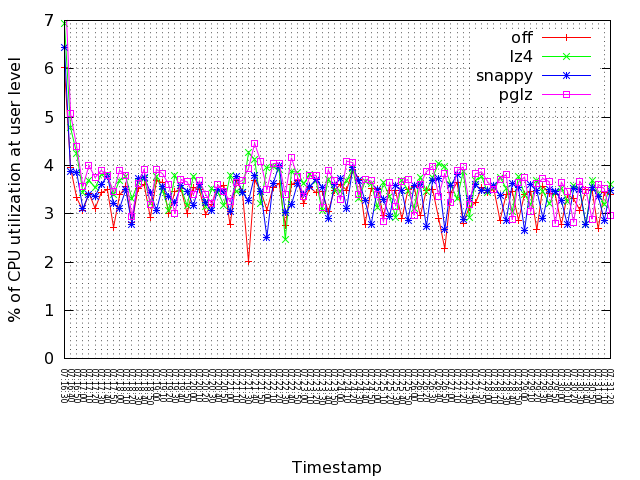

I tested dbt-2 benchmark in single instance and synchronous replication.

Unfortunately, my benchmark results were not seen many differences...

* Test server

Server: HP Proliant DL360 G7

CPU: Xeon E5640 2.66GHz (1P/4C)

Memory: 18GB(PC3-10600R-9)

Disk: 146GB(15k)*4 RAID1+0

RAID controller: P410i/256MB

* Result

** Single instance**

| NOTPM | 90%tile | Average | S.Deviation

------------+-----------+-------------+---------+-------------

no-patched | 3322.93 | 20.469071 | 5.882 | 10.478

patched | 3315.42 | 19.086105 | 5.669 | 9.108

** Synchronous Replication **

| NOTPM | 90%tile | Average | S.Deviation

------------+-----------+-------------+---------+-------------

no-patched | 3275.55 | 21.332866 | 6.072 | 9.882

patched | 3318.82 | 18.141807 | 5.757 | 9.829

** Detail of result

http://pgstatsinfo.projects.pgfoundry.org/DBT-2_Fujii_patch/

I set full_page_write = compress with Fujii's patch in DBT-2. But it does not

seems to effect for eleminating WAL files. I will try to DBT-2 benchmark more

once, and try to normal pgbench in my test server.

Regards,

--

Mitsumasa KONDO

NTT Open Source Software Center

| Attachment | Content-Type | Size |

|---|---|---|

|

image/png | 6.6 KB |

|

image/png | 9.8 KB |

|

image/png | 8.7 KB |

|

image/png | 9.3 KB |

| From: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

|---|---|

| To: | Haribabu kommi <haribabu(dot)kommi(at)huawei(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Fujii Masao <masao(dot)fujii(at)gmail(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-08 13:11:50 |

| Message-ID: | 52540496.5060501@lab.ntt.co.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/10/08 20:13), Haribabu kommi wrote:

> I chosen the sync_commit=off mode because it generates more tps, thus it increases the volume of WAL.

I did not think to there. Sorry...

> I will test with sync_commit=on mode and provide the test results.

OK. Thanks!

--

Mitsumasa KONDO

NTT Open Source Software Center

| From: | Haribabu kommi <haribabu(dot)kommi(at)huawei(dot)com> |

|---|---|

| To: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp>, Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-09 04:35:59 |

| Message-ID: | 8977CB36860C5843884E0A18D8747B0372BC7BD2@szxeml558-mbs.china.huawei.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 08 October 2013 18:42 KONDO Mitsumasa wrote:

>(2013/10/08 20:13), Haribabu kommi wrote:

>> I will test with sync_commit=on mode and provide the test results.

>OK. Thanks!

Pgbench test results with synchronous_commit mode as on.

Thread-1 Threads-2

Head code FPW compress Head code FPW compress

Pgbench-org 5min 138(0.24GB) 131(0.04GB) 160(0.28GB) 163(0.05GB)

Pgbench-1000 5min 140(0.29GB) 128(0.03GB) 160(0.33GB) 162(0.02GB)

Pgbench-org 15min 141(0.59GB) 136(0.12GB) 160(0.65GB) 162(0.14GB)

Pgbench-1000 15min 138(0.81GB) 134(0.11GB) 159(0.92GB) 162(0.18GB)

Pgbench-org - original pgbench

Pgbench-1000 - changed pgbench with a record size of 1000.

5 min - pgbench test carried out for 5 min.

15 min - pgbench test carried out for 15 min.

From the above readings it is observed that,

1. There a performance dip in one thread test, the amount of dip reduces with the test run time.

2. More than 75% WAL reduction in all scenarios.

Please find the attached sheet for more details regarding machine and test configuration

Regards,

Hari babu.

| Attachment | Content-Type | Size |

|---|---|---|

| compress_fpw_sync_on.htm | text/html | 61.0 KB |

| From: | Dimitri Fontaine <dimitri(at)2ndQuadrant(dot)fr> |

|---|---|

| To: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-10 16:20:56 |

| Message-ID: | m2mwmh6qs7.fsf@2ndQuadrant.fr |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

I did a partial review of this patch, wherein I focused on the patch and

the code itself, as I saw other contributors already did some testing on

it, so that we know it applies cleanly and work to some good extend.

Fujii Masao <masao(dot)fujii(at)gmail(dot)com> writes:

> In this patch, full_page_writes accepts three values: on, compress, and off.

> When it's set to compress, the full page image is compressed before it's

> inserted into the WAL buffers.

Code review :

In full_page_writes_str() why are you returning "unrecognized" rather

than doing an ELOG(ERROR, …) for this unexpected situation?

The code switches to compression (or trying to) when the following

condition is met:

+ if (fpw <= FULL_PAGE_WRITES_COMPRESS)

+ {

+ rdt->data = CompressBackupBlock(page, BLCKSZ - bkpb->hole_length, &(rdt->len));

We have

+ typedef enum FullPageWritesLevel

+ {

+ FULL_PAGE_WRITES_OFF = 0,

+ FULL_PAGE_WRITES_COMPRESS,

+ FULL_PAGE_WRITES_ON

+ } FullPageWritesLevel;

+ #define FullPageWritesIsNeeded(fpw) (fpw >= FULL_PAGE_WRITES_COMPRESS)

I don't much like using the <= test against and ENUM and I'm not sure I

understand the intention you have here. It somehow looks like a typo and

disagrees with the macro. What about using the FullPageWritesIsNeeded

macro, and maybe rewriting the macro as

#define FullPageWritesIsNeeded(fpw) \

(fpw == FULL_PAGE_WRITES_COMPRESS || fpw == FULL_PAGE_WRITES_ON)

Also, having "on" imply "compress" is a little funny to me. Maybe we

should just finish our testing and be happy to always compress the full

page writes. What would the downside be exactly (on buzy IO system

writing less data even if needing more CPU will be the right trade-off).

I like that you're checking the savings of the compressed data with

respect to the uncompressed data and cancel the compression if there's

no gain. I wonder if your test accounts for enough padding and headers

though given the results we saw in other tests made in this thread.

Why do we have both the static function full_page_writes_str() and the

macro FullPageWritesStr, with two different implementations issuing

either "true" and "false" or "on" and "off"?

! unsigned hole_offset:15, /* number of bytes before "hole" */

! flags:2, /* state of a backup block, see below */

! hole_length:15; /* number of bytes in "hole" */

I don't understand that. I wanted to use that patch as a leverage to

smoothly discover the internals of our WAL system but won't have the

time to do that here. That said, I don't even know that C syntax.

+ #define BKPBLOCK_UNCOMPRESSED 0 /* uncompressed */

+ #define BKPBLOCK_COMPRESSED 1 /* comperssed */

There's a typo in the comment above.

> [time required to replay WAL generated during running pgbench]

> 61s (on) .... 1209911 transactions were replayed,

> recovery speed: 19834.6 transactions/sec

> 39s (compress) .... 1445446 transactions were replayed,

> recovery speed: 37062.7 transactions/sec

> 37s (off) .... 1629235 transactions were replayed,

> recovery speed: 44033.3 transactions/sec

How did you get those numbers ? pg_basebackup before the test and

archiving, then a PITR maybe? Is it possible to do the same test with

the same number of transactions to replay, I guess using the -t

parameter rather than the -T one for this testing.

Regards,

--

Dimitri Fontaine

http://2ndQuadrant.fr PostgreSQL : Expertise, Formation et Support

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-10 17:32:33 |

| Message-ID: | CAHGQGwH-sSwg42xgsksM66YjUrpCVtGa=jkhyGaiZ=LKqBQriA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Oct 8, 2013 at 10:07 PM, KONDO Mitsumasa

<kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp> wrote:

> Hi,

>

> I tested dbt-2 benchmark in single instance and synchronous replication.

Thanks!

> Unfortunately, my benchmark results were not seen many differences...

>

>

> * Test server

> Server: HP Proliant DL360 G7

> CPU: Xeon E5640 2.66GHz (1P/4C)

> Memory: 18GB(PC3-10600R-9)

> Disk: 146GB(15k)*4 RAID1+0

> RAID controller: P410i/256MB

>

> * Result

> ** Single instance**

> | NOTPM | 90%tile | Average | S.Deviation

> ------------+-----------+-------------+---------+-------------

> no-patched | 3322.93 | 20.469071 | 5.882 | 10.478

> patched | 3315.42 | 19.086105 | 5.669 | 9.108

>

>

> ** Synchronous Replication **

> | NOTPM | 90%tile | Average | S.Deviation

> ------------+-----------+-------------+---------+-------------

> no-patched | 3275.55 | 21.332866 | 6.072 | 9.882

> patched | 3318.82 | 18.141807 | 5.757 | 9.829

>

> ** Detail of result

> http://pgstatsinfo.projects.pgfoundry.org/DBT-2_Fujii_patch/

>

>

> I set full_page_write = compress with Fujii's patch in DBT-2. But it does

> not

> seems to effect for eleminating WAL files.

Could you let me know how much WAL records were generated

during each benchmark?

I think that this benchmark result clearly means that the patch

has only limited effects in the reduction of WAL volume and

the performance improvement unless the database contains

highly-compressible data like pgbench_accounts.filler. But if

we can use other compression algorithm, maybe we can reduce

WAL volume very much. I'm not sure what algorithm is good

for WAL compression, though.

It might be better to introduce the hook for compression of FPW

so that users can freely use their compression module, rather

than just using pglz_compress(). Thought?

Regards,

--

Fujii Masao

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | Haribabu kommi <haribabu(dot)kommi(at)huawei(dot)com> |

| Cc: | KONDO Mitsumasa <kondo(dot)mitsumasa(at)lab(dot)ntt(dot)co(dot)jp>, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-10 17:36:09 |

| Message-ID: | CAHGQGwH2GjPaNLREEToj6Qvv4J2ByctGCKOWKQaf6E3MPm=MTw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Oct 9, 2013 at 1:35 PM, Haribabu kommi

<haribabu(dot)kommi(at)huawei(dot)com> wrote:

> On 08 October 2013 18:42 KONDO Mitsumasa wrote:

>>(2013/10/08 20:13), Haribabu kommi wrote:

>>> I will test with sync_commit=on mode and provide the test results.

>>OK. Thanks!

>

> Pgbench test results with synchronous_commit mode as on.

Thanks!

> Thread-1 Threads-2

> Head code FPW compress Head code FPW compress

> Pgbench-org 5min 138(0.24GB) 131(0.04GB) 160(0.28GB) 163(0.05GB)

> Pgbench-1000 5min 140(0.29GB) 128(0.03GB) 160(0.33GB) 162(0.02GB)

> Pgbench-org 15min 141(0.59GB) 136(0.12GB) 160(0.65GB) 162(0.14GB)

> Pgbench-1000 15min 138(0.81GB) 134(0.11GB) 159(0.92GB) 162(0.18GB)

>

> Pgbench-org - original pgbench

> Pgbench-1000 - changed pgbench with a record size of 1000.

This means that you changed the data type of pgbench_accounts.filler

to char(1000)?

Regards,

--

Fujii Masao

| From: | Fujii Masao <masao(dot)fujii(at)gmail(dot)com> |

|---|---|

| To: | Dimitri Fontaine <dimitri(at)2ndquadrant(dot)fr> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Compression of full-page-writes |

| Date: | 2013-10-10 18:44:01 |

| Message-ID: | CAHGQGwG=aSraHUSc5dR7jywWreNoUYsgg4MD-CPDxxZ-XShF2w@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Oct 11, 2013 at 1:20 AM, Dimitri Fontaine

<dimitri(at)2ndquadrant(dot)fr> wrote:

> Hi,

>

> I did a partial review of this patch, wherein I focused on the patch and

> the code itself, as I saw other contributors already did some testing on

> it, so that we know it applies cleanly and work to some good extend.