Re: GIN improvements part2: fast scan

| Lists: | pgsql-hackers |

|---|

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | GIN improvements part2: fast scan |

| Date: | 2013-06-14 22:55:17 |

| Message-ID: | CAPpHfdvftaJq7www381naLw1=4u0h+qpXgWvNhcEB9HMVywbGg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hackes,

attached patch implementing "fast scan" technique for GIN. This is second

patch of GIN improvements, see the 1st one here:

http://www.postgresql.org/message-id/CAPpHfduxv-iL7aedwPW0W5fXrWGAKfxijWM63_hZujaCRxnmFQ@mail.gmail.com

This patch allow to skip parts of posting trees when their scan is not

necessary. In particular, it solves "frequent_term & rare_term" problem of

FTS.

It introduces new interface method pre_consistent which behaves like

consistent, but:

1) allows false positives on input (check[])

2) allowed to return false positives

Some example: "frequent_term & rare_term" becomes pretty fast.

create table test as (select to_tsvector('english', 'bbb') as v from

generate_series(1,1000000));

insert into test (select to_tsvector('english', 'ddd') from

generate_series(1,10));

create index test_idx on test using gin (v);

postgres=# explain analyze select * from test where v @@

to_tsquery('english', 'bbb & ddd');

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------

Bitmap Heap Scan on test (cost=942.75..7280.63 rows=5000 width=17)

(actual time=0.458..0.461 rows=10 loops=1)

Recheck Cond: (v @@ '''bbb'' & ''ddd'''::tsquery)

-> Bitmap Index Scan on test_idx (cost=0.00..941.50 rows=5000 width=0)

(actual time=0.449..0.449 rows=10 loops=1)

Index Cond: (v @@ '''bbb'' & ''ddd'''::tsquery)

Total runtime: 0.516 ms

(5 rows)

------

With best regards,

Alexander Korotkov.

| Attachment | Content-Type | Size |

|---|---|---|

| gin_fast_scan.1.patch.gz | application/x-gzip | 6.2 KB |

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-17 12:55:22 |

| Message-ID: | CAPpHfdsqDCa94Gy9QHHgbA3PmTNr9NCnY_WFYks0MNXjwFq1OQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sat, Jun 15, 2013 at 2:55 AM, Alexander Korotkov <aekorotkov(at)gmail(dot)com>wrote:

> attached patch implementing "fast scan" technique for GIN. This is second

> patch of GIN improvements, see the 1st one here:

>

> http://www.postgresql.org/message-id/CAPpHfduxv-iL7aedwPW0W5fXrWGAKfxijWM63_hZujaCRxnmFQ@mail.gmail.com

> This patch allow to skip parts of posting trees when their scan is not

> necessary. In particular, it solves "frequent_term & rare_term" problem of

> FTS.

> It introduces new interface method pre_consistent which behaves like

> consistent, but:

> 1) allows false positives on input (check[])

> 2) allowed to return false positives

>

> Some example: "frequent_term & rare_term" becomes pretty fast.

>

> create table test as (select to_tsvector('english', 'bbb') as v from

> generate_series(1,1000000));

> insert into test (select to_tsvector('english', 'ddd') from

> generate_series(1,10));

> create index test_idx on test using gin (v);

>

> postgres=# explain analyze select * from test where v @@

> to_tsquery('english', 'bbb & ddd');

> QUERY PLAN

>

> -----------------------------------------------------------------------------------------------------------------------

> Bitmap Heap Scan on test (cost=942.75..7280.63 rows=5000 width=17)

> (actual time=0.458..0.461 rows=10 loops=1)

> Recheck Cond: (v @@ '''bbb'' & ''ddd'''::tsquery)

> -> Bitmap Index Scan on test_idx (cost=0.00..941.50 rows=5000

> width=0) (actual time=0.449..0.449 rows=10 loops=1)

> Index Cond: (v @@ '''bbb'' & ''ddd'''::tsquery)

> Total runtime: 0.516 ms

> (5 rows)

>

Attached version of patch has some refactoring and bug fixes.

------

With best regards,

Alexander Korotkov.

| Attachment | Content-Type | Size |

|---|---|---|

| gin_fast_scan.2.patch.gz | application/x-gzip | 6.8 KB |

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-17 13:09:36 |

| Message-ID: | 51BF0A90.2000503@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 17.06.2013 15:55, Alexander Korotkov wrote:

> On Sat, Jun 15, 2013 at 2:55 AM, Alexander Korotkov<aekorotkov(at)gmail(dot)com>wrote:

>

>> attached patch implementing "fast scan" technique for GIN. This is second

>> patch of GIN improvements, see the 1st one here:

>>

>> http://www.postgresql.org/message-id/CAPpHfduxv-iL7aedwPW0W5fXrWGAKfxijWM63_hZujaCRxnmFQ@mail.gmail.com

>> This patch allow to skip parts of posting trees when their scan is not

>> necessary. In particular, it solves "frequent_term& rare_term" problem of

>> FTS.

>> It introduces new interface method pre_consistent which behaves like

>> consistent, but:

>> 1) allows false positives on input (check[])

>> 2) allowed to return false positives

>>

>> Some example: "frequent_term& rare_term" becomes pretty fast.

>>

>> create table test as (select to_tsvector('english', 'bbb') as v from

>> generate_series(1,1000000));

>> insert into test (select to_tsvector('english', 'ddd') from

>> generate_series(1,10));

>> create index test_idx on test using gin (v);

>>

>> postgres=# explain analyze select * from test where v @@

>> to_tsquery('english', 'bbb& ddd');

>> QUERY PLAN

>>

>> -----------------------------------------------------------------------------------------------------------------------

>> Bitmap Heap Scan on test (cost=942.75..7280.63 rows=5000 width=17)

>> (actual time=0.458..0.461 rows=10 loops=1)

>> Recheck Cond: (v @@ '''bbb''& ''ddd'''::tsquery)

>> -> Bitmap Index Scan on test_idx (cost=0.00..941.50 rows=5000

>> width=0) (actual time=0.449..0.449 rows=10 loops=1)

>> Index Cond: (v @@ '''bbb''& ''ddd'''::tsquery)

>> Total runtime: 0.516 ms

>> (5 rows)

>>

>

> Attached version of patch has some refactoring and bug fixes.

Good timing, I just started looking at this.

I think you'll need to explain how this works. There are no docs, and

almost no comments.

(and this shows how poorly I understand this, but) Why does this require

the "additional information" patch? What extra information do you store

on-disk, in the additional information?

The pre-consistent method is like the consistent method, but it allows

false positives. I think that's because during the scan, before having

scanned for all the keys, the gin AM doesn't yet know if the tuple

contains all of the keys. So it passes the keys it doesn't yet know

about as 'true' to pre-consistent. Could that be generalized, to pass a

tri-state instead of a boolean for each key to the pre-consistent

method? For each key, you would pass "true", "false", or "don't know". I

think you could then also speed up queries like "!english & bbb".

- Heikki

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-18 20:59:54 |

| Message-ID: | CAPpHfdsAz71ZK1mYvsOT6juCjo0vFp4r4b7nErzbEvSTo2eMoA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Jun 17, 2013 at 5:09 PM, Heikki Linnakangas <hlinnakangas(at)vmware(dot)com

> wrote:

> On 17.06.2013 15:55, Alexander Korotkov wrote:

>

>> On Sat, Jun 15, 2013 at 2:55 AM, Alexander Korotkov<aekorotkov(at)gmail(dot)com>

>> **wrote:

>>

>> attached patch implementing "fast scan" technique for GIN. This is second

>>> patch of GIN improvements, see the 1st one here:

>>>

>>> http://www.postgresql.org/**message-id/CAPpHfduxv-**

>>> iL7aedwPW0W5fXrWGAKfxijWM63_**hZujaCRxnmFQ(at)mail(dot)gmail(dot)com<http://www.postgresql.org/message-id/CAPpHfduxv-iL7aedwPW0W5fXrWGAKfxijWM63_hZujaCRxnmFQ@mail.gmail.com>

>>> This patch allow to skip parts of posting trees when their scan is not

>>> necessary. In particular, it solves "frequent_term& rare_term" problem

>>> of

>>>

>>> FTS.

>>> It introduces new interface method pre_consistent which behaves like

>>> consistent, but:

>>> 1) allows false positives on input (check[])

>>> 2) allowed to return false positives

>>>

>>> Some example: "frequent_term& rare_term" becomes pretty fast.

>>>

>>>

>>> create table test as (select to_tsvector('english', 'bbb') as v from

>>> generate_series(1,1000000));

>>> insert into test (select to_tsvector('english', 'ddd') from

>>> generate_series(1,10));

>>> create index test_idx on test using gin (v);

>>>

>>> postgres=# explain analyze select * from test where v @@

>>> to_tsquery('english', 'bbb& ddd');

>>>

>>> QUERY PLAN

>>>

>>> ------------------------------**------------------------------**

>>> ------------------------------**-----------------------------

>>> Bitmap Heap Scan on test (cost=942.75..7280.63 rows=5000 width=17)

>>> (actual time=0.458..0.461 rows=10 loops=1)

>>> Recheck Cond: (v @@ '''bbb''& ''ddd'''::tsquery)

>>> -> Bitmap Index Scan on test_idx (cost=0.00..941.50 rows=5000

>>> width=0) (actual time=0.449..0.449 rows=10 loops=1)

>>> Index Cond: (v @@ '''bbb''& ''ddd'''::tsquery)

>>> Total runtime: 0.516 ms

>>> (5 rows)

>>>

>>>

>> Attached version of patch has some refactoring and bug fixes.

>>

>

> Good timing, I just started looking at this.

>

> I think you'll need to explain how this works. There are no docs, and

> almost no comments.

>

Sorry for that. I'll post patch with docs and comments in couple days.

(and this shows how poorly I understand this, but) Why does this require

> the "additional information" patch?

In principle, it doesn't require "additional information" patch. Same

optimization could be done without "additional information". The reason why

it requires "additional information" patch is that otherwise I have to

implement and maintain 2 versions of "fast scan": with and without

"additional information".

> What extra information do you store on-disk, in the additional information?

>

It depends on opclass. In regex patch it is part of graph associated with

trigram. In fulltext search it is word positions. In array similarity

search it could be length of array for better estimation of similarity (not

implemented yet). So it's anything which is stored with each ItemPointer

and is useful for consistent or index driven sorting (see patch #3).

> The pre-consistent method is like the consistent method, but it allows

> false positives. I think that's because during the scan, before having

> scanned for all the keys, the gin AM doesn't yet know if the tuple contains

> all of the keys. So it passes the keys it doesn't yet know about as 'true'

> to pre-consistent.

Could that be generalized, to pass a tri-state instead of a boolean for

> each key to the pre-consistent method? For each key, you would pass "true",

> "false", or "don't know". I think you could then also speed up queries like

> "!english & bbb".

I would like to illustrate that on example. Imagine you have fulltext query

"rare_term & frequent_term". Frequent term has large posting tree while

rare term has only small posting list containing iptr1, iptr2 and iptr3. At

first we get iptr1 from posting list of rare term, then we would like to

check whether we have to scan part of frequent term posting tree where iptr

< iptr1. So we call pre_consistent([false, true]), because we know that

rare term is not present for iptr < iptr2. pre_consistent returns false. So

we can start scanning frequent term posting tree from iptr1. Similarly we

can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

maximum possible pointer.

And yes, it could be generalized to tri-state instead of a boolean. I had

that idea first. But I found superiority of exact "true" quite narrow.

Let's see it on the example. If you have "!term1 & term2" there are few

cases:

1) term1 is rare, term2 is frequent => you can exclude only some entries

from posting tree of term2, performance benefit will be negligible

2) term1 is frequent, term2 is rare => you should actually scan term2

posting list and then check term1 posting tree like in example about

"rare_term & frequent_term", so there is no need of tri-state to handle

this situation

3) term1 is frequent, term2 is frequent => this case probably could be

optimized by tri-state. But in order to actully skip some parts of term2

posting tree you need item pointers in term1 to go sequential. It seems for

me to be very narrow case.

4) term1 is rare, term2 is rare => don't need optimization

BTW, version of patch with some bug fixes is attached.

------

With best regards,

Alexander Korotkov.

| Attachment | Content-Type | Size |

|---|---|---|

| gin_fast_scan.3.patch.gz | application/x-gzip | 6.8 KB |

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-19 07:48:05 |

| Message-ID: | 51C16235.3080308@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 18.06.2013 23:59, Alexander Korotkov wrote:

> I would like to illustrate that on example. Imagine you have fulltext query

> "rare_term& frequent_term". Frequent term has large posting tree while

> rare term has only small posting list containing iptr1, iptr2 and iptr3. At

> first we get iptr1 from posting list of rare term, then we would like to

> check whether we have to scan part of frequent term posting tree where iptr

> < iptr1. So we call pre_consistent([false, true]), because we know that

> rare term is not present for iptr< iptr2. pre_consistent returns false. So

> we can start scanning frequent term posting tree from iptr1. Similarly we

> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

> maximum possible pointer.

Thanks, now I understand the rare-term & frequent-term problem. Couldn't

you do that with the existing consistent function? I don't see why you

need the new pre-consistent function for this.

- Heikki

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-19 08:30:56 |

| Message-ID: | CAPpHfdtMPreArtJpJNKuYCiURvt5=G+UJjx5buHVSnM01Cbi8A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 19, 2013 at 11:48 AM, Heikki Linnakangas <

hlinnakangas(at)vmware(dot)com> wrote:

> On 18.06.2013 23:59, Alexander Korotkov wrote:

>

>> I would like to illustrate that on example. Imagine you have fulltext

>> query

>> "rare_term& frequent_term". Frequent term has large posting tree while

>>

>> rare term has only small posting list containing iptr1, iptr2 and iptr3.

>> At

>> first we get iptr1 from posting list of rare term, then we would like to

>> check whether we have to scan part of frequent term posting tree where

>> iptr

>> < iptr1. So we call pre_consistent([false, true]), because we know that

>> rare term is not present for iptr< iptr2. pre_consistent returns false.

>> So

>> we can start scanning frequent term posting tree from iptr1. Similarly we

>> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

>> maximum possible pointer.

>>

>

> Thanks, now I understand the rare-term & frequent-term problem. Couldn't

> you do that with the existing consistent function? I don't see why you need

> the new pre-consistent function for this.

In the case of two entries I can. But in the case of n entries things

becomes more complicated. Imagine you have "term_1 & term_2 & ... & term_n"

query. When you get some item pointer from term_1 you can skip all the

lesser item pointers from term_2, term_3 ... term_n. But if all you have

for it is consistent function you have to call it with following check

arguments:

1) [false, false, false, ... , false]

2) [false, true, false, ... , false]

3) [false, false, true, ... , false]

4) [false, true, true, ..., false]

......

i.e. you have to call it 2^(n-1) times. But if you know the query specific

(i.e. in opclass) it's typically easy to calculate exactly what we need in

single pass. That's why I introduced pre_consistent.

------

With best regards,

Alexander Korotkov.

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-19 08:35:02 |

| Message-ID: | CAPpHfdtt=OAhg0+KtNX=thwpH+JeF=RhkD60u9pdjcrnPYDFTA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 19, 2013 at 12:30 PM, Alexander Korotkov

<aekorotkov(at)gmail(dot)com>wrote:

> On Wed, Jun 19, 2013 at 11:48 AM, Heikki Linnakangas <

> hlinnakangas(at)vmware(dot)com> wrote:

>

>> On 18.06.2013 23:59, Alexander Korotkov wrote:

>>

>>> I would like to illustrate that on example. Imagine you have fulltext

>>> query

>>> "rare_term& frequent_term". Frequent term has large posting tree while

>>>

>>> rare term has only small posting list containing iptr1, iptr2 and iptr3.

>>> At

>>> first we get iptr1 from posting list of rare term, then we would like to

>>> check whether we have to scan part of frequent term posting tree where

>>> iptr

>>> < iptr1. So we call pre_consistent([false, true]), because we know that

>>> rare term is not present for iptr< iptr2. pre_consistent returns false.

>>> So

>>> we can start scanning frequent term posting tree from iptr1. Similarly we

>>> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

>>> maximum possible pointer.

>>>

>>

>> Thanks, now I understand the rare-term & frequent-term problem. Couldn't

>> you do that with the existing consistent function? I don't see why you need

>> the new pre-consistent function for this.

>

>

> In the case of two entries I can. But in the case of n entries things

> becomes more complicated. Imagine you have "term_1 & term_2 & ... & term_n"

> query. When you get some item pointer from term_1 you can skip all the

> lesser item pointers from term_2, term_3 ... term_n. But if all you have

> for it is consistent function you have to call it with following check

> arguments:

> 1) [false, false, false, ... , false]

> 2) [false, true, false, ... , false]

> 3) [false, false, true, ... , false]

> 4) [false, true, true, ..., false]

> ......

> i.e. you have to call it 2^(n-1) times.

>

To be precise you don't need the first check argument I listed. So, it's

2^(n-1)-1 calls.

------

With best regards,

Alexander Korotkov.

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-19 08:49:44 |

| Message-ID: | 51C170A8.9060509@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 19.06.2013 11:30, Alexander Korotkov wrote:

> On Wed, Jun 19, 2013 at 11:48 AM, Heikki Linnakangas<

> hlinnakangas(at)vmware(dot)com> wrote:

>

>> On 18.06.2013 23:59, Alexander Korotkov wrote:

>>

>>> I would like to illustrate that on example. Imagine you have fulltext

>>> query

>>> "rare_term& frequent_term". Frequent term has large posting tree while

>>>

>>> rare term has only small posting list containing iptr1, iptr2 and iptr3.

>>> At

>>> first we get iptr1 from posting list of rare term, then we would like to

>>> check whether we have to scan part of frequent term posting tree where

>>> iptr

>>> < iptr1. So we call pre_consistent([false, true]), because we know that

>>> rare term is not present for iptr< iptr2. pre_consistent returns false.

>>> So

>>> we can start scanning frequent term posting tree from iptr1. Similarly we

>>> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

>>> maximum possible pointer.

>>>

>>

>> Thanks, now I understand the rare-term& frequent-term problem. Couldn't

>> you do that with the existing consistent function? I don't see why you need

>> the new pre-consistent function for this.

>

> In the case of two entries I can. But in the case of n entries things

> becomes more complicated. Imagine you have "term_1& term_2& ...& term_n"

> query. When you get some item pointer from term_1 you can skip all the

> lesser item pointers from term_2, term_3 ... term_n. But if all you have

> for it is consistent function you have to call it with following check

> arguments:

> 1) [false, false, false, ... , false]

> 2) [false, true, false, ... , false]

> 3) [false, false, true, ... , false]

> 4) [false, true, true, ..., false]

> ......

> i.e. you have to call it 2^(n-1) times. But if you know the query specific

> (i.e. in opclass) it's typically easy to calculate exactly what we need in

> single pass. That's why I introduced pre_consistent.

Hmm. So how does that work with the pre-consistent function? Don't you

need to call that 2^(n-1)-1 times as well?

- Heikki

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-19 08:56:37 |

| Message-ID: | CAPpHfdvOcjQZwBeT8on1yztt9e4VN_7orDXVbtE26rctS-nP_g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 19, 2013 at 12:49 PM, Heikki Linnakangas <

hlinnakangas(at)vmware(dot)com> wrote:

> On 19.06.2013 11:30, Alexander Korotkov wrote:

>

>> On Wed, Jun 19, 2013 at 11:48 AM, Heikki Linnakangas<

>> hlinnakangas(at)vmware(dot)com> wrote:

>>

>> On 18.06.2013 23:59, Alexander Korotkov wrote:

>>>

>>> I would like to illustrate that on example. Imagine you have fulltext

>>>> query

>>>> "rare_term& frequent_term". Frequent term has large posting tree while

>>>>

>>>> rare term has only small posting list containing iptr1, iptr2 and iptr3.

>>>> At

>>>> first we get iptr1 from posting list of rare term, then we would like to

>>>> check whether we have to scan part of frequent term posting tree where

>>>> iptr

>>>> < iptr1. So we call pre_consistent([false, true]), because we know

>>>> that

>>>> rare term is not present for iptr< iptr2. pre_consistent returns

>>>> false.

>>>> So

>>>> we can start scanning frequent term posting tree from iptr1. Similarly

>>>> we

>>>> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

>>>> maximum possible pointer.

>>>>

>>>>

>>> Thanks, now I understand the rare-term& frequent-term problem. Couldn't

>>>

>>> you do that with the existing consistent function? I don't see why you

>>> need

>>> the new pre-consistent function for this.

>>>

>>

>> In the case of two entries I can. But in the case of n entries things

>> becomes more complicated. Imagine you have "term_1& term_2& ...&

>> term_n"

>>

>> query. When you get some item pointer from term_1 you can skip all the

>> lesser item pointers from term_2, term_3 ... term_n. But if all you have

>> for it is consistent function you have to call it with following check

>> arguments:

>> 1) [false, false, false, ... , false]

>> 2) [false, true, false, ... , false]

>> 3) [false, false, true, ... , false]

>> 4) [false, true, true, ..., false]

>> ......

>> i.e. you have to call it 2^(n-1) times. But if you know the query specific

>> (i.e. in opclass) it's typically easy to calculate exactly what we need in

>> single pass. That's why I introduced pre_consistent.

>>

>

> Hmm. So how does that work with the pre-consistent function? Don't you

> need to call that 2^(n-1)-1 times as well?

I call pre-consistent once with [false, true, true, ..., true].

Pre-consistent knows that each true passed to it could be false positive.

So, if it returns false it guarantees that consistent will be false for all

possible combinations.

------

With best regards,

Alexander Korotkov.

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-21 19:43:01 |

| Message-ID: | 51C4ACC5.2090707@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 19.06.2013 11:56, Alexander Korotkov wrote:

> On Wed, Jun 19, 2013 at 12:49 PM, Heikki Linnakangas<

> hlinnakangas(at)vmware(dot)com> wrote:

>

>> On 19.06.2013 11:30, Alexander Korotkov wrote:

>>

>>> On Wed, Jun 19, 2013 at 11:48 AM, Heikki Linnakangas<

>>> hlinnakangas(at)vmware(dot)com> wrote:

>>>

>>> On 18.06.2013 23:59, Alexander Korotkov wrote:

>>>>

>>>> I would like to illustrate that on example. Imagine you have fulltext

>>>>> query

>>>>> "rare_term& frequent_term". Frequent term has large posting tree while

>>>>>

>>>>> rare term has only small posting list containing iptr1, iptr2 and iptr3.

>>>>> At

>>>>> first we get iptr1 from posting list of rare term, then we would like to

>>>>> check whether we have to scan part of frequent term posting tree where

>>>>> iptr

>>>>> < iptr1. So we call pre_consistent([false, true]), because we know

>>>>> that

>>>>> rare term is not present for iptr< iptr2. pre_consistent returns

>>>>> false.

>>>>> So

>>>>> we can start scanning frequent term posting tree from iptr1. Similarly

>>>>> we

>>>>> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

>>>>> maximum possible pointer.

>>>>>

>>>>>

>>>> Thanks, now I understand the rare-term& frequent-term problem. Couldn't

>>>>

>>>> you do that with the existing consistent function? I don't see why you

>>>> need

>>>> the new pre-consistent function for this.

>>>>

>>>

>>> In the case of two entries I can. But in the case of n entries things

>>> becomes more complicated. Imagine you have "term_1& term_2& ...&

>>> term_n"

>>>

>>> query. When you get some item pointer from term_1 you can skip all the

>>> lesser item pointers from term_2, term_3 ... term_n. But if all you have

>>> for it is consistent function you have to call it with following check

>>> arguments:

>>> 1) [false, false, false, ... , false]

>>> 2) [false, true, false, ... , false]

>>> 3) [false, false, true, ... , false]

>>> 4) [false, true, true, ..., false]

>>> ......

>>> i.e. you have to call it 2^(n-1) times. But if you know the query specific

>>> (i.e. in opclass) it's typically easy to calculate exactly what we need in

>>> single pass. That's why I introduced pre_consistent.

>>>

>>

>> Hmm. So how does that work with the pre-consistent function? Don't you

>> need to call that 2^(n-1)-1 times as well?

>

>

> I call pre-consistent once with [false, true, true, ..., true].

> Pre-consistent knows that each true passed to it could be false positive.

> So, if it returns false it guarantees that consistent will be false for all

> possible combinations.

Ok, I see.

I spent some time pondering this. I'd like to find a way to do something

about this without requiring another user-defined function. A couple of

observations:

1. The profile of that rare-term & frequent-term quest, without any

patch, looks like this:

28,55% postgres ginCompareItemPointers

19,36% postgres keyGetItem

15,20% postgres scanGetItem

7,75% postgres checkcondition_gin

6,25% postgres gin_tsquery_consistent

4,34% postgres TS_execute

3,85% postgres callConsistentFn

3,64% postgres FunctionCall8Coll

3,19% postgres check_stack_depth

2,60% postgres entryGetNextItem

1,35% postgres entryGetItem

1,25% postgres MemoryContextReset

1,12% postgres MemoryContextSwitchTo

0,31% libc-2.17.so __memcpy_ssse3_back

0,24% postgres collectMatchesForHeapRow

I was quite surprised by seeing ginCompareItemPointers at the top. It

turns out that it's relatively expensive to do comparisons in the format

we keep item pointers, packed in 6 bytes, in 3 int16s. I hacked together

a patch to convert ItemPointers into uint64s, when dealing with them in

memory. That helped quite a bit.

Another important thing in the above profile is that calling

consistent-function is taking up a lot of resources. And in the example

test case you gave, it's called with the same arguments every time.

Caching the result of consistent-function would be a big win.

I wrote a quick patch to do that caching, and together with the

itempointer hack, I was able to halve the runtime of that test case.

That's impressive, we probably should pursue that low-hanging fruit, but

it's still slower than your "fast scan" patch by a factor of 100x. So

clearly we do need an algorithmic improvement here, along the lines of

your patch, or something similar.

2. There's one trick we could do even without the pre-consistent

function, that would help the particular test case you gave. Suppose

that you have two search terms A and B. If you have just called

consistent on a row that matched term A, but not term B, you can skip

any subsequent rows in the scan that match A but not B. That means that

you can skip over to the next row that matches B. This is essentially

the same thing you do with the pre-consistent function, it's just a

degenerate case of it. That helps as long as the search contains only

one frequent term, but if it contains multiple, then you have to still

stop at every row that matches more than one of the frequent terms.

3. I'm still not totally convinced that we shouldn't just build the

"truth table" by calling the regular consistent function with all the

combinations of matching keys, as discussed above. I think that would

work pretty well in practice, for queries with up to 5-10 frequent

terms. Per the profiling, it probably would make sense to pre-compute

such a table anyway, to avoid calling consistent repeatedly.

4. If we do go with a new function, I'd like to just call it

"consistent" (or consistent2 or something, to keep it separate form the

old consistent function), and pass it a tri-state input for each search

term. It might not be any different for the full-text search

implementation, or any of the other ones for that matter, but I think it

would be a more understandable API.

- Heikki

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-24 22:20:59 |

| Message-ID: | CAPpHfduJ-X9WKR9h-vyTrNEVNR37ZDx05qOLkA3ys3j-14uKXA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Jun 21, 2013 at 11:43 PM, Heikki Linnakangas <

hlinnakangas(at)vmware(dot)com> wrote:

> On 19.06.2013 11:56, Alexander Korotkov wrote:

>

>> On Wed, Jun 19, 2013 at 12:49 PM, Heikki Linnakangas<

>> hlinnakangas(at)vmware(dot)com> wrote:

>>

>> On 19.06.2013 11:30, Alexander Korotkov wrote:

>>>

>>> On Wed, Jun 19, 2013 at 11:48 AM, Heikki Linnakangas<

>>>> hlinnakangas(at)vmware(dot)com> wrote:

>>>>

>>>> On 18.06.2013 23:59, Alexander Korotkov wrote:

>>>>

>>>>>

>>>>> I would like to illustrate that on example. Imagine you have fulltext

>>>>>

>>>>>> query

>>>>>> "rare_term& frequent_term". Frequent term has large posting tree

>>>>>> while

>>>>>>

>>>>>> rare term has only small posting list containing iptr1, iptr2 and

>>>>>> iptr3.

>>>>>> At

>>>>>> first we get iptr1 from posting list of rare term, then we would like

>>>>>> to

>>>>>> check whether we have to scan part of frequent term posting tree where

>>>>>> iptr

>>>>>> < iptr1. So we call pre_consistent([false, true]), because we know

>>>>>> that

>>>>>> rare term is not present for iptr< iptr2. pre_consistent returns

>>>>>> false.

>>>>>> So

>>>>>> we can start scanning frequent term posting tree from iptr1. Similarly

>>>>>> we

>>>>>> can skip lags between iptr1 and iptr2, iptr2 and iptr3, from iptr3 to

>>>>>> maximum possible pointer.

>>>>>>

>>>>>>

>>>>>> Thanks, now I understand the rare-term& frequent-term problem.

>>>>> Couldn't

>>>>>

>>>>> you do that with the existing consistent function? I don't see why you

>>>>> need

>>>>> the new pre-consistent function for this.

>>>>>

>>>>>

>>>> In the case of two entries I can. But in the case of n entries things

>>>> becomes more complicated. Imagine you have "term_1& term_2& ...&

>>>> term_n"

>>>>

>>>> query. When you get some item pointer from term_1 you can skip all the

>>>> lesser item pointers from term_2, term_3 ... term_n. But if all you have

>>>> for it is consistent function you have to call it with following check

>>>> arguments:

>>>> 1) [false, false, false, ... , false]

>>>> 2) [false, true, false, ... , false]

>>>> 3) [false, false, true, ... , false]

>>>> 4) [false, true, true, ..., false]

>>>> ......

>>>> i.e. you have to call it 2^(n-1) times. But if you know the query

>>>> specific

>>>> (i.e. in opclass) it's typically easy to calculate exactly what we need

>>>> in

>>>> single pass. That's why I introduced pre_consistent.

>>>>

>>>>

>>> Hmm. So how does that work with the pre-consistent function? Don't you

>>> need to call that 2^(n-1)-1 times as well?

>>>

>>

>>

>> I call pre-consistent once with [false, true, true, ..., true].

>> Pre-consistent knows that each true passed to it could be false positive.

>> So, if it returns false it guarantees that consistent will be false for

>> all

>> possible combinations.

>>

>

> Ok, I see.

>

> I spent some time pondering this. I'd like to find a way to do something

> about this without requiring another user-defined function. A couple of

> observations:

>

> 1. The profile of that rare-term & frequent-term quest, without any patch,

> looks like this:

>

> 28,55% postgres ginCompareItemPointers

> 19,36% postgres keyGetItem

> 15,20% postgres scanGetItem

> 7,75% postgres checkcondition_gin

> 6,25% postgres gin_tsquery_consistent

> 4,34% postgres TS_execute

> 3,85% postgres callConsistentFn

> 3,64% postgres FunctionCall8Coll

> 3,19% postgres check_stack_depth

> 2,60% postgres entryGetNextItem

> 1,35% postgres entryGetItem

> 1,25% postgres MemoryContextReset

> 1,12% postgres MemoryContextSwitchTo

> 0,31% libc-2.17.so __memcpy_ssse3_back

> 0,24% postgres collectMatchesForHeapRow

>

> I was quite surprised by seeing ginCompareItemPointers at the top. It

> turns out that it's relatively expensive to do comparisons in the format we

> keep item pointers, packed in 6 bytes, in 3 int16s. I hacked together a

> patch to convert ItemPointers into uint64s, when dealing with them in

> memory. That helped quite a bit.

>

> Another important thing in the above profile is that calling

> consistent-function is taking up a lot of resources. And in the example

> test case you gave, it's called with the same arguments every time. Caching

> the result of consistent-function would be a big win.

>

> I wrote a quick patch to do that caching, and together with the

> itempointer hack, I was able to halve the runtime of that test case. That's

> impressive, we probably should pursue that low-hanging fruit, but it's

> still slower than your "fast scan" patch by a factor of 100x. So clearly we

> do need an algorithmic improvement here, along the lines of your patch, or

> something similar.

>

For sure, many advantages can be achieved without "fast scan". For example,

sphinx is known to be fast, but it straightforwardly scan each posting list

just like GIN now.

> 2. There's one trick we could do even without the pre-consistent function,

> that would help the particular test case you gave. Suppose that you have

> two search terms A and B. If you have just called consistent on a row that

> matched term A, but not term B, you can skip any subsequent rows in the

> scan that match A but not B. That means that you can skip over to the next

> row that matches B. This is essentially the same thing you do with the

> pre-consistent function, it's just a degenerate case of it. That helps as

> long as the search contains only one frequent term, but if it contains

> multiple, then you have to still stop at every row that matches more than

> one of the frequent terms.

>

Yes, two terms case is confluent and there is no direct need of

preConsistent.

> 3. I'm still not totally convinced that we shouldn't just build the "truth

> table" by calling the regular consistent function with all the combinations

> of matching keys, as discussed above. I think that would work pretty well

> in practice, for queries with up to 5-10 frequent terms. Per the profiling,

> it probably would make sense to pre-compute such a table anyway, to avoid

> calling consistent repeatedly.

>

Why do you mention 5-10 _frequent_ items? If we have 5-10 frequent items

and 20 rare items we would have to create "truth table" of frequent items

for each new combination of rare items. For 20 rare items truth

combinations can be unique for each item pointer, in that case you would

have to calculate small "truth table" of frequent items for each item

pointers. And then it can appear to be not so small. I mean it seems to me

that we should take into account both "frequent" and "rare" items when

talking about "truth table".

> 4. If we do go with a new function, I'd like to just call it "consistent"

> (or consistent2 or something, to keep it separate form the old consistent

> function), and pass it a tri-state input for each search term. It might not

> be any different for the full-text search implementation, or any of the

> other ones for that matter, but I think it would be a more understandable

> API.

Understandable API makes sense. But for now, I can't see even potentional

usage of third state (exact false). Also, with preConsistent interface "as

is" in some cases we can use old consistent method as both consistent and

preConsistent when it implements monotonous boolean function. For example,

it's consistent function for opclasses of arrays.

Revised version of patch is attaches with more comments and docs.

------

With best regards,

Alexander Korotkov.

| Attachment | Content-Type | Size |

|---|---|---|

| gin_fast_scan.4.patch.gz | application/x-gzip | 8.2 KB |

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-28 19:31:03 |

| Message-ID: | CAPpHfduRrMOvXNrmJ0+ALk9dDR3Z34HpNdQPkQZtF_oYX58h9A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jun 25, 2013 at 2:20 AM, Alexander Korotkov <aekorotkov(at)gmail(dot)com>wrote:

> 4. If we do go with a new function, I'd like to just call it "consistent"

>> (or consistent2 or something, to keep it separate form the old consistent

>> function), and pass it a tri-state input for each search term. It might not

>> be any different for the full-text search implementation, or any of the

>> other ones for that matter, but I think it would be a more understandable

>> API.

>

>

> Understandable API makes sense. But for now, I can't see even potentional

> usage of third state (exact false).

>

Typo here. I meant "exact true".

> Also, with preConsistent interface "as is" in some cases we can use old

> consistent method as both consistent and preConsistent when it implements

> monotonous boolean function. For example, it's consistent function for

> opclasses of arrays.

>

Now, I got the point of three state consistent: we can keep only one

consistent in opclasses that support new interface. exact true and exact

false values will be passed in the case of current patch consistent; exact

false and unknown will be passed in the case of current patch

preConsistent. That's reasonable.

------

With best regards,

Alexander Korotkov.

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-06-30 11:00:06 |

| Message-ID: | 51D00FB6.9030008@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 28.06.2013 22:31, Alexander Korotkov wrote:

> Now, I got the point of three state consistent: we can keep only one

> consistent in opclasses that support new interface. exact true and exact

> false values will be passed in the case of current patch consistent; exact

> false and unknown will be passed in the case of current patch

> preConsistent. That's reasonable.

I'm going to mark this as "returned with feedback". For the next

version, I'd like to see the API changed per above. Also, I'd like us to

do something about the tidbitmap overhead, as a separate patch before

this, so that we can assess the actual benefit of this patch. And a new

test case that demonstrates the I/O benefits.

- Heikki

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-07-06 16:48:00 |

| Message-ID: | 51D84A40.3070004@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

this is a follow-up to the message I posted to the thread about

additional info in GIN.

I've applied both ginaddinfo.7.patch and gin_fast_scan.4.patch on commit

b8fd1a09, but I'm observing a lot of failures like this:

STATEMENT: SELECT id FROM messages WHERE body_tsvector @@

plainto_tsquery('english', 'email free') LIMIT 100

ERROR: buffer 238068 is not owned by resource owner Portal

There's a GIN index on messages(body_tsvector). I haven't dug into why

it fails like this.

Tomas

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-14 17:26:50 |

| Message-ID: | CAPpHfdtsDe-O6oQdCZUh6N1gGOMz0XKTMNQro-fueg-R06PaWw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sun, Jun 30, 2013 at 3:00 PM, Heikki Linnakangas <hlinnakangas(at)vmware(dot)com

> wrote:

> On 28.06.2013 22:31, Alexander Korotkov wrote:

>

>> Now, I got the point of three state consistent: we can keep only one

>> consistent in opclasses that support new interface. exact true and exact

>> false values will be passed in the case of current patch consistent; exact

>> false and unknown will be passed in the case of current patch

>> preConsistent. That's reasonable.

>>

>

> I'm going to mark this as "returned with feedback". For the next version,

> I'd like to see the API changed per above. Also, I'd like us to do

> something about the tidbitmap overhead, as a separate patch before this, so

> that we can assess the actual benefit of this patch. And a new test case

> that demonstrates the I/O benefits.

Revised version of patch is attached.

Changes are so:

1) Patch rebased against packed posting lists, not depends on additional

information now.

2) New API with tri-state logic is introduced.

------

With best regards,

Alexander Korotkov.

| Attachment | Content-Type | Size |

|---|---|---|

| gin-fast-scan.6.patch.gz | application/x-gzip | 8.2 KB |

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-14 20:34:33 |

| Message-ID: | 528533D9.3040501@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 14.11.2013 19:26, Alexander Korotkov wrote:

> On Sun, Jun 30, 2013 at 3:00 PM, Heikki Linnakangas <hlinnakangas(at)vmware(dot)com

>> wrote:

>

>> On 28.06.2013 22:31, Alexander Korotkov wrote:

>>

>>> Now, I got the point of three state consistent: we can keep only one

>>> consistent in opclasses that support new interface. exact true and exact

>>> false values will be passed in the case of current patch consistent; exact

>>> false and unknown will be passed in the case of current patch

>>> preConsistent. That's reasonable.

>>>

>>

>> I'm going to mark this as "returned with feedback". For the next version,

>> I'd like to see the API changed per above. Also, I'd like us to do

>> something about the tidbitmap overhead, as a separate patch before this, so

>> that we can assess the actual benefit of this patch. And a new test case

>> that demonstrates the I/O benefits.

>

>

> Revised version of patch is attached.

> Changes are so:

> 1) Patch rebased against packed posting lists, not depends on additional

> information now.

> 2) New API with tri-state logic is introduced.

Thanks! A couple of thoughts after a 5-minute glance:

* documentation

* How about defining the tri-state consistent function to also return a

tri-state? True would mean that the tuple definitely matches, false

means the tuple definitely does not match, and Unknown means it might

match. Or does return value true with recheck==true have the same

effect? If I understood the patch, right, returning Unknown or True

wouldn't actually make any difference, but it's conceivable that we

might come up with more optimizations in the future that could take

advantage of that. For example, for a query like "foo OR (bar AND baz)",

you could immediately return any tuples that match foo, and not bother

scanning for bar and baz at all.

- Heikki

| From: | Rod Taylor <rbt(at)simple-knowledge(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-14 23:25:37 |

| Message-ID: | CAKddOFBAp39whKbbYLvyK8sYOFXO_gkGGeFtD0rUVu=6pY18GQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I checked out master and put together a test case using a small percentage

of production data for a known problem we have with Pg 9.2 and text search

scans.

A small percentage in this case means 10 million records randomly selected;

has a few billion records.

Tests ran for master successfully and I recorded timings.

Applied the patch included here to master along with

gin-packed-postinglists-14.patch.

Run make clean; ./configure; make; make install.

make check (All 141 tests passed.)

initdb, import dump

The GIN index fails to build with a segfault.

DETAIL: Failed process was running: CREATE INDEX textsearch_gin_idx ON kp

USING gin (to_tsvector('simple'::regconfig, string)) WHERE (score1 IS NOT

NULL);

#0 XLogCheckBuffer (holdsExclusiveLock=1 '\001', lsn=lsn(at)entry=0x7fffcf341920,

bkpb=bkpb(at)entry=0x7fffcf341960, rdata=0x468f11 <ginFindLeafPage+529>,

rdata=0x468f11 <ginFindLeafPage+529>) at xlog.c:2339

#1 0x00000000004b9ddd in XLogInsert (rmid=rmid(at)entry=13 '\r',

info=info(at)entry=16 '\020', rdata=rdata(at)entry=0x7fffcf341bf0) at xlog.c:936

#2 0x0000000000468a9e in createPostingTree (index=0x7fa4e8d31030,

items=items(at)entry=0xfb55680, nitems=nitems(at)entry=762,

buildStats=buildStats(at)entry=0x7fffcf343dd0) at gindatapage.c:1324

#3 0x00000000004630c0 in buildFreshLeafTuple (buildStats=0x7fffcf343dd0,

nitem=762, items=0xfb55680, category=<optimized out>, key=34078256,

attnum=<optimized out>, ginstate=0x7fffcf341df0) at gininsert.c:281

#4 ginEntryInsert (ginstate=ginstate(at)entry=0x7fffcf341df0,

attnum=<optimized out>, key=34078256, category=<optimized out>,

items=0xfb55680, nitem=762,

buildStats=buildStats(at)entry=0x7fffcf343dd0) at gininsert.c:351

#5 0x00000000004635b0 in ginbuild (fcinfo=<optimized out>) at

gininsert.c:531

#6 0x0000000000718637 in OidFunctionCall3Coll

(functionId=functionId(at)entry=2738,

collation=collation(at)entry=0, arg1=arg1(at)entry=140346257507968,

arg2=arg2(at)entry=140346257510448, arg3=arg3(at)entry=32826432) at

fmgr.c:1649

#7 0x00000000004ce1da in index_build

(heapRelation=heapRelation(at)entry=0x7fa4e8d30680,

indexRelation=indexRelation(at)entry=0x7fa4e8d31030,

indexInfo=indexInfo(at)entry=0x1f4e440, isprimary=isprimary(at)entry=0

'\000', isreindex=isreindex(at)entry=0 '\000') at index.c:1963

#8 0x00000000004ceeaa in index_create

(heapRelation=heapRelation(at)entry=0x7fa4e8d30680,

indexRelationName=indexRelationName(at)entry=0x1f4e660

"textsearch_gin_knn_idx", indexRelationId=16395, indexRelationId(at)entry=0,

relFileNode=<optimized out>, indexInfo=indexInfo(at)entry=0x1f4e440,

indexColNames=indexColNames(at)entry=0x1f4f728,

accessMethodObjectId=accessMethodObjectId(at)entry=2742,

tableSpaceId=tableSpaceId(at)entry=0,

collationObjectId=collationObjectId(at)entry=0x1f4fcc8,

classObjectId=classObjectId(at)entry=0x1f4fce0,

coloptions=coloptions(at)entry=0x1f4fcf8,

reloptions=reloptions(at)entry=0, isprimary=0 '\000',

isconstraint=0 '\000', deferrable=0 '\000', initdeferred=0 '\000',

allow_system_table_mods=0 '\000', skip_build=0 '\000', concurrent=0 '\000',

is_internal=0 '\000') at index.c:1082

#9 0x0000000000546a78 in DefineIndex (stmt=<optimized out>,

indexRelationId=indexRelationId(at)entry=0, is_alter_table=is_alter_table(at)entry=0

'\000',

check_rights=check_rights(at)entry=1 '\001', skip_build=skip_build(at)entry=0

'\000', quiet=quiet(at)entry=0 '\000') at indexcmds.c:594

#10 0x000000000065147e in ProcessUtilitySlow

(parsetree=parsetree(at)entry=0x1f7fb68,

queryString=0x1f7eb10 "CREATE INDEX textsearch_gin_idx ON kp USING gin

(to_tsvector('simple'::regconfig, string)) WHERE (score1 IS NOT NULL);",

context=<optimized out>, params=params(at)entry=0x0,

completionTag=completionTag(at)entry=0x7fffcf344c10 "", dest=<optimized out>)

at utility.c:1163

#11 0x000000000065079e in standard_ProcessUtility (parsetree=0x1f7fb68,

queryString=<optimized out>, context=<optimized out>, params=0x0,

dest=<optimized out>, completionTag=0x7fffcf344c10 "") at utility.c:873

#12 0x000000000064de61 in PortalRunUtility (portal=portal(at)entry=0x1f4c350,

utilityStmt=utilityStmt(at)entry=0x1f7fb68, isTopLevel=isTopLevel(at)entry=1

'\001',

dest=dest(at)entry=0x1f7ff08, completionTag=completionTag(at)entry=0x7fffcf344c10

"") at pquery.c:1187

#13 0x000000000064e9e5 in PortalRunMulti (portal=portal(at)entry=0x1f4c350,

isTopLevel=isTopLevel(at)entry=1 '\001', dest=dest(at)entry=0x1f7ff08,

altdest=altdest(at)entry=0x1f7ff08,

completionTag=completionTag(at)entry=0x7fffcf344c10

"") at pquery.c:1318

#14 0x000000000064f459 in PortalRun (portal=portal(at)entry=0x1f4c350,

count=count(at)entry=9223372036854775807, isTopLevel=isTopLevel(at)entry=1

'\001',

dest=dest(at)entry=0x1f7ff08, altdest=altdest(at)entry=0x1f7ff08,

completionTag=completionTag(at)entry=0x7fffcf344c10 "") at pquery.c:816

#15 0x000000000064d2d5 in exec_simple_query (

query_string=0x1f7eb10 "CREATE INDEX textsearch_gin_idx ON kp USING gin

(to_tsvector('simple'::regconfig, string)) WHERE (score1 IS NOT NULL);") at

postgres.c:1048

#16 PostgresMain (argc=<optimized out>, argv=argv(at)entry=0x1f2ad40,

dbname=0x1f2abf8 "rbt", username=<optimized out>) at postgres.c:3992

#17 0x000000000045b1b4 in BackendRun (port=0x1f47280) at postmaster.c:4085

#18 BackendStartup (port=0x1f47280) at postmaster.c:3774

#19 ServerLoop () at postmaster.c:1585

#20 0x000000000060d031 in PostmasterMain (argc=argc(at)entry=3,

argv=argv(at)entry=0x1f28b20)

at postmaster.c:1240

#21 0x000000000045bb25 in main (argc=3, argv=0x1f28b20) at main.c:196

On Thu, Nov 14, 2013 at 12:26 PM, Alexander Korotkov

<aekorotkov(at)gmail(dot)com>wrote:

> On Sun, Jun 30, 2013 at 3:00 PM, Heikki Linnakangas <

> hlinnakangas(at)vmware(dot)com> wrote:

>

>> On 28.06.2013 22:31, Alexander Korotkov wrote:

>>

>>> Now, I got the point of three state consistent: we can keep only one

>>> consistent in opclasses that support new interface. exact true and exact

>>> false values will be passed in the case of current patch consistent;

>>> exact

>>> false and unknown will be passed in the case of current patch

>>> preConsistent. That's reasonable.

>>>

>>

>> I'm going to mark this as "returned with feedback". For the next version,

>> I'd like to see the API changed per above. Also, I'd like us to do

>> something about the tidbitmap overhead, as a separate patch before this, so

>> that we can assess the actual benefit of this patch. And a new test case

>> that demonstrates the I/O benefits.

>

>

> Revised version of patch is attached.

> Changes are so:

> 1) Patch rebased against packed posting lists, not depends on additional

> information now.

> 2) New API with tri-state logic is introduced.

>

> ------

> With best regards,

> Alexander Korotkov.

>

>

> --

> Sent via pgsql-hackers mailing list (pgsql-hackers(at)postgresql(dot)org)

> To make changes to your subscription:

> http://www.postgresql.org/mailpref/pgsql-hackers

>

>

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Rod Taylor <rbt(at)simple-knowledge(dot)com> |

| Cc: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-15 06:51:00 |

| Message-ID: | CAPpHfdt2uGgD1r+xnHO6SqN=ShgJeJqvWcjnVa2PU2ApP4H=hg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Nov 15, 2013 at 3:25 AM, Rod Taylor <rbt(at)simple-knowledge(dot)com>wrote:

> I checked out master and put together a test case using a small percentage

> of production data for a known problem we have with Pg 9.2 and text search

> scans.

>

> A small percentage in this case means 10 million records randomly

> selected; has a few billion records.

>

>

> Tests ran for master successfully and I recorded timings.

>

>

>

> Applied the patch included here to master along with

> gin-packed-postinglists-14.patch.

> Run make clean; ./configure; make; make install.

> make check (All 141 tests passed.)

>

> initdb, import dump

>

>

> The GIN index fails to build with a segfault.

>

Thanks for testing. See fixed version in thread about packed posting lists.

------

With best regards,

Alexander Korotkov.

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-15 07:19:09 |

| Message-ID: | CAPpHfduOvUFT+GgCrnBOzRR19eTb9mDsDJwU=S0h477fdgr=RA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Nov 15, 2013 at 12:34 AM, Heikki Linnakangas <

hlinnakangas(at)vmware(dot)com> wrote:

> On 14.11.2013 19:26, Alexander Korotkov wrote:

>

>> On Sun, Jun 30, 2013 at 3:00 PM, Heikki Linnakangas <

>> hlinnakangas(at)vmware(dot)com

>>

>>> wrote:

>>>

>>

>> On 28.06.2013 22:31, Alexander Korotkov wrote:

>>>

>>> Now, I got the point of three state consistent: we can keep only one

>>>> consistent in opclasses that support new interface. exact true and exact

>>>> false values will be passed in the case of current patch consistent;

>>>> exact

>>>> false and unknown will be passed in the case of current patch

>>>> preConsistent. That's reasonable.

>>>>

>>>>

>>> I'm going to mark this as "returned with feedback". For the next version,

>>> I'd like to see the API changed per above. Also, I'd like us to do

>>> something about the tidbitmap overhead, as a separate patch before this,

>>> so

>>> that we can assess the actual benefit of this patch. And a new test case

>>> that demonstrates the I/O benefits.

>>>

>>

>>

>> Revised version of patch is attached.

>> Changes are so:

>> 1) Patch rebased against packed posting lists, not depends on additional

>> information now.

>> 2) New API with tri-state logic is introduced.

>>

>

> Thanks! A couple of thoughts after a 5-minute glance:

>

> * documentation

>

Will provide documented version this week.

> * How about defining the tri-state consistent function to also return a

> tri-state? True would mean that the tuple definitely matches, false means

> the tuple definitely does not match, and Unknown means it might match. Or

> does return value true with recheck==true have the same effect? If I

> understood the patch, right, returning Unknown or True wouldn't actually

> make any difference, but it's conceivable that we might come up with more

> optimizations in the future that could take advantage of that. For example,

> for a query like "foo OR (bar AND baz)", you could immediately return any

> tuples that match foo, and not bother scanning for bar and baz at all.

The meaning of recheck flag when input contains unknown is undefined now. :)

For instance, we could define it in following ways:

1) Like returning Unknown meaning that consistent with true of false

instead of input Unknown could return either true or false.

2) Consistent with true of false instead of input Unknown could return

recheck. This meaning is probably logical, but I don't see any usage of it.

I'm not against idea of tri-state returning value for consisted, because

it's logical continuation of its tri-state input. However, I don't see

usage of distinguish True and Unknown in returning value for now :)

In example you give we can return foo immediately, but we have to create

full bitmap. So we anyway will have to scan (bar AND baz). We could skip

part of trees for bar and baz. But it's possible only when foo contains

large amount of sequential TIDS so we can be sure that we didn't miss any

TIDs. This seems to be very narrow use-case for me.

Another point is that one day we probably could immediately return tuples

in gingettuple. And with LIMIT clause and no sorting we can don't search

for other tuples. However, gingettuple was removed because of reasons of

concurrency. And my patches for index-based ordering didn't return it in

previous manner: it collects all the results and then returns them

one-by-one.

------

With best regards,

Alexander Korotkov.

| From: | Rod Taylor <pg(at)rbt(dot)ca> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-15 14:57:16 |

| Message-ID: | CAKddOFA6so_-eNYY1J0P2w6XogC6A_kNykRvS-1cLVBsXpFv+g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I tried again this morning using gin-packed-postinglists-16.patch and

gin-fast-scan.6.patch. No crashes.

It is about a 0.1% random sample of production data (10,000,000 records)

with the below structure. Pg was compiled with debug enabled in both cases.

Table "public.kp"

Column | Type | Modifiers

--------+---------+-----------

id | bigint | not null

string | text | not null

score1 | integer |

score2 | integer |

score3 | integer |

score4 | integer |

Indexes:

"kp_pkey" PRIMARY KEY, btree (id)

"kp_string_key" UNIQUE CONSTRAINT, btree (string)

"textsearch_gin_idx" gin (to_tsvector('simple'::regconfig, string))

WHERE score1 IS NOT NULL

This is a query tested. All data is in Pg buffer cache for these timings.

Words like "the" and "and" are very common (~9% of entries, each) and a

word like "hotel" is much less common (~0.2% of entries).

SELECT id,string

FROM kp

WHERE score1 IS NOT NULL

AND to_tsvector('simple', string) @@ to_tsquery('simple', ?)

-- ? is substituted with the query strings

ORDER BY score1 DESC, score2 ASC

LIMIT 1000;

Limit (cost=56.04..56.04 rows=1 width=37) (actual time=250.010..250.032

rows=142 loops=1)

-> Sort (cost=56.04..56.04 rows=1 width=37) (actual

time=250.008..250.017 rows=142 loops=1)

Sort Key: score1, score2

Sort Method: quicksort Memory: 36kB

-> Bitmap Heap Scan on kp (cost=52.01..56.03 rows=1 width=37)

(actual time=249.711..249.945 rows=142 loops=1)

Recheck Cond: ((to_tsvector('simple'::regconfig, string) @@

'''hotel'' & ''and'' & ''the'''::tsquery) AND (score1 IS NOT NULL))

-> Bitmap Index Scan on textsearch_gin_idx

(cost=0.00..52.01 rows=1 width=0) (actual time=249.681..249.681 rows=142

loops=1)

Index Cond: (to_tsvector('simple'::regconfig, string)

@@ '''hotel'' & ''and'' & ''the'''::tsquery)

Total runtime: 250.096 ms

Times are from \timing on.

MASTER

=======

the: 888.436 ms 926.609 ms 885.502 ms

and: 944.052 ms 937.732 ms 920.050 ms

hotel: 53.992 ms 57.039 ms 65.581 ms

and & the & hotel: 260.308 ms 248.275 ms 248.098 ms

These numbers roughly match what we get with Pg 9.2. The time savings

between 'the' and 'and & the & hotel' is mostly heap lookups for the score

and the final sort.

The size of the index on disk is about 2% smaller in the patched version.

PATCHED

=======

the: 1055.169 ms 1081.976 ms 1083.021 ms

and: 912.173 ms 949.364 ms 965.261 ms

hotel: 62.591 ms 64.341 ms 62.923 ms

and & the & hotel: 268.577 ms 259.293 ms 257.408 ms

hotel & and & the: 253.574 ms 258.071 ms 250.280 ms

I was hoping that the 'and & the & hotel' case would improve with this

patch to be closer to the 'hotel' search, as I thought that was the kind of

thing it targeted. Unfortunately, it did not. I actually applied the

patches, compiled, initdb/load data, and ran it again thinking I made a

mistake.

Reordering the terms 'hotel & and & the' doesn't change the result.

On Fri, Nov 15, 2013 at 1:51 AM, Alexander Korotkov <aekorotkov(at)gmail(dot)com>wrote:

> On Fri, Nov 15, 2013 at 3:25 AM, Rod Taylor <rbt(at)simple-knowledge(dot)com>wrote:

>

>> I checked out master and put together a test case using a small

>> percentage of production data for a known problem we have with Pg 9.2 and

>> text search scans.

>>

>> A small percentage in this case means 10 million records randomly

>> selected; has a few billion records.

>>

>>

>> Tests ran for master successfully and I recorded timings.

>>

>>

>>

>> Applied the patch included here to master along with

>> gin-packed-postinglists-14.patch.

>> Run make clean; ./configure; make; make install.

>> make check (All 141 tests passed.)

>>

>> initdb, import dump

>>

>>

>> The GIN index fails to build with a segfault.

>>

>

> Thanks for testing. See fixed version in thread about packed posting lists.

>

> ------

> With best regards,

> Alexander Korotkov.

>

| From: | Rod Taylor <rod(dot)taylor(at)gmail(dot)com> |

|---|---|

| To: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

| Cc: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-15 15:17:29 |

| Message-ID: | CAKddOFCqZw-t0e1aoz7i+de0itVU9x1=bNcn8kv=g_92TK1BWA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I tried again this morning using gin-packed-postinglists-16.patch and

gin-fast-scan.6.patch. No crashes during index building.

Pg was compiled with debug enabled in both cases. The data is a ~0.1%

random sample of production data (10,000,000 records for the test) with the

below structure.

Table "public.kp"

Column | Type | Modifiers

--------+---------+-----------

id | bigint | not null

string | text | not null

score1 | integer |

score2 | integer |

score3 | integer |

score4 | integer |

Indexes:

"kp_pkey" PRIMARY KEY, btree (id)

"kp_string_key" UNIQUE CONSTRAINT, btree (string)

"textsearch_gin_idx" gin (to_tsvector('simple'::regconfig, string))

WHERE score1 IS NOT NULL

All data is in Pg buffer cache for these timings. Words like "the" and

"and" are very common (~9% of entries, each) and a word like "hotel" is

much less common (~0.2% of entries). Below is the query tested:

SELECT id,string

FROM kp

WHERE score1 IS NOT NULL

AND to_tsvector('simple', string) @@ to_tsquery('simple', ?)

-- ? is substituted with the query strings

ORDER BY score1 DESC, score2 ASC

LIMIT 1000;

Limit (cost=56.04..56.04 rows=1 width=37) (actual time=250.010..250.032

rows=142 loops=1)

-> Sort (cost=56.04..56.04 rows=1 width=37) (actual

time=250.008..250.017 rows=142 loops=1)

Sort Key: score1, score2

Sort Method: quicksort Memory: 36kB

-> Bitmap Heap Scan on kp (cost=52.01..56.03 rows=1 width=37)

(actual time=249.711..249.945 rows=142 loops=1)

Recheck Cond: ((to_tsvector('simple'::regconfig, string) @@

'''hotel'' & ''and'' & ''the'''::tsquery) AND (score1 IS NOT NULL))

-> Bitmap Index Scan on textsearch_gin_idx

(cost=0.00..52.01 rows=1 width=0) (actual time=249.681..249.681 rows=142

loops=1)

Index Cond: (to_tsvector('simple'::regconfig, string)

@@ '''hotel'' & ''and'' & ''the'''::tsquery)

Total runtime: 250.096 ms

Times are from \timing on.

MASTER

=======

the: 888.436 ms 926.609 ms 885.502 ms

and: 944.052 ms 937.732 ms 920.050 ms

hotel: 53.992 ms 57.039 ms 65.581 ms

and & the & hotel: 260.308 ms 248.275 ms 248.098 ms

These numbers roughly match what we get with Pg 9.2. The time savings

between 'the' and 'and & the & hotel' is mostly heap lookups for the score

and the final sort.

The size of the index on disk is about 2% smaller in the patched version.

PATCHED

=======

the: 1055.169 ms 1081.976 ms 1083.021 ms

and: 912.173 ms 949.364 ms 965.261 ms

hotel: 62.591 ms 64.341 ms 62.923 ms

and & the & hotel: 268.577 ms 259.293 ms 257.408 ms

hotel & and & the: 253.574 ms 258.071 ms 250.280 ms

I was hoping that the 'and & the & hotel' case would improve with this

patch to be closer to the 'hotel' search, as I thought that was the kind of

thing it targeted. Unfortunately, it did not. I actually applied the

patches, compiled, initdb/load data, and ran it again thinking I made a

mistake.

Reordering the terms 'hotel & and & the' doesn't change the result.

On Fri, Nov 15, 2013 at 1:51 AM, Alexander Korotkov <aekorotkov(at)gmail(dot)com>wrote:

> On Fri, Nov 15, 2013 at 3:25 AM, Rod Taylor <rbt(at)simple-knowledge(dot)com>wrote:

>

>> I checked out master and put together a test case using a small

>> percentage of production data for a known problem we have with Pg 9.2 and

>> text search scans.

>>

>> A small percentage in this case means 10 million records randomly

>> selected; has a few billion records.

>>

>>

>> Tests ran for master successfully and I recorded timings.

>>

>>

>>

>> Applied the patch included here to master along with

>> gin-packed-postinglists-14.patch.

>> Run make clean; ./configure; make; make install.

>> make check (All 141 tests passed.)

>>

>> initdb, import dump

>>

>>

>> The GIN index fails to build with a segfault.

>>

>

> Thanks for testing. See fixed version in thread about packed posting lists.

>

> ------

> With best regards,

> Alexander Korotkov.

>

| From: | Alexander Korotkov <aekorotkov(at)gmail(dot)com> |

|---|---|

| To: | Rod Taylor <pg(at)rbt(dot)ca> |

| Cc: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: GIN improvements part2: fast scan |

| Date: | 2013-11-15 17:51:31 |

| Message-ID: | CAPpHfdt1wY=czFk0==0Q_ByCV6i71LWEkt0YCwH6JBzo=Q-yXw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Nov 15, 2013 at 6:57 PM, Rod Taylor <pg(at)rbt(dot)ca> wrote:

> I tried again this morning using gin-packed-postinglists-16.patch and

> gin-fast-scan.6.patch. No crashes.

>

> It is about a 0.1% random sample of production data (10,000,000 records)

> with the below structure. Pg was compiled with debug enabled in both cases.

>

> Table "public.kp"

> Column | Type | Modifiers

> --------+---------+-----------

> id | bigint | not null

> string | text | not null

> score1 | integer |

> score2 | integer |

> score3 | integer |

> score4 | integer |

> Indexes:

> "kp_pkey" PRIMARY KEY, btree (id)

> "kp_string_key" UNIQUE CONSTRAINT, btree (string)

> "textsearch_gin_idx" gin (to_tsvector('simple'::regconfig, string))

> WHERE score1 IS NOT NULL

>

>

>

> This is a query tested. All data is in Pg buffer cache for these timings.

> Words like "the" and "and" are very common (~9% of entries, each) and a

> word like "hotel" is much less common (~0.2% of entries).

>

> SELECT id,string

> FROM kp

> WHERE score1 IS NOT NULL

> AND to_tsvector('simple', string) @@ to_tsquery('simple', ?)

> -- ? is substituted with the query strings

> ORDER BY score1 DESC, score2 ASC

> LIMIT 1000;

>

> Limit (cost=56.04..56.04 rows=1 width=37) (actual time=250.010..250.032

> rows=142 loops=1)

> -> Sort (cost=56.04..56.04 rows=1 width=37) (actual

> time=250.008..250.017 rows=142 loops=1)

> Sort Key: score1, score2

> Sort Method: quicksort Memory: 36kB

> -> Bitmap Heap Scan on kp (cost=52.01..56.03 rows=1 width=37)

> (actual time=249.711..249.945 rows=142 loops=1)

> Recheck Cond: ((to_tsvector('simple'::regconfig, string) @@

> '''hotel'' & ''and'' & ''the'''::tsquery) AND (score1 IS NOT NULL))

> -> Bitmap Index Scan on textsearch_gin_idx

> (cost=0.00..52.01 rows=1 width=0) (actual time=249.681..249.681 rows=142

> loops=1)

> Index Cond: (to_tsvector('simple'::regconfig, string)

> @@ '''hotel'' & ''and'' & ''the'''::tsquery)

> Total runtime: 250.096 ms

>

>

>

> Times are from \timing on.

>

> MASTER

> =======

> the: 888.436 ms 926.609 ms 885.502 ms

> and: 944.052 ms 937.732 ms 920.050 ms

> hotel: 53.992 ms 57.039 ms 65.581 ms

> and & the & hotel: 260.308 ms 248.275 ms 248.098 ms

>

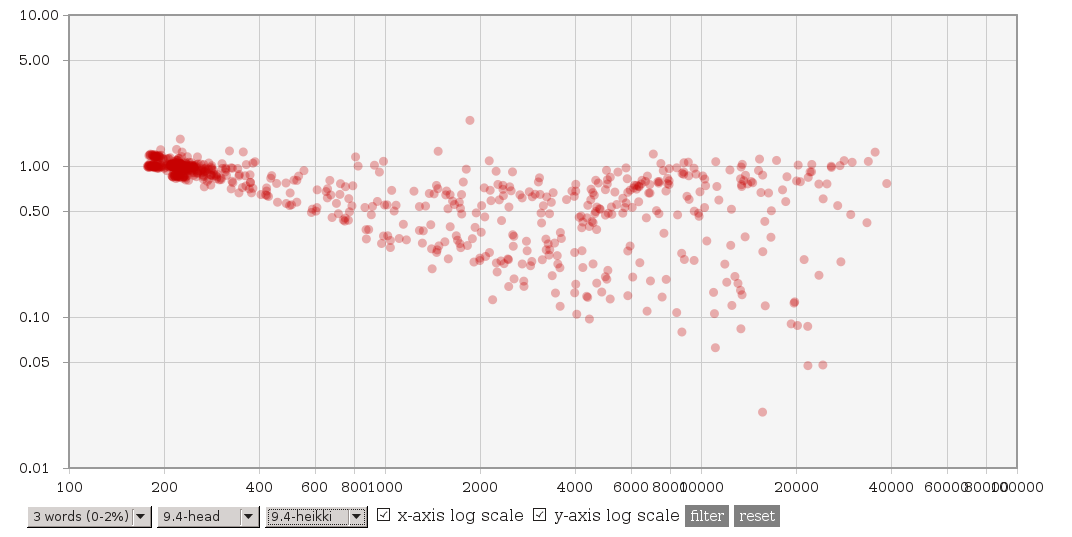

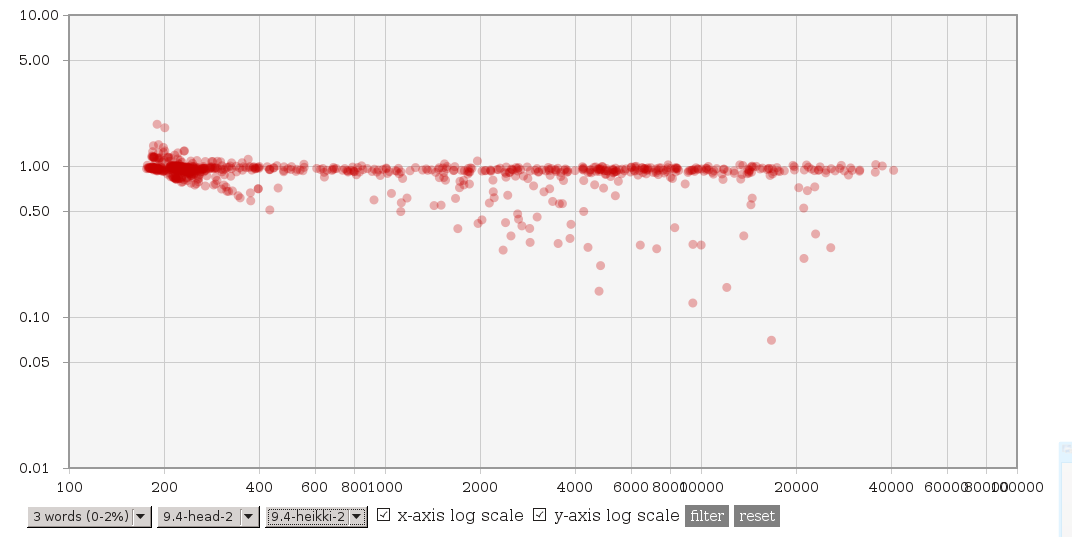

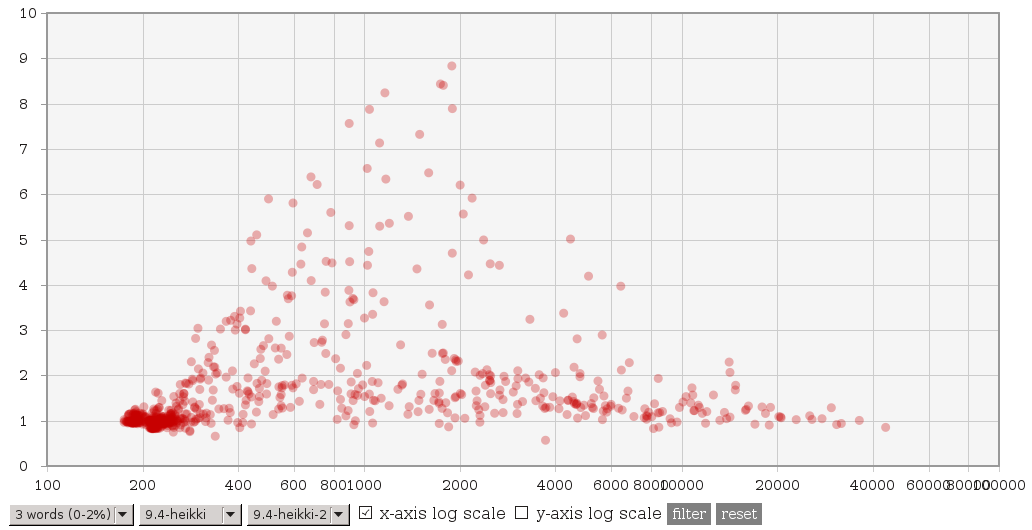

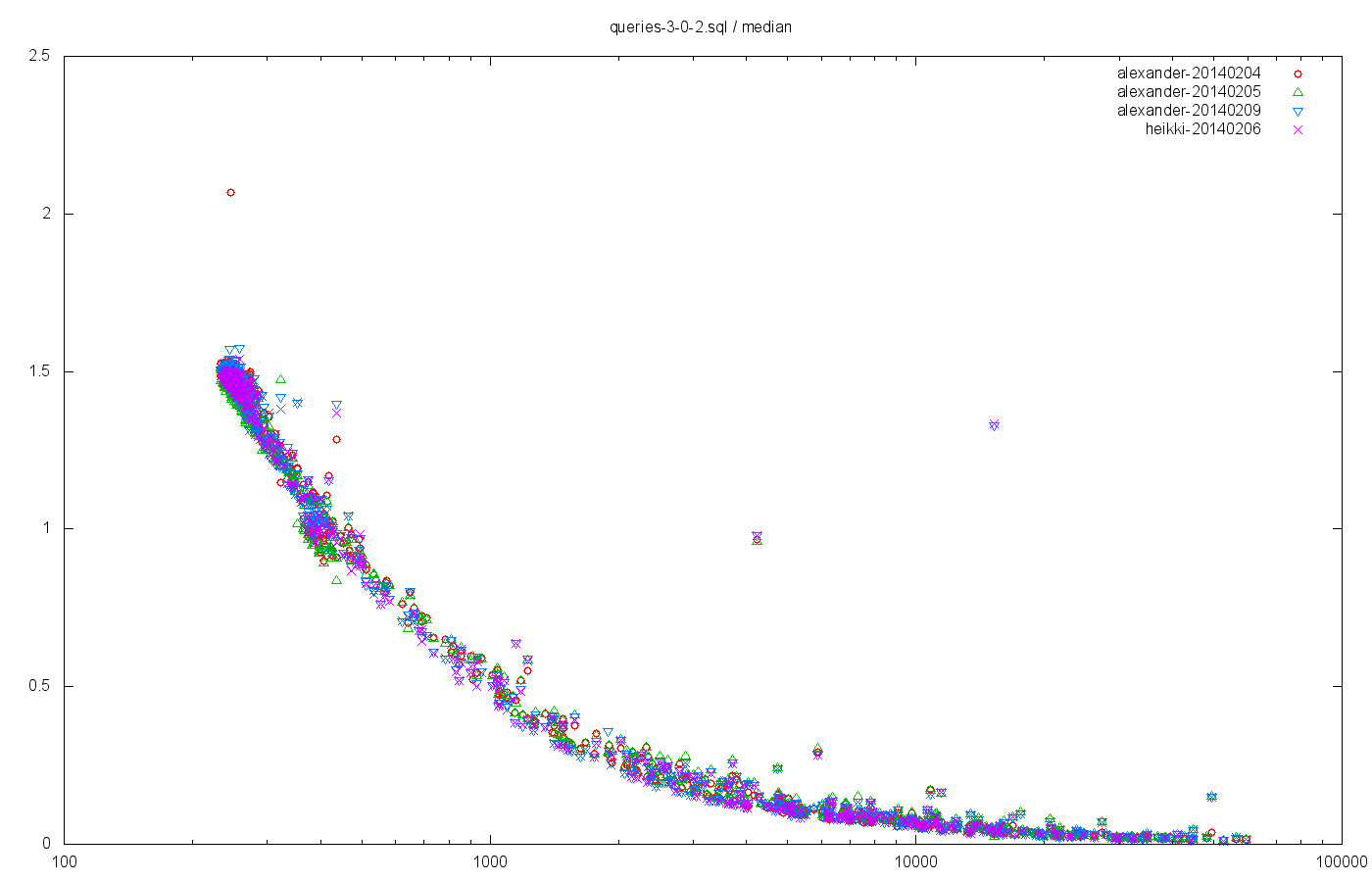

> These numbers roughly match what we get with Pg 9.2. The time savings