Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement)

| Lists: | pgsql-hackers |

|---|

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | [PATCH] pgbench --throttle (submission 4) |

| Date: | 2013-05-02 12:09:51 |

| Message-ID: | alpine.DEB.2.02.1305021406190.27669@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Minor changes wrt to the previous submission, so as to avoid running some

stuff twice under some conditions. This is for reference to the next

commit fest.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle.patch | text/x-diff | 6.6 KB |

| From: | Michael Paquier <michael(dot)paquier(at)gmail(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 4) |

| Date: | 2013-05-03 01:39:18 |

| Message-ID: | CAB7nPqRh=vXEFc77cjk41ouY06aNhVUPEFjsH3zDDw8UQaAxiA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

It would be better to submit updated versions of a patch on the email

thread it is dedicated to and not create a new thread so as people can

easily follow the progress you are doing.

Thanks,

--

Michael

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 5) |

| Date: | 2013-05-11 15:17:56 |

| Message-ID: | alpine.DEB.2.02.1305111713480.3419@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Simpler version of 'pgbench --throttle' by handling throttling at the

beginning of the transaction instead of doing it at the end.

This is for reference to the next commitfest.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle.patch | text/x-diff | 5.7 KB |

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 6) |

| Date: | 2013-05-12 06:54:54 |

| Message-ID: | alpine.DEB.2.02.1305120847540.3419@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

New submission which put option help in alphabetical position, as

per Peter Eisentraut f0ed3a8a99b052d2d5e0b6153a8907b90c486636

This is for reference to the next commitfest.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle.patch | text/x-diff | 5.6 KB |

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-01 09:00:27 |

| Message-ID: | alpine.DEB.2.02.1306011053070.12964@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

New submission for the next commit fest.

This new version also reports the average lag time, i.e. the delay between

scheduled and actual transaction start times. This may help detect whether

things went smothly, or if at some time some delay was introduced because

of the load and some catchup was done afterwards.

Question 1: should it report the maximum lang encountered?

Question 2: the next step would be to have the current lag shown under

option --progress, but that would mean having a combined --throttle

--progress patch submission, or maybe dependencies between patches.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle.patch | text/x-diff | 7.8 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-08 19:31:13 |

| Message-ID: | 51B38681.70509@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/1/13 5:00 AM, Fabien COELHO wrote:

> Question 1: should it report the maximum lang encountered?

I haven't found the lag measurement to be very useful yet, outside of

debugging the feature itself. Accordingly I don't see a reason to add

even more statistics about the number outside of testing the code. I'm

seeing some weird lag problems that this will be useful for though right

now, more on that a few places below.

> Question 2: the next step would be to have the current lag shown under

> option --progress, but that would mean having a combined --throttle

> --progress patch submission, or maybe dependencies between patches.

This is getting too far ahead. Let's get the throttle part nailed down

before introducing even more moving parts into this. I've attached an

updated patch that changes a few things around already. I'm not done

with this yet and it needs some more review before commit, but it's not

too far away from being ready.

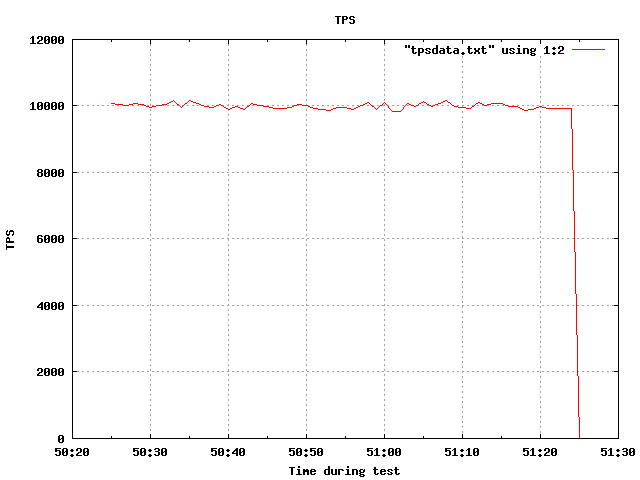

This feature works quite well. On a system that will run at 25K TPS

without any limit, I did a run with 25 clients and a rate of 400/second,

aiming at 10,000 TPS, and that's what I got:

number of clients: 25

number of threads: 1

duration: 60 s

number of transactions actually processed: 599620

average transaction lag: 0.307 ms

tps = 9954.779317 (including connections establishing)

tps = 9964.947522 (excluding connections establishing)

I never thought of implementing the throttle like this before, but it

seems to work out well so far. Check out tps.png to see the smoothness

of the TPS curve (the graphs came out of pgbench-tools. There's a

little more play outside of the target than ideal for this case. Maybe

it's worth tightening the Poisson curve a bit around its center?

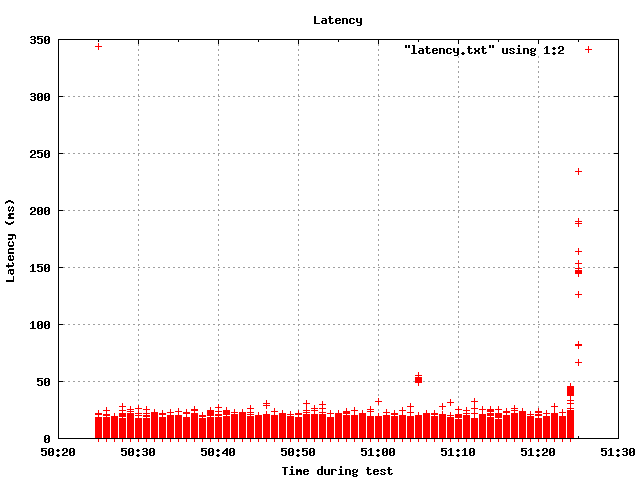

The main implementation issue I haven't looked into yet is why things

can get weird at the end of the run. See the latency.png graph attached

and you can see what I mean.

I didn't like the naming on this option or all of the ways you could

specify the delay. None of those really added anything, since you can

get every other behavior by specifying a non-integer TPS. And using the

word "throttle" inside the code is fine, but I didn't like exposing that

implementation detail more than it had to be.

What I did instead was think of this as a transaction rate target, which

makes the help a whole lot simpler:

-R SPEC, --rate SPEC

target rate per client in transactions per second

Made the documentation easier to write too. I'm not quite done with

that yet, the docs wording in this updated patch could still be better.

I personally would like this better if --rate specified a *total* rate

across all clients. However, there are examples of both types of

settings in the program already, so there's no one precedent for which

is right here. -t is per-client and now -R is too; I'd prefer it to be

like -T instead. It's not that important though, and the code is

cleaner as it's written right now. Maybe this is better; I'm not sure.

I did some basic error handling checks on this and they seemed good, the

program rejects target rates of <=0.

On the topic of this weird latency spike issue, I did see that show up

in some of the results too. Here's one where I tried to specify a rate

higher than the system can actually handle, 80000 TPS total on a

SELECT-only test

$ pgbench -S -T 30 -c 8 -j 4 -R10000tps pgbench

starting vacuum...end.

transaction type: SELECT only

scaling factor: 100

query mode: simple

number of clients: 8

number of threads: 4

duration: 30 s

number of transactions actually processed: 761779

average transaction lag: 10298.380 ms

tps = 25392.312544 (including connections establishing)

tps = 25397.294583 (excluding connections establishing)

It was actually limited by the capabilities of the hardware, 25K TPS.

10298 ms of lag per transaction can't be right though.

Some general patch submission suggestions for you as a new contributor:

-When re-submitting something with improvements, it's a good idea to add

a version number to the patch so reviewers can tell them apart easily.

But there is no reason to change the subject line of the e-mail each

time. I followed that standard here. If you updated this again I would

name the file pgbench-throttle-v9.patch but keep the same e-mail subject.

-There were some extra carriage return characters in your last

submission. Wasn't a problem this time, but if you can get rid of those

that makes for a better patch.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| Attachment | Content-Type | Size |

|---|---|---|

|

image/png | 4.0 KB |

|

image/png | 4.4 KB |

| pgbench-throttle-v8.patch | text/plain | 7.2 KB |

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-10 10:40:04 |

| Message-ID: | alpine.DEB.2.02.1306101010220.12980@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hello Greg,

Thanks for this very detailed review and the suggestions!

I'll submit a new patch

>> Question 1: should it report the maximum lang encountered?

>

> I haven't found the lag measurement to be very useful yet, outside of

> debugging the feature itself. Accordingly I don't see a reason to add even

> more statistics about the number outside of testing the code. I'm seeing

> some weird lag problems that this will be useful for though right now, more

> on that a few places below.

I'll explain below why it is really interesting to get this figure, and

that it is not really available as precisely elsewhere.

>> Question 2: the next step would be to have the current lag shown under

>> option --progress, but that would mean having a combined --throttle

>> --progress patch submission, or maybe dependencies between patches.

>

> This is getting too far ahead.

Ok!

> Let's get the throttle part nailed down before introducing even more

> moving parts into this. I've attached an updated patch that changes a

> few things around already. I'm not done with this yet and it needs some

> more review before commit, but it's not too far away from being ready.

Ok. I'll submit a new version by the end of the week.

> This feature works quite well. On a system that will run at 25K TPS without

> any limit, I did a run with 25 clients and a rate of 400/second, aiming at

> 10,000 TPS, and that's what I got:

>

> number of clients: 25

> number of threads: 1

> duration: 60 s

> number of transactions actually processed: 599620

> average transaction lag: 0.307 ms

> tps = 9954.779317 (including connections establishing)

> tps = 9964.947522 (excluding connections establishing)

>

> I never thought of implementing the throttle like this before,

Stochastic processes are a little bit magic:-)

> but it seems to work out well so far. Check out tps.png to see the

> smoothness of the TPS curve (the graphs came out of pgbench-tools.

> There's a little more play outside of the target than ideal for this

> case. Maybe it's worth tightening the Poisson curve a bit around its

> center?

The point of a Poisson distribution is to model random events the kind of

which are a little bit irregular, such as web requests or queuing clients

at a taxi stop. I cannot really change the formula, but if you want to

argue with Siméon Denis Poisson, hist current address is 19th section of

"Père Lachaise" graveyard in Paris:-)

More seriously, the only parameter that can be changed is the "1000000.0"

which drives the granularity of the Poisson process. A smaller value would

mean a smaller potential multiplier; that is how far from the average time

the schedule can go. This may come under "tightening", although it would

depart from a "perfect" process and possibly may be a little less

"smooth"... for a given definition of "tight", "perfect" and "smooth":-)

> [...] What I did instead was think of this as a transaction rate target,

> which makes the help a whole lot simpler:

>

> -R SPEC, --rate SPEC

> target rate per client in transactions per second

Ok, I'm fine with this name.

> Made the documentation easier to write too. I'm not quite done with that

> yet, the docs wording in this updated patch could still be better.

I'm not an English native speaker, any help is welcome here. I'll do my

best.

> I personally would like this better if --rate specified a *total* rate across

> all clients.

Ok, I can do that, with some reworking so that the stochastic process is

shared by all threads instead of being within each client. This mean that

a lock between threads to access some variables, which should not impact

the test much. Another option is to have a per-thread stochastic process.

> However, there are examples of both types of settings in the

> program already, so there's no one precedent for which is right here. -t is

> per-client and now -R is too; I'd prefer it to be like -T instead. It's not

> that important though, and the code is cleaner as it's written right now.

> Maybe this is better; I'm not sure.

I like the idea of just one process instead of a per-client one. I did not

try at the beginning because the implementation is less straightforward.

> On the topic of this weird latency spike issue, I did see that show up in

> some of the results too.

Your example illustrates *exactly* why the lag measure was added.

The Poisson processes generate an ideal event line (that is irregularly

scheduled transaction start times targetting the expected tps) which

induces a varrying load that the database is trying to handle.

If it cannot start right away, this means that some transactions are

differed with respect to their schedule start time. The measure latency

reports exactly that: the clients do not handle the load. There may be

some catchup later, that is the clients come back in line with the

scheduled transactions.

I need to put this measure here because the "schedluled time" is only

known to pgbench and not available elsewhere. The max would really be more

interesting than the mean, so as to catch that some things were

temporarily amiss, even if things went back to nominal later.

> Here's one where I tried to specify a rate higher

> than the system can actually handle, 80000 TPS total on a SELECT-only test

>

> $ pgbench -S -T 30 -c 8 -j 4 -R10000tps pgbench

> starting vacuum...end.

> transaction type: SELECT only

> scaling factor: 100

> query mode: simple

> number of clients: 8

> number of threads: 4

> duration: 30 s

> number of transactions actually processed: 761779

> average transaction lag: 10298.380 ms

The interpretation is the following: as the database cannot handle the

load, transactions were processed on average 10 seconds behind their

scheduled transaction time. You had on average a 10 second latency to

answer "incoming" requests. Also some transactions where implicitely not

even scheduled, so the situation is worse than that...

> tps = 25392.312544 (including connections establishing)

> tps = 25397.294583 (excluding connections establishing)

>

> It was actually limited by the capabilities of the hardware, 25K TPS. 10298

> ms of lag per transaction can't be right though.

>

> Some general patch submission suggestions for you as a new contributor:

Hmmm, I did a few things such as "pgxs" back in 2004, so maybe "not very

active" is a better description than "new":-)

> -When re-submitting something with improvements, it's a good idea to add a

> version number to the patch so reviewers can tell them apart easily. But

> there is no reason to change the subject line of the e-mail each time. I

> followed that standard here. If you updated this again I would name the file

> pgbench-throttle-v9.patch but keep the same e-mail subject.

Ok.

> -There were some extra carriage return characters in your last submission.

> Wasn't a problem this time, but if you can get rid of those that makes for a

> better patch.

Ok.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-10 22:02:48 |

| Message-ID: | alpine.DEB.2.02.1306102351580.18266@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Here is submission v9 based on your v8 version.

- the tps is global, with a mutex to share the global stochastic process

- there is an adaptation for the "fork" emulation

- I do not know wheter this works with Win32 pthread stuff.

- reduced multiplier ln(1000000) -> ln(1000)

- avg & max throttling lag are reported

> There's a little more play outside of the target than ideal for this

> case. Maybe it's worth tightening the Poisson curve a bit around its

> center?

A stochastic process moves around the target value, but is not right on

it.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle-v9.patch | text/x-diff | 10.6 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-11 15:34:37 |

| Message-ID: | 51B7438D.6060101@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/10/13 6:02 PM, Fabien COELHO wrote:

> - the tps is global, with a mutex to share the global stochastic process

> - there is an adaptation for the "fork" emulation

> - I do not know wheter this works with Win32 pthread stuff.

Instead of this complexity, can we just split the TPS input per client?

That's all I was thinking of here, not adding a new set of threading

issues. If 10000 TPS is requested and there's 10 clients, just set the

delay so that each of them targets 1000 TPS.

I'm guessing it's more accurate to have them all communicate as you've

done here, but it seems like a whole class of new bugs and potential

bottlenecks could come out of that. Whenever someone touches the

threading model for pgbench it usually gives a stack of build farm

headaches. Better to avoid those unless there's really a compelling

reason to go through that.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-11 19:27:19 |

| Message-ID: | alpine.DEB.2.02.1306112104420.6293@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

>> - the tps is global, with a mutex to share the global stochastic process

>> - there is an adaptation for the "fork" emulation

>> - I do not know wheter this works with Win32 pthread stuff.

>

> Instead of this complexity,

Well, the mutex impact is very localized in the code. The complexity is

more linked to the three "thread" implementations intermixed there.

> can we just split the TPS input per client?

Obviously it is possible. Note that it is more logical to do that per

thread. I did one shared stochastic process because it makes more sense to

have just one.

> That's all I was thinking of here, not adding a new set of threading issues.

> If 10000 TPS is requested and there's 10 clients, just set the delay so that

> each of them targets 1000 TPS.

Ok, so I understand that a mutex is too much!

> I'm guessing it's more accurate to have them all communicate as you've done

> here, but it seems like a whole class of new bugs and potential bottlenecks

> could come out of that.

I do not think that there is a performance or locking contension issue: it

is about getting a mutex for a section which performs one integer add and

two integer assignements, that is about 3 instructions, to be compared

with the task of performing database operations over the network. There

are several orders of magnitudes between those tasks. It would need a more

than terrible mutex implementation to have any significant impact.

> Whenever someone touches the threading model for pgbench it usually

> gives a stack of build farm headaches. Better to avoid those unless

> there's really a compelling reason to go through that.

I agree that the threading model in pgbench is a mess, mostly because of

the 3 concurrent implementations intermixed in the code. Getting rid of

the fork emulation and win32 special handling and only keeping the pthread

implementation, which seems to be available even on windows, would be a

relief. I'm not sure if there is still a rationale to have these 3

implementations, but it ensures a maintenance mess:-(

I'll submit a version without mutex, but ISTM that this one is

conceptually cleaner, although I'm not sure about what happens on windows.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-11 20:11:37 |

| Message-ID: | alpine.DEB.2.02.1306112203080.6293@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Submission 10:

- per thread throttling instead of a global process with a mutex.

this avoids a mutex, and the process is shared between clients

of a given thread.

- ISTM that there "thread start time" should be initialized at the

beginning of threadRun instead of in the loop *before* thread creation,

otherwise the thread creation delays are incorporated in the

performance measure, but ISTM that the point of pgbench is not to

measure thread creation performance...

I've added a comment suggesting where it should be put instead,

first thing in threadRun.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle-v10.patch | text/x-diff | 9.4 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-11 20:22:29 |

| Message-ID: | 51B78705.7050603@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/11/13 4:11 PM, Fabien COELHO wrote:

> - ISTM that there "thread start time" should be initialized at the

> beginning of threadRun instead of in the loop *before* thread creation,

> otherwise the thread creation delays are incorporated in the

> performance measure, but ISTM that the point of pgbench is not to

> measure thread creation performance...

I noticed that, but it seemed a pretty minor issue. Did you look at the

giant latency spikes at the end of the test run I submitted the graph

for? I wanted to nail down what was causing those before worrying about

the startup timing.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-11 21:13:05 |

| Message-ID: | alpine.DEB.2.02.1306112254010.10500@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

>> - ISTM that there "thread start time" should be initialized at the

>> beginning of threadRun instead of in the loop *before* thread creation,

>> otherwise the thread creation delays are incorporated in the

>> performance measure, but ISTM that the point of pgbench is not to

>> measure thread creation performance...

>

> I noticed that, but it seemed a pretty minor issue.

Not for me, because the "max lag" measured in my first version was really

the threads creation time, not very interesting.

> Did you look at the giant latency spikes at the end of the test run I

> submitted the graph for?

I've looked at the graph you sent. I must admit that I did not understand

exactly what is measured and where it is measured. Because of its position

at the end of the run, I thought of some disconnection related effects

when pgbench run is interrupted by a time out signal, so some things are

done more slowly. Fine with me, we are stopping anyway, and out of the

steady state.

> I wanted to nail down what was causing those before worrying about the

> startup timing.

Well, the short answer is that I'm not worried by that, for the reason

explained above. I would be worried if it was anywhere else but where the

transactions are interrupted, the connections are closed and the threads

are stopped. I may be wrong:-)

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-12 07:19:36 |

| Message-ID: | alpine.DEB.2.02.1306120859540.10500@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> Did you look at the giant latency spikes at the end of the test run I

> submitted the graph for? I wanted to nail down what was causing those

> before worrying about the startup timing.

If you are still worried: if you run the very same command without

throttling and measure the same latency, does the same thing happens at

the end? My guess is that it should be "yes". If it is no, I'll try out

pgbench-tools.

--

Fabien.

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-14 14:09:54 |

| Message-ID: | 51BB2432.6040102@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/12/13 3:19 AM, Fabien COELHO wrote:

> If you are still worried: if you run the very same command without

> throttling and measure the same latency, does the same thing happens at

> the end? My guess is that it should be "yes". If it is no, I'll try out

> pgbench-tools.

It looks like it happens rarely for one client without the rate limit,

but that increases to every time for multiple client with limiting in

place. pgbench-tools just graphs the output from the latency log.

Here's a setup that runs the test I'm doing:

$ createdb pgbench

$ pgbench -i -s 10 pgbench

$ pgbench -S -c 25 -T 30 -l pgbench && tail -n 40 pgbench_log*

Sometimes there's no slow entries. but I've seen this once so far:

0 21822 1801 0 1371217462 945264

1 21483 1796 0 1371217462 945300

8 20891 1931 0 1371217462 945335

14 20520 2084 0 1371217462 945374

15 20517 1991 0 1371217462 945410

16 20393 1928 0 1371217462 945444

17 20183 2000 0 1371217462 945479

18 20277 2209 0 1371217462 945514

23 20316 2114 0 1371217462 945549

22 20267 250128 0 1371217463 193656

The third column is the latency for that transaction. Notice how it's a

steady ~2000 us except for the very last transaction, which takes

250,128 us. That's the weird thing; these short SELECT statements

should never take that long. It suggests there's something weird

happening with how the client exits, probably that its latency number is

being computed after more work than it should.

Here's what a rate limited run looks like for me. Note that I'm still

using the version I re-submitted since that's where I ran into this

issue, I haven't merged your changes to split the rate among each client

here--which means this is 400 TPS per client == 10000 TPS total:

$ pgbench -S -c 25 -T 30 -R 400 -l pgbench && tail -n 40 pgbench_log

7 12049 2070 0 1371217859 195994

22 12064 2228 0 1371217859 196115

18 11957 1570 0 1371217859 196243

23 12130 989 0 1371217859 196374

8 11922 1598 0 1371217859 196646

11 12229 4833 0 1371217859 196702

21 11981 1943 0 1371217859 196754

20 11930 1026 0 1371217859 196799

14 11990 13119 0 1371217859 208014

^^^ fast section

vvv delayed section

1 11982 91926 0 1371217859 287862

2 12033 116601 0 1371217859 308644

6 12195 115957 0 1371217859 308735

17 12130 114375 0 1371217859 308776

0 12026 115507 0 1371217859 308822

3 11948 118228 0 1371217859 308859

4 12061 113484 0 1371217859 308897

5 12110 113586 0 1371217859 308933

9 12032 117744 0 1371217859 308969

10 12045 114626 0 1371217859 308989

12 11953 113372 0 1371217859 309030

13 11883 114405 0 1371217859 309066

15 12018 116069 0 1371217859 309101

16 11890 115727 0 1371217859 309137

19 12140 114006 0 1371217859 309177

24 11884 115782 0 1371217859 309212

There's almost 90,000 usec of latency showing up between epoch time

1371217859.208014 and 1371217859.287862 here. What's weird about it is

that the longer the test runs, the larger the gap is. If collecting the

latency data itself caused the problem, that would make sense, so maybe

this is related to flushing that out to disk.

If you want to look just at the latency numbers without the other

columns in the way you can use:

cat pgbench_log.* | awk {'print $3'}

That is how I was evaluating the smoothness of the rate limit, by

graphing those latency values. pgbench-tools takes those and a derived

TPS/s number and plots them, which made it easier for me to spot this

weirdness. But I've already moved onto analyzing the raw latency data

instead, I can see the issue without the graph once I've duplicated the

conditions.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-14 17:46:22 |

| Message-ID: | 51BB56EE.4030405@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I don't have this resolved yet, but I think I've identified the cause.

Updating here mainly so Fabien doesn't duplicate my work trying to track

this down. I'm going to keep banging at this until it's resolved now

that I got this far.

Here's a slow transaction:

1371226017.568515 client 1 executing \set naccounts 100000 * :scale

1371226017.568537 client 1 throttling 6191 us

1371226017.747858 client 1 executing \setrandom aid 1 :naccounts

1371226017.747872 client 1 sending SELECT abalance FROM pgbench_accounts

WHERE aid = 268721;

1371226017.789816 client 1 receiving

That confirms it is getting stuck at the "throttling" step. Looks like

the code pauses there because it's trying to overload the "sleeping"

state that was already in pgbench, but handle it in a special way inside

of doCustom(), and that doesn't always work.

The problem is that pgbench doesn't always stay inside doCustom when a

client sleeps. It exits there to poll for incoming messages from the

other clients, via select() on a shared socket. It's not safe to assume

doCustom will be running regularly; that's only true if clients keep

returning messages.

So as long as other clients keep banging on the shared socket, doCustom

is called regularly, and everything works as expected. But at the end

of the test run that happens less often, and that's when the problem

shows up.

pgbench already has a "\sleep" command, and the way that delay is

handled happens inside threadRun() instead. The pausing of the rate

limit throttle needs to operate in the same place. I have to redo a few

things to confirm this actually fixes the issue, as well as look at

Fabien's later updates to this since I wandered off debugging. I'm sure

it's in the area of code I'm poking at now though.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-14 18:14:32 |

| Message-ID: | alpine.DEB.2.02.1306142001440.10940@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> pgbench already has a "\sleep" command, and the way that delay is

> handled happens inside threadRun() instead. The pausing of the rate

> limit throttle needs to operate in the same place.

It does operate at the same place. The throttling is performed by

inserting a "sleep" first thing when starting a new transaction. So if

their is a weirdness, it should show as well without throttling but with a

fixed \sleep instead?

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-14 19:50:16 |

| Message-ID: | alpine.DEB.2.02.1306142033530.10940@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hello Greg,

I think that the weirdness really comes from the way transactions times

are measured, their interactions with throttling, and latent bugs in the

code.

One issue is that the throttling time was included in the measure, but not

the first time because "txn_begin" is not set at the beginning of

doCustom.

Also, flag st->listen is set to 1 but *never* set back to 0...

sh> grep listen pgbench.c

int listen;

if (st->listen)

st->listen = 1;

st->listen = 1;

st->listen = 1;

st->listen = 1;

st->listen = 1;

st->listen = 1;

ISTM that I can fix the "weirdness" by inserting an ugly "goto top;", but

I would feel better about it by removing all gotos and reordering some

actions in doCustom in a more logical way. However that would be a bigger

patch.

Please find attached 2 patches:

- the first is the full throttle patch which ensures that the

txn_begin is taken at a consistent point, after throttling,

which requires resetting "listen". There is an ugly goto.

I've also put times in a consistent format in the log,

"789.012345" instead of "789 12345".

- the second patch just shows the diff between v10 and the first one.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle-test.patch | text/x-diff | 10.4 KB |

| pgbench-throttle-v10-to-test.patch | text/x-diff | 1.5 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-14 20:19:27 |

| Message-ID: | 51BB7ACF.4000300@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/14/13 3:50 PM, Fabien COELHO wrote:

> I think that the weirdness really comes from the way transactions times

> are measured, their interactions with throttling, and latent bugs in the

> code.

measurement times, no; interactions with throttling, no. If it was

either of those I'd have finished this off days ago. Latent bugs,

possibly. We may discover there's nothing wrong with your code at the

end here, that it just makes hitting this bug more likely.

Unfortunately today is the day *some* bug is popping up, and I want to

get it squashed before I'll be happy.

The lag is actually happening during a kernel call that isn't working as

expected. I'm not sure whether this bug was there all along if \sleep

was used, or if it's specific to the throttle sleep.

> Also, flag st->listen is set to 1 but *never* set back to 0...

I noticed that st-listen was weird too, and that's on my short list of

suspicious things I haven't figured out yet.

I added a bunch more logging as pgbench steps through its work to track

down where it's stuck at. Until the end all transactions look like this:

1371238832.084783 client 10 throttle lag 2 us

1371238832.084783 client 10 executing \setrandom aid 1 :naccounts

1371238832.084803 client 10 sending SELECT abalance FROM

pgbench_accounts WHERE aid = 753099;

1371238832.084840 calling select

1371238832.086539 client 10 receiving

1371238832.086539 client 10 finished

All clients who hit lag spikes at the end are going through this

sequence instead:

1371238832.085912 client 13 throttle lag 790 us

1371238832.085912 client 13 executing \setrandom aid 1 :naccounts

1371238832.085931 client 13 sending SELECT abalance FROM

pgbench_accounts WHERE aid = 564894;

1371238832.086592 client 13 receiving

1371238832.086662 calling select

1371238832.235543 client 13 receiving

1371238832.235543 client 13 finished

Note the "calling select" here that wasn't in the normal length

transaction before it. The client is receiving something here, but

rather than it finishing the transaction it falls through and ends up at

the select() system call outside of doCustom. All of the clients that

are sleeping when the system slips into one of these long select() calls

are getting stuck behind it. I'm not 100% sure, but I think this only

happens when all remaining clients are sleeping.

Here's another one, it hits the receive that doesn't finish the

transaction earlier (1371238832.086587) but then falls into the same

select() call at 1371238832.086662:

1371238832.085884 client 12 throttle lag 799 us

1371238832.085884 client 12 executing \setrandom aid 1 :naccounts

1371238832.085903 client 12 sending SELECT abalance FROM

pgbench_accounts WHERE aid = 299080;

1371238832.086587 client 12 receiving

1371238832.086662 calling select

1371238832.231032 client 12 receiving

1371238832.231032 client 12 finished

Investigation is still going here...

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-14 20:47:11 |

| Message-ID: | alpine.DEB.2.02.1306142227180.10940@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

>> I think that the weirdness really comes from the way transactions times

>> are measured, their interactions with throttling, and latent bugs in the

>> code.

>

> measurement times, no; interactions with throttling, no. If it was either of

> those I'd have finished this off days ago. Latent bugs, possibly. We may

> discover there's nothing wrong with your code at the end here,

To summarize my point: I think my v10 code does not take into account all

of the strangeness in doCustom, and I'm pretty sure that there is no point

in including thottle sleeps into latency measures, which was more or less

the case. So it is somehow a "bug" which only shows up if you look at the

latency measures, but the tps are fine.

> that it just makes hitting this bug more likely. Unfortunately today is

> the day *some* bug is popping up, and I want to get it squashed before

> I'll be happy.

>

> The lag is actually happening during a kernel call that isn't working as

> expected. I'm not sure whether this bug was there all along if \sleep was

> used, or if it's specific to the throttle sleep.

The throttle sleep is inserted out of the state machine. That is why in

the "test" patch I added a goto to ensure that it is always taken at the

right time, that is when state==0 and before txn_begin is set, and not

possibly between other states when doCustom happens to be recalled after a

return.

> I added a bunch more logging as pgbench steps through its work to track down

> where it's stuck at. Until the end all transactions look like this:

>

> 1371238832.084783 client 10 throttle lag 2 us

> 1371238832.084783 client 10 executing \setrandom aid 1 :naccounts

> 1371238832.084803 client 10 sending SELECT abalance FROM pgbench_accounts

> WHERE aid = 753099;

> 1371238832.084840 calling select

> 1371238832.086539 client 10 receiving

> 1371238832.086539 client 10 finished

>

> All clients who hit lag spikes at the end are going through this sequence

> instead:

>

> 1371238832.085912 client 13 throttle lag 790 us

> 1371238832.085912 client 13 executing \setrandom aid 1 :naccounts

> 1371238832.085931 client 13 sending SELECT abalance FROM pgbench_accounts

> WHERE aid = 564894;

> 1371238832.086592 client 13 receiving

> 1371238832.086662 calling select

> 1371238832.235543 client 13 receiving

> 1371238832.235543 client 13 finished

> Note the "calling select" here that wasn't in the normal length transaction

> before it. The client is receiving something here, but rather than it

> finishing the transaction it falls through and ends up at the select() system

> call outside of doCustom. All of the clients that are sleeping when the

> system slips into one of these long select() calls are getting stuck behind

> it. I'm not 100% sure, but I think this only happens when all remaining

> clients are sleeping.

Note: in both the slow cases there is a "receiving" between "sending" and

"select". This suggests that the "goto top" at the very end of doCustom is

followed in one case but not the other.

ISTM that there is a timeout passed to select which is computed based on

the current sleeping time of each client. I'm pretty sure that not a well

tested path...

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-15 19:56:52 |

| Message-ID: | alpine.DEB.2.02.1306152151120.10940@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hello Greg,

I've done some more testing with the "test" patch. I have not seen any

spike at the end of the throttled run.

The attached version 11 patch does ensure that throttle added sleeps are

not included in latency measures (-r) and that throttling is performed

right at the beginning of a transaction. There is an ugly goto to do that.

I think there is still a latent bug in the code with listen which should

be set back to 0 in other places, but this bug is already there.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle-v11.patch | text/x-diff | 9.9 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-19 01:17:49 |

| Message-ID: | 51C106BD.2090407@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I'm still getting the same sort of pauses waiting for input with your

v11. This is a pretty frustrating problem; I've spent about two days so

far trying to narrow down how it happens. I've attached the test

program I'm using. It seems related to my picking a throttled rate

that's close to (but below) the maximum possible on your system. I'm

using 10,000 on a system that can do about 16,000 TPS when running

pgbench in debug mode.

This problem is 100% reproducible here; happens every time. This is a

laptop running Mac OS X. It's possible the problem is specific to that

platform. I'm doing all my tests with the database itself setup for

development, with debug and assertions on. The lag spikes seem smaller

without assertions on, but they are still there.

Here's a sample:

transaction type: SELECT only

scaling factor: 10

query mode: simple

number of clients: 25

number of threads: 1

duration: 30 s

number of transactions actually processed: 301921

average transaction lag: 1.133 ms (max 137.683 ms)

tps = 10011.527543 (including connections establishing)

tps = 10027.834189 (excluding connections establishing)

And those slow ones are all at the end of the latency log file, as shown

in column 3 here:

22 11953 3369 0 1371578126 954881

23 11926 3370 0 1371578126 954918

3 12238 30310 0 1371578126 984634

7 12205 30350 0 1371578126 984742

8 12207 30359 0 1371578126 984792

11 12176 30325 0 1371578126 984837

13 12074 30292 0 1371578126 984882

0 12288 175452 0 1371578127 126340

9 12194 171948 0 1371578127 126421

12 12139 171915 0 1371578127 126466

24 11876 175657 0 1371578127 126507

Note that there are two long pauses here, which happens maybe half of

the time. There's a 27 ms pause and then a 145 ms one.

The exact sequence for when the pauses show up is:

-All remaining clients have sent their SELECT statement and are waiting

for a response. They are not sleeping, they're waiting for the server

to return a query result.

-A client receives part of the data it wants, but there is still data

left. This is the path through pgbench.c where the "if

(PQisBusy(st->con))" test is true after receiving some information. I

hacked up some logging that distinguishes those as a "client %d partial

receive" to make this visible.

-A select() call is made with no timeout, so it can only be satisfied by

more data being returned.

-Around ~100ms (can be less, can be more) goes by before that select()

returns more data to the client, where normally it would happen in ~2ms.

You were concerned about a possible bug in the timeout code. If you

hack up the select statement to show some state information, the setup

for the pauses at the end always looks like this:

calling select min_usec=9223372036854775807 sleeping=0

When no one is sleeping, the timeout becomes infinite, so only returning

data will break it. This is intended behavior though. This exact same

sequence and select() call parameters happen in pgbench code every time

at the end of a run. But clients without the throttling patch applied

never seem to get into the state where they pause for so long, waiting

for one of the active clients to receive the next bit of result.

I don't think the st->listen related code has anything to do with this

either. That flag is only used to track when clients have completed

sending their first query over to the server. Once reaching that point

once, afterward they should be "listening" for results each time they

exit the doCustom() code. st->listen goes to 1 very soon after startup

and then it stays there, and that logic seems fine too.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| Attachment | Content-Type | Size |

|---|---|---|

| test | text/plain | 194 bytes |

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-19 13:57:56 |

| Message-ID: | alpine.DEB.2.02.1306191531570.25404@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> I'm still getting the same sort of pauses waiting for input with your v11.

Alas.

> This is a pretty frustrating problem; I've spent about two days so far trying

> to narrow down how it happens. I've attached the test program I'm using. It

> seems related to my picking a throttled rate that's close to (but below) the

> maximum possible on your system. I'm using 10,000 on a system that can do

> about 16,000 TPS when running pgbench in debug mode.

>

> This problem is 100% reproducible here; happens every time. This is a laptop

> running Mac OS X. It's possible the problem is specific to that platform.

> I'm doing all my tests with the database itself setup for development, with

> debug and assertions on. The lag spikes seem smaller without assertions on,

> but they are still there.

>

> Here's a sample:

>

> transaction type: SELECT only

What is this test script? I'm doing pgbench for tests.

> scaling factor: 10

> query mode: simple

> number of clients: 25

> number of threads: 1

> duration: 30 s

> number of transactions actually processed: 301921

> average transaction lag: 1.133 ms (max 137.683 ms)

> tps = 10011.527543 (including connections establishing)

> tps = 10027.834189 (excluding connections establishing)

>

> And those slow ones are all at the end of the latency log file, as shown in

> column 3 here:

>

> 22 11953 3369 0 1371578126 954881

> 23 11926 3370 0 1371578126 954918

> 3 12238 30310 0 1371578126 984634

> 7 12205 30350 0 1371578126 984742

> 8 12207 30359 0 1371578126 984792

> 11 12176 30325 0 1371578126 984837

> 13 12074 30292 0 1371578126 984882

> 0 12288 175452 0 1371578127 126340

> 9 12194 171948 0 1371578127 126421

> 12 12139 171915 0 1371578127 126466

> 24 11876 175657 0 1371578127 126507

Indeed, there are two spikes, but not all clients are concerned.

As I have not seen that, debuging is hard. I'll give it a try on

tomorrow.

> When no one is sleeping, the timeout becomes infinite, so only returning data

> will break it. This is intended behavior though.

This is not coherent. Under --throttle there should basically always be

someone asleep, unless the server cannot cope with the load and *all*

transactions are late. A no time out state looks pretty unrealistic,

because it means that there is no throttling.

> I don't think the st->listen related code has anything to do with this

> either. That flag is only used to track when clients have completed sending

> their first query over to the server. Once reaching that point once,

> afterward they should be "listening" for results each time they exit the

> doCustom() code.

This assumption seems false if you can have a "sleep" at the beginning of

the sequence, which is what throttle is doing, but can be done by any

custom script, so that the client is expected to wait before sending any

command, thus there can be no select underway in that case.

So listen should be set to 1 when a select as been sent, and set back to 0

when the result data have all been received.

"doCustom" makes implicit assumptions about what is going on, whereas it

should focus on looking at the incoming state, performing operations, and

leaving with a state which correspond to the actual status, without

assumptions about what is going to happen next.

> st->listen goes to 1 very soon after startup and then it stays there,

> and that logic seems fine too.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-19 18:42:30 |

| Message-ID: | alpine.DEB.2.02.1306192034560.25404@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> number of transactions actually processed: 301921

Just a thought before spending too much time on this subtle issue.

The patch worked reasonnably for 301900 transactions in your above run,

and the few last ones, less than the number of clients, show strange

latency figures which suggest that something is amiss in some corner case

when pgbench is stopping. However, the point of pgbench is to test a

steady state, not to achieve the cleanest stop at the end of a run.

So my question is: should this issue be a blocker wrt to the feature?

--

Fabien.

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | Greg Smith <greg(at)2ndquadrant(dot)com>, PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-21 13:28:37 |

| Message-ID: | CA+TgmoYMw6xssTqKhKzS88orXScM+B_hXSf8x_5WcJPdxdnXbw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 19, 2013 at 2:42 PM, Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> wrote:

>> number of transactions actually processed: 301921

> Just a thought before spending too much time on this subtle issue.

>

> The patch worked reasonnably for 301900 transactions in your above run, and

> the few last ones, less than the number of clients, show strange latency

> figures which suggest that something is amiss in some corner case when

> pgbench is stopping. However, the point of pgbench is to test a steady

> state, not to achieve the cleanest stop at the end of a run.

>

> So my question is: should this issue be a blocker wrt to the feature?

I think so. If it doesn't get fixed now, it's not likely to get fixed

later. And the fact that nobody understands why it's happening is

kinda worrisome...

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com>, Greg Smith <greg(at)2ndquadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-22 16:54:40 |

| Message-ID: | alpine.DEB.2.02.1306221634211.23902@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Dear Robert and Greg,

> I think so. If it doesn't get fixed now, it's not likely to get fixed

> later. And the fact that nobody understands why it's happening is

> kinda worrisome...

Possibly, but I thing that it is not my fault:-)

So, I spent some time at performance debugging...

First, I finally succeeded in reproducing Greg Smith spikes on my ubuntu

laptop. It needs short transactions and many clients per thread so as to

be a spike. With "pgbench" standard transactions and not too many clients

per thread it is more of a little bump, or even it is not there at all.

After some poking around, and pursuing various red herrings, I resorted to

measure the delay for calling "PQfinish()", which is really the only

special thing going around at the end of pgbench run...

BINGO!

In his tests Greg is using one thread. Once the overall timer is exceeded,

clients start closing their connections by calling PQfinish once their

transactions are done. This call takes between a few us and a few ... ms.

So if some client closing time hit the bad figures, the transactions of

other clients are artificially delayed by this time, and it seems they

have a high latency, but it is really because the thread is in another

client's PQfinish and was not available to process the data. If you have

one thread per client, no problem, especially as the final PQfinish() time

is not measured at all by pgbench:-) Also, the more clients, the higher

the spike because more are to be stopped and may hit the bad figures.

Here is a trace, with the simple SELECT transaction.

sh> ./pgbench --rate=14000 -T 10 -r -l -c 30 -S bench

...

sh> less pgbench_log.*

# short transactions, about 0.250 ms

...

20 4849 241 0 1371916583.455400

21 4844 256 0 1371916583.455470

22 4832 348 0 1371916583.455569

23 4829 218 0 1371916583.455627

** TIMER EXCEEDED **

25 4722 390 0 1371916583.455820

25 done in 1560 [1,2] # BING, 1560 us for PQfinish()

26 4557 1969 0 1371916583.457407

26 done in 21 [1,2]

27 4372 1969 0 1371916583.457447

27 done in 19 [1,2]

28 4009 1910 0 1371916583.457486

28 done in 1445 [1,2] # BOUM

2 interrupted in 1300 [0,0] # BANG

3 interrupted in 15 [0,0]

4 interrupted in 40 [0,0]

5 interrupted in 203 [0,0] # boum?

6 interrupted in 1352 [0,0] # ZIP

7 interrupted in 18 [0,0]

...

23 interrupted in 12 [0,0]

## the cumulated PQfinish() time above is about 6 ms which

## appears as an apparent latency for the next clients:

0 4880 6521 0 1371916583.462157

0 done in 9 [1,2]

1 4877 6397 0 1371916583.462194

1 done in 9 [1,2]

24 4807 6796 0 1371916583.462217

24 done in 9 [1,2]

...

Note that the bad measures also appear when there is no throttling:

sh> ./pgbench -T 10 -r -l -c 30 -S bench

sh> grep 'done.*[0-9][0-9][0-9]' pgbench_log.*

0 done in 1974 [1,2]

1 done in 312 [1,2]

3 done in 2159 [1,2]

7 done in 409 [1,2]

11 done in 393 [1,2]

12 done in 2212 [1,2]

13 done in 1458 [1,2]

## other clients execute PQfinish in less than 100 us

This "done" is issued by my instrumented version of clientDone().

The issue does also appear if I instrument pgbench from master, without

anything from the throttling patch at all:

sh> git diff master

diff --git a/contrib/pgbench/pgbench.c b/contrib/pgbench/pgbench.c

index 1303217..7c5ea81 100644

--- a/contrib/pgbench/pgbench.c

+++ b/contrib/pgbench/pgbench.c

@@ -869,7 +869,15 @@ clientDone(CState *st, bool ok)

if (st->con != NULL)

{

+ instr_time now;

+ int64 s0, s1;

+ INSTR_TIME_SET_CURRENT(now);

+ s0 = INSTR_TIME_GET_MICROSEC(now);

PQfinish(st->con);

+ INSTR_TIME_SET_CURRENT(now);

+ s1 = INSTR_TIME_GET_MICROSEC(now);

+ fprintf(stderr, "%d done in %ld [%d,%d]\n",

+ st->id, s1-s0, st->listen, st->state);

st->con = NULL;

}

return false; /* always false */

sh> ./pgbench -T 10 -r -l -c 30 -S bench 2> x.err

sh> grep 'done.*[0-9][0-9][0-9]' x.err

14 done in 1985 [1,2]

16 done in 276 [1,2]

17 done in 1418 [1,2]

So my argumented conclusion is that the issue is somewhere within

PQfinish(), possibly in interaction with pgbench doings, but is *NOT*

related in any way to the throttling patch, as it is preexisting it. Gregs

stumbled upon it because he looked at latencies.

I'll submit a slightly improved v12 shortly.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-22 18:06:39 |

| Message-ID: | alpine.DEB.2.02.1306222001540.23902@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Please find attached a v12, which under timer_exceeded interrupts clients

which are being throttled instead of waiting for the end of the

transaction, as the transaction is not started yet.

I've also changed the log format that I used for debugging the apparent

latency issue:

x y z 12345677 1234 -> x y z 12345677.001234

It seems much clearer that way.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-22 18:09:21 |

| Message-ID: | alpine.DEB.2.02.1306222008370.23902@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> Please find attached a v12, which under timer_exceeded interrupts clients

> which are being throttled instead of waiting for the end of the transaction,

> as the transaction is not started yet.

Oops, I forgot the attachment. Here it is!

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle-v12.patch | text/x-diff | 12.2 KB |

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com>, Greg Smith <greg(at)2ndquadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-23 07:00:45 |

| Message-ID: | alpine.DEB.2.02.1306230839110.1793@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> So my argumented conclusion is that the issue is somewhere within PQfinish(),

> possibly in interaction with pgbench doings, but is *NOT* related in any way

> to the throttling patch, as it is preexisting it. Gregs stumbled upon it

> because he looked at latencies.

An additional thought:

The latency measures *elapsed* time. As a small laptop is running 30

clients and their server processes at a significant load, there are a lot

of context switching going on, so maybe it just happens that the pgbench

process is switched off and then on as PQfinish() is running, so the point

would really be that the host is loaded and that's it. I'm not sure of the

likelyhood of such an event. It is possible that would be more frequent

after timer_exceeded because the system is closing postgres processes, and

would depend on what the kernel process scheduler does.

So the explanation would be: your system is loaded, and it shows in subtle

ways here and there when you do detailed measures. That is life.

Basically this is a summary my (long) experience with performance

experiments on computers. What are you really measuring? What is really

happening?

When a system is loaded, there are many things which interact one with the

other and induce particular effects on performance measures. So usually

what is measured is not what one is expecting.

Greg thought that he was measuring transaction latencies, but it was

really client contention in a thread. I thought that I was measuring

PQfinish() time, but maybe it is the probability of a context switch.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com>, Greg Smith <greg(at)2ndquadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-23 20:08:13 |

| Message-ID: | alpine.DEB.2.02.1306232203250.1793@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> An additional thought:

Yet another thought, hopefully final on this subject.

I think that the probability of a context switch is higher when calling

PQfinish than in other parts of pgbench because it contains system calls

(e.g. closing the network connection) where the kernel is likely to stop

this process and activate another one.

--

Fabien.

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-27 18:23:28 |

| Message-ID: | alpine.DEB.2.02.1306272019070.6384@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> Please find attached a v12, which under timer_exceeded interrupts

> clients which are being throttled instead of waiting for the end of the

> transaction, as the transaction is not started yet.

Please find attached a v13 which fixes conflicts introduced by the long

options patch committed by Robert Haas.

--

Fabien.

| Attachment | Content-Type | Size |

|---|---|---|

| pgbench-throttle-v13.patch | text/x-diff | 12.3 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-29 23:11:21 |

| Message-ID: | 51CF6999.4060103@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/22/13 12:54 PM, Fabien COELHO wrote:

> After some poking around, and pursuing various red herrings, I resorted

> to measure the delay for calling "PQfinish()", which is really the only

> special thing going around at the end of pgbench run...

This wasn't what I was seeing, but it's related. I've proved to myself

the throttle change isn't reponsible for the weird stuff I'm seeing now.

I'd like to rearrange when PQfinish happens now based on what I'm

seeing, but that's not related to this review.

I duplicated the PQfinish problem you found too. On my Linux system,

calls to PQfinish are normally about 36 us long. They will sometimes

get lost for >15ms before they return. That's a different problem

though, because the ones I'm seeing on my Mac are sometimes >150ms.

PQfinish never takes quite that long.

PQfinish doesn't pause for a long time on this platform. But it does

*something* that causes socket select() polling to stutter. I have

instrumented everything interesting in this part of the pgbench code,

and here is the problem event.

1372531862.062236 select with no timeout sleeping=0

1372531862.109111 select returned 6 sockets latency 46875 us

Here select() is called with 0 sleeping processes, 11 that are done, and

14 that are running. The running ones have all sent SELECT statements

to the server, and they are waiting for a response. Some of them

received some data from the server, but they haven't gotten the entire

response back. (The PQfinish calls could be involved in how that happened)

With that setup, select runs for 47 *ms* before it gets the next byte to

a client. During that time 6 clients get responses back to it, but it

stays stuck in there for a long time anyway. Why? I don't know exactly

why, but I am sure that pgbench isn't doing anything weird. It's either

libpq acting funny, or the OS. When pgbench is waiting on a set of

sockets, and none of them are returning anything, that's interesting.

But there's nothing pgbench can do about it.

The cause/effect here is that the randomness to the throttling code

spreads out when all the connections end a bit. There are more times

during which you might have 20 connections finished while 5 still run.

I need to catch up with revisions done to this feature since I started

instrumenting my copy more heavily. I hope I can get this ready for

commit by Monday. I've certainly beaten on the feature for long enough now.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-06-30 06:04:15 |

| Message-ID: | alpine.DEB.2.02.1306300749020.2808@localhost6.localdomain6 |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

> [...] Why? I don't know exactly why, but I am sure that pgbench isn't

> doing anything weird. It's either libpq acting funny, or the OS.

My guess is the OS. "PQfinish" or "select" do/are systems calls that

present opportunities to switch context. I think that the OS is passing

time with other processes on the same host, expecially postgres backends,

when it is not with the client. In order to test that, pgbench should run

on a dedicated box with less threads than the number of available cores,

or user time could be measured in addition to elapsed time. Also, testing

with many clients per thread means that if any client is stuck all other

clients incur an artificial latency: measures are intrinsically fragile.

> I need to catch up with revisions done to this feature since I started

> instrumenting my copy more heavily. I hope I can get this ready for

> commit by Monday.

Ok, thanks!

--

Fabien.

| From: | Josh Berkus <josh(at)agliodbs(dot)com> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr>, Robert Haas <robertmhaas(at)gmail(dot)com>, PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-07-08 18:50:24 |

| Message-ID: | 51DB09F0.8010903@agliodbs.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 06/29/2013 04:11 PM, Greg Smith wrote:

> I need to catch up with revisions done to this feature since I started

> instrumenting my copy more heavily. I hope I can get this ready for

> commit by Monday. I've certainly beaten on the feature for long enough

> now.

Greg, any progress? Haven't seen an update on this in 10 days.

--

Josh Berkus

PostgreSQL Experts Inc.

http://pgexperts.com

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Fabien COELHO <coelho(at)cri(dot)ensmp(dot)fr> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PostgreSQL Developers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PATCH] pgbench --throttle (submission 7 - with lag measurement) |

| Date: | 2013-07-13 15:37:49 |

| Message-ID: | 51E1744D.50104@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/30/13 2:04 AM, Fabien COELHO wrote:

> My guess is the OS. "PQfinish" or "select" do/are systems calls that

> present opportunities to switch context. I think that the OS is passing

> time with other processes on the same host, expecially postgres

> backends, when it is not with the client.

I went looking for other instances of this issue in pgbench results,

that's what I got lost in the last two weeks. It's subtle because the