Re: tweaking NTUP_PER_BUCKET

| Lists: | pgsql-hackers |

|---|

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 00:13:51 |

| Message-ID: | 53B4A03F.3070409@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

while hacking on the 'dynamic nbucket' patch, scheduled for the next CF

(https://commitfest.postgresql.org/action/patch_view?id=1494) I was

repeatedly stumbling over NTUP_PER_BUCKET. I'd like to propose a change

in how we handle it.

TL;DR; version

--------------

I propose dynamic increase of the nbuckets (up to NTUP_PER_BUCKET=1)

once the table is built and there's free space in work_mem. The patch

mentioned above makes implementing this possible / rather simple.

Long version

------------

I've seen discussions in the list in the past, but I don't recall any

numbers comparing impact of different values. So I did a simple test,

measuring the duration of a simple hashjoin with different hashtable

sizes and NTUP_PER_BUCKET values. In short, something like this:

CREATE TABLE outer_table (id INT);

CREATE TABLE inner_table (id INT, val_int INT, val_txt TEXT);

INSERT INTO outer_table SELECT i

FROM generate_series(1,50000000) s(i);

INSERT INTO inner_table SELECT i, mod(i,10000), md5(i::text)

FROM generate_series(1,10000000) s(i);

ANALYZE outer_table;

ANALYZE inner_table;

-- force hash join with a single batch

set work_mem = '1GB';

set enable_sort = off;

set enable_indexscan = off;

set enable_bitmapscan = off;

set enable_mergejoin = off;

set enable_nestloop = off;

-- query with various values in the WHERE condition, determining

-- the size of the hash table (single batch, thanks to work_mem)

SELECT COUNT(i.val_txt)

FROM outer_table o LEFT JOIN inner_table i ON (i.id = o.id)

WHERE val_int < 1000;

which leads to plans like this: http://explain.depesz.com/s/i7W

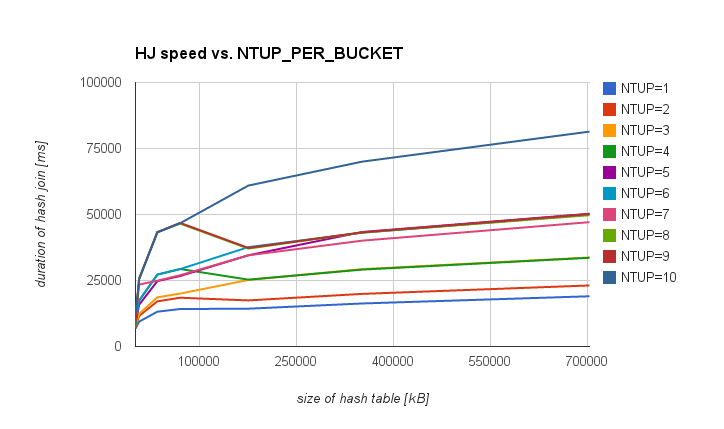

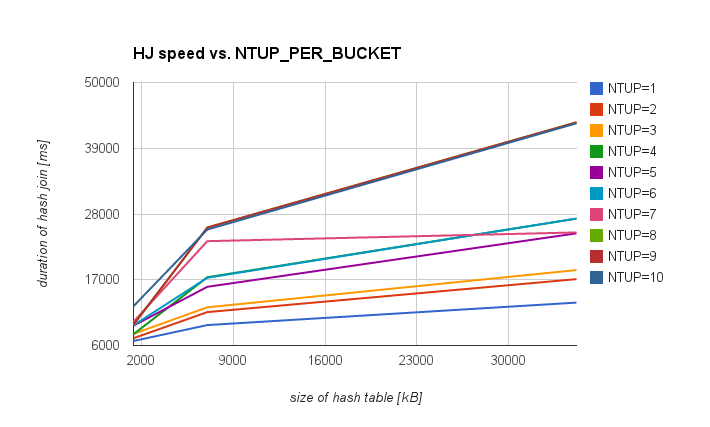

I've repeated this with with NTUP_PER_BUCKET between 1 and 10, and

various values in the WHERE condition, and I got this table,

illustrating impact of various NTUP_PER_BUCKET values for various

hashtable sizes:

kB NTUP=1 NTUP=2 NTUP=4 NTUP=5 NTUP=9 NTUP=10

1407 6753 7218 7890 9324 9488 12574

7032 9417 11586 17417 15820 25732 25402

35157 13179 17101 27225 24763 43323 43175

70313 14203 18475 29354 26690 46840 46676

175782 14319 17458 25325 34542 37342 60949

351563 16297 19928 29124 43364 43232 70014

703125 19027 23125 33610 50283 50165 81398

(I've skipped columns for NTUP values that are almost exactly the same

as the adjacent columns due to the fact bucket count grows by doubling).

If you prefer charts to tables, attached are two showing the same data

(complete and "small hashtables"). Also, attached is a CSV with the

results, tracking number of buckets and durations for each hashtable

size / NTUP_PER_BUCKET combination.

I'm aware that the numbers and exact behaviour depends on the hardware

(e.g. L1/L2 cache, RAM etc.), and the query used to get these values is

somewhat simple (at least compared to some real-world values). But I

believe the general behaviour won't vary too much.

The first observation is that for large hashtables the difference

between NTUP=1 and NTUP=10 is much larger than for small ones. Not

really surprising - probably due to better hit ratios for CPU caches.

For the large hashtable the difference is significant, easily beating

price for the rebuild of the hashtable (increasing the nbuckets).

Therefore I'd like to propose dynamic increase of bucket count. The

patch mentioned at the beginning does pretty much exactly this for plans

with significantly underestimated cardinality of the hashtable (thus

causing inappropriately low bucket count and poor performance of the

join). However it allows dynamic approach to NTUP_PER_BUCKET too, so I

propose a change along these lines:

1) consider lowering the NTUP_PER_BUCKET (see measurements below)

2) do the initial nbucket/nbatch estimation as today (with whatever

NTUP_PER_BUCKET value we end up using) and build the hashtable

3) while building the hashtable, include space for buckets into

spaceUsed while deciding whether to increase number of batches

(the fact it's not already included seems wrong), but instead of

using initial bucket count, do this:

if (hashtable->spaceUsed + BUCKET_SPACE(hashtable)

> hashtable->spaceAllowed)

ExecHashIncreaseNumBatches(hashtable);

where BUCKET_SPACE is 'how many buckets would be appropriate, given

current tuples per batch'.

4) consider what is the "appropriate" number of buckets and rebuild

the table if appropriate

This is where the patch fixes the underestimated nbuckets value, but

what we can do is look at how much free space remains in work_mem

and use as many buckets as fit into work_mem (capped with tuples

per batch, of course) if there's free space. Even if the initial

cardinality estimate was correct (so we're below NTUP_PER_BUCKET)

this may be a significant speedup.

Say for example we have 10M tuples in the table - with the default

NTUP_PER_BUCKET this means ~1M buckets. But there's significant

performance difference between hashtable with 1 or 10 tuples per

bucket (see below), and if we have 80MB in work_mem (instead of the

default 8MB) this may be a huge gain.

Any comments / objections welcome.

regards

Tomas

| Attachment | Content-Type | Size |

|---|---|---|

|

image/png | 27.5 KB |

|

image/png | 27.5 KB |

| hashtable.csv | text/csv | 1.2 KB |

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 18:03:08 |

| Message-ID: | 53B59ADC.5060709@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 3.7.2014 02:13, Tomas Vondra wrote:

> Hi,

>

> while hacking on the 'dynamic nbucket' patch, scheduled for the next CF

> (https://commitfest.postgresql.org/action/patch_view?id=1494) I was

> repeatedly stumbling over NTUP_PER_BUCKET. I'd like to propose a change

> in how we handle it.

>

>

> TL;DR; version

> --------------

>

> I propose dynamic increase of the nbuckets (up to NTUP_PER_BUCKET=1)

> once the table is built and there's free space in work_mem. The patch

> mentioned above makes implementing this possible / rather simple.

Attached is v1 of this experimental patch. It's supposed to be applied

on top of v7 of this patch

http://www.postgresql.org/message-id/53B59498.3000800@fuzzy.cz

as it shared most of the implementation. So apply it like this:

patch -p1 < hashjoin-nbuckets-growth-v7.patch

patch -p1 < hashjoin-dynamic-ntup-v1.patch

It implements the ideas outlined in the previous message, most importantly:

(a) decreases NTUP_PER_BUCKET to 4

(b) checks free work_mem when deciding whether to add batch

(c) after building the batches, increases the number of buckets as

much as possible, i.e. up to the number of batch tuples, but not

exceeding work_mem

The improvements for the queries I tried so far are quite significant

(partially due to the NTUP_PER_BUCKET decrease, partially due to the

additional bucket count increase).

The rebuild is quite fast - the patch measures and reports this as a

WARNING, and the timings I've seen are ~12ms per 7MB (i.e. ~120ms for

70MB and ~1200ms for 700MB). Of course, this only makes sense when

compared to how much time it saved, but for the queries I tested so far

this was a good investment.

However it's likely there are queries where this may not be the case,

i.e. where rebuilding the hash table is not worth it. Let me know if you

can construct such query (I wasn't).

regards

Tomas

| Attachment | Content-Type | Size |

|---|---|---|

| hashjoin-dynamic-ntup-v1.patch | text/x-diff | 5.9 KB |

| hashjoin-nbuckets-growth-v7.patch | text/x-diff | 14.3 KB |

| From: | Stephen Frost <sfrost(at)snowman(dot)net> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 18:10:13 |

| Message-ID: | 20140703181013.GS16422@tamriel.snowman.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Tomas,

* Tomas Vondra (tv(at)fuzzy(dot)cz) wrote:

> However it's likely there are queries where this may not be the case,

> i.e. where rebuilding the hash table is not worth it. Let me know if you

> can construct such query (I wasn't).

Thanks for working on this! I've been thinking on this for a while and

this seems like it may be a good approach. Have you considered a bloom

filter over the buckets..? Also, I'd suggest you check the archives

from about this time last year for test cases that I was using which

showed cases where hashing the larger table was a better choice- those

same cases may also show regression here (or at least would be something

good to test).

Have you tried to work out what a 'worst case' regression for this

change would look like? Also, how does the planning around this change?

Are we more likely now to hash the smaller table (I'd guess 'yes' just

based on the reduction in NTUP_PER_BUCKET, but did you make any changes

due to the rehashing cost?)?

Thanks,

Stephen

| From: | Atri Sharma <atri(dot)jiit(at)gmail(dot)com> |

|---|---|

| To: | Stephen Frost <sfrost(at)snowman(dot)net> |

| Cc: | Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 18:40:26 |

| Message-ID: | CAOeZVic7oqWJUAwnQyZwR8WhjAmMK4jKTad7rhk=jSN-qEQfTw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Jul 3, 2014 at 11:40 PM, Stephen Frost <sfrost(at)snowman(dot)net> wrote:

> Tomas,

>

> * Tomas Vondra (tv(at)fuzzy(dot)cz) wrote:

> > However it's likely there are queries where this may not be the case,

> > i.e. where rebuilding the hash table is not worth it. Let me know if you

> > can construct such query (I wasn't).

>

> Thanks for working on this! I've been thinking on this for a while and

> this seems like it may be a good approach. Have you considered a bloom

> filter?

>

IIRC, last time when we tried doing bloom filters, I was short of some real

world useful hash functions that we could use for building the bloom filter.

If we are restarting experiments on this, I would be glad to assist.

Regards,

Atri

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 18:50:34 |

| Message-ID: | 53B5A5FA.4050705@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi Stephen,

On 3.7.2014 20:10, Stephen Frost wrote:

> Tomas,

>

> * Tomas Vondra (tv(at)fuzzy(dot)cz) wrote:

>> However it's likely there are queries where this may not be the case,

>> i.e. where rebuilding the hash table is not worth it. Let me know if you

>> can construct such query (I wasn't).

>

> Thanks for working on this! I've been thinking on this for a while

> and this seems like it may be a good approach. Have you considered a

> bloom filter over the buckets..? Also, I'd suggest you check the

I know you've experimented with it, but I haven't looked into that yet.

> archives from about this time last year for test cases that I was

> using which showed cases where hashing the larger table was a better

> choice- those same cases may also show regression here (or at least

> would be something good to test).

Good idea, I'll look at the test cases - thanks.

> Have you tried to work out what a 'worst case' regression for this

> change would look like? Also, how does the planning around this

> change? Are we more likely now to hash the smaller table (I'd guess

> 'yes' just based on the reduction in NTUP_PER_BUCKET, but did you

> make any changes due to the rehashing cost?)?

The case I was thinking about is underestimated cardinality of the inner

table and a small outer table. That'd lead to a large hash table and

very few lookups (thus making the rehash inefficient). I.e. something

like this:

Hash Join

Seq Scan on small_table (rows=100) (actual rows=100)

Hash

Seq Scan on bad_estimate (rows=100) (actual rows=1000000000)

Filter: ((a < 100) AND (b < 100))

But I wasn't able to reproduce this reasonably, because in practice

that'd lead to a nested loop or something like that (which is a planning

issue, impossible to fix in hashjoin code).

Tomas

| From: | Greg Stark <stark(at)mit(dot)edu> |

|---|---|

| To: | Atri Sharma <atri(dot)jiit(at)gmail(dot)com> |

| Cc: | Stephen Frost <sfrost(at)snowman(dot)net>, Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 18:51:40 |

| Message-ID: | CAM-w4HPt83UWMmZQG3N+DchW97N5_FEYO-QrJ4zw38_-taTr1A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Jul 3, 2014 at 11:40 AM, Atri Sharma <atri(dot)jiit(at)gmail(dot)com> wrote:

> IIRC, last time when we tried doing bloom filters, I was short of some real

> world useful hash functions that we could use for building the bloom filter.

Last time was we wanted to use bloom filters in hash joins to filter

out tuples that won't match any of the future hash batches to reduce

the amount of tuples that need to be spilled to disk. However the

problem was that it was unclear for a given amount of memory usage how

to pick the right size bloom filter and how to model how much it would

save versus how much it would cost in reduced hash table size.

I think it just required some good empirical tests and hash join heavy

workloads to come up with some reasonable guesses. We don't need a

perfect model just some reasonable bloom filter size that we're pretty

sure will usually help more than it hurts.

--

greg

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-03 21:45:23 |

| Message-ID: | 53B5CEF3.1010305@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 3.7.2014 20:50, Tomas Vondra wrote:

> Hi Stephen,

>

> On 3.7.2014 20:10, Stephen Frost wrote:

>> Tomas,

>>

>> * Tomas Vondra (tv(at)fuzzy(dot)cz) wrote:

>>> However it's likely there are queries where this may not be the case,

>>> i.e. where rebuilding the hash table is not worth it. Let me know if you

>>> can construct such query (I wasn't).

>>

>> Thanks for working on this! I've been thinking on this for a while

>> and this seems like it may be a good approach. Have you considered a

>> bloom filter over the buckets..? Also, I'd suggest you check the

>> archives from about this time last year for test cases that I was

>> using which showed cases where hashing the larger table was a better

>> choice- those same cases may also show regression here (or at least

>> would be something good to test).

>

> Good idea, I'll look at the test cases - thanks.

I can't find the thread / test cases in the archives. I've found this

thread in hackers:

Can you point me to the right one, please?

regards

Tomas

| From: | Stephen Frost <sfrost(at)snowman(dot)net> |

|---|---|

| To: | Greg Stark <stark(at)mit(dot)edu> |

| Cc: | Atri Sharma <atri(dot)jiit(at)gmail(dot)com>, Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-06 04:47:47 |

| Message-ID: | 20140706044747.GU16422@tamriel.snowman.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

* Greg Stark (stark(at)mit(dot)edu) wrote:

> On Thu, Jul 3, 2014 at 11:40 AM, Atri Sharma <atri(dot)jiit(at)gmail(dot)com> wrote:

> > IIRC, last time when we tried doing bloom filters, I was short of some real

> > world useful hash functions that we could use for building the bloom filter.

>

> Last time was we wanted to use bloom filters in hash joins to filter

> out tuples that won't match any of the future hash batches to reduce

> the amount of tuples that need to be spilled to disk. However the

> problem was that it was unclear for a given amount of memory usage how

> to pick the right size bloom filter and how to model how much it would

> save versus how much it would cost in reduced hash table size.

Right. There's only one hash function available, really, (we don't

currently support multiple hash functions..), unless we want to try and

re-hash the 32bit hash value that we get back (not against trying that,

but it isn't what I'd start with) and it would hopefully be sufficient

for this.

> I think it just required some good empirical tests and hash join heavy

> workloads to come up with some reasonable guesses. We don't need a

> perfect model just some reasonable bloom filter size that we're pretty

> sure will usually help more than it hurts.

This would help out a lot of things, really.. Perhaps what Tomas is

developing regarding test cases would help here also.

Thanks,

Stephen

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-06 14:16:36 |

| Message-ID: | 53B95A44.7010402@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6.7.2014 06:47, Stephen Frost wrote:

> * Greg Stark (stark(at)mit(dot)edu) wrote:

>> Last time was we wanted to use bloom filters in hash joins to

>> filter out tuples that won't match any of the future hash batches

>> to reduce the amount of tuples that need to be spilled to disk.

>> However the problem was that it was unclear for a given amount of

>> memory usage how to pick the right size bloom filter and how to

>> model how much it would save versus how much it would cost in

>> reduced hash table size.

>

> Right. There's only one hash function available, really, (we don't

> currently support multiple hash functions..), unless we want to try

> and re-hash the 32bit hash value that we get back (not against trying

> that, but it isn't what I'd start with) and it would hopefully be

> sufficient for this.

I don't really see a need to add more hashing functions. Use the hash

value itself as an input to the bloom filter seems just fine to me.

According to [1] the expected number of collisions is

n - k + k * math.pow((1 - 1/k), n)

where 'k' is the number of possible values (2^32 in our case), and 'n'

is the number of inserts (i.e. values inserted into the table). With a

1GB work_mem and ~50B per row, that's ~20M rows. According to the

formula, that's ~50k collisions. In other words, 0.25% of false

positives. This seems more than sufficient for the bloom filter, and if

0.25% of false positives causes it to be inefficient I'd say the gains

are not that great anyway. (Unless my calculations are somehow flawed,

of course).

[1] https://www.cs.duke.edu/courses/cps102/spring10/Lectures/L-18.pdf

>> I think it just required some good empirical tests and hash join

>> heavy workloads to come up with some reasonable guesses. We don't

>> need a perfect model just some reasonable bloom filter size that

>> we're pretty sure will usually help more than it hurts.

>

> This would help out a lot of things, really.. Perhaps what Tomas is

> developing regarding test cases would help here also.

The test cases might be reused I guess. However I still don't have a

clear idea of how exactly the bloom filter would be implemented. In the

threads I found it was suggested to use per-bucket filtersm, which seems

really strange to me. I see two options:

(a) global filter

- bloom filter sized according to estimates from planner

- ... so we're screwed if the estimates are significantly wrong

- create the filter in ExecHashTableCreate

- insert all tuples in ExecHashTableInsert (all batches)

(b) per-batch filter

- may be built after the batching is completed

- we have reliable numbers at this point (not just estimates)

- per-batch filters may be smaller (only fraction of tuples)

So the per-batch filter seems to be a better approach. The question is

the sizing of the bloom filter. According to [2] there are three rules

of thumb for sizing bloom filters:

(1) 1B per item, to get 2% error rate

(2) 0.7 * bits of hash functions (~5 when using 1B / item)

(3) number of hashes dominates the performance (more to compute,

more memory accesses)

When working with 20M rows, this means ~20MB and 5 hash functions. Not

that bad, although we don't know how expensive it's to build the filter.

[2] http://corte.si/posts/code/bloom-filter-rules-of-thumb/index.html

However this is somewhat flawed because this assumes the 20M hashed

values are distinct, so if the inner table contains duplicate keys, we

might use smaller bloom filter. But we don't know that value (ndistinct

estimates are rather unreliable, but we might use hyperloglog counter to

do this).

Also, there are cases when we know there will be no misses (e.g. a join

on a FK), and in that case it's pointless to build the bloom filter.

Would be nice to get some flag from the planner whether to build it, a

threshold when to build it (e.g. if rowcount is > 10k, build the filter).

Doing something similar for the two patches I posted (tweaking the

nbuckets dynamically) might also benefit from such planning information,

although it's not that critical for them.

regards

Tomas

| From: | Stephen Frost <sfrost(at)snowman(dot)net> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-06 15:57:53 |

| Message-ID: | 20140706155753.GX16422@tamriel.snowman.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Tomas,

* Tomas Vondra (tv(at)fuzzy(dot)cz) wrote:

> I can't find the thread / test cases in the archives. I've found this

> thread in hackers:

>

> http://www.postgresql.org/message-id/CAOeZVif-R-iLF966wEipk5By-KhzVLOqpWqurpaK3p5fYw-Rdw@mail.gmail.com

>

> Can you point me to the right one, please?

This:

http://www.postgresql.org/message-id/20130328235627.GV4361@tamriel.snowman.net

and I think there may have been other test cases on that thread

somewhere... I certainly recall having at least a couple of them that I

was playing with.

I can probably find them around here somewhere too, if necessary.

Thanks,

Stephen

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-06 16:44:35 |

| Message-ID: | 53B97CF3.6010906@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6.7.2014 17:57, Stephen Frost wrote:

> Tomas,

>

> * Tomas Vondra (tv(at)fuzzy(dot)cz) wrote:

>> I can't find the thread / test cases in the archives. I've found this

>> thread in hackers:

>>

>> http://www.postgresql.org/message-id/CAOeZVif-R-iLF966wEipk5By-KhzVLOqpWqurpaK3p5fYw-Rdw@mail.gmail.com

>>

>> Can you point me to the right one, please?

>

> This:

>

> http://www.postgresql.org/message-id/20130328235627.GV4361@tamriel.snowman.net

Sadly, the link to the SQL does not work :-(

http://snowman.net/~sfrost/test_case.sql => 404

> and I think there may have been other test cases on that thread

> somewhere... I certainly recall having at least a couple of them that I

> was playing with.

>

> I can probably find them around here somewhere too, if necessary.

If you could look for them, that'd be great.

regards

Tomas

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 12:49:50 |

| Message-ID: | CA+TgmobMzPsA-N04=yX2jtMHeb3tGYw5HoZOE+Aq8PTHLoHYLw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jul 2, 2014 at 8:13 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> I propose dynamic increase of the nbuckets (up to NTUP_PER_BUCKET=1)

> once the table is built and there's free space in work_mem. The patch

> mentioned above makes implementing this possible / rather simple.

Another idea would be to start with NTUP_PER_BUCKET=1 and then, if we

run out of memory, increase NTUP_PER_BUCKET. I'd like to think that

the common case is that work_mem will be large enough that everything

fits; and if you do it that way, then you save yourself the trouble of

rehashing later, which as you point out might lose if there are only a

few probes. If it turns out that you run short of memory, you can

merge pairs of buckets up to three times, effectively doubling

NTUP_PER_BUCKET each time.

Yet another idea is to stick with your scheme, but do the dynamic

bucket splits lazily. Set a flag on each bucket indicating whether or

not it needs a lazy split. When someone actually probes the hash

table, if the flag is set for a particular bucket, move any tuples

that don't belong as we scan the bucket. If we reach the end of the

bucket chain, clear the flag.

Not sure either of these are better (though I kind of like the first

one) but I thought I'd throw them out there...

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | "Tomas Vondra" <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | "Robert Haas" <robertmhaas(at)gmail(dot)com> |

| Cc: | "Tomas Vondra" <tv(at)fuzzy(dot)cz>, "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 13:35:12 |

| Message-ID: | fd0c13e59dbba297472ffd8bbe20dcd1.squirrel@sq.gransy.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 8 Červenec 2014, 14:49, Robert Haas wrote:

> On Wed, Jul 2, 2014 at 8:13 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> I propose dynamic increase of the nbuckets (up to NTUP_PER_BUCKET=1)

>> once the table is built and there's free space in work_mem. The patch

>> mentioned above makes implementing this possible / rather simple.

>

> Another idea would be to start with NTUP_PER_BUCKET=1 and then, if we

> run out of memory, increase NTUP_PER_BUCKET. I'd like to think that

> the common case is that work_mem will be large enough that everything

> fits; and if you do it that way, then you save yourself the trouble of

> rehashing later, which as you point out might lose if there are only a

> few probes. If it turns out that you run short of memory, you can

> merge pairs of buckets up to three times, effectively doubling

> NTUP_PER_BUCKET each time.

Maybe. I'm not against setting NTUP_PER_BUCKET=1, but with large outer

relations it may be way cheaper to use higher NTUP_PER_BUCKET values

instead of increasing the number of batches (resulting in repeated scans

of the outer table). I think it's important (but difficult) to keep these

things somehow balanced.

With large work_mem values the amount of memory for buckets may be quite

significant. E.g. 800MB work_mem may easily give ~120MB of memory taken by

buckets with NTUP_PER_BUCKET=1. With NTUP_PER_BUCKET=4 it's just ~30MB.

> Yet another idea is to stick with your scheme, but do the dynamic

> bucket splits lazily. Set a flag on each bucket indicating whether or

> not it needs a lazy split. When someone actually probes the hash

> table, if the flag is set for a particular bucket, move any tuples

> that don't belong as we scan the bucket. If we reach the end of the

> bucket chain, clear the flag.

I don't think a lazy scheme makes much sense here. There are two clearly

separated phases - loading the table and search.

Also, it seems to me it can't work as described. Say we build a table and

then find out we need a table 4x the size. If I understand your proposal

correctly, you'd just resize buckets array, copy the existing buckets

(first 1/4 buckets) and set 'needs_split' flags. Correct?

Well, the problem is that while scanning a bucket you already need to know

which tuples belong there. But with the lazy approach, the tuples might

still be in the old (not yet split) buckets. So you'd have to search the

current bucket and all buckets that were not split yet. Or did I miss

something?

Tomas

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 14:16:18 |

| Message-ID: | CA+TgmoZjpGaqT11o1+tSa6yKoMpn4GkGfnEVKjbeiej1E_FTsA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jul 8, 2014 at 9:35 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> On 8 Červenec 2014, 14:49, Robert Haas wrote:

>> On Wed, Jul 2, 2014 at 8:13 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>>> I propose dynamic increase of the nbuckets (up to NTUP_PER_BUCKET=1)

>>> once the table is built and there's free space in work_mem. The patch

>>> mentioned above makes implementing this possible / rather simple.

>>

>> Another idea would be to start with NTUP_PER_BUCKET=1 and then, if we

>> run out of memory, increase NTUP_PER_BUCKET. I'd like to think that

>> the common case is that work_mem will be large enough that everything

>> fits; and if you do it that way, then you save yourself the trouble of

>> rehashing later, which as you point out might lose if there are only a

>> few probes. If it turns out that you run short of memory, you can

>> merge pairs of buckets up to three times, effectively doubling

>> NTUP_PER_BUCKET each time.

>

> Maybe. I'm not against setting NTUP_PER_BUCKET=1, but with large outer

> relations it may be way cheaper to use higher NTUP_PER_BUCKET values

> instead of increasing the number of batches (resulting in repeated scans

> of the outer table). I think it's important (but difficult) to keep these

> things somehow balanced.

Right, I think that's clear. I'm just pointing out that you get to

decide: you can either start with a larger NTUP_PER_BUCKET and then

reduce it if you enough memory left, or you can start with a smaller

NTUP_PER_BUCKET and then increase it if you run short of memory.

>> Yet another idea is to stick with your scheme, but do the dynamic

>> bucket splits lazily. Set a flag on each bucket indicating whether or

>> not it needs a lazy split. When someone actually probes the hash

>> table, if the flag is set for a particular bucket, move any tuples

>> that don't belong as we scan the bucket. If we reach the end of the

>> bucket chain, clear the flag.

>

> I don't think a lazy scheme makes much sense here. There are two clearly

> separated phases - loading the table and search.

>

> Also, it seems to me it can't work as described. Say we build a table and

> then find out we need a table 4x the size. If I understand your proposal

> correctly, you'd just resize buckets array, copy the existing buckets

> (first 1/4 buckets) and set 'needs_split' flags. Correct?

>

> Well, the problem is that while scanning a bucket you already need to know

> which tuples belong there. But with the lazy approach, the tuples might

> still be in the old (not yet split) buckets. So you'd have to search the

> current bucket and all buckets that were not split yet. Or did I miss

> something?

If you have, say, bucket 0..63 and you decide to change it to buckets

0..255, then any tuple that isn't in bucket N has to be in bucket N %

64. More generally, any time you expand or contract by a power of two

it's pretty easy to figure out where tuples have to go.

But even if you do something that's not a power of two, like say a 10x

compaction of the buckets, there's still only two buckets that can

contain any particular hash value. If the hash value is H, the old

number of buckets is O, and the new number of buckets is N, then the

tuple has got to be in bucket H % O or bucket H % N. If bucket H % O

doesn't have the needs-split flag set, then it must be in H % N (if it

exists at all).

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | "Tomas Vondra" <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | "Robert Haas" <robertmhaas(at)gmail(dot)com> |

| Cc: | "Tomas Vondra" <tv(at)fuzzy(dot)cz>, "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 16:06:22 |

| Message-ID: | bac6aa883f79114477b1bd665efd7742.squirrel@sq.gransy.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 8 Červenec 2014, 16:16, Robert Haas wrote:

> On Tue, Jul 8, 2014 at 9:35 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> Maybe. I'm not against setting NTUP_PER_BUCKET=1, but with large outer

>> relations it may be way cheaper to use higher NTUP_PER_BUCKET values

>> instead of increasing the number of batches (resulting in repeated scans

>> of the outer table). I think it's important (but difficult) to keep

>> these

>> things somehow balanced.

>

> Right, I think that's clear. I'm just pointing out that you get to

> decide: you can either start with a larger NTUP_PER_BUCKET and then

> reduce it if you enough memory left, or you can start with a smaller

> NTUP_PER_BUCKET and then increase it if you run short of memory.

I don't think those two approaches are equal.

With the approach I took, I can use a compromise value (NTUP=4) initially,

and only resize the hash table once at the end (while keeping the amount

of memory under work_mem all the time).

With the "NTUP=1 and increase in case of memory pressure" you have to

shrink the table immediately (to fit into work_mem), and if the hash table

gets split into multiple batches you're suddenly in a situation with lower

memory pressure and you may need to increase it again.

>>> Yet another idea is to stick with your scheme, but do the dynamic

>>> bucket splits lazily. Set a flag on each bucket indicating whether or

>>> not it needs a lazy split. When someone actually probes the hash

>>> table, if the flag is set for a particular bucket, move any tuples

>>> that don't belong as we scan the bucket. If we reach the end of the

>>> bucket chain, clear the flag.

>>

>> I don't think a lazy scheme makes much sense here. There are two clearly

>> separated phases - loading the table and search.

>>

>> Also, it seems to me it can't work as described. Say we build a table

>> and

>> then find out we need a table 4x the size. If I understand your proposal

>> correctly, you'd just resize buckets array, copy the existing buckets

>> (first 1/4 buckets) and set 'needs_split' flags. Correct?

>>

>> Well, the problem is that while scanning a bucket you already need to

>> know

>> which tuples belong there. But with the lazy approach, the tuples might

>> still be in the old (not yet split) buckets. So you'd have to search the

>> current bucket and all buckets that were not split yet. Or did I miss

>> something?

>

> If you have, say, bucket 0..63 and you decide to change it to buckets

> 0..255, then any tuple that isn't in bucket N has to be in bucket N %

> 64. More generally, any time you expand or contract by a power of two

> it's pretty easy to figure out where tuples have to go.

OK.

> But even if you do something that's not a power of two, like say a 10x

> compaction of the buckets, there's still only two buckets that can

> contain any particular hash value. If the hash value is H, the old

> number of buckets is O, and the new number of buckets is N, then the

> tuple has got to be in bucket H % O or bucket H % N. If bucket H % O

> doesn't have the needs-split flag set, then it must be in H % N (if it

> exists at all).

I wonder if this is really worth the effort - my guess is it's efficient

only if large portion of buckets is not visited (and thus does not need to

be split) at all. Not sure how common that is (our workloads certainly are

not like that).

regards

Tomas

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 17:00:22 |

| Message-ID: | CA+TgmoY-0L5iCnwC1xzrXTRqWc7VBQDtymxiCUp+-TugvLAoyg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jul 8, 2014 at 12:06 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> On 8 Červenec 2014, 16:16, Robert Haas wrote:

>> On Tue, Jul 8, 2014 at 9:35 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>>> Maybe. I'm not against setting NTUP_PER_BUCKET=1, but with large outer

>>> relations it may be way cheaper to use higher NTUP_PER_BUCKET values

>>> instead of increasing the number of batches (resulting in repeated scans

>>> of the outer table). I think it's important (but difficult) to keep

>>> these

>>> things somehow balanced.

>>

>> Right, I think that's clear. I'm just pointing out that you get to

>> decide: you can either start with a larger NTUP_PER_BUCKET and then

>> reduce it if you enough memory left, or you can start with a smaller

>> NTUP_PER_BUCKET and then increase it if you run short of memory.

>

> I don't think those two approaches are equal.

>

> With the approach I took, I can use a compromise value (NTUP=4) initially,

> and only resize the hash table once at the end (while keeping the amount

> of memory under work_mem all the time).

>

> With the "NTUP=1 and increase in case of memory pressure" you have to

> shrink the table immediately (to fit into work_mem), and if the hash table

> gets split into multiple batches you're suddenly in a situation with lower

> memory pressure and you may need to increase it again.

True. On the other hand, this really only comes into play when the

estimates are wrong. If you know at the start how many tuples you're

going to need to store and how big they are, you determine whether

NTUP_PER_BUCKET=1 is going to work before you even start building the

hash table. If it isn't, then you use fewer buckets right from the

start. If we start by estimating a small value for NTUP_PER_BUCKET

and then let it float upward if we turn out to have more tuples than

expected, we're optimizing for the case where our statistics are

right. If we start by estimating a larger value for NTUP_PER_BUCKET

than what we think we need to fit within work_mem, we're basically

guessing that our statistics are more likely to be wrong than to be

right. I think.

> I wonder if this is really worth the effort - my guess is it's efficient

> only if large portion of buckets is not visited (and thus does not need to

> be split) at all. Not sure how common that is (our workloads certainly are

> not like that).

Yeah. It may be a bad idea. I threw it out there as a possible way

of trying to mitigate the worst case, which is when you trouble to

build the hash table and then make very few probes. But that may not

be worth the complexity that this would introduce.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 18:28:41 |

| Message-ID: | 53BC3859.5080006@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 8.7.2014 19:00, Robert Haas wrote:

> On Tue, Jul 8, 2014 at 12:06 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> On 8 Červenec 2014, 16:16, Robert Haas wrote:

>>

>>> Right, I think that's clear. I'm just pointing out that you get

>>> to decide: you can either start with a larger NTUP_PER_BUCKET and

>>> then reduce it if you enough memory left, or you can start with a

>>> smaller NTUP_PER_BUCKET and then increase it if you run short of

>>> memory.

>>

>> I don't think those two approaches are equal.

>>

>> With the approach I took, I can use a compromise value (NTUP=4)

>> initially, and only resize the hash table once at the end (while

>> keeping the amount of memory under work_mem all the time).

>>

>> With the "NTUP=1 and increase in case of memory pressure" you have

>> to shrink the table immediately (to fit into work_mem), and if the

>> hash table gets split into multiple batches you're suddenly in a

>> situation with lower memory pressure and you may need to increase

>> it again.

>

> True. On the other hand, this really only comes into play when the

> estimates are wrong. If you know at the start how many tuples you're

> going to need to store and how big they are, you determine whether

> NTUP_PER_BUCKET=1 is going to work before you even start building

> the hash table. If it isn't, then you use fewer buckets right from

> the start. If we start by estimating a small value for

> NTUP_PER_BUCKET and then let it float upward if we turn out to have

> more tuples than expected, we're optimizing for the case where our

> statistics are right. If we start by estimating a larger value for

> NTUP_PER_BUCKET than what we think we need to fit within work_mem,

> we're basically guessing that our statistics are more likely to be

> wrong than to be right. I think.

Good point. The fist patch was targetted exactly at the wrongly

estimated queries. This patch attempts to apply the rehash to all plans,

and maybe there's a better way.

If the estimates are correct / not too off, we can use this information

to do the sizing 'right' at the beginning (without facing rehashes later).

Over-estimates are not a problem, because it won't make the hash table

slower (it'll be sized for more tuples) and we can't change the number

of batches anyway.

With under-estimates we have to decide whether to resize the hash or

increase the number of batches.

In both cases that matter (correct estimates and under-estimates) we

have to decide whether to increase the number of buckets or batches. I'm

not sure how to do that.

>> I wonder if this is really worth the effort - my guess is it's

>> efficient only if large portion of buckets is not visited (and

>> thus does not need to be split) at all. Not sure how common that is

>> (our workloads certainly are not like that).

>

> Yeah. It may be a bad idea. I threw it out there as a possible way of

> trying to mitigate the worst case, which is when you trouble to build

> the hash table and then make very few probes. But that may not be

> worth the complexity that this would introduce.

Let's keep it simple for now. I think the sizing question (explained

above) is more important and needs to be solved first.

regards

Tomas

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 19:53:32 |

| Message-ID: | CAMkU=1xDecxEuNL6xhSNUBqvHRm=e96F9H=hZp1UpMe5jDH-fg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jul 8, 2014 at 6:35 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> On 8 Červenec 2014, 14:49, Robert Haas wrote:

> > On Wed, Jul 2, 2014 at 8:13 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> >> I propose dynamic increase of the nbuckets (up to NTUP_PER_BUCKET=1)

> >> once the table is built and there's free space in work_mem. The patch

> >> mentioned above makes implementing this possible / rather simple.

> >

> > Another idea would be to start with NTUP_PER_BUCKET=1 and then, if we

> > run out of memory, increase NTUP_PER_BUCKET. I'd like to think that

> > the common case is that work_mem will be large enough that everything

> > fits; and if you do it that way, then you save yourself the trouble of

> > rehashing later, which as you point out might lose if there are only a

> > few probes. If it turns out that you run short of memory, you can

> > merge pairs of buckets up to three times, effectively doubling

> > NTUP_PER_BUCKET each time.

>

> Maybe. I'm not against setting NTUP_PER_BUCKET=1, but with large outer

> relations it may be way cheaper to use higher NTUP_PER_BUCKET values

> instead of increasing the number of batches (resulting in repeated scans

> of the outer table). I think it's important (but difficult) to keep these

> things somehow balanced.

>

> With large work_mem values the amount of memory for buckets may be quite

> significant. E.g. 800MB work_mem may easily give ~120MB of memory taken by

> buckets with NTUP_PER_BUCKET=1. With NTUP_PER_BUCKET=4 it's just ~30MB.

>

That extra 90MB is memory well spent, in my book. Versus having to walk a

4-deep linked list which is scattered all over main memory just to find one

entry we care about in it.

It might cause some things that were very close to the edge to tip over

into multi-pass hash joins, but even that is not necessarily a bad thing.

(When I test with work_mem in the 20 to 50 MB range, I often find

batches=2 be ~30% faster than batches=1, I think because partitioning into

main memory using sequential writes is not much of a burden, and building

and walking two hash tables that both fit in L3 cache is much faster than

building 1 hash table in main memory, and more than makes up for the work

of partitioning. Presumably that situation doesn't apply to work_mem

900MB, but isn't NUMA about the same thing as L4 cache, in effect?).

And if someone had a whole bunch of hash joins which were right in that

anti-sweet spot, all they have to do is increase work_mem by (at most) 15%

to get out of it. work_mem is basically impossible to tune, so I doubt

anyone exists who has a reasonable setting for it which can' be increased

by 15% and still be reasonable. And if someone does have it tuned so

tightly, they probably won't be upgrading to new major versions without

expecting to do some re-tuning.

Cheers,

Jeff

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 21:04:22 |

| Message-ID: | 53BC5CD6.4080502@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 8.7.2014 21:53, Jeff Janes wrote:

> On Tue, Jul 8, 2014 at 6:35 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>>

>> Maybe. I'm not against setting NTUP_PER_BUCKET=1, but with large

>> outer relations it may be way cheaper to use higher NTUP_PER_BUCKET

>> values instead of increasing the number of batches (resulting in

>> repeated scans of the outer table). I think it's important (but

>> difficult) to keep these things somehow balanced.

>>

>> With large work_mem values the amount of memory for buckets may be

>> quite significant. E.g. 800MB work_mem may easily give ~120MB of

>> memory taken by buckets with NTUP_PER_BUCKET=1. With

>> NTUP_PER_BUCKET=4 it's just ~30MB.

>

>

> That extra 90MB is memory well spent, in my book. Versus having to

> walk a 4-deep linked list which is scattered all over main memory

> just to find one entry we care about in it.

Yes, I share this view, although it really depends on how expensive it's

to rescan the outer relation (due to more batches) vs. lookup in a

"deeper" hash table.

The other thing is that this memory is currently unaccounted for, i.e.

the space for buckets is not counted within the work_mem limit (unless

I'm missing something in the code). I'm not sure why, and I believe it

should be, so I changer this in the patch.

> It might cause some things that were very close to the edge to tip

> over into multi-pass hash joins, but even that is not necessarily a

> bad thing. (When I test with work_mem in the 20 to 50 MB range, I

> often find batches=2 be ~30% faster than batches=1, I think because

> partitioning into main memory using sequential writes is not much of

> a burden, and building and walking two hash tables that both fit in

> L3 cache is much faster than building 1 hash table in main memory,

> and more than makes up for the work of partitioning. Presumably that

> situation doesn't apply to work_mem 900MB, but isn't NUMA about the

> same thing as L4 cache, in effect?).

Yes, I've seen cases where plans with (nbatch>1) were faster than a plan

with (nbatch=1). I'm not entirely sure why :-( but I have two hypotheses

so far:

(a) Caching within CPU (current CPUs have multiple MBs of L2 cache),

which may make a difference, especially in the size range you've

mentioned.

(b) Lower tuple/bucket ratio - this may easily happen for example if

the estimates are slighly lower than reality (either row count or

row width) and narrowly exceed work_mem, thus forcing batching.

The resulting hash table has ~50% tuple/bucket on average, and

thus is faster.

> And if someone had a whole bunch of hash joins which were right in

> that anti-sweet spot, all they have to do is increase work_mem by (at

> most) 15% to get out of it. work_mem is basically impossible to tune,

> so I doubt anyone exists who has a reasonable setting for it which

> can' be increased by 15% and still be reasonable. And if someone does

> have it tuned so tightly, they probably won't be upgrading to new

> major versions without expecting to do some re-tuning.

Right. Still, it's not really nice to get slower hash joins after

upgrading to a new version - I somehow expect to get the same or better

performance, if possible. So I'd like to make it as efficient as

possible, within the given memory limit.

Sadly, the increase may be needed anyway because of counting the bucket

memory into work_mem, as mentioned above. If committed, this should be

probably mentioned in release notes or something.

regards

Tomas

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-08 21:16:50 |

| Message-ID: | 53BC5FC2.6020806@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

Thinking about this a bit more, do we really need to build the hash

table on the first pass? Why not to do this:

(1) batching

- read the tuples, stuff them into a simple list

- don't build the hash table yet

(2) building the hash table

- we have all the tuples in a simple list, batching is done

- we know exact row count, can size the table properly

- build the table

Also, maybe we could use a regular linear hash table [1], instead of

using the current implementation with NTUP_PER_BUCKET=1. (Although,

that'd be absolutely awful with duplicates.)

regards

Tomas

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-09 14:07:45 |

| Message-ID: | CA+TgmoZRWuAxAKzodDEjRUJyrcFVGhuE9_LAvexzx8ffWrUJwA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jul 8, 2014 at 5:16 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> Thinking about this a bit more, do we really need to build the hash

> table on the first pass? Why not to do this:

>

> (1) batching

> - read the tuples, stuff them into a simple list

> - don't build the hash table yet

>

> (2) building the hash table

> - we have all the tuples in a simple list, batching is done

> - we know exact row count, can size the table properly

> - build the table

We could do this, and in fact we could save quite a bit of memory if

we allocated say 1MB chunks and packed the tuples in tightly instead

of palloc-ing each one separately. But I worry that rescanning the

data to build the hash table would slow things down too much.

> Also, maybe we could use a regular linear hash table [1], instead of

> using the current implementation with NTUP_PER_BUCKET=1. (Although,

> that'd be absolutely awful with duplicates.)

Linear probing is pretty awful unless your load factor is << 1. You'd

probably want NTUP_PER_BUCKET=0.25, or something like that, which

would eat up a lot of memory.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-09 17:54:35 |

| Message-ID: | 53BD81DB.2070504@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 9.7.2014 16:07, Robert Haas wrote:

> On Tue, Jul 8, 2014 at 5:16 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> Thinking about this a bit more, do we really need to build the

>> hash table on the first pass? Why not to do this:

>>

>> (1) batching - read the tuples, stuff them into a simple list -

>> don't build the hash table yet

>>

>> (2) building the hash table - we have all the tuples in a simple

>> list, batching is done - we know exact row count, can size the

>> table properly - build the table

>

> We could do this, and in fact we could save quite a bit of memory if

> we allocated say 1MB chunks and packed the tuples in tightly instead

> of palloc-ing each one separately. But I worry that rescanning the

> data to build the hash table would slow things down too much.

OK. I think we should shoot for no negative impact on well estimated

plans, and the rescans might violate that. I need to think about this

for some time, but my current plan is this:

(1) size the buckets for NTUP_PER_BUCKET=1 (and use whatever number

of batches this requires)

(2) build the batches as in the current patch

(3) if the hash table is too small, resize it

Which is not really different from the current patch.

>> Also, maybe we could use a regular linear hash table [1], instead

>> of using the current implementation with NTUP_PER_BUCKET=1.

>> (Although, that'd be absolutely awful with duplicates.)

>

> Linear probing is pretty awful unless your load factor is << 1.

> You'd probably want NTUP_PER_BUCKET=0.25, or something like that,

> which would eat up a lot of memory.

My experience is that 0.5-0.75 load factor is quite OK, and

NTUP_PER_BUCKET=1 gives you ~0.75 load on average, so it's not that

different. Anyway, the inability to handle duplicies reasonably is

probably a show-stopper.

Tomas

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-10 19:33:50 |

| Message-ID: | 53BEEA9E.2080009@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 9.7.2014 16:07, Robert Haas wrote:

> On Tue, Jul 8, 2014 at 5:16 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> Thinking about this a bit more, do we really need to build the hash

>> table on the first pass? Why not to do this:

>>

>> (1) batching

>> - read the tuples, stuff them into a simple list

>> - don't build the hash table yet

>>

>> (2) building the hash table

>> - we have all the tuples in a simple list, batching is done

>> - we know exact row count, can size the table properly

>> - build the table

>

> We could do this, and in fact we could save quite a bit of memory if

> we allocated say 1MB chunks and packed the tuples in tightly instead

> of palloc-ing each one separately. But I worry that rescanning the

> data to build the hash table would slow things down too much.

I did a quick test of how much memory we could save by this. The

attached patch densely packs the tuples into 32kB chunks (1MB seems way

too much because of small work_mem values, but I guess this might be

tuned based on number of tuples / work_mem size ...).

Tested on query like this (see the first message in this thread how to

generate the tables):

QUERY PLAN

-----------------------------------------------------------------------

Aggregate (cost=2014697.64..2014697.65 rows=1 width=33) (actual

time=63796.270..63796.271 rows=1 loops=1)

-> Hash Left Join (cost=318458.14..1889697.60 rows=50000016

width=33) (actual time=2865.656..61778.592 rows=50000000 loops=1)

Hash Cond: (o.id = i.id)

-> Seq Scan on outer_table o (cost=0.00..721239.16

rows=50000016 width=4) (actual time=0.033..2676.234 rows=50000000 loops=1)

-> Hash (cost=193458.06..193458.06 rows=10000006 width=37)

(actual time=2855.408..2855.408 rows=10000000 loops=1)

Buckets: 1048576 Batches: 1 Memory Usage: 703125kB

-> Seq Scan on inner_table i (cost=0.00..193458.06

rows=10000006 width=37) (actual time=0.044..952.802 rows=10000000 loops=1)

Planning time: 1.139 ms

Execution time: 63889.056 ms

(9 rows)

I.e. it creates a single batch with ~700 MB of tuples. Without the

patch, top shows this:

VIRT RES SHR S %CPU %MEM COMMAND

2540356 1,356g 5936 R 100,0 17,6 postgres: EXPLAIN

and the MemoryContextStats added to MultiExecHash shows this:

HashBatchContext: 1451221040 total in 182 blocks; 2826592 free (11

chunks); 1448394448 used

So yeah, the overhead is pretty huge in this case - basicaly 100%

overhead, because the inner table row width is only ~40B. With wider

rows the overhead will be lower.

Now, with the patch it looks like this:

VIRT RES SHR S %CPU %MEM COMMAND

1835332 720200 6096 R 100,0 8,9 postgres: EXPLAIN

HashBatchContext: 729651520 total in 21980 blocks; 0 free (0 chunks);

729651520 used

So, pretty much no overhead at all. It was slightly faster too (~5%) but

I haven't done much testing so it might be measurement error.

This patch is pretty independent of the other changes discussed here

(tweaking NTUP_PER_BUCKET / nbuckets) so I'll keep it separate.

regards

Tomas

| Attachment | Content-Type | Size |

|---|---|---|

| hashjoin-alloc.patch | text/x-diff | 4.5 KB |

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-11 07:27:41 |

| Message-ID: | CA+U5nMLaNa2vWcUPeTjDKQxqLCmJTasdAtyW0mZFwybDq-GtCg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 9 July 2014 18:54, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> (1) size the buckets for NTUP_PER_BUCKET=1 (and use whatever number

> of batches this requires)

If we start off by assuming NTUP_PER_BUCKET = 1, how much memory does

it save to recalculate the hash bucket at 10 instead?

Resizing sounds like it will only be useful of we only just overflow our limit.

If we release next version with this as a hardcoded change, my

understanding is that memory usage for hash joins will leap upwards,

even if the run time of queries reduces. It sounds like we need some

kind of parameter to control this. "We made it faster" might not be

true if we run this on servers that are already experiencing high

memory pressure.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | "Tomas Vondra" <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | "Simon Riggs" <simon(at)2ndQuadrant(dot)com> |

| Cc: | "Tomas Vondra" <tv(at)fuzzy(dot)cz>, "PostgreSQL-development" <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-11 09:23:21 |

| Message-ID: | a17d6217fe0c9e459cb45cb764ad727c.squirrel@sq.gransy.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11 Červenec 2014, 9:27, Simon Riggs wrote:

> On 9 July 2014 18:54, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>

>> (1) size the buckets for NTUP_PER_BUCKET=1 (and use whatever number

>> of batches this requires)

>

> If we start off by assuming NTUP_PER_BUCKET = 1, how much memory does

> it save to recalculate the hash bucket at 10 instead?

> Resizing sounds like it will only be useful of we only just overflow our

> limit.

>

> If we release next version with this as a hardcoded change, my

> understanding is that memory usage for hash joins will leap upwards,

> even if the run time of queries reduces. It sounds like we need some

> kind of parameter to control this. "We made it faster" might not be

> true if we run this on servers that are already experiencing high

> memory pressure.

Sure. We certainly don't want to make things worse for environments with

memory pressure.

The current implementation has two issues regarding memory:

(1) It does not include buckets into used memory, i.e. it's not included

into work_mem (so we may overflow work_mem). I plan to fix this, to make

work_mem a bit more correct, as it's important for cases with

NTUP_PER_BUCKET=1.

(2) There's a significant palloc overhead, because of allocating each

tuple separately - see my message from yesterday, where I observed the

batch memory context to get 1.4GB memory for 700MB of tuple data. By

densely packing the tuples, I got down to ~700MB (i.e. pretty much no

overhead).

The palloc overhead seems to be 20B (on x86_64) per tuple, and eliminating

this it more than compensates for ~8B per tuple, required for

NTUP_PER_BUCKET=1. And fixing (1) makes it more correct / predictable.

It also improves the issue that palloc overhead is not counted into

work_mem at all (that's why I got ~1.4GB batch context with work_mem=1GB).

So in the end this should give us much lower memory usage for hash joins,

even if we switch to NTUP_PER_BUCKET=1 (although that's pretty much

independent change). Does that seem reasonable?

Regarding the tunable to control this - I certainly don't want another GUC

no one really knows how to set right. And I think it's unnecessary thanks

to the palloc overhead / work_mem accounting fix, described above.

The one thing I'm not sure about is what to do in case of reaching the

work_mem limit (which should only happen with underestimated row count /

row width) - how to decide whether to shrink the hash table or increment

the number of batches. But this is not exclusive to NTUP_PER_BUCKET=1, it

may happen with whatever NTUP_PER_BUCKET value you choose.

The current code does not support resizing at all, so it always increments

the number of batches, but I feel an "interleaved" approach might be more

appropriate (nbuckets/2, nbatches*2, nbuckets/2, nbatches*2, ...). It'd be

nice to have some cost estimates ('how expensive is a rescan' vs. 'how

expensive is a resize'), but I'm not sure how to get that.

regards

Tomas

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-11 10:38:03 |

| Message-ID: | CA+U5nM+Tz_upfK7_s=hWOaKTC42Cb725sB_1A7KyMCQpGEZkCg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11 July 2014 10:23, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> On 11 Červenec 2014, 9:27, Simon Riggs wrote:

>> On 9 July 2014 18:54, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>>

>>> (1) size the buckets for NTUP_PER_BUCKET=1 (and use whatever number

>>> of batches this requires)

>>

>> If we start off by assuming NTUP_PER_BUCKET = 1, how much memory does

>> it save to recalculate the hash bucket at 10 instead?

>> Resizing sounds like it will only be useful of we only just overflow our

>> limit.

>>

>> If we release next version with this as a hardcoded change, my

>> understanding is that memory usage for hash joins will leap upwards,

>> even if the run time of queries reduces. It sounds like we need some

>> kind of parameter to control this. "We made it faster" might not be

>> true if we run this on servers that are already experiencing high

>> memory pressure.

>

> Sure. We certainly don't want to make things worse for environments with

> memory pressure.

>

> The current implementation has two issues regarding memory:

>

> (1) It does not include buckets into used memory, i.e. it's not included

> into work_mem (so we may overflow work_mem). I plan to fix this, to make

> work_mem a bit more correct, as it's important for cases with

> NTUP_PER_BUCKET=1.

>

> (2) There's a significant palloc overhead, because of allocating each

> tuple separately - see my message from yesterday, where I observed the

> batch memory context to get 1.4GB memory for 700MB of tuple data. By

> densely packing the tuples, I got down to ~700MB (i.e. pretty much no

> overhead).

>

> The palloc overhead seems to be 20B (on x86_64) per tuple, and eliminating

> this it more than compensates for ~8B per tuple, required for

> NTUP_PER_BUCKET=1. And fixing (1) makes it more correct / predictable.

>

> It also improves the issue that palloc overhead is not counted into

> work_mem at all (that's why I got ~1.4GB batch context with work_mem=1GB).

>

> So in the end this should give us much lower memory usage for hash joins,

> even if we switch to NTUP_PER_BUCKET=1 (although that's pretty much

> independent change). Does that seem reasonable?

Yes, that alleviates my concern. Thanks.

> Regarding the tunable to control this - I certainly don't want another GUC

> no one really knows how to set right. And I think it's unnecessary thanks

> to the palloc overhead / work_mem accounting fix, described above.

Agreed, nor does anyone.

> The one thing I'm not sure about is what to do in case of reaching the

> work_mem limit (which should only happen with underestimated row count /

> row width) - how to decide whether to shrink the hash table or increment

> the number of batches. But this is not exclusive to NTUP_PER_BUCKET=1, it

> may happen with whatever NTUP_PER_BUCKET value you choose.

>

> The current code does not support resizing at all, so it always increments

> the number of batches, but I feel an "interleaved" approach might be more

> appropriate (nbuckets/2, nbatches*2, nbuckets/2, nbatches*2, ...). It'd be

> nice to have some cost estimates ('how expensive is a rescan' vs. 'how

> expensive is a resize'), but I'm not sure how to get that.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-11 17:25:36 |

| Message-ID: | 53C01E10.6030105@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 10.7.2014 21:33, Tomas Vondra wrote:

> On 9.7.2014 16:07, Robert Haas wrote:

>> On Tue, Jul 8, 2014 at 5:16 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>>> Thinking about this a bit more, do we really need to build the hash

>>> table on the first pass? Why not to do this:

>>>

>>> (1) batching

>>> - read the tuples, stuff them into a simple list

>>> - don't build the hash table yet

>>>

>>> (2) building the hash table

>>> - we have all the tuples in a simple list, batching is done

>>> - we know exact row count, can size the table properly

>>> - build the table

>>

>> We could do this, and in fact we could save quite a bit of memory if

>> we allocated say 1MB chunks and packed the tuples in tightly instead

>> of palloc-ing each one separately. But I worry that rescanning the

>> data to build the hash table would slow things down too much.

>

> I did a quick test of how much memory we could save by this. The

> attached patch densely packs the tuples into 32kB chunks (1MB seems

> way too much because of small work_mem values, but I guess this might

> be tuned based on number of tuples / work_mem size ...).

Turns out getting this working properly will quite complicated. The

patch was only a quick attempt to see how much overhead there is, and

didn't solve one important details - batching.

The problem is that when increasing the number of batches, we need to

get rid of the tuples from one of them. Which means calling pfree() on

the tuples written to a temporary file, and that's not possible with the

dense allocation.

1) copy into new chunks (dead end)

----------------------------------

The first idea I had was to just "copy" everything into new chunks and

then throw away the old ones, but this way we might end up using 150% of

work_mem on average (when the two batches are about 1/2 the data each),

and in an extreme case up to 200% of work_mem (all tuples having the

same key and thus falling into the same batch). So I don't think that's

really a good approach.

2) walking through the tuples sequentially

------------------------------------------

The other option is not to walk the tuples through buckets, but by

walking throught the chunks - we know the tuples are stored as

HashJoinTuple/MinimalTuple, so it shouldn't be that difficult. And by

walking the tuples in the order they're stored, we may just rearrange

the tuples in already allocated memory. And maybe it could be even

faster than the current code, because of the sequential access to the

memory (as opposed to the random access through buckets) and CPU caches.

So I like this approach - it's simple, clean and shouldn't add any

overhead (memory or CPU).

3) batch-aware chunks

---------------------

I also think a "batch-aware" chunks might work. Say we're starting with

nbatch=N. Instead of allocating everything in a single chunk, we'll

allocate the tuples from the chunks according to a "hypothetical batch

number" - what batch would the tuple belong to if we had (nbatch=N*2).

So we'd have two chunks (or sequences of chunks), and we'd allocate the

tuples into them.

Then if we actually need to increase the number of batches, we know we

can just free one of the chunks, because all of the tuples are from the

'wrong' batche, and redistribute the remaining tuples into the new

chunks (according to the new hypothetical batch numbers).

I'm not sure how much overhead this would be, though. I think it can be

done quite efficiently, though, and it shouldn't have any impact at all,

if we don't do any additional batching (i.e. if the initial estimates

are ok).

Any other ideas how to tackle this?

regards

Tomas

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: tweaking NTUP_PER_BUCKET |

| Date: | 2014-07-12 09:39:04 |

| Message-ID: | CA+U5nM+VyPNE629qUAvwwHTP7B_ex+Y-_LpG5Z5TemzPKWfrVg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11 July 2014 18:25, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> Turns out getting this working properly will quite complicated.

Lets keep this patch simple then. Later research can be another patch.

In terms of memory pressure, having larger joins go x4 faster has a

much more significant reducing effect on memory pressure than anything

else. So my earlier concerns seem less of a concern.

So lets just this change done and then do more later.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: tweaking NTUP_PER_BUCKET |