Re: Parallel Aggregates for string_agg and array_agg

| Lists: | pgsql-hackers |

|---|

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Parallel Aggregates for string_agg and array_agg |

| Date: | 2017-12-17 14:30:55 |

| Message-ID: | CAKJS1f9sx_6GTcvd6TMuZnNtCh0VhBzhX6FZqw17TgVFH-ga_A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

While working on partial aggregation a few years ago, I didn't really

think it was worthwhile allowing partial aggregation of string_agg and

array_agg. I soon realised that I was wrong about that and allowing

parallelisation of these aggregates still could be very useful when

many rows are filtered out during the scan.

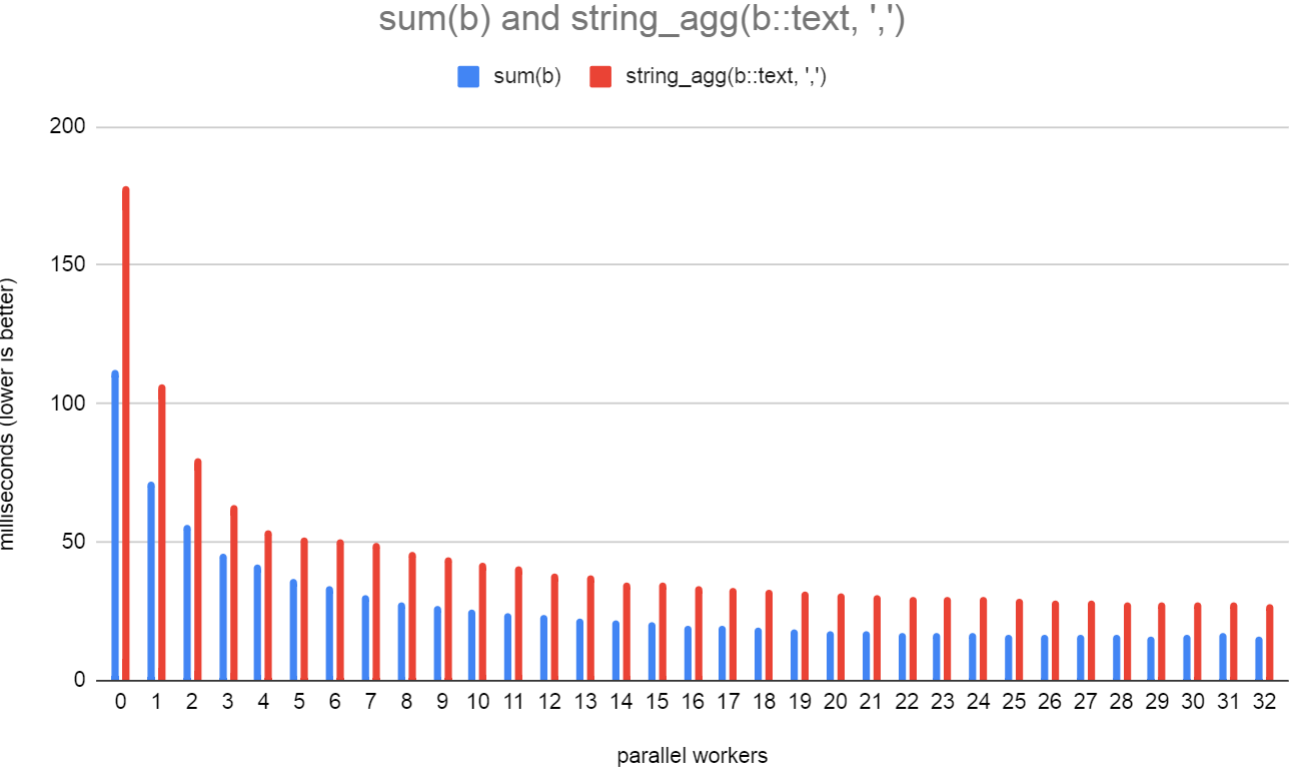

Some benchmarks that I've done locally show that parallel string_agg

and array_agg do actually perform better, despite the fact that the

aggregate state grows linearly with each aggregated item. Obviously,

the performance will get even better when workers are filtering out

rows before aggregation takes place, so it seems worthwhile doing

this. However, the main reason that I'm motivated to do this is that

there are more uses for partial aggregation other than just parallel

aggregation, and it seems a shame to disable all these features if a

single aggregate does not support partial mode.

I've attached a patch which implements all this. I've had most of it

stashed away for a while now, but I managed to get some time this

weekend to get it into a more completed state.

Things are now looking pretty good for the number of aggregates that

support partial mode.

Just a handful of aggregates now don't support partial aggregation;

postgres=# select aggfnoid from pg_aggregate where aggcombinefn=0 and

aggkind='n';

aggfnoid

------------------

xmlagg

json_agg

json_object_agg

jsonb_agg

jsonb_object_agg

(5 rows)

... and a good number do support it;

postgres=# select count(*) from pg_aggregate where aggcombinefn<>0 and

aggkind='n';

count

-------

122

(1 row)

There's probably no reason why the last 5 of those couldn't be done

either, it might just require shifting a bit more work into the final

functions, although, I'm not planning on that for this patch.

As for the patch; there's a bit of a quirk in the implementation of

string_agg. We previously always threw away the delimiter that belongs

to the first aggregated value, but we do now need to keep that around

so we can put it in between two states in the combine function. I

decided the path of least resistance to do this was just to borrow

StringInfo's cursor variable to use as a pointer to the state of the

first value and put the first delimiter before that. Both the

string_agg(text) and string_agg(bytea) already have a final function,

so we just need to skip over the bytes up until the cursor position to

get rid of the first delimiter. I could go and invent some new state

type to do the same, but I don't really see the trouble with what I've

done with StringInfo, but I'll certainly listen if someone else thinks

this is wrong.

Another thing that I might review later about this is seeing about

getting rid of some of the code duplication between

array_agg_array_combine and accumArrayResultArr.

I'm going to add this to PG11's final commitfest rather than the

January 'fest as it seems more like a final commitfest type of patch.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v1.patch | application/octet-stream | 31.4 KB |

| From: | David Steele <david(at)pgmasters(dot)net> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-01 19:33:17 |

| Message-ID: | 107aab8d-e7ed-e312-8b38-2005d0d4d9e0@pgmasters.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi David,

On 12/17/17 9:30 AM, David Rowley wrote:

>

> I'm going to add this to PG11's final commitfest rather than the

> January 'fest as it seems more like a final commitfest type of patch.

This patch applies but no longer builds:

$ make -C /home/vagrant/test/build

<...>

cd /postgres/src/include/catalog && '/usr/bin/perl' ./duplicate_oids

3419

3420

3421

3422

Looks like duplicate OIDs in pg_proc.h.

Thanks,

--

-David

david(at)pgmasters(dot)net

| From: | Andres Freund <andres(at)anarazel(dot)de> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-01 21:26:31 |

| Message-ID: | 20180301212631.kede4nyuttzxwoxq@alap3.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

On 2017-12-18 03:30:55 +1300, David Rowley wrote:

> While working on partial aggregation a few years ago, I didn't really

> think it was worthwhile allowing partial aggregation of string_agg and

> array_agg. I soon realised that I was wrong about that and allowing

> parallelisation of these aggregates still could be very useful when

> many rows are filtered out during the scan.

+1

> Some benchmarks that I've done locally show that parallel string_agg

> and array_agg do actually perform better, despite the fact that the

> aggregate state grows linearly with each aggregated item.

It also allows *other* aggregates with different space consumption to be

computed in parallel, that can be fairly huge.

> Just a handful of aggregates now don't support partial aggregation;

>

> postgres=# select aggfnoid from pg_aggregate where aggcombinefn=0 and

> aggkind='n';

> aggfnoid

> ------------------

> xmlagg

> json_agg

> json_object_agg

> jsonb_agg

> jsonb_object_agg

> (5 rows)

FWIW, I've heard numerous people yearn for json*agg

> I'm going to add this to PG11's final commitfest rather than the

> January 'fest as it seems more like a final commitfest type of patch.

Not sure I understand?

> +/*

> + * array_agg_serialize

> + * Serialize ArrayBuildState into bytea.

> + */

> +Datum

> +array_agg_serialize(PG_FUNCTION_ARGS)

> +{

> + /*

> + * dvalues -- this is very simple when the value type is byval, we can

> + * simply just send the Datums over, however, for non-byval types we must

> + * work a little harder.

> + */

> + if (state->typbyval)

> + pq_sendbytes(&buf, (char *) state->dvalues, sizeof(Datum) * state->nelems);

> + else

> + {

> + Oid typsend;

> + bool typisvarlena;

> + bytea *outputbytes;

> + int i;

> +

> + /* XXX is there anywhere to cache this to save calling each time? */

> + getTypeBinaryOutputInfo(state->element_type, &typsend, &typisvarlena);

Yes, you should be able to store this in fcinfo->flinfo->fn_extra. Or do

you see a problem doing so?

> +

> +Datum

> +array_agg_deserialize(PG_FUNCTION_ARGS)

> +{

> + /*

> + * dvalues -- this is very simple when the value type is byval, we can

> + * simply just get all the Datums at once, however, for non-byval types we

> + * must work a little harder.

> + */

> + if (result->typbyval)

> + {

> + temp = pq_getmsgbytes(&buf, sizeof(Datum) * nelems);

> + memcpy(result->dvalues, temp, sizeof(Datum) * nelems);

> + }

> + else

> + {

> + Oid typreceive;

> + Oid typioparam;

> + int i;

> +

> + getTypeBinaryInputInfo(element_type, &typreceive, &typioparam);

> +

> + for (i = 0; i < nelems; i++)

> + {

> + /* XXX? Surely this cannot be the way to do this? */

> + StringInfoData tmp;

> + tmp.cursor = 0;

> + tmp.maxlen = tmp.len = pq_getmsgint(&buf, 4);

> + tmp.data = (char *) pq_getmsgbytes(&buf, tmp.len);

> +

> + result->dvalues[i] = OidReceiveFunctionCall(typreceive, &tmp,

> + typioparam, -1);

Hm, doesn't look too bad to me? What's your concern here?

This isn't a real review, I was basically just doing a quick

scroll-through.

Greetings,

Andres Freund

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | David Steele <david(at)pgmasters(dot)net> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-01 21:27:42 |

| Message-ID: | CAKJS1f-=KY=XMf2wL6qWcVNH7n8rswBovVcq4zE2fcHz+cXs3g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Thanks for looking at the patch.

On 2 March 2018 at 08:33, David Steele <david(at)pgmasters(dot)net> wrote:

> This patch applies but no longer builds:

...

> Looks like duplicate OIDs in pg_proc.h.

Who stole my OIDs?!

Updated patch attached.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v2.patch | application/octet-stream | 31.4 KB |

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)anarazel(dot)de> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-02 00:48:00 |

| Message-ID: | CAKJS1f-T=Ryw9OVG6hUtJ4hJoBUtM3q3bZkbCko6nU9pv+vi4A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2 March 2018 at 10:26, Andres Freund <andres(at)anarazel(dot)de> wrote:

> On 2017-12-18 03:30:55 +1300, David Rowley wrote:

>> Just a handful of aggregates now don't support partial aggregation;

>>

>> postgres=# select aggfnoid from pg_aggregate where aggcombinefn=0 and

>> aggkind='n';

>> aggfnoid

>> ------------------

>> xmlagg

>> json_agg

>> json_object_agg

>> jsonb_agg

>> jsonb_object_agg

>> (5 rows)

>

> FWIW, I've heard numerous people yearn for json*agg

I guess it'll need to be PG12 for now. I'd imagine string_agg and

array_agg are more important ones to tick off for now, but I bet many

people would disagree with that.

>

>> I'm going to add this to PG11's final commitfest rather than the

>> January 'fest as it seems more like a final commitfest type of patch.

>

> Not sure I understand?

hmm, yeah. That was more of a consideration that I should be working

hard on + reviewing more complex and invasive patches before the final

'fest. I saw this more as something that would gain more interest once

patches such as partition-wise aggs gets in.

>> + /* XXX is there anywhere to cache this to save calling each time? */

>> + getTypeBinaryOutputInfo(state->element_type, &typsend, &typisvarlena);

>

> Yes, you should be able to store this in fcinfo->flinfo->fn_extra. Or do

> you see a problem doing so?

No, just didn't think of it.

>> + /* XXX? Surely this cannot be the way to do this? */

>> + StringInfoData tmp;

>> + tmp.cursor = 0;

>> + tmp.maxlen = tmp.len = pq_getmsgint(&buf, 4);

>> + tmp.data = (char *) pq_getmsgbytes(&buf, tmp.len);

>> +

>> + result->dvalues[i] = OidReceiveFunctionCall(typreceive, &tmp,

>> + typioparam, -1);

>

> Hm, doesn't look too bad to me? What's your concern here?

I was just surprised at having to fake up a StringInfoData.

> This isn't a real review, I was basically just doing a quick

> scroll-through.

Understood.

I've attached a delta patch which can be applied on top of the v2

patch which removes that XXX comment above and also implements the

fn_extra caching.

Thanks for looking!

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v3_delta.patch | application/octet-stream | 3.3 KB |

| From: | Andres Freund <andres(at)anarazel(dot)de> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-02 00:50:20 |

| Message-ID: | 20180302005020.ppmybylrjl2rowxg@alap3.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2018-03-02 13:48:00 +1300, David Rowley wrote:

> On 2 March 2018 at 10:26, Andres Freund <andres(at)anarazel(dot)de> wrote:

> > FWIW, I've heard numerous people yearn for json*agg

>

> I guess it'll need to be PG12 for now. I'd imagine string_agg and

> array_agg are more important ones to tick off for now, but I bet many

> people would disagree with that.

Oh, this was definitely not meant as an ask for something done together

with this submission.

Thanks for the quick update.

Greetings,

Andres Freund

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)anarazel(dot)de> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-03 09:47:21 |

| Message-ID: | CAKJS1f8cB3cc5AK=wPvByhU_jKkgd81iRt2kE6cru8uxx2HCuA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I've attached a rebased patch which fixes the conflicts caused by fd1a421.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v4.patch | application/octet-stream | 32.7 KB |

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-04 15:54:07 |

| Message-ID: | c302afa6-6415-27a5-336a-e1d4332fab09@2ndquadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

I've looked at the rebased patch you sent yesterday, and I do have a

couple of comments.

1) There seems to be forgotten declaration of initArrayResultInternal in

arrayfuncs.c. I suppose you've renamed it to initArrayResultWithSize and

moved it to a header file, and forgotten to remove this bit.

2) bytea_string_agg_deserialize has this comment:

/*

* data: technically we could reuse the buf.data buffer, but that is

* slightly larger than we need due to the extra 4 bytes for the cursor

*/

I find the argument "it has 4 extra bytes, so we'll allocate new chunk"

somewhat weak. We do allocate the memory in 2^n chunks anyway, so the

new chunk is likely to be much larger anyway. I'm not saying we need to

reuse the buffer, IMHO the savings will be non-measurable.

3) string_agg_transfn and bytea_string_agg_transfn say this"

/*

* We always append the delimiter text before the value text, even

* with the first aggregated item. The reason for this is that if

* this state needs to be combined with another state using the

* aggregate's combine function, then we need to have the delimiter

* for the first aggregated item. The first delimiter will be

* stripped off in the final function anyway. We use a little cheat

* here and store the position of the actual first value (after the

* delimiter) using the StringInfo's cursor variable. This relies on

* the cursor not being used for any other purpose.

*/

How does this make the code any simpler, compared to not adding the

delimiter (as before) and adding it when combining the values (at which

point you should know when it's actually needed)?

regards

--

Tomas Vondra http://www.2ndQuadrant.com

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-05 03:51:20 |

| Message-ID: | CAKJS1f9xEZdTaBbBEKY+TCKEoSZYaH1oSAtsEJHSYDJCCNnQPQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 5 March 2018 at 04:54, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

> 1) There seems to be forgotten declaration of initArrayResultInternal in

> arrayfuncs.c. I suppose you've renamed it to initArrayResultWithSize and

> moved it to a header file, and forgotten to remove this bit.

Oops. Must be left over from trying things another way. Removed.

> 2) bytea_string_agg_deserialize has this comment:

>

> /*

> * data: technically we could reuse the buf.data buffer, but that is

> * slightly larger than we need due to the extra 4 bytes for the cursor

> */

>

> I find the argument "it has 4 extra bytes, so we'll allocate new chunk"

> somewhat weak. We do allocate the memory in 2^n chunks anyway, so the

> new chunk is likely to be much larger anyway. I'm not saying we need to

> reuse the buffer, IMHO the savings will be non-measurable.

Agreed. I've removed that part of the comment.

> 3) string_agg_transfn and bytea_string_agg_transfn say this"

>

> /*

> * We always append the delimiter text before the value text, even

> * with the first aggregated item. The reason for this is that if

> * this state needs to be combined with another state using the

> * aggregate's combine function, then we need to have the delimiter

> * for the first aggregated item. The first delimiter will be

> * stripped off in the final function anyway. We use a little cheat

> * here and store the position of the actual first value (after the

> * delimiter) using the StringInfo's cursor variable. This relies on

> * the cursor not being used for any other purpose.

> */

>

> How does this make the code any simpler, compared to not adding the

> delimiter (as before) and adding it when combining the values (at which

> point you should know when it's actually needed)?

The problem is that if we don't record the first delimiter then we

won't know what it is when it comes to combining the states.

Consider the following slightly backward-looking case;

select string_agg(',', x::text) from generate_Series(1,10)x;

string_agg

----------------------

,2,3,4,5,6,7,8,9,10,

Here the delimiter is the number, not the ','. Of course, the

delimiter for the first aggregated result is not shown. The previous

implementation of the transfn for this aggregate just threw away the

first delimiter, but that's no good for parallel aggregates as the

transfn may be getting called in a parallel worker, in which case

we'll need that delimiter in the combine function to properly join to

the other partial aggregated results matching the same group.

Probably I could improve the comment a bit. I understand that having a

variable delimiter is not commonplace in the normal world. I certainly

had never considered it before working on this. I scratched my head a

bit when doing this and thought about inventing a new trans type, but

I decided that the most efficient design for an aggregate state was to

store the delimiter and data all in one buffer and have a pointer to

the start of the actual data... StringInfo has exactly what's required

if you use the cursor as the pointer, so I didn't go and reinvent the

wheel.

I've now changed the comment to read:

/*

* It's important that we store the delimiter text for all aggregated

* items, even the first one, which at first thought you might think

* could just be discarded. The reason for this is that if this

* function is being called from a parallel worker, then we'll need

* the first delimiter in order to properly combine the partially

* aggregated state with the states coming from other workers. In the

* final output, the first delimiter will be stripped off of the final

* aggregate state, but in order to know where the actual first data

* is we must store the position of the first data value somewhere.

* Conveniently, StringInfo has a cursor property which can be used

* to serve our needs here.

*/

I've also updated an incorrect Assert in array_agg_array_serialize.

Please find attached the updated patch.

Many thanks for reviewing this.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v5.patch | application/octet-stream | 32.3 KB |

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-10 23:11:57 |

| Message-ID: | 24abe018-b3c6-2117-b5b9-39e660db00bd@2ndquadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 03/05/2018 04:51 AM, David Rowley wrote:

> On 5 March 2018 at 04:54, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

>> 1) There seems to be forgotten declaration of initArrayResultInternal in

>> arrayfuncs.c. I suppose you've renamed it to initArrayResultWithSize and

>> moved it to a header file, and forgotten to remove this bit.

>

> Oops. Must be left over from trying things another way. Removed.

>

>> 2) bytea_string_agg_deserialize has this comment:

>>

>> /*

>> * data: technically we could reuse the buf.data buffer, but that is

>> * slightly larger than we need due to the extra 4 bytes for the cursor

>> */

>>

>> I find the argument "it has 4 extra bytes, so we'll allocate new chunk"

>> somewhat weak. We do allocate the memory in 2^n chunks anyway, so the

>> new chunk is likely to be much larger anyway. I'm not saying we need to

>> reuse the buffer, IMHO the savings will be non-measurable.

>

> Agreed. I've removed that part of the comment.

>

>> 3) string_agg_transfn and bytea_string_agg_transfn say this"

>>

>> /*

>> * We always append the delimiter text before the value text, even

>> * with the first aggregated item. The reason for this is that if

>> * this state needs to be combined with another state using the

>> * aggregate's combine function, then we need to have the delimiter

>> * for the first aggregated item. The first delimiter will be

>> * stripped off in the final function anyway. We use a little cheat

>> * here and store the position of the actual first value (after the

>> * delimiter) using the StringInfo's cursor variable. This relies on

>> * the cursor not being used for any other purpose.

>> */

>>

>> How does this make the code any simpler, compared to not adding the

>> delimiter (as before) and adding it when combining the values (at which

>> point you should know when it's actually needed)?

>

> The problem is that if we don't record the first delimiter then we

> won't know what it is when it comes to combining the states.

>

> Consider the following slightly backward-looking case;

>

> select string_agg(',', x::text) from generate_Series(1,10)x;

> string_agg

> ----------------------

> ,2,3,4,5,6,7,8,9,10,

>

> Here the delimiter is the number, not the ','. Of course, the

> delimiter for the first aggregated result is not shown. The previous

> implementation of the transfn for this aggregate just threw away the

> first delimiter, but that's no good for parallel aggregates as the

> transfn may be getting called in a parallel worker, in which case

> we'll need that delimiter in the combine function to properly join to

> the other partial aggregated results matching the same group.

>

Hmmm, you're right I haven't considered the delimiter might be variable.

But isn't just yet another hint that while StringInfo was suitable for

aggregate state of non-parallel string_agg, it may not be for the

parallel version?

What if the parallel string_agg instead used something like this:

struct StringAggState

{

char *delimiter; /* first delimiter */

StringInfoData str; /* partial aggregate state */

} StringAggState;

I think that would make the code cleaner, compared to using the cursor

field for that purpose. But maybe it'd make it not possible to share

code with the non-parallel aggregate, not sure.

I always considered the cursor field to be meant for scanning through

StringInfo, so using it for this purpose seems a bit like a misuse.

> Probably I could improve the comment a bit. I understand that having a

> variable delimiter is not commonplace in the normal world. I certainly

> had never considered it before working on this. I scratched my head a

> bit when doing this and thought about inventing a new trans type, but

> I decided that the most efficient design for an aggregate state was to

> store the delimiter and data all in one buffer and have a pointer to

> the start of the actual data... StringInfo has exactly what's required

> if you use the cursor as the pointer, so I didn't go and reinvent the

> wheel.

>

I wonder why the variable delimiter is not mentioned in the docs ... (at

least I haven't found anything mentioning the behavior).

> I've now changed the comment to read:

>

> /*

> * It's important that we store the delimiter text for all aggregated

> * items, even the first one, which at first thought you might think

> * could just be discarded. The reason for this is that if this

> * function is being called from a parallel worker, then we'll need

> * the first delimiter in order to properly combine the partially

> * aggregated state with the states coming from other workers. In the

> * final output, the first delimiter will be stripped off of the final

> * aggregate state, but in order to know where the actual first data

> * is we must store the position of the first data value somewhere.

> * Conveniently, StringInfo has a cursor property which can be used

> * to serve our needs here.

> */

>

> I've also updated an incorrect Assert in array_agg_array_serialize.

>

> Please find attached the updated patch.

>

Seems fine to me. I plan to do a bit more testing/review next week, but

I plan to move it to RFC after that (unless I run into something during

the review, but I don't expect that).

regards

--

Tomas Vondra http://www.2ndQuadrant.com

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-11 06:31:49 |

| Message-ID: | CAKJS1f8LV7AT=AAhdYGKtGrGkSkEgO6C_SW2Ztz1sR3encisqw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11 March 2018 at 12:11, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

> On 03/05/2018 04:51 AM, David Rowley wrote:

>> On 5 March 2018 at 04:54, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

>> Consider the following slightly backward-looking case;

>>

>> select string_agg(',', x::text) from generate_Series(1,10)x;

>> string_agg

>> ----------------------

>> ,2,3,4,5,6,7,8,9,10,

>>

>> Here the delimiter is the number, not the ','. Of course, the

>> delimiter for the first aggregated result is not shown. The previous

>> implementation of the transfn for this aggregate just threw away the

>> first delimiter, but that's no good for parallel aggregates as the

>> transfn may be getting called in a parallel worker, in which case

>> we'll need that delimiter in the combine function to properly join to

>> the other partial aggregated results matching the same group.

>>

>

> Hmmm, you're right I haven't considered the delimiter might be variable.

> But isn't just yet another hint that while StringInfo was suitable for

> aggregate state of non-parallel string_agg, it may not be for the

> parallel version?

>

> What if the parallel string_agg instead used something like this:

>

> struct StringAggState

> {

> char *delimiter; /* first delimiter */

> StringInfoData str; /* partial aggregate state */

> } StringAggState;

>

> I think that would make the code cleaner, compared to using the cursor

> field for that purpose. But maybe it'd make it not possible to share

> code with the non-parallel aggregate, not sure.

It would be possible to use something like that. The final function

would just need to take 'str' and ignore 'delimiter', whereas the

combine function would need both. However, you could optimize the

above to just have a single StringInfoData and have a pointer to the

start of the actual data (beyond the first delimiter), that would save

us a call to palloc and also allow the state's data to exist in one

contiguous block of memory, which will be more cache friendly when it

comes to reading it back again. The pointer here would, of course,

have to be an offset into the data array, since repallocs would cause

problems as the state grew.

This is kinda the option I was going for with using the cursor. I

didn't think that was going to be a problem. It seems that cursor was

invented so that users of StringInfo could store a marker somehow

along with the string. The comment for cursor read:

* cursor is initialized to zero by makeStringInfo or initStringInfo,

* but is not otherwise touched by the stringinfo.c routines.

* Some routines use it to scan through a StringInfo.

The use of the cursor field does not interfere with pqformat.c's use

of it as in the serialization functions we're creating a new

StringInfo for the 'result'. If anything tries to send the internal

state, then that's certainly broken. It needs to be serialized before

that can happen.

I don't quite see how inventing a new struct would make the code

cleaner. It would make the serialization and deserialization functions

have to write and read more fields for the lengths of the data

contained in the state.

I understand that the cursor field is used in pqformat.c quite a bit,

but I don't quite understand why it should get to use that field the

way it wants, but the serialization and deserialization functions

shouldn't. I'd understand that if we were trying to phase out the

cursor field from StringInfoData, but I can't imagine that's going to

happen.

Of course, with that all said. If you really strongly object to how

I've done this, then I'll change it to use a new struct type. I had

just tried to make the patch footprint as small as possible, and the

above text is me just explaining my reasoning behind this, not me

objecting to your request for me to change it. Let me know your

thoughts after reading the above.

In the meantime, I've attached an updated patch. The only change it

contains is updating the "Partial Mode" column in the docs from "No"

to "Yes".

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v6.patch | application/octet-stream | 33.3 KB |

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-11 11:10:00 |

| Message-ID: | 1b6ee15c-c218-761e-0120-c1ed438e2db2@2ndquadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 03/11/2018 07:31 AM, David Rowley wrote:

> On 11 March 2018 at 12:11, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

>> On 03/05/2018 04:51 AM, David Rowley wrote:

>>> On 5 March 2018 at 04:54, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

>>> Consider the following slightly backward-looking case;

>>>

>>> select string_agg(',', x::text) from generate_Series(1,10)x;

>>> string_agg

>>> ----------------------

>>> ,2,3,4,5,6,7,8,9,10,

>>>

>>> Here the delimiter is the number, not the ','. Of course, the

>>> delimiter for the first aggregated result is not shown. The previous

>>> implementation of the transfn for this aggregate just threw away the

>>> first delimiter, but that's no good for parallel aggregates as the

>>> transfn may be getting called in a parallel worker, in which case

>>> we'll need that delimiter in the combine function to properly join to

>>> the other partial aggregated results matching the same group.

>>>

>>

>> Hmmm, you're right I haven't considered the delimiter might be variable.

>> But isn't just yet another hint that while StringInfo was suitable for

>> aggregate state of non-parallel string_agg, it may not be for the

>> parallel version?

>>

>> What if the parallel string_agg instead used something like this:

>>

>> struct StringAggState

>> {

>> char *delimiter; /* first delimiter */

>> StringInfoData str; /* partial aggregate state */

>> } StringAggState;

>>

>> I think that would make the code cleaner, compared to using the cursor

>> field for that purpose. But maybe it'd make it not possible to share

>> code with the non-parallel aggregate, not sure.

>

> It would be possible to use something like that. The final function

> would just need to take 'str' and ignore 'delimiter', whereas the

> combine function would need both. However, you could optimize the

> above to just have a single StringInfoData and have a pointer to the

> start of the actual data (beyond the first delimiter), that would save

> us a call to palloc and also allow the state's data to exist in one

> contiguous block of memory, which will be more cache friendly when it

> comes to reading it back again. The pointer here would, of course,

> have to be an offset into the data array, since repallocs would cause

> problems as the state grew.

>

> This is kinda the option I was going for with using the cursor. I

> didn't think that was going to be a problem. It seems that cursor was

> invented so that users of StringInfo could store a marker somehow

> along with the string. The comment for cursor read:

>

> * cursor is initialized to zero by makeStringInfo or initStringInfo,

> * but is not otherwise touched by the stringinfo.c routines.

> * Some routines use it to scan through a StringInfo.

>

> The use of the cursor field does not interfere with pqformat.c's use

> of it as in the serialization functions we're creating a new

> StringInfo for the 'result'. If anything tries to send the internal

> state, then that's certainly broken. It needs to be serialized before

> that can happen.

>

> I don't quite see how inventing a new struct would make the code

> cleaner. It would make the serialization and deserialization functions

> have to write and read more fields for the lengths of the data

> contained in the state.

>

> I understand that the cursor field is used in pqformat.c quite a bit,

> but I don't quite understand why it should get to use that field the

> way it wants, but the serialization and deserialization functions

> shouldn't. I'd understand that if we were trying to phase out the

> cursor field from StringInfoData, but I can't imagine that's going to

> happen.

>

> Of course, with that all said. If you really strongly object to how

> I've done this, then I'll change it to use a new struct type. I had

> just tried to make the patch footprint as small as possible, and the

> above text is me just explaining my reasoning behind this, not me

> objecting to your request for me to change it. Let me know your

> thoughts after reading the above.

>

Hmmm, I guess you're right, I didn't really thought that through. I

don't object to sticking to the current approach.

> In the meantime, I've attached an updated patch. The only change it

> contains is updating the "Partial Mode" column in the docs from "No"

> to "Yes".

>

Yeah, seems fine to me. I wonder what else would be needed before

switching the patch to RFC. I plan to do that after a bit more testing

sometime early next week, unless someone objects.

regards

--

Tomas Vondra http://www.2ndQuadrant.com

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-16 00:46:39 |

| Message-ID: | 657be73a-a21d-0ae1-5c62-870afbdfddd4@2ndquadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 03/11/2018 12:10 PM, Tomas Vondra wrote:

>

> ...

>

> Yeah, seems fine to me. I wonder what else would be needed before

> switching the patch to RFC. I plan to do that after a bit more

> testing sometime early next week, unless someone objects.

>

I've done more testing on this patch, and I haven't found any new issues

with it, so PFA I'm marking it as ready for committer.

regards

--

Tomas Vondra http://www.2ndQuadrant.com

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-16 02:44:39 |

| Message-ID: | CAKJS1f-bKAD+s-CJetRQPjmrR-gF-kfvwfzhQm-A0PA-nsr1xw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 16 March 2018 at 13:46, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

> I've done more testing on this patch, and I haven't found any new issues

> with it, so PFA I'm marking it as ready for committer.

Great! Many thanks for the review.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Stephen Frost <sfrost(at)snowman(dot)net> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 02:26:47 |

| Message-ID: | 20180326022647.GL24540@tamriel.snowman.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Greetings,

* David Rowley (david(dot)rowley(at)2ndquadrant(dot)com) wrote:

> On 16 March 2018 at 13:46, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> wrote:

> > I've done more testing on this patch, and I haven't found any new issues

> > with it, so PFA I'm marking it as ready for committer.

>

> Great! Many thanks for the review.

I read through the thread and started reviewing this, comments below.

diff --git a/src/backend/utils/adt/array_userfuncs.c b/src/backend/utils/adt/array_userfuncs.c

[...]

+ for (i = 0; i < state2->nelems; i++)

+ state1->dvalues[i] = datumCopy(state2->dvalues[i],

+ state1->typbyval, state1->typlen);

+

+ memcpy(state1->dnulls, state2->dnulls, sizeof(bool) * state2->nelems);

The header at the top of datumCopy() pretty clearly says that it's for

"non-NULL" datums, yet this function seems to be happily ignoring that

and just trying to use it to copy everything. Perhaps I'm missing

something, but I don't see anything obvious that would lead me to

conclude that what's being done here (or in other similar cases in this

patch) is acceptable.

[...]

+ for (i = 0; i < state->nelems; i++)

+ {

+ outputbytes = OidSendFunctionCall(iodata->typsend, state->dvalues[i]);

+ pq_sendint32(&buf, VARSIZE(outputbytes) - VARHDRSZ);

+ pq_sendbytes(&buf, VARDATA(outputbytes),

+ VARSIZE(outputbytes) - VARHDRSZ);

+ }

+ }

+

+ /* dnulls */

+ pq_sendbytes(&buf, (char *) state->dnulls, sizeof(bool) * state->nelems);

SendFunctionCall() has a similar "Do not call this on NULL datums"

comment.

+ for (i = 0; i < nelems; i++)

+ {

+ StringInfoData tmp;

+ tmp.cursor = 0;

+ tmp.maxlen = tmp.len = pq_getmsgint(&buf, 4);

+ tmp.data = (char *) pq_getmsgbytes(&buf, tmp.len);

+

+ result->dvalues[i] = OidReceiveFunctionCall(iodata->typreceive,

+ &tmp,

+ iodata->typioparam,

+ -1);

+ }

+ }

+

+ /* dnulls */

+ temp = pq_getmsgbytes(&buf, sizeof(bool) * nelems);

+ memcpy(result->dnulls, temp, sizeof(bool) * nelems);

And if we aren't sending them then we probably need to do something

different on the receive side...

I glanced through the rest and it seemed alright, but will want to still

go over it more carefully once the above comments are addressed.

Marking Waiting-for-Author.

Thanks!

Stephen

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Stephen Frost <sfrost(at)snowman(dot)net> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 09:37:37 |

| Message-ID: | CAKJS1f-axkksX3XzduLJuTL=o8CSwf1NaQYO6=iHSXR_B3pCKQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 26 March 2018 at 15:26, Stephen Frost <sfrost(at)snowman(dot)net> wrote:

> The header at the top of datumCopy() pretty clearly says that it's for

> "non-NULL" datums, yet this function seems to be happily ignoring that

> and just trying to use it to copy everything. Perhaps I'm missing

> something, but I don't see anything obvious that would lead me to

> conclude that what's being done here (or in other similar cases in this

> patch) is acceptable.

Thanks for looking at this.

You're right. I've overlooked this. The code should be checking for

NULL value Datums there. I've fixed this locally, but on testing, I

discovered another bug around string_agg. At the moment string_agg's

transfn only allocates the state when it gets a non-NULL string to

aggregate, whereas it seems other trans functions which return an

internal state allocate their state on the first call. e.g.

int8_avg_accum(). This NULL state is causing the serial function

segfault on a null pointer dereference. I think the fix is to always

allocate the state in the transfn, but I just wanted to point this out

before I go and do that.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Stephen Frost <sfrost(at)snowman(dot)net> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 13:20:09 |

| Message-ID: | 20180326132009.GN24540@tamriel.snowman.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Greetings,

* David Rowley (david(dot)rowley(at)2ndquadrant(dot)com) wrote:

> On 26 March 2018 at 15:26, Stephen Frost <sfrost(at)snowman(dot)net> wrote:

> > The header at the top of datumCopy() pretty clearly says that it's for

> > "non-NULL" datums, yet this function seems to be happily ignoring that

> > and just trying to use it to copy everything. Perhaps I'm missing

> > something, but I don't see anything obvious that would lead me to

> > conclude that what's being done here (or in other similar cases in this

> > patch) is acceptable.

>

> Thanks for looking at this.

>

> You're right. I've overlooked this. The code should be checking for

> NULL value Datums there. I've fixed this locally, but on testing, I

> discovered another bug around string_agg. At the moment string_agg's

> transfn only allocates the state when it gets a non-NULL string to

> aggregate, whereas it seems other trans functions which return an

> internal state allocate their state on the first call. e.g.

> int8_avg_accum(). This NULL state is causing the serial function

> segfault on a null pointer dereference. I think the fix is to always

> allocate the state in the transfn, but I just wanted to point this out

> before I go and do that.

Just to be clear- the segfault is just happening with your patch and

you're just contemplating having string_agg always allocate state on the

first call, similar to what int8_avg_accum() does?

If so, then, yes, that seems alright to me.

Thanks!

Stephen

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Stephen Frost <sfrost(at)snowman(dot)net> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 14:04:29 |

| Message-ID: | CAKJS1f8JVpUUkYP2c+RyVRdpS1dnjB_DA5fbcDSL3qfMRqc81Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 27 March 2018 at 02:20, Stephen Frost <sfrost(at)snowman(dot)net> wrote:

> Just to be clear- the segfault is just happening with your patch and

> you're just contemplating having string_agg always allocate state on the

> first call, similar to what int8_avg_accum() does?

>

> If so, then, yes, that seems alright to me.

I did write some code to do this, but I ended up having to invent a

new state type which contained an additional "gotvalue" bool flag. We

needed to know the difference between a new state and one that's just

not had any non-NULL values aggregated yet. This meant the transfn and

combinefn needed to track this extra flag, and the serial and deserial

functions had to send it too. It started to look pretty horrible so I

started wondering about modifying the serial and deserial functions to

allow NULL states instead, that's when I realised that the serial and

deserial functions were both strict, and should never have been called

with NULL states in the first place!

It turns out this is a bit of an existing bug, but perhaps one that is

pretty harmless without this patch. Basically, the string_agg trans

function was always returning PG_RETURN_POINTER(state), even when the

state was NULL. This meant that the fcinfo->isnull flag was not

properly set, which caused the nodeAgg.c code to call the strict

serial function regardless.

In the end, I just fixed this by changing the PG_RETURN_POINTER(state) into:

if (state)

PG_RETURN_POINTER(state);

PG_RETURN_NULL();

The final functions seem to have managed to escape this bug by the way

they check for NULL states:

state = PG_ARGISNULL(0) ? NULL : (StringInfo) PG_GETARG_POINTER(0);

if (state != NULL)

...

Which allows an SQL null, or a NULL pointer. These could probably now

be changed to become:

if (!PG_ARGISNULL(0))

{

state = (StringInfo) PG_GETARG_POINTER(0);

...

Or it may be possible to make the finalfn strict, but I didn't do

either of these things.

I did also wonder if PG_RETURN_POINTER should test for NULL pointers

and set fcinfo->isnull, but there might be valid cases where we'd want

to not set the flag... I just can't imagine a valid reason why right

now.

In the attached, I also got rid of the serial and deserial function

for string_agg(text). I realised that these were doing pq_sendtext()

instead of pq_sendbytes. This meant that text would be changed to the

client encoding which was not what we wanted as we were not sending

anything to the client. Fixing this meant that the string_agg(bytea)

and string_agg(text) aggregates had identical serial/deserial

functions, so they now share the functions instead.

I also looked at all the built-in aggregate trans functions which use

an internal aggregate state to verify they're also doing the right

thing in regards to NULLs. The following query returns 16 distinct

functions, all of these apart from the ones I've fixed in the attached

patch all allocate the internal state on first call, so it does not

appear that there's anything else to fix in this area:

select distinct aggtransfn from pg_aggregate where aggtranstype=2281 order by 1;

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v7.patch | application/octet-stream | 31.5 KB |

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Stephen Frost <sfrost(at)snowman(dot)net>, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 20:27:47 |

| Message-ID: | 6538.1522096067@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> writes:

> [ combinefn_for_string_and_array_aggs_v7.patch ]

I spent a fair amount of time hacking on this with intent to commit,

but just as I was getting to code that I liked, I started to have second

thoughts about whether this is a good idea at all. I quote from the fine

manual:

The aggregate functions array_agg, json_agg, jsonb_agg,

json_object_agg, jsonb_object_agg, string_agg, and xmlagg, as well as

similar user-defined aggregate functions, produce meaningfully

different result values depending on the order of the input

values. This ordering is unspecified by default, but can be controlled

by writing an ORDER BY clause within the aggregate call, as shown in

Section 4.2.7. Alternatively, supplying the input values from a sorted

subquery will usually work ...

I do not think it is accidental that these aggregates are exactly the ones

that do not have parallelism support today. Rather, that's because you

just about always have an interest in the order in which the inputs get

aggregated, which is something that parallel aggregation cannot support.

I fear that what will happen, if we commit this, is that something like

0.01% of the users of array_agg and string_agg will be pleased, another

maybe 20% will be unaffected because they wrote ORDER BY which prevents

parallel aggregation, and the remaining 80% will scream because we broke

their queries. Telling them they should've written ORDER BY isn't going

to cut it, IMO, when the benefit of that breakage will accrue only to some

very tiny fraction of use-cases.

In short, I think we ought to reject this.

Just in case I'm outvoted, attached is what I'd gotten done so far.

The main noncosmetic changes I'd made were to improve the caching logic

(it's silly to set up a lookup cache and then not cache the fmgr_info

lookup) and to not cheat quite as much on the StringInfo passed down to

the element typreceive function. There isn't any other place, I don't

think, where we don't honor the expectation that StringInfos have trailing

null bytes, and some places may depend on it --- array_recv does.

The main thing that remains undone is to get some test coverage ---

AFAICS, none of these new functions get exercised in the standard

regression tests.

I'm also a bit unhappy that the patch introduces code into

array_userfuncs.c that knows everything there is to know about the

contents of ArrayBuildState and ArrayBuildStateArr. Previously that

knowledge was pretty well localized in arrayfuncs.c. I wonder if it'd

be better to move the new combinefuncs and serial/deserial funcs into

arrayfuncs.c. Or perhaps export primitives from there that could be

used to build them.

regards, tom lane

| Attachment | Content-Type | Size |

|---|---|---|

| combinefn_for_string_and_array_aggs_v8.patch | text/x-diff | 37.8 KB |

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us>, David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

| Cc: | Stephen Frost <sfrost(at)snowman(dot)net>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 20:55:22 |

| Message-ID: | cd620e7f-a9dd-ad15-72a8-6c0ac10ea4c3@2ndquadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 03/26/2018 10:27 PM, Tom Lane wrote:

> David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> writes:

>> [ combinefn_for_string_and_array_aggs_v7.patch ]

>

> I spent a fair amount of time hacking on this with intent to commit,

> but just as I was getting to code that I liked, I started to have second

> thoughts about whether this is a good idea at all. I quote from the fine

> manual:

>

> The aggregate functions array_agg, json_agg, jsonb_agg,

> json_object_agg, jsonb_object_agg, string_agg, and xmlagg, as well as

> similar user-defined aggregate functions, produce meaningfully

> different result values depending on the order of the input

> values. This ordering is unspecified by default, but can be controlled

> by writing an ORDER BY clause within the aggregate call, as shown in

> Section 4.2.7. Alternatively, supplying the input values from a sorted

> subquery will usually work ...

>

> I do not think it is accidental that these aggregates are exactly the ones

> that do not have parallelism support today. Rather, that's because you

> just about always have an interest in the order in which the inputs get

> aggregated, which is something that parallel aggregation cannot support.

>

I don't think that's quite true. I know plenty of people who do things

like this:

SELECT

a,

b,

avg(c),

sum(d),

array_agg(e),

array_agg(f),

string_agg(g)

FROM hugetable GROUP BY a,b HAVING avg(c) > 100.89;

and then do some additional processing on the result in some way

(subquery, matview, ...). They don't really care about ordering of

values in the arrays, as long as orderings of all the arrays match.

Currently queries like this can use parallelism at all, and the patch

fixes that I believe.

> I fear that what will happen, if we commit this, is that something like

> 0.01% of the users of array_agg and string_agg will be pleased, another

> maybe 20% will be unaffected because they wrote ORDER BY which prevents

> parallel aggregation, and the remaining 80% will scream because we broke

> their queries. Telling them they should've written ORDER BY isn't going

> to cut it, IMO, when the benefit of that breakage will accrue only to some

> very tiny fraction of use-cases.

>

Isn't the ordering unreliable *already*? It depends on ordering of

tuples on the input. So if the table is scanned by index scan or

sequential scan, that will affect the array_agg/string_agg results. If

the input is a join, it's even more volatile.

IMHO it's not like we're making the ordering unpredictable - it's been

like that since forever.

Also, how is this different from ORDER BY clause? If a user does not

specify an ORDER BY clause, I don't think we'd care very much about

changes to output ordering due to plan changes, for example.

regards

--

Tomas Vondra http://www.2ndQuadrant.com

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

| Cc: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com>, Stephen Frost <sfrost(at)snowman(dot)net>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 21:19:54 |

| Message-ID: | 18594.1522099194@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> writes:

> On 03/26/2018 10:27 PM, Tom Lane wrote:

>> I fear that what will happen, if we commit this, is that something like

>> 0.01% of the users of array_agg and string_agg will be pleased, another

>> maybe 20% will be unaffected because they wrote ORDER BY which prevents

>> parallel aggregation, and the remaining 80% will scream because we broke

>> their queries. Telling them they should've written ORDER BY isn't going

>> to cut it, IMO, when the benefit of that breakage will accrue only to some

>> very tiny fraction of use-cases.

> Isn't the ordering unreliable *already*?

Not if the query is such that what gets chosen is, say, an indexscan or

mergejoin. It might be theoretically unreliable and yet work fine for

a given application.

I might be too pessimistic about the fraction of users who are depending

on ordered input without having written anything that explicitly forces

that ... but I stand by the theory that it substantially exceeds the

fraction of users who could get any benefit.

Your own example of assuming that separate aggregates are computed

in the same order reinforces my point, I think. In principle, anybody

who's doing that should write

array_agg(e order by x),

array_agg(f order by x),

string_agg(g order by x)

because otherwise they shouldn't assume that; the manual certainly doesn't

promise it. But nobody does that in production, because if they did

they'd get killed by the fact that the sorts are all done independently.

(We should improve that someday, but it hasn't been done yet.) So I think

there are an awful lot of people out there who are assuming more than a

lawyerly reading of the manual would allow. Their reaction to this will

be about like ours every time the GCC guys decide that some longstanding

behavior of C code isn't actually promised by the text of the C standard.

regards, tom lane

| From: | Stephen Frost <sfrost(at)snowman(dot)net> |

|---|---|

| To: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

| Cc: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us>, David Rowley <david(dot)rowley(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 21:20:06 |

| Message-ID: | 20180326212006.GT24540@tamriel.snowman.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Greetings,

* Tomas Vondra (tomas(dot)vondra(at)2ndquadrant(dot)com) wrote:

> On 03/26/2018 10:27 PM, Tom Lane wrote:

> > I fear that what will happen, if we commit this, is that something like

> > 0.01% of the users of array_agg and string_agg will be pleased, another

> > maybe 20% will be unaffected because they wrote ORDER BY which prevents

> > parallel aggregation, and the remaining 80% will scream because we broke

> > their queries. Telling them they should've written ORDER BY isn't going

> > to cut it, IMO, when the benefit of that breakage will accrue only to some

> > very tiny fraction of use-cases.

>

> Isn't the ordering unreliable *already*? It depends on ordering of

> tuples on the input. So if the table is scanned by index scan or

> sequential scan, that will affect the array_agg/string_agg results. If

> the input is a join, it's even more volatile.

It's not even just that- for a seq scan, it'll also depend on if anyone

else is also scanning the table as we might not start at the beginning

of the table thanks to synchronize seq scans.

> IMHO it's not like we're making the ordering unpredictable - it's been

> like that since forever.

Yeah, there certainly seems like a lot of opportunity for the ordering

to end up being volatile already and queries depending on it not

changing really shouldn't be making that assumption. I do think it was

probably a bad move on our part to say that ordering a subquery will

"usually" work in the documentation but having that slip-up in the

documentation mean that we actually *are* going to guarantee it'll

always work to use a subquery ordering to feed an aggregate is a pretty

big stretch (and is it even really true today anyway? the documentation

certainly doesn't seem to be clear on that...).

> Also, how is this different from ORDER BY clause? If a user does not

> specify an ORDER BY clause, I don't think we'd care very much about

> changes to output ordering due to plan changes, for example.

This seems like it boils down to "well, everyone *knows* that rows can

come back in any order" combined with "our docs claim that an ordered

subquery will *usually* work".

In the end, I do tend to agree that we probably should add parallel

support to these aggregates, but it'd also be nice to hear from those

who had worked to add parallelism to the various aggregates as to if

there was some reason these were skipped.

Thanks!

Stephen

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Stephen Frost <sfrost(at)snowman(dot)net> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, David Rowley <david(dot)rowley(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 21:31:18 |

| Message-ID: | 19117.1522099878@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Stephen Frost <sfrost(at)snowman(dot)net> writes:

> Yeah, there certainly seems like a lot of opportunity for the ordering

> to end up being volatile already and queries depending on it not

> changing really shouldn't be making that assumption. I do think it was

> probably a bad move on our part to say that ordering a subquery will

> "usually" work in the documentation but having that slip-up in the

> documentation mean that we actually *are* going to guarantee it'll

> always work to use a subquery ordering to feed an aggregate is a pretty

> big stretch (and is it even really true today anyway? the documentation

> certainly doesn't seem to be clear on that...).

It's true as long as there's nothing in the outer query that would cause

re-ordering of the subquery output. I'd be the first to agree that the

wording is a bit sloppy, but I think there are lots of people depending

on that behavior, not least because that was the only way to do it before

we supported ORDER BY inside an aggregate call.

Now, that doesn't directly bear on the argument at hand, because if you're

aggregating over the result of an ORDER BY subquery then you aren't doing

parallel aggregation anyway. (Perhaps there's parallelism inside the

subquery, but that's irrelevant.) It is related in the sense that there

are lots of people depending on things other than ORDER-BY-in-the-

aggregate-call to get the results they expect.

regards, tom lane

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Stephen Frost <sfrost(at)snowman(dot)net> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 21:54:32 |

| Message-ID: | CAKJS1f_n_Z+cGjG5SGeKxhCpRmpEifcoTgEqXkH2sra_ze8KJg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 27 March 2018 at 10:20, Stephen Frost <sfrost(at)snowman(dot)net> wrote:

> In the end, I do tend to agree that we probably should add parallel

> support to these aggregates, but it'd also be nice to hear from those

> who had worked to add parallelism to the various aggregates as to if

> there was some reason these were skipped.

That was me. I originally skipped string_agg and array_agg as I

thought parallelism would not be much use due to the growth of the

size of the state. I thought the combine function could become very

expensive as it has to work on each aggregated value, instead of a

consolidated result, as the other aggregates provide. The reason for

adding this now and not back then is:

1. I was wrong, parallel aggregation can improve performance for these

aggregates, and;

2. It's annoying that a single non-partial aggregate in the query

disabled parallel aggregation, and;

3. When I originally considered the performance I didn't consider that

some queries may filter values before aggregation via a FILTER or a

WHERE clause.

4. It's not just parallel aggregation that uses partial aggregation,

we can now push aggregation below Append for partitioned tables in

some cases. Hopefully, we'll also have "group before join" one day

too.

While 2 is still true, there should be a reduction in the times this

happens. Just the json and xml aggs holding us back from the standard

set now.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | Stephen Frost <sfrost(at)snowman(dot)net>, Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 22:05:07 |

| Message-ID: | CAKJS1f9zUpF4Ntb4=2ba5cQ9YmHptnKUB4tuZZNDmv0OAZ2T4g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 27 March 2018 at 09:27, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> I spent a fair amount of time hacking on this with intent to commit,

> but just as I was getting to code that I liked, I started to have second

> thoughts about whether this is a good idea at all. I quote from the fine

> manual:

>

> The aggregate functions array_agg, json_agg, jsonb_agg,

> json_object_agg, jsonb_object_agg, string_agg, and xmlagg, as well as

> similar user-defined aggregate functions, produce meaningfully

> different result values depending on the order of the input

> values. This ordering is unspecified by default, but can be controlled

> by writing an ORDER BY clause within the aggregate call, as shown in

> Section 4.2.7. Alternatively, supplying the input values from a sorted

> subquery will usually work ...

>

> I do not think it is accidental that these aggregates are exactly the ones

> that do not have parallelism support today. Rather, that's because you

> just about always have an interest in the order in which the inputs get

> aggregated, which is something that parallel aggregation cannot support.

This was not in my list of reasons for not adding them the first time

around. I mentioned these reasons in a response to Stephen.

> I fear that what will happen, if we commit this, is that something like

> 0.01% of the users of array_agg and string_agg will be pleased, another

> maybe 20% will be unaffected because they wrote ORDER BY which prevents

> parallel aggregation, and the remaining 80% will scream because we broke

> their queries. Telling them they should've written ORDER BY isn't going

> to cut it, IMO, when the benefit of that breakage will accrue only to some

> very tiny fraction of use-cases.

This very much reminds me of something that exists in the 8.4 release notes:

> SELECT DISTINCT and UNION/INTERSECT/EXCEPT no longer always produce sorted output (Tom)

> Previously, these types of queries always removed duplicate rows by means of Sort/Unique processing (i.e., sort then remove adjacent duplicates). Now they can be implemented by hashing, which will not produce sorted output. If an application relied on the output being in sorted order, the recommended fix is to add an ORDER BY clause. As a short-term workaround, the previous behavior can be restored by disabling enable_hashagg, but that is a very performance-expensive fix. SELECT DISTINCT ON never uses hashing, however, so its behavior is unchanged.

Seems we were happy enough then to tell users to add an ORDER BY.

However, this case is different, since before the results were always

ordered. This time they're possibly ordered. So we'll probably

surprise fewer people this time around.

--

David Rowley http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | David Rowley <david(dot)rowley(at)2ndquadrant(dot)com>, Stephen Frost <sfrost(at)snowman(dot)net>, Andres Freund <andres(at)anarazel(dot)de>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Aggregates for string_agg and array_agg |

| Date: | 2018-03-26 22:14:27 |

| Message-ID: | 13a2ab54-0d83-d2a2-faa2-963310d8f75f@2ndquadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 03/26/2018 11:19 PM, Tom Lane wrote:

> Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> writes:

>> On 03/26/2018 10:27 PM, Tom Lane wrote:

>>> I fear that what will happen, if we commit this, is that something like

>>> 0.01% of the users of array_agg and string_agg will be pleased, another

>>> maybe 20% will be unaffected because they wrote ORDER BY which prevents

>>> parallel aggregation, and the remaining 80% will scream because we broke

>>> their queries. Telling them they should've written ORDER BY isn't going

>>> to cut it, IMO, when the benefit of that breakage will accrue only to some

>>> very tiny fraction of use-cases.

>

>> Isn't the ordering unreliable *already*?

>

> Not if the query is such that what gets chosen is, say, an indexscan

> or mergejoin. It might be theoretically unreliable and yet work fine

> for a given application.

>

What about parallel versions of those plans, though? That is, what

prevents the planner from generating plans like this

Hash Aggregate

-> Gather

-> Partial Index Scan

AFAIK that's a valid plan, and it does not have the stable ordering

property of a non-parallel index scan. Similarly for Merge Join.

> I might be too pessimistic about the fraction of users who are depending

> on ordered input without having written anything that explicitly forces

> that ... but I stand by the theory that it substantially exceeds the

> fraction of users who could get any benefit.

>

Not sure, it's pretty much impossible to produce a number that would

meaningfully represent the whole user base. All I can say is that the

number of people I know, who would benefit from this is significant.

>

> Your own example of assuming that separate aggregates are computed

> in the same order reinforces my point, I think. In principle, anybody

> who's doing that should write

>

> array_agg(e order by x),

> array_agg(f order by x),

> string_agg(g order by x)

>