Re: Proposing pg_hibernate

| Lists: | pgsql-hackers |

|---|

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Proposing pg_hibernate |

| Date: | 2014-02-04 00:18:54 |

| Message-ID: | CABwTF4Ui_anAG+ybseFunAH5Z6DE9aw2NPdy4HryK+M5OdXCCA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Please find attached the pg_hibernate extension. It is a

set-it-and-forget-it solution to enable hibernation of Postgres

shared-buffers. It can be thought of as an amalgam of pg_buffercache and

pg_prewarm.

It uses the background worker infrastructure. It registers one worker

process (BufferSaver) to save the shared-buffer metadata when server is

shutting down, and one worker per database (BlockReader) when restoring the

shared buffers.

It stores the buffer metadata under $PGDATA/pg_database/, one file per

database, and one separate file for global objects. It sorts the list of

buffers before storage, so that when it encounters a range of consecutive

blocks of a relation's fork, it stores that range as just one entry, hence

reducing the storage and I/O overhead.

On-disk binary format, which is used to create the per-database save-files,

is defined as:

1. One or more relation filenodes; stored as r<relfilenode>.

2. Each realtion is followed by one or more fork number; stored as

f<forknumber>

3. Each fork number is followed by one or more block numbers; stored as

b<blocknumber>

4. Each block number is followed by zero or more range numbers; stored as

N<number>

{r {f {b N* }+ }+ }+

Possible enhancements:

- Ability to save/restore only specific databases.

- Control how many BlockReaders are active at a time; to avoid I/O storms.

- Be smart about lowered shared_buffers across the restart.

- Different modes of reading like pg_prewarm does.

- Include PgFincore functionality, at least for Linux platforms.

The extension currently works with PG 9.3, and may work on 9.4 without any

changes; I haven't tested, though. If not, I think it'd be easy to port to

HEAD/PG 9.4. I see that 9.4 has put a cap on maximum background workers via

a GUC, and since my aim is to provide a non-intrusive no-tuning-required

extension, I'd like to use the new dynamic-background-worker infrastructure

in 9.4, which doesn't seem to have any preset limits (I think it's limited

by max_connections, but I may be wrong). I can work on 9.4 port, if there's

interest in including this as a contrib/ module.

To see the extension in action:

.) Compile it.

.) Install it.

.) Add it to shared_preload_libraries.

.) Start/restart Postgres.

.) Install pg_buffercache extension, to inspect the shared buffers.

.) Note the result of pg_buffercache view.

.) Work on your database to fill up the shared buffers.

.) Note the result of pg_buffercache view, again; there should be more

blocks than last time we checked.

.) Stop and start the Postgres server.

.) Note the output of pg_buffercache view; it should contain the blocks

seen just before the shutdown.

.) Future server restarts will automatically save and restore the blocks in

shared-buffers.

The code is also available as Git repository at

https://github.com/gurjeet/pg_hibernate/

Demo:

$ make -C contrib/pg_hibernate/

$ make -C contrib/pg_hibernate/ install

$ vi $B/db/data/postgresql.conf

$ grep shared_preload_libraries $PGDATA/postgresql.conf

shared_preload_libraries = 'pg_hibernate' # (change requires restart)

$ pgstart

waiting for server to start.... done

server started

$ pgsql -c 'create extension pg_buffercache;'

CREATE EXTENSION

$ pgsql -c 'select count(*) from pg_buffercache where relblocknumber is not

null group by reldatabase;'

count

-------

163

14

(2 rows)

$ pgsql -c 'create table test_hibernate as select s as a, s::char(1000) as

b from generate_series(1, 100000) as s;'

SELECT 100000

$ pgsql -c 'create index on test_hibernate(a);'

CREATE INDEX

$ pgsql -c 'select count(*) from pg_buffercache where relblocknumber is not

null group by reldatabase;'

count

-------

2254

14

(2 rows)

$ pgstop

waiting for server to shut down....... done

server stopped

$ pgstart

waiting for server to start.... done

server started

$ pgsql -c 'select count(*) from pg_buffercache where relblocknumber is not

null group by reldatabase;'

count

-------

2264

17

(2 rows)

There are a few more blocks than the time they were saved, but all the

blocks from before the restart are present in shared buffers after the

restart.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| Attachment | Content-Type | Size |

|---|---|---|

| pg_hibernate.tgz | application/x-gzip | 8.6 KB |

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-05-28 02:01:11 |

| Message-ID: | CABwTF4UT-+ahuNfC-uVXHgPAC53ztaarcHUu35OAjCEVJgSYmA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Please find attached the updated code of Postgres Hibenator. Notable

changes since the first proposal are:

.) The name has been changed to pg_hibernator (from pg_hibernate), to

avoid confusion with the ORM Hibernate.

.) Works with Postgres 9.4

.) Uses DynamicBackgroundWorker infrastructure.

.) Ability to restore one database at a time, to avoid random-read

storms. Can be disabled by parameter.

.) A couple of bug-fixes.

.) Detailed documentation.

I am pasting the README here (also included in the attachment).

Best regards,

Postgres Hibernator

===================

This Postgres extension is a set-it-and-forget-it solution to save and restore

the Postgres shared-buffers contents, across Postgres server restarts.

It performs the automatic save and restore of database buffers, integrated with

database shutdown and startup, hence reducing the durations of

database maintenance

windows, in effect increasing the uptime of your applications.

Postgres Hibernator automatically saves the list of shared buffers to the disk

on database shutdown, and automatically restores the buffers on

database startup.

This acts pretty much like your Operating System's hibernate feature, except,

instead of saving the contents of the memory to disk, Postgres Hibernator saves

just a list of block identifiers. And it uses that list after startup to restore

the blocks from data directory into Postgres' shared buffers.

Why

--------------

DBAs are often faced with the task of performing some maintenance on their

database server(s) which requires shutting down the database. The maintenance

may involve anything from a database patch application, to a hardware upgrade.

One ugly side-effect of restarting the database server/service is that all the

data currently in database server's memory will be all lost, which was

painstakingly fetched from disk and put there in response to application queries

over time. And this data will have to be rebuilt as applications start querying

database again. The query response times will be very high until all the "hot"

data is fetched from disk and put back in memory again.

People employ a few tricks to get around this ugly truth, which range from

running a `select * from app_table;`, to `dd if=table_file ...`, to using

specialized utilities like pgfincore to prefetch data files into OS cache.

Wouldn't it be ideal if the database itself could save and restore its memory

contents across restarts!

The duration for which the server is building up caches, and trying to reach its

optimal cache performance is called ramp-up time. Postgres Hibernator is aimed

at reducing the ramp-up time of Postgres servers.

How

--------------

Compile and install the extension (you'll need a Postgres instalation and its

`pg_config` in `$PATH`):

$ cd pg_hibernator

$ make install

Then.

1. Add `pg_hibernator` to the `shared_preload_libraries` variable in

`postgresql.conf` file.

2. Restart the Postgres server.

3. You are done.

How it works

--------------

This extension uses the `Background Worker` infrastructure of

Postgres, which was

introduced in Postgres 9.3. When the server starts, this extension registers

background workers; one for saving the buffers (called `Buffer Saver`) when the

server shuts down, and one for each database in the cluster (called

`Block Readers`)

for restoring the buffers saved during previous shutdown.

When the Postgres server is being stopped/shut down, the `Buffer

Saver` scans the

shared-buffers of Postgres, and stores the unique block identifiers of

each cached

block to the disk. This information is saved under the `$PGDATA/pg_hibernator/`

directory. For each of the database whose blocks are resident in shared buffers,

one file is created; for eg.: `$PGDATA/pg_hibernator/2.postgres.save`.

During the next startup sequence, the `Block Reader` threads are

registered, one for

each file present under `$PGDATA/pg_hibernator/` directory. When the

Postgres server

has reached stable state (that is, it's ready for database connections), these

`Block Reader` processes are launched. The `Block Reader` process

reads the save-files

looking for block-ids to restore. It then connects to the respective database,

and requests Postgres to fetch the blocks into shared-buffers.

Configuration

--------------

This extension can be controlled via the following parameters. These parameters

can be set in postgresql.conf or on postmaster's command-line.

- `pg_hibernator.enabled`

Setting this parameter to false disables the hibernator features. That is,

on server startup the BlockReader processes will not be launched, and on

server shutdown the list of blocks in shared buffers will not be saved.

Note that the BuffersSaver process exists at all times, even when this

parameter is set to `false`. This is to allow the DBA to enable/disable the

extension without having to restart the server. The BufferSaver process

checks this parameter during server startup and right before shutdown, and

honors this parameter's value at that time.

To enable/disable Postgres Hibernator at runtime, change the value in

`postgresql.conf` and use `pg_ctl reload` to make Postgres re-read the new

parameter values from `postgresql.conf`.

Default value: `true`.

- `pg_hibernator.parallel`

This parameter controls whether Postgres Hibernator launches the BlockReader

processes in parallel, or sequentially, waiting for current BlockReader to

exit before launching the next one.

When enabled, all the BlockReaders, one for each database, will be launched

simultaneously, and this may cause huge random-read flood on disks if there

are many databases in cluster. This may also cause some BlockReaders to fail

to launch successfully because of `max_worker_processes` limit.

Default value: `false`.

- `pg_hibernator.default_database`

The BufferSaver process needs to connect to a database in order to perform

the database-name lookups etc. This parameter controls which database the

BufferSaver process connects to for performing these operations.

Default value: `postgres`.

Caveats

--------------

- Buffer list is saved only when Postgres is shutdown in "smart" and

"fast" modes.

That is, buffer list is not saved when database crashes, or on "immediate"

shutdown.

- A reduction in `shared_buffers` is not detected.

If the `shared_buffers` is reduced across a restart, and if the combined

saved buffer list is larger than the new shared_buffers, Postgres

Hibernator continues to read and restore blocks even after `shared_buffers`

worth of buffers have been restored.

FAQ

--------------

- What is the relationship between `pg_buffercache`, `pg_prewarm`, and

`pg_hibernator`?

They all allow you to do different things with Postgres' shared buffers.

+ pg_buffercahce:

Inspect and show contents of shared buffers

+ pg_prewarm:

Load some table/index/fork blocks into shared buffers. User needs

to tell it which blocks to load.

+ pg_hibernator:

Upon shutdown, save list of blocks stored in shared buffers. Upon

startup, loads those blocks back into shared buffers.

The goal of Postgres Hibernator is to be invisible to the user/DBA.

Whereas with `pg_prewarm` the user needs to know a lot of stuff about

what they really want to do, most likely information gathered via

`pg_buffercahce`.

- Does `pg_hibernate` use either `pg_buffercache` or `pg_prewarm`?

No, Postgres Hibernator works all on its own.

If the concern is, "Do I have to install pg_buffercache and pg_prewarm

to use pg_hibernator", the answer is no. pg_hibernator is a stand-alone

extension, although influenced by pg_buffercache and pg_prewarm.

With `pg_prewarm` you can load blocks of **only** the database

you're connected

to. So if you have `N` databases in your cluster, to restore blocks of all

databases, the DBA will have to connect to each database and invoke

`pg_prewarm` functions.

With `pg_hibernator`, DBA isn't required to do anything, let alone

connecting to the database!

- Where can I learn more about it?

There are a couple of blog posts and initial proposal to Postgres

hackers' mailing list. They may provide a better understanding of

Postgres Hibernator.

[Introducing Postrges

Hibernator](http://gurjeet.singh.im/blog/2014/02/03/introducing-postgres-hibernator/)

[Demostrating Performance

Benefits](http://gurjeet.singh.im/blog/2014/04/30/postgres-hibernator-reduce-planned-database-down-times/)

On Mon, Feb 3, 2014 at 7:18 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> Please find attached the pg_hibernate extension. It is a

> set-it-and-forget-it solution to enable hibernation of Postgres

> shared-buffers. It can be thought of as an amalgam of pg_buffercache and

> pg_prewarm.

>

> It uses the background worker infrastructure. It registers one worker

> process (BufferSaver) to save the shared-buffer metadata when server is

> shutting down, and one worker per database (BlockReader) when restoring the

> shared buffers.

>

> It stores the buffer metadata under $PGDATA/pg_database/, one file per

> database, and one separate file for global objects. It sorts the list of

> buffers before storage, so that when it encounters a range of consecutive

> blocks of a relation's fork, it stores that range as just one entry, hence

> reducing the storage and I/O overhead.

>

> On-disk binary format, which is used to create the per-database save-files,

> is defined as:

> 1. One or more relation filenodes; stored as r<relfilenode>.

> 2. Each realtion is followed by one or more fork number; stored as

> f<forknumber>

> 3. Each fork number is followed by one or more block numbers; stored as

> b<blocknumber>

> 4. Each block number is followed by zero or more range numbers; stored as

> N<number>

>

> {r {f {b N* }+ }+ }+

>

> Possible enhancements:

> - Ability to save/restore only specific databases.

> - Control how many BlockReaders are active at a time; to avoid I/O storms.

> - Be smart about lowered shared_buffers across the restart.

> - Different modes of reading like pg_prewarm does.

> - Include PgFincore functionality, at least for Linux platforms.

>

> The extension currently works with PG 9.3, and may work on 9.4 without any

> changes; I haven't tested, though. If not, I think it'd be easy to port to

> HEAD/PG 9.4. I see that 9.4 has put a cap on maximum background workers via

> a GUC, and since my aim is to provide a non-intrusive no-tuning-required

> extension, I'd like to use the new dynamic-background-worker infrastructure

> in 9.4, which doesn't seem to have any preset limits (I think it's limited

> by max_connections, but I may be wrong). I can work on 9.4 port, if there's

> interest in including this as a contrib/ module.

>

> To see the extension in action:

>

> .) Compile it.

> .) Install it.

> .) Add it to shared_preload_libraries.

> .) Start/restart Postgres.

> .) Install pg_buffercache extension, to inspect the shared buffers.

> .) Note the result of pg_buffercache view.

> .) Work on your database to fill up the shared buffers.

> .) Note the result of pg_buffercache view, again; there should be more

> blocks than last time we checked.

> .) Stop and start the Postgres server.

> .) Note the output of pg_buffercache view; it should contain the blocks seen

> just before the shutdown.

> .) Future server restarts will automatically save and restore the blocks in

> shared-buffers.

>

> The code is also available as Git repository at

> https://github.com/gurjeet/pg_hibernate/

>

> Demo:

>

> $ make -C contrib/pg_hibernate/

> $ make -C contrib/pg_hibernate/ install

> $ vi $B/db/data/postgresql.conf

> $ grep shared_preload_libraries $PGDATA/postgresql.conf

> shared_preload_libraries = 'pg_hibernate' # (change requires restart)

>

> $ pgstart

> waiting for server to start.... done

> server started

>

> $ pgsql -c 'create extension pg_buffercache;'

> CREATE EXTENSION

>

> $ pgsql -c 'select count(*) from pg_buffercache where relblocknumber is not

> null group by reldatabase;'

> count

> -------

> 163

> 14

> (2 rows)

>

> $ pgsql -c 'create table test_hibernate as select s as a, s::char(1000) as b

> from generate_series(1, 100000) as s;'

> SELECT 100000

>

> $ pgsql -c 'create index on test_hibernate(a);'

> CREATE INDEX

>

> $ pgsql -c 'select count(*) from pg_buffercache where relblocknumber is not

> null group by reldatabase;'

> count

> -------

> 2254

> 14

> (2 rows)

>

> $ pgstop

> waiting for server to shut down....... done

> server stopped

>

> $ pgstart

> waiting for server to start.... done

> server started

>

> $ pgsql -c 'select count(*) from pg_buffercache where relblocknumber is not

> null group by reldatabase;'

> count

> -------

> 2264

> 17

> (2 rows)

>

> There are a few more blocks than the time they were saved, but all the

> blocks from before the restart are present in shared buffers after the

> restart.

>

> Best regards,

> --

> Gurjeet Singh http://gurjeet.singh.im/

>

> EDB www.EnterpriseDB.com

--

Gurjeet Singh http://gurjeet.singh.im/

| Attachment | Content-Type | Size |

|---|---|---|

| pg_hibernator.tgz | application/x-gzip | 12.5 KB |

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Cc: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-05-28 06:15:14 |

| Message-ID: | CAA4eK1LByHmxrLP+djEMfSD9vKqxQnVyFWsMuhE-TF3hgWzPzw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, May 28, 2014 at 7:31 AM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> Caveats

> --------------

>

> - Buffer list is saved only when Postgres is shutdown in "smart" and

> "fast" modes.

>

> That is, buffer list is not saved when database crashes, or on

"immediate"

> shutdown.

>

> - A reduction in `shared_buffers` is not detected.

>

> If the `shared_buffers` is reduced across a restart, and if the

combined

> saved buffer list is larger than the new shared_buffers, Postgres

> Hibernator continues to read and restore blocks even after

`shared_buffers`

> worth of buffers have been restored.

How about the cases when shared buffers already contain some

data:

a. Before Readers start filling shared buffers, if this cluster wishes

to join replication as a slave and receive the data from master, then

this utility might need to evict some buffers filled during startup

phase.

b. As soon as the server completes startup (reached consistent

point), it allows new connections which can also use some shared

buffers before Reader process could use shared buffers or are you

planing to change the time when users can connect to database.

I am not sure if replacing shared buffer contents in such cases can

always be considered useful.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-05-28 12:00:32 |

| Message-ID: | CABwTF4V-J--zuyvyaW=zfsTtk1cT+P_ofJ-h5unj6SS6TPuZjQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, May 28, 2014 at 2:15 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> On Wed, May 28, 2014 at 7:31 AM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> > Caveats

>> --------------

>>

>> - Buffer list is saved only when Postgres is shutdown in "smart" and

>> "fast" modes.

>>

>> That is, buffer list is not saved when database crashes, or on

>> "immediate"

>> shutdown.

>>

>> - A reduction in `shared_buffers` is not detected.

>>

>> If the `shared_buffers` is reduced across a restart, and if the

>> combined

>> saved buffer list is larger than the new shared_buffers, Postgres

>> Hibernator continues to read and restore blocks even after

>> `shared_buffers`

>> worth of buffers have been restored.

>

> How about the cases when shared buffers already contain some

> data:

> a. Before Readers start filling shared buffers, if this cluster wishes

> to join replication as a slave and receive the data from master, then

> this utility might need to evict some buffers filled during startup

> phase.

A cluster that wishes to be a replication standby, it would do so

while it's in startup phase. The BlockReaders are launched immediately

on cluster reaching consistent state, at which point, I presume, in

most of the cases, most of the buffers would be unoccupied. Hence

BlockReaders might evict the occupied buffers, which may be a small

fraction of total shared_buffers.

> b. As soon as the server completes startup (reached consistent

> point), it allows new connections which can also use some shared

> buffers before Reader process could use shared buffers or are you

> planing to change the time when users can connect to database.

The BlockReaders are launched immediately after the cluster reaches

consistent state, that is, just about when it is ready to accept

connections. So yes, there is a possibility that the I/O caused by the

BlockReaders may affect the performance of queries executed right at

cluster startup. But given that the performance of those queries was

anyway going to be low (because of empty shared buffers), and that

BlockReaders tend to cause sequential reads, and that by default

there's only one BlockReader active at a time, I think this won't be a

concern in most of the cases. By the time the shared buffers start

getting filled up, the buffer replacement strategy will evict any

buffers populated by BlockReaders if they are not used by the normal

queries.

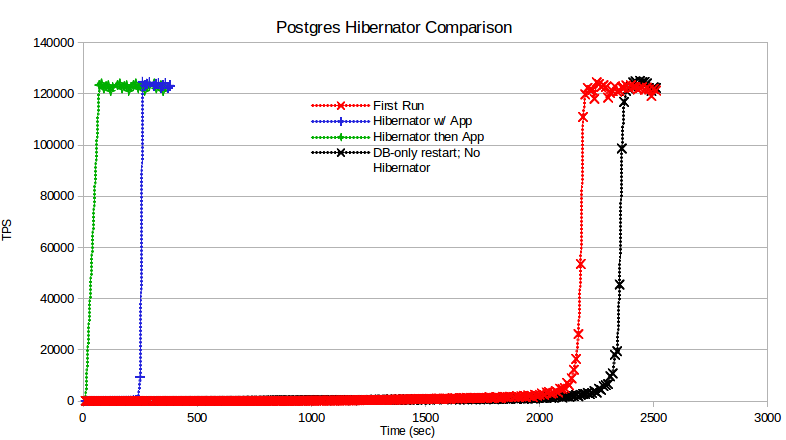

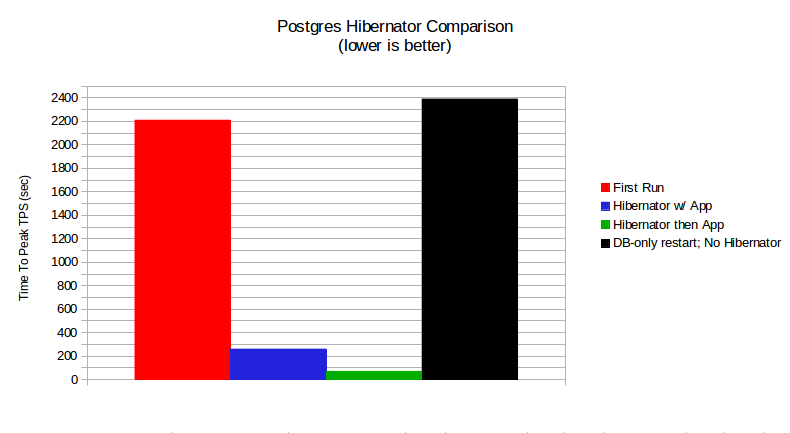

In the 'Sample Runs' section of my blog [1], I compared the cases

'Hibernator w/ App' and 'Hibernator then App', which demonstrate that

launching application load while the BlockReaders are active does

cause performance of both to be impacted by each other. But overall

it's a net win for application performance.

> I am not sure if replacing shared buffer contents in such cases can

> always be considered useful.

IMHO, all of these caveats, would affect a very small fraction of

use-cases and are eclipsed by the benefits this extension provides in

normal cases.

[1]: http://gurjeet.singh.im/blog/2014/04/30/postgres-hibernator-reduce-planned-database-down-times/

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Cc: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-05-29 04:12:27 |

| Message-ID: | CAA4eK1L7KmCaf_9wOoK2bYj4V4hNs53aTxqc9sC-qS7JuXmhPw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, May 28, 2014 at 5:30 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> On Wed, May 28, 2014 at 2:15 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

wrote:

> > How about the cases when shared buffers already contain some

> > data:

> > a. Before Readers start filling shared buffers, if this cluster wishes

> > to join replication as a slave and receive the data from master, then

> > this utility might need to evict some buffers filled during startup

> > phase.

>

> A cluster that wishes to be a replication standby, it would do so

> while it's in startup phase. The BlockReaders are launched immediately

> on cluster reaching consistent state, at which point, I presume, in

> most of the cases, most of the buffers would be unoccupied.

Even to reach consistent state, it might need to get the records

from master (example to get to STANDBY_SNAPSHOT_READY state).

> Hence

> BlockReaders might evict the occupied buffers, which may be a small

> fraction of total shared_buffers.

Yes, but I think still it depends on how much redo replay happens

on different pages.

> > b. As soon as the server completes startup (reached consistent

> > point), it allows new connections which can also use some shared

> > buffers before Reader process could use shared buffers or are you

> > planing to change the time when users can connect to database.

>

> The BlockReaders are launched immediately after the cluster reaches

> consistent state, that is, just about when it is ready to accept

> connections. So yes, there is a possibility that the I/O caused by the

> BlockReaders may affect the performance of queries executed right at

> cluster startup. But given that the performance of those queries was

> anyway going to be low (because of empty shared buffers), and that

> BlockReaders tend to cause sequential reads, and that by default

> there's only one BlockReader active at a time, I think this won't be a

> concern in most of the cases. By the time the shared buffers start

> getting filled up, the buffer replacement strategy will evict any

> buffers populated by BlockReaders if they are not used by the normal

> queries.

Even Block Readers might need to evict buffers filled by user

queries or by itself in which case there is chance of contention, but

again all these are quite rare scenario's.

> > I am not sure if replacing shared buffer contents in such cases can

> > always be considered useful.

>

> IMHO, all of these caveats, would affect a very small fraction of

> use-cases and are eclipsed by the benefits this extension provides in

> normal cases.

I agree with you that there are only few corner cases where evicting

shared buffers by this utility would harm, but was wondering if we could

even save those, say if it would only use available free buffers. I think

currently there is no such interface and inventing a new interface for this

case doesn't seem to reasonable unless we see any other use case of

such a interface.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-05-29 11:52:56 |

| Message-ID: | CABwTF4UHKb7jnpPNeB0iJ63OX1GzzEoh0EsYE5K5FMfRd0ei+g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On May 29, 2014 12:12 AM, "Amit Kapila" <amit(dot)kapila16(at)gmail(dot)com> wrote:

>

> I agree with you that there are only few corner cases where evicting

> shared buffers by this utility would harm, but was wondering if we could

> even save those, say if it would only use available free buffers. I think

> currently there is no such interface and inventing a new interface for

this

> case doesn't seem to reasonable unless we see any other use case of

> such a interface.

+1

| From: | Josh Kupershmidt <schmiddy(at)gmail(dot)com> |

|---|---|

| To: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Cc: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-05-30 21:33:26 |

| Message-ID: | CAK3UJRGKOzx_pO+QESNKncHRVZeaNfeueJDM+L41aKcKNogPqw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, May 27, 2014 at 10:01 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> When the Postgres server is being stopped/shut down, the `Buffer

> Saver` scans the

> shared-buffers of Postgres, and stores the unique block identifiers of

> each cached

> block to the disk. This information is saved under the `$PGDATA/pg_hibernator/`

> directory. For each of the database whose blocks are resident in shared buffers,

> one file is created; for eg.: `$PGDATA/pg_hibernator/2.postgres.save`.

This file-naming convention seems a bit fragile. For example, on my

filesystem (HFS) if I create a database named "foo / bar", I'll get a

complaint like:

ERROR: could not open "pg_hibernator/5.foo / bar.save": No such file

or directory

during shutdown.

Josh

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Josh Kupershmidt <schmiddy(at)gmail(dot)com> |

| Cc: | PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-02 21:49:37 |

| Message-ID: | CABwTF4XGbpgwmkKt6ezSFVnpF11KmAg5m969twkGoDhnPj-QLA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, May 30, 2014 at 5:33 PM, Josh Kupershmidt <schmiddy(at)gmail(dot)com> wrote:

> On Tue, May 27, 2014 at 10:01 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

>

>> When the Postgres server is being stopped/shut down, the `Buffer

>> Saver` scans the

>> shared-buffers of Postgres, and stores the unique block identifiers of

>> each cached

>> block to the disk. This information is saved under the `$PGDATA/pg_hibernator/`

>> directory. For each of the database whose blocks are resident in shared buffers,

>> one file is created; for eg.: `$PGDATA/pg_hibernator/2.postgres.save`.

>

> This file-naming convention seems a bit fragile. For example, on my

> filesystem (HFS) if I create a database named "foo / bar", I'll get a

> complaint like:

>

> ERROR: could not open "pg_hibernator/5.foo / bar.save": No such file

> or directory

>

> during shutdown.

Thanks for the report. I have reworked the file naming, and now the

save-file name is simply '<integer>.save', so the name of a database

does not affect the file name on disk. Instead, the null-terminated

database name is now written in the file, and read back for use when

restoring the buffers.

Attached is the new version of pg_hibernator, with updated code and README.

Just a heads up for anyone who might have read/reviewed previous

version's code, there's some unrelated trivial code and Makefile

changes as well in this version, which can be easily spotted by a

`diff -r`.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| Attachment | Content-Type | Size |

|---|---|---|

| pg_hibernator.v2.tgz | application/x-gzip | 13.1 KB |

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-03 11:57:34 |

| Message-ID: | CA+TgmoZtaLPinLC2nrXz2xrt_MJKK_m3VCNP8KtN0+pymc7pMg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, May 29, 2014 at 12:12 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>> IMHO, all of these caveats, would affect a very small fraction of

>> use-cases and are eclipsed by the benefits this extension provides in

>> normal cases.

>

> I agree with you that there are only few corner cases where evicting

> shared buffers by this utility would harm, but was wondering if we could

> even save those, say if it would only use available free buffers. I think

> currently there is no such interface and inventing a new interface for this

> case doesn't seem to reasonable unless we see any other use case of

> such a interface.

It seems like it would be best to try to do this at cluster startup

time, rather than once recovery has reached consistency. Of course,

that might mean doing it with a single process, which could have its

own share of problems. But I'm somewhat inclined to think that if

recovery has already run for a significant period of time, the blocks

that recovery has brought into shared_buffers are more likely to be

useful than whatever pg_hibernate would load.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-03 12:13:35 |

| Message-ID: | CABwTF4XAQ+C7ABieDNkmiBwiw0tm4nL0iXSHiY+5UevW8r1sNA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jun 3, 2014 at 7:57 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> On Thu, May 29, 2014 at 12:12 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>>> IMHO, all of these caveats, would affect a very small fraction of

>>> use-cases and are eclipsed by the benefits this extension provides in

>>> normal cases.

>>

>> I agree with you that there are only few corner cases where evicting

>> shared buffers by this utility would harm, but was wondering if we could

>> even save those, say if it would only use available free buffers. I think

>> currently there is no such interface and inventing a new interface for this

>> case doesn't seem to reasonable unless we see any other use case of

>> such a interface.

>

> It seems like it would be best to try to do this at cluster startup

> time, rather than once recovery has reached consistency. Of course,

> that might mean doing it with a single process, which could have its

> own share of problems. But I'm somewhat inclined to think that if

> recovery has already run for a significant period of time, the blocks

> that recovery has brought into shared_buffers are more likely to be

> useful than whatever pg_hibernate would load.

I am not absolutely sure of the order of execution between recovery

process and the BGWorker, but ...

For sizeable shared_buffers size, the restoration of the shared

buffers can take several seconds. I have a feeling the users wouldn't

like their master database take up to a few minutes to start accepting

connections. From my tests [1], " In the 'App after Hibernator' [case]

... This took 70 seconds for reading the ~4 GB database."

[1]: http://gurjeet.singh.im/blog/2014/04/30/postgres-hibernator-reduce-planned-database-down-times/

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-03 14:56:42 |

| Message-ID: | CABwTF4XosMr9VTOSx6999OOnrzK4vycT3+27DVubdBD7rcRLRw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jun 3, 2014 at 8:13 AM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> On Tue, Jun 3, 2014 at 7:57 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

>> On Thu, May 29, 2014 at 12:12 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>>>> IMHO, all of these caveats, would affect a very small fraction of

>>>> use-cases and are eclipsed by the benefits this extension provides in

>>>> normal cases.

>>>

>>> I agree with you that there are only few corner cases where evicting

>>> shared buffers by this utility would harm, but was wondering if we could

>>> even save those, say if it would only use available free buffers. I think

>>> currently there is no such interface and inventing a new interface for this

>>> case doesn't seem to reasonable unless we see any other use case of

>>> such a interface.

>>

>> It seems like it would be best to try to do this at cluster startup

>> time, rather than once recovery has reached consistency. Of course,

>> that might mean doing it with a single process, which could have its

>> own share of problems. But I'm somewhat inclined to think that if

Currently pg_hibernator uses ReadBufferExtended() API, and AIUI, that

API requires a database connection//shared-memory attachment, and that

a backend process cannot switch between databases after the initial

connection.

>> own share of problems. But I'm somewhat inclined to think that if

>> recovery has already run for a significant period of time, the blocks

>> that recovery has brought into shared_buffers are more likely to be

>> useful than whatever pg_hibernate would load.

The applications that connect to a standby may have a different access

pattern than the applications that are operating on the master

database. So the buffers that are being restored by startup process

may not be relevant to the workload on the standby.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 04:54:13 |

| Message-ID: | CAA4eK1KVm+cwTtvV_v0WBksUaus228sSX3O9HbF+g8COzhy6zA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jun 3, 2014 at 5:43 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> On Tue, Jun 3, 2014 at 7:57 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> > It seems like it would be best to try to do this at cluster startup

> > time, rather than once recovery has reached consistency. Of course,

> > that might mean doing it with a single process, which could have its

> > own share of problems. But I'm somewhat inclined to think that if

> > recovery has already run for a significant period of time, the blocks

> > that recovery has brought into shared_buffers are more likely to be

> > useful than whatever pg_hibernate would load.

>

> I am not absolutely sure of the order of execution between recovery

> process and the BGWorker, but ...

>

> For sizeable shared_buffers size, the restoration of the shared

> buffers can take several seconds.

Incase of recovery, the shared buffers saved by this utility are

from previous shutdown which doesn't seem to be of more use

than buffers loaded by recovery.

> I have a feeling the users wouldn't

> like their master database take up to a few minutes to start accepting

> connections.

I think this is fair point and to address this we can have an option to

decide when to load buffer's and have default value as load before

recovery.

> Currently pg_hibernator uses ReadBufferExtended() API, and AIUI, that

> API requires a database connection//shared-memory attachment, and that

> a backend process cannot switch between databases after the initial

> connection.

If recovery can load the buffer's to apply WAL, why can't it be done with

pg_hibernator. Can't we use ReadBufferWithoutRelcache() to achieve

the purpose of pg_hibernator?

One other point:

> Note that the BuffersSaver process exists at all times, even when this

> parameter is set to `false`. This is to allow the DBA to enable/disable

the

> extension without having to restart the server. The BufferSaver process

> checks this parameter during server startup and right before shutdown, and

> honors this parameter's value at that time.

Why can't it be done when user register's the extension by using dynamic

background facility "RegisterDynamicBackgroundWorker"?

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Andres Freund <andres(at)2ndquadrant(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Gurjeet Singh <gurjeet(at)singh(dot)im>, Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 06:08:48 |

| Message-ID: | 20140604060848.GX24145@awork2.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2014-06-04 10:24:13 +0530, Amit Kapila wrote:

> On Tue, Jun 3, 2014 at 5:43 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> > On Tue, Jun 3, 2014 at 7:57 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> > > It seems like it would be best to try to do this at cluster startup

> > > time, rather than once recovery has reached consistency. Of course,

> > > that might mean doing it with a single process, which could have its

> > > own share of problems. But I'm somewhat inclined to think that if

> > > recovery has already run for a significant period of time, the blocks

> > > that recovery has brought into shared_buffers are more likely to be

> > > useful than whatever pg_hibernate would load.

> >

> > I am not absolutely sure of the order of execution between recovery

> > process and the BGWorker, but ...

> >

> > For sizeable shared_buffers size, the restoration of the shared

> > buffers can take several seconds.

>

> Incase of recovery, the shared buffers saved by this utility are

> from previous shutdown which doesn't seem to be of more use

> than buffers loaded by recovery.

Why? The server might have been queried if it's a hot standby one?

Greetings,

Andres Freund

--

Andres Freund http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)2ndquadrant(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 13:51:36 |

| Message-ID: | CA+TgmoahPkD4=S8JkdOShwL_ot_LD2Hvs6246i1oVaKpz3SZzw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 4, 2014 at 2:08 AM, Andres Freund <andres(at)2ndquadrant(dot)com> wrote:

> On 2014-06-04 10:24:13 +0530, Amit Kapila wrote:

>> On Tue, Jun 3, 2014 at 5:43 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

>> > On Tue, Jun 3, 2014 at 7:57 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

>> > > It seems like it would be best to try to do this at cluster startup

>> > > time, rather than once recovery has reached consistency. Of course,

>> > > that might mean doing it with a single process, which could have its

>> > > own share of problems. But I'm somewhat inclined to think that if

>> > > recovery has already run for a significant period of time, the blocks

>> > > that recovery has brought into shared_buffers are more likely to be

>> > > useful than whatever pg_hibernate would load.

>> >

>> > I am not absolutely sure of the order of execution between recovery

>> > process and the BGWorker, but ...

>> >

>> > For sizeable shared_buffers size, the restoration of the shared

>> > buffers can take several seconds.

>>

>> Incase of recovery, the shared buffers saved by this utility are

>> from previous shutdown which doesn't seem to be of more use

>> than buffers loaded by recovery.

>

> Why? The server might have been queried if it's a hot standby one?

I think that's essentially the same point Amit is making. Gurjeet is

arguing for reloading the buffers from the previous shutdown at end of

recovery; IIUC, Amit, you, and I all think this isn't a good idea.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Andres Freund <andres(at)2ndquadrant(dot)com> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 13:56:57 |

| Message-ID: | 20140604135657.GA10482@alap3.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2014-06-04 09:51:36 -0400, Robert Haas wrote:

> On Wed, Jun 4, 2014 at 2:08 AM, Andres Freund <andres(at)2ndquadrant(dot)com> wrote:

> > On 2014-06-04 10:24:13 +0530, Amit Kapila wrote:

> >> Incase of recovery, the shared buffers saved by this utility are

> >> from previous shutdown which doesn't seem to be of more use

> >> than buffers loaded by recovery.

> >

> > Why? The server might have been queried if it's a hot standby one?

>

> I think that's essentially the same point Amit is making. Gurjeet is

> arguing for reloading the buffers from the previous shutdown at end of

> recovery; IIUC, Amit, you, and I all think this isn't a good idea.

I think I am actually arguing for Gurjeet's position. If the server is

actively being queried (i.e. hot_standby=on and actually used for

queries) it's quite reasonable to expect that shared_buffers has lots of

content that is *not* determined by WAL replay.

There's not that much read IO going on during WAL replay anyway - after

a crash/start from a restartpoint most of it is loaded via full page

anyway. So it's only disadvantageous to fault in pages via pg_hibernate

if that causes pages that already have been read in via FPIs to be

thrown out.

Greetings,

Andres Freund

--

Andres Freund http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)2ndquadrant(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 15:21:10 |

| Message-ID: | CAA4eK1JNO0JkwPHivFAUTb7sp6C7w+A8p0gxDL3qansdN+GqVg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 4, 2014 at 7:26 PM, Andres Freund <andres(at)2ndquadrant(dot)com>

wrote:

> On 2014-06-04 09:51:36 -0400, Robert Haas wrote:

> > On Wed, Jun 4, 2014 at 2:08 AM, Andres Freund <andres(at)2ndquadrant(dot)com>

wrote:

> > > On 2014-06-04 10:24:13 +0530, Amit Kapila wrote:

> > >> Incase of recovery, the shared buffers saved by this utility are

> > >> from previous shutdown which doesn't seem to be of more use

> > >> than buffers loaded by recovery.

> > >

> > > Why? The server might have been queried if it's a hot standby one?

Consider the case, crash (force kill or some other way) occurs when

BGSaver is saving the buffers, now I think it is possible that it has

saved partial information (information about some buffers is correct

and others is missing) and it is also possible by that time checkpoint

record is not written (which means recovery will start from previous

restart point). So whats going to happen is that pg_hibernate might

load some less used buffers/blocks (which have lower usage count)

and WAL replayed blocks will be sacrificed. So the WAL data from

previous restart point and some more due to delay in start of

standby (changes occured in master during that time) will be

sacrificed.

Another case is of standalone server in which case there is always

high chance that blocks recovered by recovery are the active one's.

Now I agree that case of standalone servers is less, but still some

small applications might be using it. Also I think same is true if

the crashed server is master.

> > I think that's essentially the same point Amit is making. Gurjeet is

> > arguing for reloading the buffers from the previous shutdown at end of

> > recovery; IIUC, Amit, you, and I all think this isn't a good idea.

>

> I think I am actually arguing for Gurjeet's position. If the server is

> actively being queried (i.e. hot_standby=on and actually used for

> queries) it's quite reasonable to expect that shared_buffers has lots of

> content that is *not* determined by WAL replay.

Yes, that's quite possible, however there can be situations where it

is not true as explained above.

> There's not that much read IO going on during WAL replay anyway - after

> a crash/start from a restartpoint most of it is loaded via full page

> anyway.

> So it's only disadvantageous to fault in pages via pg_hibernate

> if that causes pages that already have been read in via FPIs to be

> thrown out.

So for such cases, pages loaded by pg_hibernate turn out to be loss.

Overall I think there can be both kind of cases when it is beneficial

to load buffers after recovery and before recovery, thats why I

mentioned above that either it can be a parameter from user to

decide the same or may be we can have a new API which will

load buffers by BGworker without evicting any existing buffer

(use buffers from free list only).

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)2ndquadrant(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 18:50:39 |

| Message-ID: | CA+TgmoaqK7RCOK9NU8QUgbZaDLUDZqyRNdHWeQpXHihHvpAjGg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 4, 2014 at 9:56 AM, Andres Freund <andres(at)2ndquadrant(dot)com> wrote:

> On 2014-06-04 09:51:36 -0400, Robert Haas wrote:

>> On Wed, Jun 4, 2014 at 2:08 AM, Andres Freund <andres(at)2ndquadrant(dot)com> wrote:

>> > On 2014-06-04 10:24:13 +0530, Amit Kapila wrote:

>> >> Incase of recovery, the shared buffers saved by this utility are

>> >> from previous shutdown which doesn't seem to be of more use

>> >> than buffers loaded by recovery.

>> >

>> > Why? The server might have been queried if it's a hot standby one?

>>

>> I think that's essentially the same point Amit is making. Gurjeet is

>> arguing for reloading the buffers from the previous shutdown at end of

>> recovery; IIUC, Amit, you, and I all think this isn't a good idea.

>

> I think I am actually arguing for Gurjeet's position. If the server is

> actively being queried (i.e. hot_standby=on and actually used for

> queries) it's quite reasonable to expect that shared_buffers has lots of

> content that is *not* determined by WAL replay.

>

> There's not that much read IO going on during WAL replay anyway - after

> a crash/start from a restartpoint most of it is loaded via full page

> anyway. So it's only disadvantageous to fault in pages via pg_hibernate

> if that causes pages that already have been read in via FPIs to be

> thrown out.

The thing I was concerned about is that the system might have been in

recovery for months. What was hot at the time the base backup was

taken seems like a poor guide to what will be hot at the time of

promotion. Consider a history table, for example: the pages at the

end, which have just been written, are much more likely to be useful

than anything earlier.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Andres Freund <andres(at)2ndquadrant(dot)com> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-04 18:52:44 |

| Message-ID: | 20140604185244.GA785@awork2.anarazel.de |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 2014-06-04 14:50:39 -0400, Robert Haas wrote:

> The thing I was concerned about is that the system might have been in

> recovery for months. What was hot at the time the base backup was

> taken seems like a poor guide to what will be hot at the time of

> promotion. Consider a history table, for example: the pages at the

> end, which have just been written, are much more likely to be useful

> than anything earlier.

I'd assumed that the hibernation files would simply be excluded from the

basebackup...

Greetings,

Andres Freund

--

Andres Freund http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Andres Freund <andres(at)2ndquadrant(dot)com> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-05 12:09:07 |

| Message-ID: | CABwTF4VFqZgLgdTeRZ6ToJ3LdCwSvy9iddCYQSjxE=2=tx7G+Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 4, 2014 at 12:54 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> On Tue, Jun 3, 2014 at 5:43 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

>>

>> For sizeable shared_buffers size, the restoration of the shared

>> buffers can take several seconds.

>

> Incase of recovery, the shared buffers saved by this utility are

> from previous shutdown which doesn't seem to be of more use

> than buffers loaded by recovery.

I feel the need to enumerate the recovery scenarios we're talking

about so that we're all on the same page.

1) Hot backup (cp/rsync/pg_basebackup/.. while the master was running)

followed by

1a) recovery using archives or streaming replication.

1a.i) database in hot-standby mode

1a.ii) database not in hot-standby mode, i.e. it's in warm-standby mode.

1b) minimal recovery, that is, recover only the WAL available in

pg_xlog, then come online.

2) Cold backup of a crashed master, followed by startup of the copy

(causing crash recovery; IMHO same as case 1b above.).

3) Cold backup of clean-shutdown master, followed by startup of the

copy (no recovery).

In cases 1.x there won't be any save-files (*), because the

BlockReader processes remove their respective save-file when they are

done restoring the buffers, So the hot/warm-standby created thus will

not inherit the save-files, and hence post-recovery will not cause any

buffer restores.

Case 2 also won't cause any buffer restores because the save-files are

created only on clean shutdowons; not on a crash or immediate

shutdown.

Case 3, is the sweet spot of pg_hibernator. It will save buffer-list

on shutdown, and restore them when the backup-copy is started

(provided pg_hibernator is installed there).

(*) If a hot-backup is taken immediately after database comes online,

since BlockReaders may still be running and not have deleted the

save-files, the save-files may end up in backup, and hence cause the

recovery-time conflicts we're talking about. This should be rare in

practice, and even when it does happen, at worst it will affect the

initial performance of the cluster.

>> I have a feeling the users wouldn't

>> like their master database take up to a few minutes to start accepting

>> connections.

>

> I think this is fair point and to address this we can have an option to

> decide when to load buffer's and have default value as load before

> recovery.

Given the above description, I don't think crash/archive recovery is a

concern anymore. But if that corner case is still a concern, I

wouldn't favour making recovery slow by default, and make users of

pg_hibernator pay for choosing to use the extension. I'd prefer the

user explicitly ask for a behaviour that makes startups slow.

> One other point:

>> Note that the BuffersSaver process exists at all times, even when this

>> parameter is set to `false`. This is to allow the DBA to enable/disable

>> the

>> extension without having to restart the server. The BufferSaver process

>> checks this parameter during server startup and right before shutdown, and

>> honors this parameter's value at that time.

>

> Why can't it be done when user register's the extension by using dynamic

> background facility "RegisterDynamicBackgroundWorker"?

There's no user interface to this extension except for the 3 GUC

parameters; not even CREATE EXTENSION. The DBA is expected to append

this extension's name in shared_preload_libraries.

Since this extension declares one of its parameters PGC_POSTMASTER, it

can't be loaded via the SQL 'LOAD ' command.

postgres=# load 'pg_hibernator';

FATAL: cannot create PGC_POSTMASTER variables after startup

FATAL: cannot create PGC_POSTMASTER variables after startup

The connection to the server was lost. Attempting reset: Succeeded.

Best regards,

PS: I was out sick yesterday, so couldn't respond promptly.

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Andres Freund <andres(at)2ndquadrant(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-05 12:22:44 |

| Message-ID: | CABwTF4VtGN6654OVML9Nb9ktbJ4UD-J27kW2NKwwPF7jRqmjAg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 4, 2014 at 2:52 PM, Andres Freund <andres(at)2ndquadrant(dot)com> wrote:

> On 2014-06-04 14:50:39 -0400, Robert Haas wrote:

>> The thing I was concerned about is that the system might have been in

>> recovery for months. What was hot at the time the base backup was

>> taken seems like a poor guide to what will be hot at the time of

>> promotion. Consider a history table, for example: the pages at the

>> end, which have just been written, are much more likely to be useful

>> than anything earlier.

>

> I'd assumed that the hibernation files would simply be excluded from the

> basebackup...

Yes, they will be excluded, provided the BlockReader processes have

finished, because each BlockReader unlinks its save-file after it is

done restoring buffers listed in it.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Andres Freund <andres(at)2ndquadrant(dot)com>, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-05 12:32:09 |

| Message-ID: | CABwTF4Xh4zF5xhfgCZCpDjLpt21yhegO2xYZTcf2uV++okfrUw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jun 4, 2014 at 2:50 PM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> The thing I was concerned about is that the system might have been in

> recovery for months. What was hot at the time the base backup was

> taken seems like a poor guide to what will be hot at the time of

> promotion. Consider a history table, for example: the pages at the

> end, which have just been written, are much more likely to be useful

> than anything earlier.

I think you are specifically talking about a warm-standby that runs

recovery for months before being brought online. As described in my

response to Amit, if the base backup used to create that standby was

taken after the BlockReaders had restored the buffers (which should

complete within few minutes of startup, even for large databases),

then there's no concern since the base backup wouldn't contain the

save-files.

If it's a hot-standby, the restore process would start as soon as the

database starts accepting connections, finish soon after, and get

completely out of the way of the normal recovery process. At which

point the buffers populated by the recovery would compete only with

the buffers being requested by backends, which is the normal

behaviour.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Andres Freund <andres(at)2ndquadrant(dot)com> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-06 03:32:41 |

| Message-ID: | CAA4eK1LOBdnLX+5=WOGP=OVL2s5GVK3FmbCD8BZRQb4XEpNh+w@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Jun 5, 2014 at 5:39 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

>

> On Wed, Jun 4, 2014 at 12:54 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

wrote:

> > On Tue, Jun 3, 2014 at 5:43 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> >>

> >> For sizeable shared_buffers size, the restoration of the shared

> >> buffers can take several seconds.

> >

> > Incase of recovery, the shared buffers saved by this utility are

> > from previous shutdown which doesn't seem to be of more use

> > than buffers loaded by recovery.

>

> I feel the need to enumerate the recovery scenarios we're talking

> about so that we're all on the same page.

>

> 1) Hot backup (cp/rsync/pg_basebackup/.. while the master was running)

> followed by

> 1a) recovery using archives or streaming replication.

> 1a.i) database in hot-standby mode

> 1a.ii) database not in hot-standby mode, i.e. it's in warm-standby

mode.

> 1b) minimal recovery, that is, recover only the WAL available in

> pg_xlog, then come online.

>

> 2) Cold backup of a crashed master, followed by startup of the copy

> (causing crash recovery; IMHO same as case 1b above.).

>

> 3) Cold backup of clean-shutdown master, followed by startup of the

> copy (no recovery).

>

> In cases 1.x there won't be any save-files (*), because the

> BlockReader processes remove their respective save-file when they are

> done restoring the buffers, So the hot/warm-standby created thus will

> not inherit the save-files, and hence post-recovery will not cause any

> buffer restores.

>

> Case 2 also won't cause any buffer restores because the save-files are

> created only on clean shutdowons; not on a crash or immediate

> shutdown.

How do you ensure that buffers are saved only on clean shutdown?

Buffer saver process itself can crash while saving or restoring

buffers.

IIUC on shutdown request, postmaster will send signal to BG Saver

and BG Saver will save the buffers and then postmaster will send

signal to checkpointer to shutdown. So before writing Checkpoint

record, BG Saver can crash (it might have saved half the buffers)

or may BG saver saves buffers, but checkpointer crashes (due to

power outage or any such thing).

Another thing is don't you want to handle SIGQUIT signal in bg saver?

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Andres Freund <andres(at)2ndquadrant(dot)com> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-06 11:04:29 |

| Message-ID: | CABwTF4UZrAZNu6jto6Ekz75mNK32AbvrGSaMMVj01e8dEP54uA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Jun 5, 2014 at 11:32 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> Another thing is don't you want to handle SIGQUIT signal in bg saver?

I think bgworker_quickdie registered in StartBackgroundWorker() serves

the purpose just fine.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Andres Freund <andres(at)2ndquadrant(dot)com> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-06 12:01:09 |

| Message-ID: | CABwTF4UMUiA79vtdyrUx5=gb0qSpX3E286jWFwwbSZOw-_EvUA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Jun 5, 2014 at 11:32 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> On Thu, Jun 5, 2014 at 5:39 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

>>

>> > On Tue, Jun 3, 2014 at 5:43 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

>> Case 2 also won't cause any buffer restores because the save-files are

>> created only on clean shutdowons; not on a crash or immediate

>> shutdown.

>

> How do you ensure that buffers are saved only on clean shutdown?

Postmaster sends SIGTERM only in "smart" or "fast" shutdown requests.

> Buffer saver process itself can crash while saving or restoring

> buffers.

True. That may lead to partial list of buffers being saved. And the

code in Reader process tries hard to read only valid data, and punts

at the first sight of data that doesn't make sense or on ERROR raised

from Postgres API call.

> IIUC on shutdown request, postmaster will send signal to BG Saver

> and BG Saver will save the buffers and then postmaster will send

> signal to checkpointer to shutdown. So before writing Checkpoint

> record, BG Saver can crash (it might have saved half the buffers)

Case handled as described above.

> or may BG saver saves buffers, but checkpointer crashes (due to

> power outage or any such thing).

Checkpointer process' crash seems to be irrelevant to Postgres

Hibernator's workings.

I think you are trying to argue the wording in my claim "save-files

are created only on clean shutdowons; not on a crash or immediate

shutdown", by implying that a crash may occur at any time during and

after the BufferSaver processing. I agree the wording can be improved.

How about

... save-files are created only when Postgres is requested to shutdown

in normal (smart or fast) modes.

Note that I am leaving out the mention of crash.

Best regards,

--

Gurjeet Singh http://gurjeet.singh.im/

| From: | Jim Nasby <jim(at)nasby(dot)net> |

|---|---|

| To: | Andres Freund <andres(at)2ndquadrant(dot)com>, Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, Gurjeet Singh <gurjeet(at)singh(dot)im>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-06 18:51:59 |

| Message-ID: | 53920DCF.3040503@nasby.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 6/4/14, 8:56 AM, Andres Freund wrote:

> On 2014-06-04 09:51:36 -0400, Robert Haas wrote:

>> >On Wed, Jun 4, 2014 at 2:08 AM, Andres Freund<andres(at)2ndquadrant(dot)com> wrote:

>>> > >On 2014-06-04 10:24:13 +0530, Amit Kapila wrote:

>>>> > >>Incase of recovery, the shared buffers saved by this utility are

>>>> > >>from previous shutdown which doesn't seem to be of more use

>>>> > >>than buffers loaded by recovery.

>>> > >

>>> > >Why? The server might have been queried if it's a hot standby one?

>> >

>> >I think that's essentially the same point Amit is making. Gurjeet is

>> >arguing for reloading the buffers from the previous shutdown at end of

>> >recovery; IIUC, Amit, you, and I all think this isn't a good idea.

> I think I am actually arguing for Gurjeet's position. If the server is

> actively being queried (i.e. hot_standby=on and actually used for

> queries) it's quite reasonable to expect that shared_buffers has lots of

> content that is*not* determined by WAL replay.

Perhaps instead of trying to get data actually into shared buffers it would be better to just advise the kernel that we think we're going to need it? ISTM it's reasonably fast to pull data from disk cache into shared buffers.

On a related note, what I really wish for is the ability to restore the disk cash after a restart/unmount...

--

Jim C. Nasby, Data Architect jim(at)nasby(dot)net

512.569.9461 (cell) http://jim.nasby.net

| From: | Cédric Villemain <cedric(at)2ndquadrant(dot)com> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Cc: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-07 10:48:29 |

| Message-ID: | 2328330.T2qD6s7k5m@obelix |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Le lundi 3 février 2014 19:18:54 Gurjeet Singh a écrit :

> Possible enhancements:

> - Ability to save/restore only specific databases.

> - Control how many BlockReaders are active at a time; to avoid I/O

> storms. - Be smart about lowered shared_buffers across the restart.

> - Different modes of reading like pg_prewarm does.

> - Include PgFincore functionality, at least for Linux platforms.

Please note that pgfincore is working on any system where PostgreSQL

prefetch is working, exactly like pg_prewarm. This includes linux, BSD and

many unix-like. It *is not* limited to linux.

I never had a single request for windows, but windows does provides an

API for that too (however I have no windows offhand to test).

Another side note is that currently BSD (at least freeBSD) have a more

advanced mincore() syscall than linux and offers a better analysis (dirty

status is known) and they implemented posix_fadvise...

PS:

There is a previous thread about that hibernation feature. Mitsuru IWASAKI

did a patch, and it triggers some interesting discussions.

Some notes in this thread are outdated now, but it's worth having a look

at it:

http://www.postgresql.org/message-id/20110504.231048.113741617.iwasaki@jp.FreeBSD.org

https://commitfest.postgresql.org/action/patch_view?id=549

--

Cédric Villemain +33 (0)6 20 30 22 52

http://2ndQuadrant.fr/

PostgreSQL: Support 24x7 - Développement, Expertise et Formation

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Gurjeet Singh <gurjeet(at)singh(dot)im> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, PGSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Andres Freund <andres(at)2ndquadrant(dot)com> |

| Subject: | Re: Proposing pg_hibernate |

| Date: | 2014-06-08 07:24:28 |

| Message-ID: | CAA4eK1Kue1iPWGnshJZtd5i0ub1M=g1mr-tfZbhdyuoOrHkUGA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Jun 6, 2014 at 5:31 PM, Gurjeet Singh <gurjeet(at)singh(dot)im> wrote:

> On Thu, Jun 5, 2014 at 11:32 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

wrote:

> > Buffer saver process itself can crash while saving or restoring

> > buffers.

>

> True. That may lead to partial list of buffers being saved. And the

> code in Reader process tries hard to read only valid data, and punts

> at the first sight of data that doesn't make sense or on ERROR raised

> from Postgres API call.

Inspite of Reader Process trying hard, I think we should ensure by