Re: [PoC] pgstattuple2: block sampling to reduce physical read

| Lists: | pgsql-hackers |

|---|

| From: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

|---|---|

| To: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-07-23 06:16:27 |

| Message-ID: | 51EE1FBB.2050502@uptime.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

I've been working on new pgstattuple function to allow

block sampling [1] in order to reduce block reads while

scanning a table. A PoC patch is attached.

[1] Re: [RFC] pgstattuple/pgstatindex enhancement

This new function, pgstattuple2(), samples only 3,000 blocks

(which accounts 24MB) from the table randomly, and estimates

several parameters of the entire table.

The function calculates the averages of the samples, estimates

the parameters (averages and SDs), and shows "standard errors

(SE)" to allow estimating status of the table with statistical

approach.

And, of course, it reduces number of physical block reads

while scanning a bigger table.

The following example shows that new pgstattuple2 function

runs x100 faster than the original pgstattuple function with

well-estimated results.

----------------------------------------------

postgres=# select * from pgstattuple('pgbench_accounts');

-[ RECORD 1 ]------+-----------

table_len | 1402642432

tuple_count | 10000000

tuple_len | 1210000000

tuple_percent | 86.27

dead_tuple_count | 182895

dead_tuple_len | 22130295

dead_tuple_percent | 1.58

free_space | 21012328

free_percent | 1.5

Time: 1615.651 ms

postgres=# select * from pgstattuple2('pgbench_accounts');

NOTICE: pgstattuple2: SE tuple_count 2376.47, tuple_len 287552.58,

dead_tuple_count 497.63, dead_tuple_len 60213.08, free_space 289752.38

-[ RECORD 1 ]------+-----------

table_len | 1402642432

tuple_count | 9978074

tuple_len | 1207347074

tuple_percent | 86.08

dead_tuple_count | 187315

dead_tuple_len | 22665208

dead_tuple_percent | 1.62

free_space | 23400431

free_percent | 1.67

Time: 15.026 ms

postgres=#

----------------------------------------------

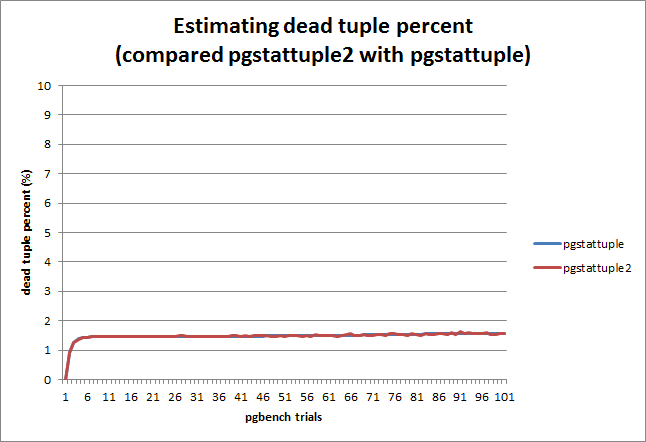

In addition to that, see attached chart to know how pgstattuple2

estimates well during repeating (long-running) pgbench.

I understand that pgbench would generate "random" transactions,

and those update operations might not have any skew over the table,

so estimating table status seems to be easy in this test.

However, I'm still curious to know whether it would work in

"real-world" worklaod.

Is it worth having this? Any comment or suggestion?

Regards,

--

Satoshi Nagayasu <snaga(at)uptime(dot)jp>

Uptime Technologies, LLC. http://www.uptime.jp

| Attachment | Content-Type | Size |

|---|---|---|

| pgstattuple2_v1.diff | text/plain | 11.4 KB |

|

image/png | 10.6 KB |

| From: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-07-23 11:02:44 |

| Message-ID: | 51EE62D4.7020401@2ndQuadrant.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 7/23/13 2:16 AM, Satoshi Nagayasu wrote:

> I've been working on new pgstattuple function to allow

> block sampling [1] in order to reduce block reads while

> scanning a table. A PoC patch is attached.

Take a look at all of the messages linked in

https://commitfest.postgresql.org/action/patch_view?id=778

Jaime and I tried to do what you're working on then, including a random

block sampling mechanism modeled on the stats_target mechanism. We

didn't do that as part of pgstattuple though, which was a mistake.

Noah created some test cases as part of his thorough review that were

not computing the correct results. Getting the results correct for all

of the various types of PostgreSQL tables and indexes ended up being

much harder than the sampling part. See

http://www.postgresql.org/message-id/20120222052747.GE8592@tornado.leadboat.com

in particular for that.

> This new function, pgstattuple2(), samples only 3,000 blocks

> (which accounts 24MB) from the table randomly, and estimates

> several parameters of the entire table.

There should be an input parameter to the function for how much sampling

to do, and if it's possible to make the scale for it to look like

ANALYZE that's helpful too.

I have a project for this summer that includes reviving this topic and

making sure it works on some real-world systems. If you want to work on

this too, I can easily combine that project into what you're doing.

--

Greg Smith 2ndQuadrant US greg(at)2ndQuadrant(dot)com Baltimore, MD

PostgreSQL Training, Services, and 24x7 Support www.2ndQuadrant.com

| From: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

|---|---|

| To: | Greg Smith <greg(at)2ndQuadrant(dot)com> |

| Cc: | PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-09-14 07:18:50 |

| Message-ID: | 52340DDA.9090606@uptime.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/07/23 20:02), Greg Smith wrote:

> On 7/23/13 2:16 AM, Satoshi Nagayasu wrote:

>> I've been working on new pgstattuple function to allow

>> block sampling [1] in order to reduce block reads while

>> scanning a table. A PoC patch is attached.

>

> Take a look at all of the messages linked in

> https://commitfest.postgresql.org/action/patch_view?id=778

>

> Jaime and I tried to do what you're working on then, including a random

> block sampling mechanism modeled on the stats_target mechanism. We

> didn't do that as part of pgstattuple though, which was a mistake.

>

> Noah created some test cases as part of his thorough review that were

> not computing the correct results. Getting the results correct for all

> of the various types of PostgreSQL tables and indexes ended up being

> much harder than the sampling part. See

> http://www.postgresql.org/message-id/20120222052747.GE8592@tornado.leadboat.com

> in particular for that.

Thanks for the info. I have read the previous discussion.

I'm looking forward to seeing more feedback on this approach,

in terms of design and performance improvement.

So, I have submitted this for the next CF.

>> This new function, pgstattuple2(), samples only 3,000 blocks

>> (which accounts 24MB) from the table randomly, and estimates

>> several parameters of the entire table.

>

> There should be an input parameter to the function for how much sampling

> to do, and if it's possible to make the scale for it to look like

> ANALYZE that's helpful too.

>

> I have a project for this summer that includes reviving this topic and

> making sure it works on some real-world systems. If you want to work on

> this too, I can easily combine that project into what you're doing.

Yeah, I'm interested in that. Something can be shared?

Regards,

--

Satoshi Nagayasu <snaga(at)uptime(dot)jp>

Uptime Technologies, LLC. http://www.uptime.jp

| From: | Peter Eisentraut <peter_e(at)gmx(dot)net> |

|---|---|

| To: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

| Cc: | Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-09-15 02:07:43 |

| Message-ID: | 1379210863.19286.29.camel@vanquo.pezone.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

> I'm looking forward to seeing more feedback on this approach,

> in terms of design and performance improvement.

> So, I have submitted this for the next CF.

Your patch fails to build:

pgstattuple.c: In function ‘pgstat_heap_sample’:

pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in this function)

pgstattuple.c:737:13: note: each undeclared identifier is reported only once for each function it appears in

| From: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

|---|---|

| To: | Peter Eisentraut <peter_e(at)gmx(dot)net> |

| Cc: | Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-09-16 04:20:52 |

| Message-ID: | 52368724.6050706@uptime.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/09/15 11:07), Peter Eisentraut wrote:

> On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

>> I'm looking forward to seeing more feedback on this approach,

>> in terms of design and performance improvement.

>> So, I have submitted this for the next CF.

>

> Your patch fails to build:

>

> pgstattuple.c: In function ‘pgstat_heap_sample’:

> pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in this function)

> pgstattuple.c:737:13: note: each undeclared identifier is reported only once for each function it appears in

Thanks for checking. Fixed to eliminate SnapshotNow.

Regards,

--

Satoshi Nagayasu <snaga(at)uptime(dot)jp>

Uptime Technologies, LLC. http://www.uptime.jp

| Attachment | Content-Type | Size |

|---|---|---|

| pgstattuple2_v2.diff | text/plain | 11.6 KB |

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Satoshi Nagayasu <snaga(at)uptime(dot)jp>, Peter Eisentraut <peter_e(at)gmx(dot)net> |

| Cc: | Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-10 22:09:34 |

| Message-ID: | 5257259E.2070103@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 16/09/13 16:20, Satoshi Nagayasu wrote:

> (2013/09/15 11:07), Peter Eisentraut wrote:

>> On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

>>> I'm looking forward to seeing more feedback on this approach,

>>> in terms of design and performance improvement.

>>> So, I have submitted this for the next CF.

>>

>> Your patch fails to build:

>>

>> pgstattuple.c: In function ‘pgstat_heap_sample’:

>> pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in

>> this function)

>> pgstattuple.c:737:13: note: each undeclared identifier is reported

>> only once for each function it appears in

>

> Thanks for checking. Fixed to eliminate SnapshotNow.

>

This seems like a cool idea! I took a quick look, and initally

replicated the sort of improvement you saw:

bench=# explain analyze select * from pgstattuple('pgbench_accounts');

QUERY PLAN

--------------------------------------------------------------------------------

Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

time=786.368..786.369 rows=1 loops=1)

Total runtime: 786.384 ms

(2 rows)

bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

QUERY PLAN

--------------------------------------------------------------------------------

Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

time=12.004..12.005 rows=1 loops=1)

Total runtime: 12.019 ms

(2 rows)

I wondered what sort of difference eliminating caching would make:

$ sudo sysctl -w vm.drop_caches=3

Repeating the above queries:

bench=# explain analyze select * from pgstattuple('pgbench_accounts');

QUERY PLAN

--------------------------------------------------------------------------------

Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

time=9503.774..9503.776 rows=1 loops=1)

Total runtime: 9504.523 ms

(2 rows)

bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

QUERY PLAN

--------------------------------------------------------------------------------

Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

time=12330.630..12330.631 rows=1 loops=1)

Total runtime: 12331.353 ms

(2 rows)

So the sampling code seems *slower* when the cache is completely cold -

is that expected? (I have not looked at how the code works yet - I'll

dive in later if I get a chance)!

Regards

Mark

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Satoshi Nagayasu <snaga(at)uptime(dot)jp>, Peter Eisentraut <peter_e(at)gmx(dot)net> |

| Cc: | Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-10 22:32:13 |

| Message-ID: | 52572AED.3030609@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11/10/13 11:09, Mark Kirkwood wrote:

> On 16/09/13 16:20, Satoshi Nagayasu wrote:

>> (2013/09/15 11:07), Peter Eisentraut wrote:

>>> On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

>>>> I'm looking forward to seeing more feedback on this approach,

>>>> in terms of design and performance improvement.

>>>> So, I have submitted this for the next CF.

>>> Your patch fails to build:

>>>

>>> pgstattuple.c: In function ‘pgstat_heap_sample’:

>>> pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in

>>> this function)

>>> pgstattuple.c:737:13: note: each undeclared identifier is reported

>>> only once for each function it appears in

>> Thanks for checking. Fixed to eliminate SnapshotNow.

>>

> This seems like a cool idea! I took a quick look, and initally

> replicated the sort of improvement you saw:

>

>

> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

> QUERY PLAN

>

> --------------------------------------------------------------------------------

> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

> time=786.368..786.369 rows=1 loops=1)

> Total runtime: 786.384 ms

> (2 rows)

>

> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

> QUERY PLAN

>

> --------------------------------------------------------------------------------

> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

> time=12.004..12.005 rows=1 loops=1)

> Total runtime: 12.019 ms

> (2 rows)

>

>

>

> I wondered what sort of difference eliminating caching would make:

>

> $ sudo sysctl -w vm.drop_caches=3

>

> Repeating the above queries:

>

>

> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

> QUERY PLAN

>

> --------------------------------------------------------------------------------

> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

> time=9503.774..9503.776 rows=1 loops=1)

> Total runtime: 9504.523 ms

> (2 rows)

>

> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

> QUERY PLAN

>

> --------------------------------------------------------------------------------

> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

> time=12330.630..12330.631 rows=1 loops=1)

> Total runtime: 12331.353 ms

> (2 rows)

>

>

> So the sampling code seems *slower* when the cache is completely cold -

> is that expected? (I have not looked at how the code works yet - I'll

> dive in later if I get a chance)!

>

Quietly replying to myself - looking at the code the sampler does 3000

random page reads... I guess this is slower than 163935 (number of pages

in pgbench_accounts) sequential page reads thanks to os readahead on my

type of disk (WD Velociraptor). Tweaking the number of random reads (i.e

the sample size) down helps - but obviously that can impact estimation

accuracy.

Thinking about this a bit more, I guess the elapsed runtime is not the

*only* theng to consider - the sampling code will cause way less

disruption to the os page cache (3000 pages vs possibly lots more than

3000 for reading an entire ralation).

Thoughts?

Cheers

Mark

| From: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

|---|---|

| To: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

| Cc: | Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-11 04:08:37 |

| Message-ID: | 525779C5.2020608@uptime.jp |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

(2013/10/11 7:32), Mark Kirkwood wrote:

> On 11/10/13 11:09, Mark Kirkwood wrote:

>> On 16/09/13 16:20, Satoshi Nagayasu wrote:

>>> (2013/09/15 11:07), Peter Eisentraut wrote:

>>>> On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

>>>>> I'm looking forward to seeing more feedback on this approach,

>>>>> in terms of design and performance improvement.

>>>>> So, I have submitted this for the next CF.

>>>> Your patch fails to build:

>>>>

>>>> pgstattuple.c: In function ‘pgstat_heap_sample’:

>>>> pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in

>>>> this function)

>>>> pgstattuple.c:737:13: note: each undeclared identifier is reported

>>>> only once for each function it appears in

>>> Thanks for checking. Fixed to eliminate SnapshotNow.

>>>

>> This seems like a cool idea! I took a quick look, and initally

>> replicated the sort of improvement you saw:

>>

>>

>> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

>> QUERY PLAN

>>

>> --------------------------------------------------------------------------------

>> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

>> time=786.368..786.369 rows=1 loops=1)

>> Total runtime: 786.384 ms

>> (2 rows)

>>

>> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

>> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

>> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

>> QUERY PLAN

>>

>> --------------------------------------------------------------------------------

>> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

>> time=12.004..12.005 rows=1 loops=1)

>> Total runtime: 12.019 ms

>> (2 rows)

>>

>>

>>

>> I wondered what sort of difference eliminating caching would make:

>>

>> $ sudo sysctl -w vm.drop_caches=3

>>

>> Repeating the above queries:

>>

>>

>> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

>> QUERY PLAN

>>

>> --------------------------------------------------------------------------------

>> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

>> time=9503.774..9503.776 rows=1 loops=1)

>> Total runtime: 9504.523 ms

>> (2 rows)

>>

>> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

>> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

>> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

>> QUERY PLAN

>>

>> --------------------------------------------------------------------------------

>> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

>> time=12330.630..12330.631 rows=1 loops=1)

>> Total runtime: 12331.353 ms

>> (2 rows)

>>

>>

>> So the sampling code seems *slower* when the cache is completely cold -

>> is that expected? (I have not looked at how the code works yet - I'll

>> dive in later if I get a chance)!

Thanks for testing that. It would be very helpful to improve the

performance.

> Quietly replying to myself - looking at the code the sampler does 3000

> random page reads... I guess this is slower than 163935 (number of pages

> in pgbench_accounts) sequential page reads thanks to os readahead on my

> type of disk (WD Velociraptor). Tweaking the number of random reads (i.e

> the sample size) down helps - but obviously that can impact estimation

> accuracy.

>

> Thinking about this a bit more, I guess the elapsed runtime is not the

> *only* theng to consider - the sampling code will cause way less

> disruption to the os page cache (3000 pages vs possibly lots more than

> 3000 for reading an entire ralation).

>

> Thoughts?

I think it could be improved by sorting sample block numbers

*before* physical block reads in order to eliminate random access

on the disk.

pseudo code:

--------------------------------------

for (i=0 ; i<SAMPLE_SIZE ; i++)

{

sample_block[i] = random();

}

qsort(sample_block);

for (i=0 ; i<SAMPLE_SIZE ; i++)

{

buf = ReadBuffer(rel, sample_block[i]);

do_some_stats_stuff(buf);

}

--------------------------------------

I guess it would be helpful for reducing random access thing.

Any comments?

--

Satoshi Nagayasu <snaga(at)uptime(dot)jp>

Uptime Technologies, LLC. http://www.uptime.jp

| From: | Jaime Casanova <jaime(at)2ndquadrant(dot)com> |

|---|---|

| To: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

| Cc: | Satoshi Nagayasu <snaga(at)uptime(dot)jp>, Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndquadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-11 04:33:12 |

| Message-ID: | CAJKUy5g1Kh8Urc1nSUDOyTG_b3opBVjp=7_ZBjgiGdPutee-jQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Oct 10, 2013 at 5:32 PM, Mark Kirkwood

<mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> wrote:

>

> Quietly replying to myself - looking at the code the sampler does 3000

> random page reads...

FWIW, something that bothers me is that there is 3000 random page

reads... i mean, why 3000? how do you get that number as absolute for

good accuracy in every relation? why not a percentage, maybe an

argument to the function?

also the name pgstattuple2, doesn't convince me... maybe you can use

pgstattuple() if you use a second argument (percentage of the sample)

to overload the function

--

Jaime Casanova www.2ndQuadrant.com

Professional PostgreSQL: Soporte 24x7 y capacitación

Phone: +593 4 5107566 Cell: +593 987171157

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

| Cc: | Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-11 04:49:33 |

| Message-ID: | 5257835D.9020100@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11/10/13 17:08, Satoshi Nagayasu wrote:

> (2013/10/11 7:32), Mark Kirkwood wrote:

>> On 11/10/13 11:09, Mark Kirkwood wrote:

>>> On 16/09/13 16:20, Satoshi Nagayasu wrote:

>>>> (2013/09/15 11:07), Peter Eisentraut wrote:

>>>>> On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

>>>>>> I'm looking forward to seeing more feedback on this approach,

>>>>>> in terms of design and performance improvement.

>>>>>> So, I have submitted this for the next CF.

>>>>> Your patch fails to build:

>>>>>

>>>>> pgstattuple.c: In function ‘pgstat_heap_sample’:

>>>>> pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in

>>>>> this function)

>>>>> pgstattuple.c:737:13: note: each undeclared identifier is reported

>>>>> only once for each function it appears in

>>>> Thanks for checking. Fixed to eliminate SnapshotNow.

>>>>

>>> This seems like a cool idea! I took a quick look, and initally

>>> replicated the sort of improvement you saw:

>>>

>>>

>>> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

>>> QUERY PLAN

>>>

>>> --------------------------------------------------------------------------------

>>> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

>>> time=786.368..786.369 rows=1 loops=1)

>>> Total runtime: 786.384 ms

>>> (2 rows)

>>>

>>> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

>>> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

>>> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

>>> QUERY PLAN

>>>

>>> --------------------------------------------------------------------------------

>>> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

>>> time=12.004..12.005 rows=1 loops=1)

>>> Total runtime: 12.019 ms

>>> (2 rows)

>>>

>>>

>>>

>>> I wondered what sort of difference eliminating caching would make:

>>>

>>> $ sudo sysctl -w vm.drop_caches=3

>>>

>>> Repeating the above queries:

>>>

>>>

>>> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

>>> QUERY PLAN

>>>

>>> --------------------------------------------------------------------------------

>>> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

>>> time=9503.774..9503.776 rows=1 loops=1)

>>> Total runtime: 9504.523 ms

>>> (2 rows)

>>>

>>> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

>>> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

>>> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

>>> QUERY PLAN

>>>

>>> --------------------------------------------------------------------------------

>>> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

>>> time=12330.630..12330.631 rows=1 loops=1)

>>> Total runtime: 12331.353 ms

>>> (2 rows)

>>>

>>>

>>> So the sampling code seems *slower* when the cache is completely cold -

>>> is that expected? (I have not looked at how the code works yet - I'll

>>> dive in later if I get a chance)!

> Thanks for testing that. It would be very helpful to improve the

> performance.

>

>> Quietly replying to myself - looking at the code the sampler does 3000

>> random page reads... I guess this is slower than 163935 (number of pages

>> in pgbench_accounts) sequential page reads thanks to os readahead on my

>> type of disk (WD Velociraptor). Tweaking the number of random reads (i.e

>> the sample size) down helps - but obviously that can impact estimation

>> accuracy.

>>

>> Thinking about this a bit more, I guess the elapsed runtime is not the

>> *only* theng to consider - the sampling code will cause way less

>> disruption to the os page cache (3000 pages vs possibly lots more than

>> 3000 for reading an entire ralation).

>>

>> Thoughts?

> I think it could be improved by sorting sample block numbers

> *before* physical block reads in order to eliminate random access

> on the disk.

>

> pseudo code:

> --------------------------------------

> for (i=0 ; i<SAMPLE_SIZE ; i++)

> {

> sample_block[i] = random();

> }

>

> qsort(sample_block);

>

> for (i=0 ; i<SAMPLE_SIZE ; i++)

> {

> buf = ReadBuffer(rel, sample_block[i]);

>

> do_some_stats_stuff(buf);

> }

> --------------------------------------

>

> I guess it would be helpful for reducing random access thing.

>

> Any comments?

Ah yes - that's a good idea (rough patch to your patch attached)!

bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

QUERY PLAN

--------------------------------------------------------------------------------

Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

time=9968.318..9968.319 rows=1 loops=1)

Total runtime: 9968.443 ms

(2 rows)

It would appear that we are now not any worse than a complete sampling...

Cheers

Mark

| Attachment | Content-Type | Size |

|---|---|---|

| pgstattuple.c.diff | text/x-patch | 2.1 KB |

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Jaime Casanova <jaime(at)2ndquadrant(dot)com> |

| Cc: | Satoshi Nagayasu <snaga(at)uptime(dot)jp>, Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndquadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-11 05:02:43 |

| Message-ID: | 52578673.5010800@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11/10/13 17:33, Jaime Casanova wrote:

> On Thu, Oct 10, 2013 at 5:32 PM, Mark Kirkwood

> <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> wrote:

>> Quietly replying to myself - looking at the code the sampler does 3000

>> random page reads...

> FWIW, something that bothers me is that there is 3000 random page

> reads... i mean, why 3000? how do you get that number as absolute for

> good accuracy in every relation? why not a percentage, maybe an

> argument to the function?

Right,

Looking at http://en.wikipedia.org/wiki/Sample_size_determination maybe

it is not such a bad setting - tho 400 or 1000 seem to be good magic

numbers too (if we are gonna punt on single number that is).

Perhaps it should reuse (some of) the code from acquire_sample_rows in

src/commands/analyze.c (we can't use exactly the same logic, as we need

to keep block data together in this case).

Cheers

Mark

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Satoshi Nagayasu <snaga(at)uptime(dot)jp> |

| Cc: | Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndQuadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-15 22:34:49 |

| Message-ID: | 525DC309.4070401@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11/10/13 17:49, Mark Kirkwood wrote:

> On 11/10/13 17:08, Satoshi Nagayasu wrote:

>> (2013/10/11 7:32), Mark Kirkwood wrote:

>>> On 11/10/13 11:09, Mark Kirkwood wrote:

>>>> On 16/09/13 16:20, Satoshi Nagayasu wrote:

>>>>> (2013/09/15 11:07), Peter Eisentraut wrote:

>>>>>> On Sat, 2013-09-14 at 16:18 +0900, Satoshi Nagayasu wrote:

>>>>>>> I'm looking forward to seeing more feedback on this approach,

>>>>>>> in terms of design and performance improvement.

>>>>>>> So, I have submitted this for the next CF.

>>>>>> Your patch fails to build:

>>>>>>

>>>>>> pgstattuple.c: In function ‘pgstat_heap_sample’:

>>>>>> pgstattuple.c:737:13: error: ‘SnapshotNow’ undeclared (first use in

>>>>>> this function)

>>>>>> pgstattuple.c:737:13: note: each undeclared identifier is reported

>>>>>> only once for each function it appears in

>>>>> Thanks for checking. Fixed to eliminate SnapshotNow.

>>>>>

>>>> This seems like a cool idea! I took a quick look, and initally

>>>> replicated the sort of improvement you saw:

>>>>

>>>>

>>>> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

>>>> QUERY PLAN

>>>>

>>>> --------------------------------------------------------------------------------

>>>> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

>>>> time=786.368..786.369 rows=1 loops=1)

>>>> Total runtime: 786.384 ms

>>>> (2 rows)

>>>>

>>>> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

>>>> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

>>>> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

>>>> QUERY PLAN

>>>>

>>>> --------------------------------------------------------------------------------

>>>> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

>>>> time=12.004..12.005 rows=1 loops=1)

>>>> Total runtime: 12.019 ms

>>>> (2 rows)

>>>>

>>>>

>>>>

>>>> I wondered what sort of difference eliminating caching would make:

>>>>

>>>> $ sudo sysctl -w vm.drop_caches=3

>>>>

>>>> Repeating the above queries:

>>>>

>>>>

>>>> bench=# explain analyze select * from pgstattuple('pgbench_accounts');

>>>> QUERY PLAN

>>>>

>>>> --------------------------------------------------------------------------------

>>>> Function Scan on pgstattuple (cost=0.00..0.01 rows=1 width=72) (actual

>>>> time=9503.774..9503.776 rows=1 loops=1)

>>>> Total runtime: 9504.523 ms

>>>> (2 rows)

>>>>

>>>> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

>>>> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

>>>> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

>>>> QUERY PLAN

>>>>

>>>> --------------------------------------------------------------------------------

>>>> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

>>>> time=12330.630..12330.631 rows=1 loops=1)

>>>> Total runtime: 12331.353 ms

>>>> (2 rows)

>>>>

>>>>

>>>> So the sampling code seems *slower* when the cache is completely cold -

>>>> is that expected? (I have not looked at how the code works yet - I'll

>>>> dive in later if I get a chance)!

>> Thanks for testing that. It would be very helpful to improve the

>> performance.

>>

>>> Quietly replying to myself - looking at the code the sampler does 3000

>>> random page reads... I guess this is slower than 163935 (number of pages

>>> in pgbench_accounts) sequential page reads thanks to os readahead on my

>>> type of disk (WD Velociraptor). Tweaking the number of random reads (i.e

>>> the sample size) down helps - but obviously that can impact estimation

>>> accuracy.

>>>

>>> Thinking about this a bit more, I guess the elapsed runtime is not the

>>> *only* theng to consider - the sampling code will cause way less

>>> disruption to the os page cache (3000 pages vs possibly lots more than

>>> 3000 for reading an entire ralation).

>>>

>>> Thoughts?

>> I think it could be improved by sorting sample block numbers

>> *before* physical block reads in order to eliminate random access

>> on the disk.

>>

>> pseudo code:

>> --------------------------------------

>> for (i=0 ; i<SAMPLE_SIZE ; i++)

>> {

>> sample_block[i] = random();

>> }

>>

>> qsort(sample_block);

>>

>> for (i=0 ; i<SAMPLE_SIZE ; i++)

>> {

>> buf = ReadBuffer(rel, sample_block[i]);

>>

>> do_some_stats_stuff(buf);

>> }

>> --------------------------------------

>>

>> I guess it would be helpful for reducing random access thing.

>>

>> Any comments?

> Ah yes - that's a good idea (rough patch to your patch attached)!

>

> bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

> NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

> dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

> QUERY PLAN

>

> --------------------------------------------------------------------------------

> Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

> time=9968.318..9968.319 rows=1 loops=1)

> Total runtime: 9968.443 ms

> (2 rows)

>

Actually - correcting my compare function to sort the blocks in

*increasing* order (doh), gets a better result:

bench=# explain analyze select * from pgstattuple2('pgbench_accounts');

NOTICE: pgstattuple2: SE tuple_count 0.00, tuple_len 0.00,

dead_tuple_count 0.00, dead_tuple_len 0.00, free_space 0.00

QUERY PLAN

------------------------------------------------------------------------------------------------------------------

Function Scan on pgstattuple2 (cost=0.00..0.01 rows=1 width=72) (actual

time=7055.840..7055.841 rows=1 loops=1)

Total runtime: 7055.954 ms

(2 rows)

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Jaime Casanova <jaime(at)2ndquadrant(dot)com> |

| Cc: | Satoshi Nagayasu <snaga(at)uptime(dot)jp>, Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndquadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-15 22:51:28 |

| Message-ID: | 525DC6F0.9060300@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 11/10/13 17:33, Jaime Casanova wrote:

> also the name pgstattuple2, doesn't convince me... maybe you can use

> pgstattuple() if you use a second argument (percentage of the sample)

> to overload the function

+1, that seems much nicer.

Regards

Mark

| From: | Mark Kirkwood <mark(dot)kirkwood(at)catalyst(dot)net(dot)nz> |

|---|---|

| To: | Jaime Casanova <jaime(at)2ndquadrant(dot)com> |

| Cc: | Satoshi Nagayasu <snaga(at)uptime(dot)jp>, Peter Eisentraut <peter_e(at)gmx(dot)net>, Greg Smith <greg(at)2ndquadrant(dot)com>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PoC] pgstattuple2: block sampling to reduce physical read |

| Date: | 2013-10-21 22:39:18 |

| Message-ID: | 5265AD16.3090704@catalyst.net.nz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 16/10/13 11:51, Mark Kirkwood wrote:

> On 11/10/13 17:33, Jaime Casanova wrote:

>> also the name pgstattuple2, doesn't convince me... maybe you can use

>> pgstattuple() if you use a second argument (percentage of the sample)

>> to overload the function

>

> +1, that seems much nicer.

>

Oh - and if you produce a new version I'd be happy to review it (I guess

it will need to go in the next CF, given the discussion happening now).

Regards

Mark