PATCH: Split stats file per database WAS: autovacuum stress-testing our system

| Lists: | pgsql-hackers |

|---|

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | autovacuum stress-testing our system |

| Date: | 2012-09-26 12:43:30 |

| Message-ID: | 1718942738eb65c8407fcd864883f4c8@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

I've been struggling with autovacuum generating a lot of I/O and CPU on

some of our

systems - after a night spent analyzing this behavior, I believe the

current

autovacuum accidentally behaves a bit like a stress-test in some corner

cases (but

I may be seriously wrong, after all it was a long night).

First - our system really is not a "common" one - we do have ~1000 of

databases of

various size, each containing up to several thousands of tables

(several user-defined

tables, the rest serve as caches for a reporting application - yes,

it's a bit weird

design but that's life). This all leads to pgstat.stat significantly

larger than 60 MB.

Now, the two main pieces of information from the pgstat.c are the timer

definitions

---------------------------------- pgstat.c : 80

----------------------------------

#define PGSTAT_STAT_INTERVAL 500 /* Minimum time between stats

file

* updates; in milliseconds. */

#define PGSTAT_RETRY_DELAY 10 /* How long to wait between

checks for

* a new file; in milliseconds.

*/

#define PGSTAT_MAX_WAIT_TIME 10000 /* Maximum time to wait for a

stats

* file update; in milliseconds.

*/

#define PGSTAT_INQ_INTERVAL 640 /* How often to ping the

collector for

* a new file; in milliseconds.

*/

#define PGSTAT_RESTART_INTERVAL 60 /* How often to attempt to

restart a

* failed statistics collector;

in

* seconds. */

#define PGSTAT_POLL_LOOP_COUNT (PGSTAT_MAX_WAIT_TIME /

PGSTAT_RETRY_DELAY)

#define PGSTAT_INQ_LOOP_COUNT (PGSTAT_INQ_INTERVAL /

PGSTAT_RETRY_DELAY)

-----------------------------------------------------------------------------------

and then this loop (the current HEAD does this a bit differently, but

the 9.2 code

is a bit readable and suffers the same issue):

---------------------------------- pgstat.c : 3560

--------------------------------

/*

* Loop until fresh enough stats file is available or we ran out of

time.

* The stats inquiry message is sent repeatedly in case collector

drops

* it; but not every single time, as that just swamps the collector.

*/

for (count = 0; count < PGSTAT_POLL_LOOP_COUNT; count++)

{

TimestampTz file_ts = 0;

CHECK_FOR_INTERRUPTS();

if (pgstat_read_statsfile_timestamp(false, &file_ts) &&

file_ts >= min_ts)

break;

/* Not there or too old, so kick the collector and wait a bit */

if ((count % PGSTAT_INQ_LOOP_COUNT) == 0)

pgstat_send_inquiry(min_ts);

pg_usleep(PGSTAT_RETRY_DELAY * 1000L);

}

if (count >= PGSTAT_POLL_LOOP_COUNT)

elog(WARNING, "pgstat wait timeout");

/* Autovacuum launcher wants stats about all databases */

if (IsAutoVacuumLauncherProcess())

pgStatDBHash = pgstat_read_statsfile(InvalidOid, false);

else

pgStatDBHash = pgstat_read_statsfile(MyDatabaseId, false);

-----------------------------------------------------------------------------------

What this code does it that it checks the statfile, and if it's not

stale (the

timestamp of the write start is not older than PGSTAT_RETRY_DELAY

milliseconds),

the loop is terminated and the file is read.

Now, let's suppose the write takes >10 ms, which is the

PGSTAT_RETRY_DELAY values.

With our current pgstat.stat filesize/num of relations, this is quite

common.

Actually the common write time in our case is ~100 ms, even if we move

the file

into tmpfs. That means that almost all the calls to

backend_read_statsfile (which

happen in all pgstat_fetch_stat_*entry calls) result in continuous

stream of

inquiries from the autovacuum workers, writing/reading of the file.

We're not getting 'pgstat wait timeout' though, because it finally gets

written

before PGSTAT_MAX_WAIT_TIME.

By moving the file to a tmpfs we've minimized the I/O impact, but now

the collector

and autovacuum launcher consume ~75% of CPU (i.e. ~ one core) and do

nothing except

burning power because the database is almost read-only. Not a good

thing in the

"green computing" era I guess.

First, I'm interested in feedback - did I get all the details right, or

am I

missing something important?

Next, I'm thinking about ways to solve this:

1) turning of autovacuum, doing regular VACUUM ANALYZE from cron -

certainly an

option, but it's rather a workaround than a solution and I'm not

very fond of

it. Moreover it fixes only one side of the problem - triggering the

statfile

writes over and over. The file will be written anyway, although not

that

frequently.

2) tweaking the timer values, especially increasing PGSTAT_RETRY_DELAY

and so on

to consider several seconds to be fresh enough - Would be nice to

have this

as a GUC variables, although we can do another private patch on our

own. But

more knobs is not always better.

3) logic detecting the proper PGSTAT_RETRY_DELAY value - based mostly

on the time

it takes to write the file (e.g. 10x the write time or something).

4) keeping some sort of "dirty flag" in stat entries - and then writing

only info

about objects were modified enough to be eligible for vacuum/analyze

(e.g.

increasing number of index scans can't trigger autovacuum while

inserting

rows can). Also, I'm not worried about getting a bit older num of

index scans,

so 'clean' records might be written less frequently than 'dirty'

ones.

5) splitting the single stat file into multiple pieces - e.g. per

database,

written separately, so that the autovacuum workers don't need to

read all

the data even for databases that don't need to be vacuumed. This

might be

combined with (4).

Ideas? Objections? Preferred options?

I kinda like (4+5), although that'd be a pretty big patch and I'm not

entirely

sure it can be done without breaking other things.

regards

Tomas

| From: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 14:47:01 |

| Message-ID: | 1348670702-sup-1884@alvh.no-ip.org |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Really, as far as autovacuum is concerned, it would be much more useful

to be able to reliably detect that a table has been recently vacuumed,

without having to request a 10ms-recent pgstat snapshot. That would

greatly reduce the amount of time autovac spends on pgstat requests.

--

Álvaro Herrera http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 14:51:03 |

| Message-ID: | CAMkU=1ymG8tR=xEjwLOKbSQNagA7VRs-TApQTxmCzeu5pPp2ww@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Sep 26, 2012 at 5:43 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> First - our system really is not a "common" one - we do have ~1000 of

> databases of

> various size, each containing up to several thousands of tables (several

> user-defined

> tables, the rest serve as caches for a reporting application - yes, it's a

> bit weird

> design but that's life). This all leads to pgstat.stat significantly larger

> than 60 MB.

>

...

> Now, let's suppose the write takes >10 ms, which is the PGSTAT_RETRY_DELAY

> values.

> With our current pgstat.stat filesize/num of relations, this is quite

> common.

> Actually the common write time in our case is ~100 ms, even if we move the

> file

> into tmpfs. That means that almost all the calls to backend_read_statsfile

> (which

> happen in all pgstat_fetch_stat_*entry calls) result in continuous stream of

> inquiries from the autovacuum workers, writing/reading of the file.

I don't think it actually does. What you are missing is the same

thing I was missing a few weeks ago when I also looked into something

like this.

3962:

* We don't recompute min_ts after sleeping, except in the

* unlikely case that cur_ts went backwards.

That means the file must have been written within 10 ms of when we

*first* asked for it.

What is generating the endless stream you are seeing is that you have

1000 databases so if naptime is one minute you are vacuuming 16 per

second. Since every database gets a new process, that process needs

to read the file as it doesn't inherit one.

...

>

> First, I'm interested in feedback - did I get all the details right, or am I

> missing something important?

>

> Next, I'm thinking about ways to solve this:

>

> 1) turning of autovacuum, doing regular VACUUM ANALYZE from cron

Increasing autovacuum_naptime seems like a far better way to do

effectively the same thing.

>

> 2) tweaking the timer values, especially increasing PGSTAT_RETRY_DELAY and

> so on

> to consider several seconds to be fresh enough - Would be nice to have

> this

> as a GUC variables, although we can do another private patch on our own.

> But

> more knobs is not always better.

I think forking it off to to another value would be better. If you

are an autovacuum worker which is just starting up and so getting its

initial stats, you can tolerate a stats file up to "autovacuum_naptime

/ 5.0" stale. If you are already started up and are just about to

vacuum a table, then keep the staleness at PGSTAT_RETRY_DELAY as it

currently is, so as not to redundantly vacuum a table.

>

> 3) logic detecting the proper PGSTAT_RETRY_DELAY value - based mostly on the

> time

> it takes to write the file (e.g. 10x the write time or something).

This is already in place.

> 5) splitting the single stat file into multiple pieces - e.g. per database,

> written separately, so that the autovacuum workers don't need to read all

> the data even for databases that don't need to be vacuumed. This might be

> combined with (4).

I think this needs to happen eventually.

Cheers,

Jeff

| From: | Euler Taveira <euler(at)timbira(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 14:53:27 |

| Message-ID: | 506316E7.7060303@timbira.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 26-09-2012 09:43, Tomas Vondra wrote:

> I've been struggling with autovacuum generating a lot of I/O and CPU on some

> of our

> systems - after a night spent analyzing this behavior, I believe the current

> autovacuum accidentally behaves a bit like a stress-test in some corner cases

> (but

> I may be seriously wrong, after all it was a long night).

>

It is known that statistic collector doesn't scale for a lot of databases. It

wouldn't be a problem if we don't have automatic maintenance (aka autovacuum).

> Next, I'm thinking about ways to solve this:

>

> 1) turning of autovacuum, doing regular VACUUM ANALYZE from cron - certainly an

> option, but it's rather a workaround than a solution and I'm not very fond of

> it. Moreover it fixes only one side of the problem - triggering the statfile

> writes over and over. The file will be written anyway, although not that

> frequently.

>

It solves your problem if you combine scheduled VA with pgstat.stat in a

tmpfs. I don't see it as a definitive solution if we want to scale auto

maintenance for several hundreds or even thousands databases in a single

cluster (Someone could think it is not that common but in hosting scenarios

this is true. DBAs don't want to run several VMs or pg servers just to

minimize the auto maintenance scalability problem).

> 2) tweaking the timer values, especially increasing PGSTAT_RETRY_DELAY and so on

> to consider several seconds to be fresh enough - Would be nice to have this

> as a GUC variables, although we can do another private patch on our own. But

> more knobs is not always better.

>

It doesn't solve the problem. Also it could be a problem for autovacuum (that

make assumptions based in those fixed values).

> 3) logic detecting the proper PGSTAT_RETRY_DELAY value - based mostly on the time

> it takes to write the file (e.g. 10x the write time or something).

>

Such adaptive logic would be good only iff it takes a small time fraction to

execute. It have to pay attention to the limits. It appears to be a candidate

for exploration.

> 4) keeping some sort of "dirty flag" in stat entries - and then writing only info

> about objects were modified enough to be eligible for vacuum/analyze (e.g.

> increasing number of index scans can't trigger autovacuum while inserting

> rows can). Also, I'm not worried about getting a bit older num of index scans,

> so 'clean' records might be written less frequently than 'dirty' ones.

>

It minimizes your problem but harms collector tools (that want fresh

statistics about databases).

> 5) splitting the single stat file into multiple pieces - e.g. per database,

> written separately, so that the autovacuum workers don't need to read all

> the data even for databases that don't need to be vacuumed. This might be

> combined with (4).

>

IMHO that's the definitive solution. It would be one file per database plus a

global one. That way, the check would only read the global.stat and process

those database that were modified. Also, an in-memory map could store that

information to speed up the checks. The only downside I can see is that you

will increase the number of opened file descriptors.

> Ideas? Objections? Preferred options?

>

I prefer to attack 3, sort of 4 (explained in 5 -- in-memory map) and 5.

Out of curiosity, did you run perf (or some other performance analyzer) to

verify if some (stats and/or autovac) functions pop up in the report?

--

Euler Taveira de Oliveira - Timbira http://www.timbira.com.br/

PostgreSQL: Consultoria, Desenvolvimento, Suporte 24x7 e Treinamento

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 15:25:58 |

| Message-ID: | 1c9a6358f18915d74e375746b0f6e311@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Dne 26.09.2012 16:51, Jeff Janes napsal:

> On Wed, Sep 26, 2012 at 5:43 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>

>> First - our system really is not a "common" one - we do have ~1000

>> of

>> databases of

>> various size, each containing up to several thousands of tables

>> (several

>> user-defined

>> tables, the rest serve as caches for a reporting application - yes,

>> it's a

>> bit weird

>> design but that's life). This all leads to pgstat.stat significantly

>> larger

>> than 60 MB.

>>

> ...

>> Now, let's suppose the write takes >10 ms, which is the

>> PGSTAT_RETRY_DELAY

>> values.

>> With our current pgstat.stat filesize/num of relations, this is

>> quite

>> common.

>> Actually the common write time in our case is ~100 ms, even if we

>> move the

>> file

>> into tmpfs. That means that almost all the calls to

>> backend_read_statsfile

>> (which

>> happen in all pgstat_fetch_stat_*entry calls) result in continuous

>> stream of

>> inquiries from the autovacuum workers, writing/reading of the file.

>

> I don't think it actually does. What you are missing is the same

> thing I was missing a few weeks ago when I also looked into something

> like this.

>

> 3962:

>

> * We don't recompute min_ts after sleeping, except in

> the

> * unlikely case that cur_ts went backwards.

>

> That means the file must have been written within 10 ms of when we

> *first* asked for it.

Yeah, right - I've missed the first "if (pgStatDBHash)" check right at

the beginning.

> What is generating the endless stream you are seeing is that you have

> 1000 databases so if naptime is one minute you are vacuuming 16 per

> second. Since every database gets a new process, that process needs

> to read the file as it doesn't inherit one.

Right. But that makes the 10ms timeout even more strange, because the

worker is then using the data for very long time (even minutes).

>

> ...

>>

>> First, I'm interested in feedback - did I get all the details right,

>> or am I

>> missing something important?

>>

>> Next, I'm thinking about ways to solve this:

>>

>> 1) turning of autovacuum, doing regular VACUUM ANALYZE from cron

>

> Increasing autovacuum_naptime seems like a far better way to do

> effectively the same thing.

Agreed. One of my colleagues turned autovacuum off a few years back and

that

was a nice lesson how not to solve this kind of issues.

>> 2) tweaking the timer values, especially increasing

>> PGSTAT_RETRY_DELAY and

>> so on

>> to consider several seconds to be fresh enough - Would be nice to

>> have

>> this

>> as a GUC variables, although we can do another private patch on

>> our own.

>> But

>> more knobs is not always better.

>

> I think forking it off to to another value would be better. If you

> are an autovacuum worker which is just starting up and so getting its

> initial stats, you can tolerate a stats file up to

> "autovacuum_naptime

> / 5.0" stale. If you are already started up and are just about to

> vacuum a table, then keep the staleness at PGSTAT_RETRY_DELAY as it

> currently is, so as not to redundantly vacuum a table.

I always thought there's a "no more than one worker per database"

limit,

and that the file is always reloaded when switching to another

database.

So I'm not sure how could a worker see such a stale table info? Or are

the workers keeping the stats across multiple databases?

>> 3) logic detecting the proper PGSTAT_RETRY_DELAY value - based

>> mostly on the

>> time

>> it takes to write the file (e.g. 10x the write time or

>> something).

>

> This is already in place.

Really? Where?

I've checked the current master, and the only thing I see in

pgstat_write_statsfile

is this (line 3558):

last_statwrite = globalStats.stats_timestamp;

https://github.com/postgres/postgres/blob/master/src/backend/postmaster/pgstat.c#L3558

I don't think that's doing what I meant. That really doesn't scale the

timeout

according to write time. What happens right now is that when the stats

file is

written at time 0 (starts at zero, write finishes at 100 ms), and a

worker asks

for the file at 99 ms (i.e. 1ms before the write finishes), it will set

the time

of the inquiry to last_statrequest and then do this

if (last_statwrite < last_statrequest)

pgstat_write_statsfile(false);

i.e. comparing it to the start of the write. So another write will

start right

after the file is written out. And over and over.

Moreover there's the 'rename' step making the new file invisible for

the worker

processes, which makes the thing a bit more complicated.

What I'm suggesting it that there should be some sort of tracking the

write time

and then deciding whether the file is fresh enough using 10x that

value. So when

a file is written in 100 ms, it's be considered OK for the next 900 ms,

i.e. 1 sec

in total. Sure, we could use 5x or other coefficient, doesn't really

matter.

>> 5) splitting the single stat file into multiple pieces - e.g. per

>> database,

>> written separately, so that the autovacuum workers don't need to

>> read all

>> the data even for databases that don't need to be vacuumed. This

>> might be

>> combined with (4).

>

> I think this needs to happen eventually.

Yes, a nice patch idea ;-)

thanks for the feedback

Tomas

| From: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

|---|---|

| To: | Euler Taveira <euler(at)timbira(dot)com> |

| Cc: | Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 15:27:44 |

| Message-ID: | 1348673153-sup-4361@alvh.no-ip.org |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Excerpts from Euler Taveira's message of mié sep 26 11:53:27 -0300 2012:

> On 26-09-2012 09:43, Tomas Vondra wrote:

> > 5) splitting the single stat file into multiple pieces - e.g. per database,

> > written separately, so that the autovacuum workers don't need to read all

> > the data even for databases that don't need to be vacuumed. This might be

> > combined with (4).

> >

> IMHO that's the definitive solution. It would be one file per database plus a

> global one. That way, the check would only read the global.stat and process

> those database that were modified. Also, an in-memory map could store that

> information to speed up the checks.

+1

> The only downside I can see is that you

> will increase the number of opened file descriptors.

Note that most users of pgstat will only have two files open (instead of

one as currently) -- one for shared, one for their own database. Only

pgstat itself and autovac launcher would need to open pgstat files for

all databases; but both do not have a need to open other files

(arbitrary tables) so this shouldn't be a major problem.

--

Álvaro Herrera http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 15:29:22 |

| Message-ID: | 1348673324-sup-4711@alvh.no-ip.org |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Excerpts from Tomas Vondra's message of mié sep 26 12:25:58 -0300 2012:

> Dne 26.09.2012 16:51, Jeff Janes napsal:

> > I think forking it off to to another value would be better. If you

> > are an autovacuum worker which is just starting up and so getting its

> > initial stats, you can tolerate a stats file up to

> > "autovacuum_naptime

> > / 5.0" stale. If you are already started up and are just about to

> > vacuum a table, then keep the staleness at PGSTAT_RETRY_DELAY as it

> > currently is, so as not to redundantly vacuum a table.

>

> I always thought there's a "no more than one worker per database"

> limit,

There is no such limitation.

--

Álvaro Herrera http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 15:35:02 |

| Message-ID: | 66087d1adc6b71732628a025e970de7c@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Dne 26.09.2012 17:29, Alvaro Herrera napsal:

> Excerpts from Tomas Vondra's message of mié sep 26 12:25:58 -0300

> 2012:

>> Dne 26.09.2012 16:51, Jeff Janes napsal:

>

>> > I think forking it off to to another value would be better. If

>> you

>> > are an autovacuum worker which is just starting up and so getting

>> its

>> > initial stats, you can tolerate a stats file up to

>> > "autovacuum_naptime

>> > / 5.0" stale. If you are already started up and are just about to

>> > vacuum a table, then keep the staleness at PGSTAT_RETRY_DELAY as

>> it

>> > currently is, so as not to redundantly vacuum a table.

>>

>> I always thought there's a "no more than one worker per database"

>> limit,

>

> There is no such limitation.

OK, thanks. Still, reading/writing the small (per-database) files would

be

much faster so it would be easy to read/write them more often on

demand.

Tomas

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 16:14:15 |

| Message-ID: | CAMkU=1yPAmQJOXT+kNuv=QCRePjZkLJ=gJOLPv+QSD9AQ+=-Ag@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Sep 26, 2012 at 8:25 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> Dne 26.09.2012 16:51, Jeff Janes napsal:

>

>

>> What is generating the endless stream you are seeing is that you have

>> 1000 databases so if naptime is one minute you are vacuuming 16 per

>> second. Since every database gets a new process, that process needs

>> to read the file as it doesn't inherit one.

>

>

> Right. But that makes the 10ms timeout even more strange, because the

> worker is then using the data for very long time (even minutes).

On average that can't happen, or else your vacuuming would fall way

behind. But I agree, there is no reason to have very fresh statistics

to start with. naptime/5 seems like a good cutoff for me for the

start up reading. If a table only becomes eligible for vacuuming in

the last 20% of the naptime, I see no reason that it can't wait

another round. But that just means the statistics collector needs to

write the file less often, the workers still need to read it once per

database since each one only vacuums one database and don't inherit

the data from the launcher.

>> I think forking it off to to another value would be better. If you

>> are an autovacuum worker which is just starting up and so getting its

>> initial stats, you can tolerate a stats file up to "autovacuum_naptime

>> / 5.0" stale. If you are already started up and are just about to

>> vacuum a table, then keep the staleness at PGSTAT_RETRY_DELAY as it

>> currently is, so as not to redundantly vacuum a table.

>

>

> I always thought there's a "no more than one worker per database" limit,

> and that the file is always reloaded when switching to another database.

> So I'm not sure how could a worker see such a stale table info? Or are

> the workers keeping the stats across multiple databases?

If you only have one "active" database, then all the workers will be

in it. I don't how likely it is that they will leap frog each other

and collide. But anyway, if you 1000s of databases, then each one

will generally require zero vacuums per naptime (as you say, it is

mostly read only), so it is the reads upon start-up, not the reads per

table that needs vacuuming, which generates most of the traffic. Once

you separate those two parameters out, playing around with the

PGSTAT_RETRY_DELAY one seems like a needless risk.

>

>>> 3) logic detecting the proper PGSTAT_RETRY_DELAY value - based mostly on

>>> the

>>> time

>>> it takes to write the file (e.g. 10x the write time or something).

>>

>>

>> This is already in place.

>

>

> Really? Where?

I had thought that this part was effectively the same thing:

* We don't recompute min_ts after sleeping, except in the

* unlikely case that cur_ts went backwards.

But I think I did not understand your proposal.

>

> I've checked the current master, and the only thing I see in

> pgstat_write_statsfile

> is this (line 3558):

>

> last_statwrite = globalStats.stats_timestamp;

>

> https://github.com/postgres/postgres/blob/master/src/backend/postmaster/pgstat.c#L3558

>

>

> I don't think that's doing what I meant. That really doesn't scale the

> timeout

> according to write time. What happens right now is that when the stats file

> is

> written at time 0 (starts at zero, write finishes at 100 ms), and a worker

> asks

> for the file at 99 ms (i.e. 1ms before the write finishes), it will set the

> time

> of the inquiry to last_statrequest and then do this

>

> if (last_statwrite < last_statrequest)

> pgstat_write_statsfile(false);

>

> i.e. comparing it to the start of the write. So another write will start

> right

> after the file is written out. And over and over.

Ah. I had wondered about this before too, and wondered if it would be

a good idea to have it go back to the beginning of the stats file, and

overwrite the timestamp with the current time (rather than the time it

started writing it), as the last action it does before the rename. I

think that would automatically make it adaptive to the time it takes

to write out the file, in a fairly simple way.

>

> Moreover there's the 'rename' step making the new file invisible for the

> worker

> processes, which makes the thing a bit more complicated.

I think renames are assumed to be atomic. Either it sees the old one,

or the new one, but never sees neither.

Cheers,

Jeff

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Euler Taveira <euler(at)timbira(dot)com>, Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 16:29:17 |

| Message-ID: | 26698.1348676957@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> writes:

> Excerpts from Euler Taveira's message of mi sep 26 11:53:27 -0300 2012:

>> On 26-09-2012 09:43, Tomas Vondra wrote:

>>> 5) splitting the single stat file into multiple pieces - e.g. per database,

>>> written separately, so that the autovacuum workers don't need to read all

>>> the data even for databases that don't need to be vacuumed. This might be

>>> combined with (4).

>> IMHO that's the definitive solution. It would be one file per database plus a

>> global one. That way, the check would only read the global.stat and process

>> those database that were modified. Also, an in-memory map could store that

>> information to speed up the checks.

> +1

That would help for the case of hundreds of databases, but how much

does it help for lots of tables in a single database?

I'm a bit suspicious of the idea that we should encourage people to use

hundreds of databases per installation anyway: the duplicated system

catalogs are going to be mighty expensive, both in disk space and in

their cache footprint in shared buffers. There was some speculation

at the last PGCon about how we might avoid the duplication, but I think

we're years away from any such thing actually happening.

What seems to me like it could help more is fixing things so that the

autovac launcher needn't even launch a child process for databases that

haven't had any updates lately. I'm not sure how to do that, but it

probably involves getting the stats collector to produce some kind of

summary file.

regards, tom lane

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com>, Euler Taveira <euler(at)timbira(dot)com>, Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 17:18:51 |

| Message-ID: | CAMkU=1w5vG6eREnwmPiNJ6tsKoJKa67tuYCCqd9wNDoLh-Ankw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Sep 26, 2012 at 9:29 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> writes:

>> Excerpts from Euler Taveira's message of mié sep 26 11:53:27 -0300 2012:

>>> On 26-09-2012 09:43, Tomas Vondra wrote:

>>>> 5) splitting the single stat file into multiple pieces - e.g. per database,

>>>> written separately, so that the autovacuum workers don't need to read all

>>>> the data even for databases that don't need to be vacuumed. This might be

>>>> combined with (4).

>

>>> IMHO that's the definitive solution. It would be one file per database plus a

>>> global one. That way, the check would only read the global.stat and process

>>> those database that were modified. Also, an in-memory map could store that

>>> information to speed up the checks.

>

>> +1

>

> That would help for the case of hundreds of databases, but how much

> does it help for lots of tables in a single database?

It doesn't help that case, but that case doesn't need much help. If

you have N statistics-kept objects in total spread over M databases,

of which T objects need vacuuming per naptime, the stats file traffic

is proportional to N*(M+T). If T is low, then there is generally is

no problem if M is also low. Or at least, the problem is much smaller

than when M is high for a fixed value of N.

> I'm a bit suspicious of the idea that we should encourage people to use

> hundreds of databases per installation anyway:

I agree with that, but we could still do a better job of tolerating

it; without encouraging it. If someone volunteers to write the code

to do this, what trade-offs would there be?

Cheers,

Jeff

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 22:48:34 |

| Message-ID: | 50638642.2080606@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 26.9.2012 18:29, Tom Lane wrote:

> Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> writes:

>> Excerpts from Euler Taveira's message of miĂŠ sep 26 11:53:27 -0300 2012:

>>> On 26-09-2012 09:43, Tomas Vondra wrote:

>>>> 5) splitting the single stat file into multiple pieces - e.g. per database,

>>>> written separately, so that the autovacuum workers don't need to read all

>>>> the data even for databases that don't need to be vacuumed. This might be

>>>> combined with (4).

>

>>> IMHO that's the definitive solution. It would be one file per database plus a

>>> global one. That way, the check would only read the global.stat and process

>>> those database that were modified. Also, an in-memory map could store that

>>> information to speed up the checks.

>

>> +1

>

> That would help for the case of hundreds of databases, but how much

> does it help for lots of tables in a single database?

Well, it wouldn't, but it wouldn't make it worse either. Or at least

that's how I understand it.

> I'm a bit suspicious of the idea that we should encourage people to use

> hundreds of databases per installation anyway: the duplicated system

> catalogs are going to be mighty expensive, both in disk space and in

> their cache footprint in shared buffers. There was some speculation

> at the last PGCon about how we might avoid the duplication, but I think

> we're years away from any such thing actually happening.

You don't need to encourage us to do that ;-) We know it's not perfect

and considering a good alternative - e.g. several databases (~10) with

schemas inside, replacing the current database-only approach. This way

we'd get multiple stat files (thus gaining the benefits) with less

overhead (shared catalogs).

And yes, using tens of thousands of tables (serving as "caches") for a

reporting solution is "interesting" (as in the old Chinese curse) too.

> What seems to me like it could help more is fixing things so that the

> autovac launcher needn't even launch a child process for databases that

> haven't had any updates lately. I'm not sure how to do that, but it

> probably involves getting the stats collector to produce some kind of

> summary file.

Yes, I've proposed something like this in my original mail - setting a

"dirty" flag on objects (a database in this case) whenever a table in it

gets eligible for vacuum/analyze.

Tomas

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-26 23:53:50 |

| Message-ID: | 5063958E.1080606@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 26.9.2012 18:14, Jeff Janes wrote:

> On Wed, Sep 26, 2012 at 8:25 AM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> Dne 26.09.2012 16:51, Jeff Janes napsal:

>>

>>

>>> What is generating the endless stream you are seeing is that you have

>>> 1000 databases so if naptime is one minute you are vacuuming 16 per

>>> second. Since every database gets a new process, that process needs

>>> to read the file as it doesn't inherit one.

>>

>>

>> Right. But that makes the 10ms timeout even more strange, because the

>> worker is then using the data for very long time (even minutes).

>

> On average that can't happen, or else your vacuuming would fall way

> behind. But I agree, there is no reason to have very fresh statistics

> to start with. naptime/5 seems like a good cutoff for me for the

> start up reading. If a table only becomes eligible for vacuuming in

> the last 20% of the naptime, I see no reason that it can't wait

> another round. But that just means the statistics collector needs to

> write the file less often, the workers still need to read it once per

> database since each one only vacuums one database and don't inherit

> the data from the launcher.

So what happens if there are two workers vacuuming the same database?

Wouldn't that make it more probable that were already vacuumed by the

other worker?

See the comment at the beginning of autovacuum.c, where it also states

that the statfile is reloaded before each table (probably because of the

calls to autovac_refresh_stats which in turn calls clear_snapshot).

>>> I think forking it off to to another value would be better. If you

>>> are an autovacuum worker which is just starting up and so getting its

>>> initial stats, you can tolerate a stats file up to "autovacuum_naptime

>>> / 5.0" stale. If you are already started up and are just about to

>>> vacuum a table, then keep the staleness at PGSTAT_RETRY_DELAY as it

>>> currently is, so as not to redundantly vacuum a table.

>>

>>

>> I always thought there's a "no more than one worker per database" limit,

>> and that the file is always reloaded when switching to another database.

>> So I'm not sure how could a worker see such a stale table info? Or are

>> the workers keeping the stats across multiple databases?

>

> If you only have one "active" database, then all the workers will be

> in it. I don't how likely it is that they will leap frog each other

> and collide. But anyway, if you 1000s of databases, then each one

> will generally require zero vacuums per naptime (as you say, it is

> mostly read only), so it is the reads upon start-up, not the reads per

> table that needs vacuuming, which generates most of the traffic. Once

> you separate those two parameters out, playing around with the

> PGSTAT_RETRY_DELAY one seems like a needless risk.

OK, right. My fault.

Yes, our databases are mostly readable - more precisely whenever we load

data, we immediately do VACUUM ANALYZE on the tables, so autovacuum

never kicks in on them. The only thing it works on are system catalogs

and such stuff.

>>>> 3) logic detecting the proper PGSTAT_RETRY_DELAY value - based mostly on

>>>> the

>>>> time

>>>> it takes to write the file (e.g. 10x the write time or something).

>>>

>>>

>>> This is already in place.

>>

>>

>> Really? Where?

>

> I had thought that this part was effectively the same thing:

>

> * We don't recompute min_ts after sleeping, except in the

> * unlikely case that cur_ts went backwards.

>

> But I think I did not understand your proposal.

>

>>

>> I've checked the current master, and the only thing I see in

>> pgstat_write_statsfile

>> is this (line 3558):

>>

>> last_statwrite = globalStats.stats_timestamp;

>>

>> https://github.com/postgres/postgres/blob/master/src/backend/postmaster/pgstat.c#L3558

>>

>>

>> I don't think that's doing what I meant. That really doesn't scale the

>> timeout

>> according to write time. What happens right now is that when the stats file

>> is

>> written at time 0 (starts at zero, write finishes at 100 ms), and a worker

>> asks

>> for the file at 99 ms (i.e. 1ms before the write finishes), it will set the

>> time

>> of the inquiry to last_statrequest and then do this

>>

>> if (last_statwrite < last_statrequest)

>> pgstat_write_statsfile(false);

>>

>> i.e. comparing it to the start of the write. So another write will start

>> right

>> after the file is written out. And over and over.

>

> Ah. I had wondered about this before too, and wondered if it would be

> a good idea to have it go back to the beginning of the stats file, and

> overwrite the timestamp with the current time (rather than the time it

> started writing it), as the last action it does before the rename. I

> think that would automatically make it adaptive to the time it takes

> to write out the file, in a fairly simple way.

Yeah, I was thinking about that too.

>> Moreover there's the 'rename' step making the new file invisible for the

>> worker

>> processes, which makes the thing a bit more complicated.

>

> I think renames are assumed to be atomic. Either it sees the old one,

> or the new one, but never sees neither.

I'm not quite sure what I meant, but not this - I know the renames are

atomic. I probably haven't noticed that inquiries are using min_ts, so I

though that an inquiry sent right after the write starts (with min_ts

before the write) would trigger another write, but that's not the case.

regards

Tomas

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-27 09:51:28 |

| Message-ID: | CA+U5nMJMibhhnDf3sh+UzWE5RyniZrR0cuQaj=byjMpdJYUVyQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 26 September 2012 15:47, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> wrote:

>

> Really, as far as autovacuum is concerned, it would be much more useful

> to be able to reliably detect that a table has been recently vacuumed,

> without having to request a 10ms-recent pgstat snapshot. That would

> greatly reduce the amount of time autovac spends on pgstat requests.

VACUUMing generates a relcache invalidation. Can we arrange for those

invalidations to be received by autovac launcher, so it gets immediate

feedback of recent activity without polling?

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

|---|---|

| To: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

| Cc: | Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-27 14:57:24 |

| Message-ID: | 1348757745-sup-1839@alvh.no-ip.org |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Excerpts from Simon Riggs's message of jue sep 27 06:51:28 -0300 2012:

> On 26 September 2012 15:47, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> wrote:

> >

> > Really, as far as autovacuum is concerned, it would be much more useful

> > to be able to reliably detect that a table has been recently vacuumed,

> > without having to request a 10ms-recent pgstat snapshot. That would

> > greatly reduce the amount of time autovac spends on pgstat requests.

>

> VACUUMing generates a relcache invalidation. Can we arrange for those

> invalidations to be received by autovac launcher, so it gets immediate

> feedback of recent activity without polling?

Hmm, this is an interesting idea worth exploring, I think. Maybe we

should sort tables in the autovac worker to-do list by age of last

invalidation messages received, or something like that. Totally unclear

on the details, but as I said, worth exploring.

--

Álvaro Herrera http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Tomas Vondra <tv(at)fuzzy(dot)cz>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-09-27 20:06:56 |

| Message-ID: | CA+U5nMJpa2FVUVa0wwjnK1nEZ42u+TiQvT5nZd0Q51FAVGRm-g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 27 September 2012 15:57, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> wrote:

> Excerpts from Simon Riggs's message of jue sep 27 06:51:28 -0300 2012:

>> On 26 September 2012 15:47, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> wrote:

>> >

>> > Really, as far as autovacuum is concerned, it would be much more useful

>> > to be able to reliably detect that a table has been recently vacuumed,

>> > without having to request a 10ms-recent pgstat snapshot. That would

>> > greatly reduce the amount of time autovac spends on pgstat requests.

>>

>> VACUUMing generates a relcache invalidation. Can we arrange for those

>> invalidations to be received by autovac launcher, so it gets immediate

>> feedback of recent activity without polling?

>

> Hmm, this is an interesting idea worth exploring, I think. Maybe we

> should sort tables in the autovac worker to-do list by age of last

> invalidation messages received, or something like that. Totally unclear

> on the details, but as I said, worth exploring.

Just put them to back of queue if an inval is received.

There is already support for listening and yet never generating to

relcache inval messages.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-11-18 22:49:19 |

| Message-ID: | 50A965EF.50708@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi!

On 26.9.2012 19:18, Jeff Janes wrote:

> On Wed, Sep 26, 2012 at 9:29 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>> Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> writes:

>>> Excerpts from Euler Taveira's message of mié sep 26 11:53:27 -0300 2012:

>>>> On 26-09-2012 09:43, Tomas Vondra wrote:

>>>>> 5) splitting the single stat file into multiple pieces - e.g. per database,

>>>>> written separately, so that the autovacuum workers don't need to read all

>>>>> the data even for databases that don't need to be vacuumed. This might be

>>>>> combined with (4).

>>

>>>> IMHO that's the definitive solution. It would be one file per database plus a

>>>> global one. That way, the check would only read the global.stat and process

>>>> those database that were modified. Also, an in-memory map could store that

>>>> information to speed up the checks.

>>

>>> +1

>>

>> That would help for the case of hundreds of databases, but how much

>> does it help for lots of tables in a single database?

>

> It doesn't help that case, but that case doesn't need much help. If

> you have N statistics-kept objects in total spread over M databases,

> of which T objects need vacuuming per naptime, the stats file traffic

> is proportional to N*(M+T). If T is low, then there is generally is

> no problem if M is also low. Or at least, the problem is much smaller

> than when M is high for a fixed value of N.

I've done some initial hacking on splitting the stat file into multiple

smaller pieces over the weekend, and it seems promising (at least with

respect to the issues we're having).

See the patch attached, but be aware that this is a very early WIP (or

rather a proof of concept), so it has many rough edges (read "sloppy

coding"). I haven't even added it to the commitfest yet ...

The two main changes are these:

(1) The stats file is split into a common "db" file, containing all the

DB Entries, and per-database files with tables/functions. The common

file is still called "pgstat.stat", the per-db files have the

database OID appended, so for example "pgstat.stat.12345" etc.

This was a trivial hack pgstat_read_statsfile/pgstat_write_statsfile

functions, introducing two new functions:

pgstat_read_db_statsfile

pgstat_write_db_statsfile

that do the trick of reading/writing stat file for one database.

(2) The pgstat_read_statsfile has an additional parameter "onlydbs" that

says that you don't need table/func stats - just the list of db

entries. This is used for autovacuum launcher, which does not need

to read the table/stats (if I'm reading the code in autovacuum.c

correctly - it seems to be working as expected).

So what are the benefits?

(a) When a launcher asks for info about databases, something like this

is called in the end:

pgstat_read_db_statsfile(InvalidOid, false, true)

which means all databases (InvalidOid) and only db info (true). So

it reads only the one common file with db entries, not the

table/func stats.

(b) When a worker asks for stats for a given DB, something like this is

called in the end:

pgstat_read_db_statsfile(MyDatabaseId, false, false)

which reads only the common stats file (with db entries) and only

one file for the one database.

The current implementation (with the single pgstat.stat file), all

the data had to be read (and skipped silently) in both cases.

That's a lot of CPU time, and we're seeing ~60% of CPU spent on

doing just this (writing/reading huge statsfile).

So with a lot of databases/objects, this "pgstat.stat split" saves

us a lot of CPU ...

(c) This should lower the space requirements too - with a single file,

you actually need at least 2x the disk space (or RAM, if you're

using tmpfs as we are), because you need to keep two versions of

the file at the same time (pgstat.stat and pgstat.tmp).

Thanks to this split you only need additional space for a copy of

the largest piece (with some reasonable safety reserve).

Well, it's very early patch, so there are rough edges too

(a) It does not solve the "many-schema" scenario at all - that'll need

a completely new approach I guess :-(

(b) It does not solve the writing part at all - the current code uses a

single timestamp (last_statwrite) to decide if a new file needs to

be written.

That clearly is not enough for multiple files - there should be one

timestamp for each database/file. I'm thinking about how to solve

this and how to integrate it with pgstat_send_inquiry etc.

One way might be adding the timestamp(s) into PgStat_StatDBEntry

and the other one is using an array of inquiries for each database.

And yet another one I'm thinking about is using a fixed-length

array of timestamps (e.g. 256), indexed by mod(dboid,256). That

would mean stats for all databases with the same mod(oid,256) would

be written at the same time. Seems like an over-engineering though.

(c) I'm a bit worried about the number of files - right now there's one

for each database and I'm thinking about splitting them by type

(one for tables, one for functions) which might make it even faster

for some apps with a lot of stored procedures etc.

But is the large number of files actually a problem? After all,

we're using one file per relation fork in the "base" directory, so

this seems like a minor issue.

And if really an issue, this might be solved by the mod(oid,256) to

combine multiple files into one (which would work neatly with the

fixed-length array of timestamps).

kind regards

Tomas

| Attachment | Content-Type | Size |

|---|---|---|

| stats-split.patch | text/plain | 11.3 KB |

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-11-18 23:13:21 |

| Message-ID: | 50A96B91.1050409@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 26.9.2012 18:29, Tom Lane wrote:

> What seems to me like it could help more is fixing things so that the

> autovac launcher needn't even launch a child process for databases that

> haven't had any updates lately. I'm not sure how to do that, but it

> probably involves getting the stats collector to produce some kind of

> summary file.

Couldn't we use the PgStat_StatDBEntry for this? By splitting the

pgstat.stat file into multiple pieces (see my other post in this thread)

there's a file with StatDBEntry items only, so maybe it could be used as

the summary file ...

I've been thinking about this:

(a) add "needs_autovacuuming" flag to PgStat_(TableEntry|StatDBEntry)

(b) when table stats are updated, run quick check to decide whether

the table needs to be processed by autovacuum (vacuumed or

analyzed), and if yes then set needs_autovacuuming=true and both

for table and database

The worker may read the DB entries from the file and act only on those

that need to be processed (those with needs_autovacuuming=true).

Maybe the DB-level field might be a counter of tables that need to be

processed, and the autovacuum daemon might act on those first? Although

the simpler the better I guess.

Or did you mean something else?

regards

Tomas

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-11-21 18:02:56 |

| Message-ID: | CA+TgmoZr3xUQq_OM2V1nmEN6NScVBFkpYJrpFFmbyOPJKvZdzQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sun, Nov 18, 2012 at 5:49 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

> The two main changes are these:

>

> (1) The stats file is split into a common "db" file, containing all the

> DB Entries, and per-database files with tables/functions. The common

> file is still called "pgstat.stat", the per-db files have the

> database OID appended, so for example "pgstat.stat.12345" etc.

>

> This was a trivial hack pgstat_read_statsfile/pgstat_write_statsfile

> functions, introducing two new functions:

>

> pgstat_read_db_statsfile

> pgstat_write_db_statsfile

>

> that do the trick of reading/writing stat file for one database.

>

> (2) The pgstat_read_statsfile has an additional parameter "onlydbs" that

> says that you don't need table/func stats - just the list of db

> entries. This is used for autovacuum launcher, which does not need

> to read the table/stats (if I'm reading the code in autovacuum.c

> correctly - it seems to be working as expected).

I'm not an expert on the stats system, but this seems like a promising

approach to me.

> (a) It does not solve the "many-schema" scenario at all - that'll need

> a completely new approach I guess :-(

We don't need to solve every problem in the first patch. I've got no

problem kicking this one down the road.

> (b) It does not solve the writing part at all - the current code uses a

> single timestamp (last_statwrite) to decide if a new file needs to

> be written.

>

> That clearly is not enough for multiple files - there should be one

> timestamp for each database/file. I'm thinking about how to solve

> this and how to integrate it with pgstat_send_inquiry etc.

Presumably you need a last_statwrite for each file, in a hash table or

something, and requests need to specify which file is needed.

> And yet another one I'm thinking about is using a fixed-length

> array of timestamps (e.g. 256), indexed by mod(dboid,256). That

> would mean stats for all databases with the same mod(oid,256) would

> be written at the same time. Seems like an over-engineering though.

That seems like an unnecessary kludge.

> (c) I'm a bit worried about the number of files - right now there's one

> for each database and I'm thinking about splitting them by type

> (one for tables, one for functions) which might make it even faster

> for some apps with a lot of stored procedures etc.

>

> But is the large number of files actually a problem? After all,

> we're using one file per relation fork in the "base" directory, so

> this seems like a minor issue.

I don't see why one file per database would be a problem. After all,

we already have on directory per database inside base/. If the user

has so many databases that dirent lookups in a directory of that size

are a problem, they're already hosed, and this will probably still

work out to a net win.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: autovacuum stress-testing our system |

| Date: | 2012-12-03 02:07:43 |

| Message-ID: | 50BC096F.3010502@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 21.11.2012 19:02, Robert Haas wrote:

> On Sun, Nov 18, 2012 at 5:49 PM, Tomas Vondra <tv(at)fuzzy(dot)cz> wrote:

>> The two main changes are these:

>>

>> (1) The stats file is split into a common "db" file, containing all the

>> DB Entries, and per-database files with tables/functions. The common

>> file is still called "pgstat.stat", the per-db files have the

>> database OID appended, so for example "pgstat.stat.12345" etc.

>>

>> This was a trivial hack pgstat_read_statsfile/pgstat_write_statsfile

>> functions, introducing two new functions:

>>

>> pgstat_read_db_statsfile

>> pgstat_write_db_statsfile

>>

>> that do the trick of reading/writing stat file for one database.

>>

>> (2) The pgstat_read_statsfile has an additional parameter "onlydbs" that

>> says that you don't need table/func stats - just the list of db

>> entries. This is used for autovacuum launcher, which does not need

>> to read the table/stats (if I'm reading the code in autovacuum.c

>> correctly - it seems to be working as expected).

>

> I'm not an expert on the stats system, but this seems like a promising

> approach to me.

>

>> (a) It does not solve the "many-schema" scenario at all - that'll need

>> a completely new approach I guess :-(

>

> We don't need to solve every problem in the first patch. I've got no

> problem kicking this one down the road.

>

>> (b) It does not solve the writing part at all - the current code uses a

>> single timestamp (last_statwrite) to decide if a new file needs to

>> be written.

>>

>> That clearly is not enough for multiple files - there should be one

>> timestamp for each database/file. I'm thinking about how to solve

>> this and how to integrate it with pgstat_send_inquiry etc.

>

> Presumably you need a last_statwrite for each file, in a hash table or

> something, and requests need to specify which file is needed.

>

>> And yet another one I'm thinking about is using a fixed-length

>> array of timestamps (e.g. 256), indexed by mod(dboid,256). That

>> would mean stats for all databases with the same mod(oid,256) would

>> be written at the same time. Seems like an over-engineering though.

>

> That seems like an unnecessary kludge.

>

>> (c) I'm a bit worried about the number of files - right now there's one

>> for each database and I'm thinking about splitting them by type

>> (one for tables, one for functions) which might make it even faster

>> for some apps with a lot of stored procedures etc.

>>

>> But is the large number of files actually a problem? After all,

>> we're using one file per relation fork in the "base" directory, so

>> this seems like a minor issue.

>

> I don't see why one file per database would be a problem. After all,

> we already have on directory per database inside base/. If the user

> has so many databases that dirent lookups in a directory of that size

> are a problem, they're already hosed, and this will probably still

> work out to a net win.

Attached is a v2 of the patch, fixing some of the issues and unclear

points from the initial version.

The main improvement is that it implements writing only stats for the

requested database (set when sending inquiry). There's a dynamic array

of request - for each DB only the last request is kept.

I've done a number of changes - most importantly:

- added a stats_timestamp field to PgStat_StatDBEntry, keeping the last

write of the database (i.e. a per-database last_statwrite), which is

used to decide whether the file is stale or not

- handling of the 'permanent' flag correctly (used when starting or

stopping the cluster) for per-db files

- added a very simple header to the per-db files (basically just a

format ID and a timestamp) - this is needed for checking of the

timestamp of the last write from workers (although maybe we could

just read the pgstat.stat, which is now rather small)

- a 'force' parameter (true - write all databases, even if they weren't

specifically requested)

So with the exception of 'multi-schema' case (which was not the aim of

this effort), it should solve all the issues of the initial version.

There are two blocks of code dealing with clock glitches. I haven't

fixed those yet, but that can wait I guess. I've also left there some

logging I've used during development (printing inquiries and which file

is written and when).

The main unsolved problem I'm struggling with is what to do when a

database is dropped? Right now, the statfile remains in pg_stat_tmp

forewer (or until the restart) - is there a good way to remove the

file? I'm thinking about adding a message to be sent to the collector

from the code that handles DROP TABLE.

I've done some very simple performance testing - I've created 1000

databases with 1000 tables each, done ANALYZE on all of them. With only

autovacum running, I've seen this:

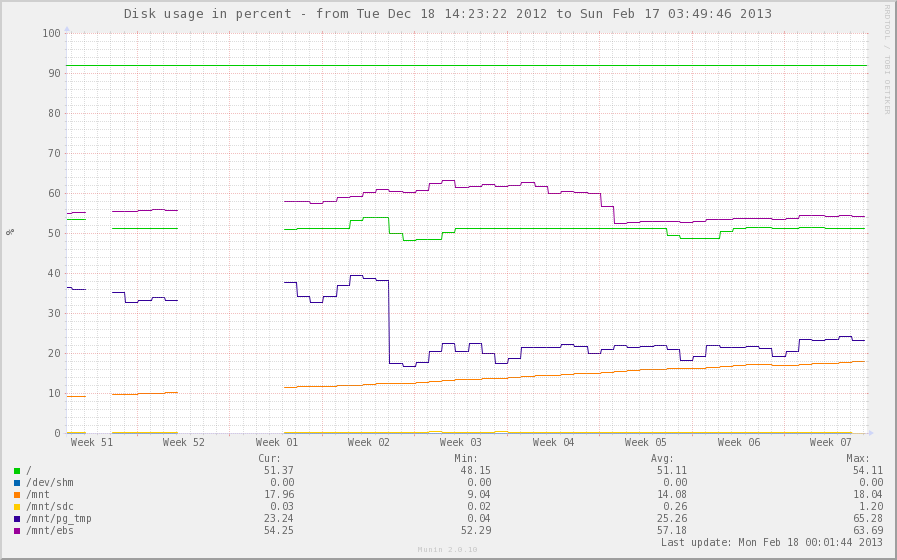

Without the patch

-----------------

%CPU %MEM TIME+ COMMAND

18 3.0 0:10.10 postgres: autovacuum launcher process

17 2.6 0:11.44 postgres: stats collector process

The I/O was seriously bogged down, doing ~150 MB/s (basically what the

drive can handle) - with less dbs, or when the statfiles are placed on

tmpfs filesystem, we usually see ~70% of one core doing just this.

With the patch

--------------

Then, the typical "top" for PostgreSQL processes looked like this:

%CPU %MEM TIME+ COMMAND

2 0.3 1:16.57 postgres: autovacuum launcher process

2 3.1 0:25.34 postgres: stats collector process

and the average write speed from the stats collector was ~3.5MB/s

(measured using iotop), and even when running the ANALYZE etc. I was

getting rather light IO usage (like ~15 MB/s or something).

With both cases, the total size was ~150MB, but without the space

requirements are actually 2x that (because of writing a copy and then

renaming).

I'd like to put this into 2013-01 commit fest, but if we can do some

prior testing / comments, that'd be great.

regards

Tomas

| Attachment | Content-Type | Size |

|---|---|---|

| stats-split-v2.patch | text/plain | 26.8 KB |

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | PATCH: Split stats file per database WAS: autovacuum stress-testing our system |

| Date: | 2013-01-02 23:15:45 |

| Message-ID: | 50E4BFA1.7000404@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Hi,

attached is a version of the patch that I believe is ready for the

commitfest. As the patch was discussed over a large number of messages,

I've prepared a brief summary for those who did not follow the thread.

Issue

=====

The patch aims to improve the situation in deployments with many tables

in many databases (think for example 1000 tables in 1000 databases).

Currently all the stats for all the objects (dbs, tables and functions)

are written in a single file (pgstat.stat), which may get quite large

and that consequently leads to various issues:

1) I/O load - the file is frequently written / read, which may use a

significant part of the I/O bandwidth. For example we'have to deal with

cases when the pgstat.stat size is >150MB, and it's written (and read)

continuously (once it's written, a new write starts) and utilizes 100%

bandwidth on that device.

2) CPU load - a common solution to the previous issue is moving the file

into RAM, using a tmpfs filesystem. That "fixes" the I/O bottleneck but

causes high CPU load because the system is serializing and deserializing

large amounts of data. We often see ~1 CPU core "lost" due to this (and

causing higher power consumption, but that's Amazon's problem ;-)

3) disk space utilization - the pgstat.stat file is updated in two

steps, i.e. a new version is written to another file (pgstat.tmp) and

then it's renamed to pgstat.stat, which means the device (amount of RAM,

if using tmpfs device) needs to be >2x the actual size of the file.

(Actually more, because there may be descriptors open to multiple

versions of the file.)

This patch does not attempt to fix a "single DB with multiple schemas"

scenario, although it should not have a negative impact on it.

What the patch does

===================

1) split into global and per-db files

-------------------------------------

The patch "splits" the huge pgstat.stat file into smaller pieces - one

"global" one (global.stat) with database stats, and one file for each of

the databases (oid.stat) with table.

This makes it possible to write/read much smaller amounts of data, because

a) autovacuum launcher does not need to read the whole file - it needs

just the list of databases (and not the table/func stats)

b) autovacuum workers do request a fresh copy of a single database, so

the stats collector may write just the global.stat + one of the per-db files

and that consequently leads to much lower I/O and CPU load. During our

tests we've seen the I/O to drop from ~150MB/s to less than 4MB/s, and

much lower CPU utilization.

2) a new global/stat directory

------------------------------

The pgstat.stat file was originally saved into the "global" directory,

but with so many files that would get rather messy so I've created a new

global/stat directory and all the files are stored there.

This also means we can do a simple "delete files in the dir" when

pgstat_reset_all is called.

3) pgstat_(read|write)_statsfile split

--------------------------------------

These two functions were moved into a global and per-db functions, so

now there's

pgstat_write_statsfile -- global.stat

pgstat_write_db_statsfile -- oid.stat

pgstat_read_statsfile -- global.stat

pgstat_read_db_statsfile -- oid.stat

There's a logic to read/write only those files that are actually needed.

4) list of (OID, timestamp) inquiries, last db-write

----------------------------------------------------

Originally there was a single pair of request/write timestamps for the

whole file, updated whenever a worker requested a fresh file or when the

file was written.

With the split, this had to be replaced by two lists - a timestamp of

the last inquiry (per DB), and a timestamp when each database file was

written for the last time.

The timestamp of the last DB write was added to the PgStat_StatDBEntry

and the list of inquiries is kept in last_statrequests. The fields are

used at several places, so it's probably best to see the code.

Handling the timestamps is a rather complex stuff because of the clock

skews. One of those checks is not needed as the list of inquiries is

freed right after writing all the databases. But I wouldn't be surprised

if there was something I missed, as splitting the file into multiple

pieces made this part more complex.

So please, if you're going to review this patch this is one of the

tricky places.

5) dummy file

-------------

A special handling is necessary when an inquiry arrives for a database

without a PgStat_StatDBEntry - this happens for example right after

initdb, when there are no stats for template0 and template1, yet the

autovacuum workers do send inqiries for them.

The backend_read_statsfile now uses the timestamp stored in the header

of the per-db file (not in the global one), and the easies way to handle

this for new databases is writing an empty 'dummy file' (just a header

with timestamp). Without this, this would result in 'pgstat wait

timeout' errors.

This is what pgstat_write_db_dummyfile (used in pgstat_write_statsfile)

is for.

6) format ID

------------

I've bumped PGSTAT_FILE_FORMAT_ID to a new random value, although the

filenames changed to so we could live with the old ID just fine.

We've done a fair amount of testing so far, and if everything goes fine

we plan to deploy a back-ported version of this patch (to 9.1) on a

production in ~2 weeks.

Then I'll be able to provide some numbers from a real-world workload

(although our deployment and workload is not quite usual I guess).

regards

| Attachment | Content-Type | Size |

|---|---|---|

| stats-split-v4.patch | text/plain | 37.3 KB |

| From: | Heikki Linnakangas <hlinnakangas(at)vmware(dot)com> |

|---|---|

| To: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: PATCH: Split stats file per database WAS: autovacuum stress-testing our system |

| Date: | 2013-01-03 17:47:40 |

| Message-ID: | 50E5C43C.5040309@vmware.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 03.01.2013 01:15, Tomas Vondra wrote:

> 2) a new global/stat directory

> ------------------------------

>

> The pgstat.stat file was originally saved into the "global" directory,

> but with so many files that would get rather messy so I've created a new

> global/stat directory and all the files are stored there.

>

> This also means we can do a simple "delete files in the dir" when

> pgstat_reset_all is called.

How about creating the new directory as a direct subdir of $PGDATA,

rather than buried in global? "global" is supposed to contain data

related to shared catalog relations (plus pg_control), so it doesn't

seem like the right location for per-database stat files. Also, if we're

going to have admins manually zapping the directory (hopefully when the

system is offline), that's less scary if the directory is not buried as

deep.

- Heikki

| From: | Tomas Vondra <tv(at)fuzzy(dot)cz> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: PATCH: Split stats file per database WAS: autovacuum stress-testing our system |

| Date: | 2013-01-03 19:31:32 |

| Message-ID: | 50E5DC94.4000205@fuzzy.cz |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 3.1.2013 18:47, Heikki Linnakangas wrote:

> On 03.01.2013 01:15, Tomas Vondra wrote:

>> 2) a new global/stat directory

>> ------------------------------

>>

>> The pgstat.stat file was originally saved into the "global" directory,