Re: Group commit, revised

| Lists: | pgsql-hackers |

|---|

| From: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

|---|---|

| To: | PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Group commit, revised |

| Date: | 2012-01-15 22:42:11 |

| Message-ID: | CAEYLb_V5Q8Zdjnkb4+30_dpD3NrgfoXhEurney3HsrCQsyDLWw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Attached is a patch that myself and Simon Riggs collaborated on. I

took the group commit patch that Simon posted to the list back in

November, and partially rewrote it. Here is that original thread:

http://archives.postgresql.org/pgsql-hackers/2011-11/msg00802.php

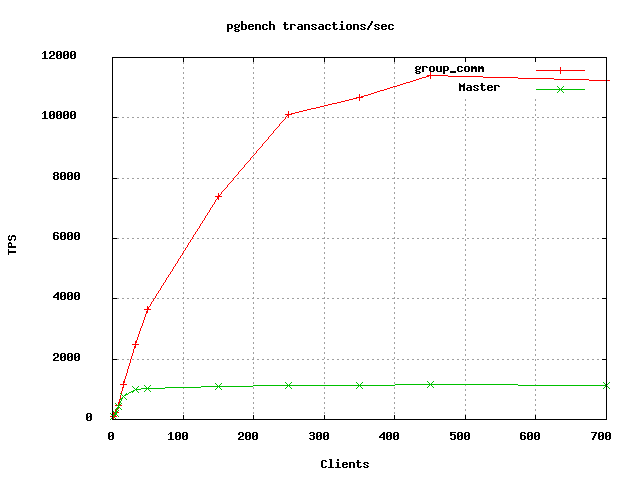

I've also attached the results of a pgbench-tools driven benchmark,

which are quite striking (Just the most relevant image - e-mail me

privately if you'd like a copy of the full report, as I don't want to

send a large PDF file to the list as a courtesy to others). Apart from

the obvious improvement in throughput, there is also a considerable

improvement in average and worst latency at all client counts.

To recap, the initial impetus to pursue this idea came from the

observation that with sync rep, we could get massive improvements in

the transactions-per-second throughput by simply adding clients. Greg

Smith performed a benchmark while in Amsterdam for the PgConf.EU

conference, which was discussed in a talk there. Over an

inter-continental connection from Amsterdam to his office in Baltimore

on the U.S. east coast, he saw TPS as reported by pgbench on what I

suppose was either an insert or update workload grow from a mere 10

TPS for a single connection to over 2000 TPS for about 300

connections. That was with the large, inherent latency imposed by

those sorts of distances (3822 miles/ 6150 km, about a 100ms ping time

on a decent connection). Quite obviously, as clients were added, the

server was able to batch increasing numbers of commits in each

confirmation message, resulting in this effect.

The main way that I've added value here is by refactoring and fixing

bugs. There were some tricky race conditions that caused the

regression tests to fail for that early draft patch, but there were a

few other small bugs too. There is an unprecedented latch pattern

introduced by this patch: Two processes (the WAL Writer and any given

connection backend) have a mutual dependency. Only one may sleep, in

which case the other is responsible for waking it. Much of the

difficulty in debugging this patch, and much of the complexity that

I've added, came from preventing both from simultaneously sleeping,

even in the face of various known failure modes like postmaster death.

If this happens, it does of course represent a denial-of-service, so

that's something that reviewers are going to want to heavily

scrutinise. I suppose that sync rep should be fine here, as it waits

on walsenders, but I haven't actually comprehensively tested the

patch, so there may be undiscovered unpleasant interactions with other

xlog related features. I can report that it passes the regression

tests successfully, and on an apparently consistently basis - I

battled with intermittent failures for a time.

Simon's original patch largely copied the syncrep.c code as an

expedient to prove the concept. Obviously this design was never

intended to get committed, and I've done some commonality and

variability analysis, refactoring to considerably slim down the new

groupcommit.c file by exposing some previously module-private

functions from syncrep.c .

I encourage others to reproduce my benchmark here. I attach a

pgbench-tools config. You can get the latest version of the tool at:

https://github.com/gregs1104/pgbench-tools

I also attach hdparm information for the disk that was used during

these benchmarks. Note that I have not disabled the write cache. It's

a Linux box, with ext4, running a recent kernel.

The benefits (and, admittedly, the controversies) of this patch go

beyond mere batching of commits: it also largely, though not entirely,

obviates the need for user backends to directly write/flush WAL, and

the WAL Writer may never sleep if it continually finds work to do -

wal_writer_delay is obsoleted, as are commit_siblings and

commit_delay. I suspect that they will not be missed. Of course, it

does all this to facilitate group commit itself. The group commit

feature does not have a GUC to control it, as this seems like

something that would be fairly pointless to turn off. FWIW, this is

currently the case for the recently introduced Maria DB group commit

implementation.

Auxiliary processes cannot use group commit. The changes made prevent

them from availing of commit_siblings/commit_delay parallelism,

because it doesn't exist anymore.

Group commit is sometimes throttled, which seems appropriate - if a

backend requests that the WAL Writer flush an LSN deemed too far from

the known flushed point, that request is rejected and the backend goes

through another path, where XLogWrite() is called. Currently the group

commit infrastructure decides that on the sole basis of there being a

volume of WAL that is equivalent in size to checkpoint_segments

between the two points. This is probably a fairly horrible heuristic,

not least since it overloads checkpoint_segments, but is of course

only a first-pass effort. Bright ideas are always welcome.

Thoughts?

--

Peter Geoghegan http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training and Services

| Attachment | Content-Type | Size |

|---|---|---|

| hd_details.txt | text/plain | 3.1 KB |

|

image/png | 4.7 KB |

| group_commit_2012_01_15.patch.gz | application/x-gzip | 20.5 KB |

| config | application/octet-stream | 1.3 KB |

| From: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

|---|---|

| To: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

| Cc: | PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-16 08:11:53 |

| Message-ID: | 4F13DBC9.3070603@enterprisedb.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 16.01.2012 00:42, Peter Geoghegan wrote:

> I've also attached the results of a pgbench-tools driven benchmark,

> which are quite striking (Just the most relevant image - e-mail me

> privately if you'd like a copy of the full report, as I don't want to

> send a large PDF file to the list as a courtesy to others). Apart from

> the obvious improvement in throughput, there is also a considerable

> improvement in average and worst latency at all client counts.

Impressive results. How about uploading the PDF to the community wiki?

> The main way that I've added value here is by refactoring and fixing

> bugs. There were some tricky race conditions that caused the

> regression tests to fail for that early draft patch, but there were a

> few other small bugs too. There is an unprecedented latch pattern

> introduced by this patch: Two processes (the WAL Writer and any given

> connection backend) have a mutual dependency. Only one may sleep, in

> which case the other is responsible for waking it. Much of the

> difficulty in debugging this patch, and much of the complexity that

> I've added, came from preventing both from simultaneously sleeping,

> even in the face of various known failure modes like postmaster death.

> If this happens, it does of course represent a denial-of-service, so

> that's something that reviewers are going to want to heavily

> scrutinise. I suppose that sync rep should be fine here, as it waits

> on walsenders, but I haven't actually comprehensively tested the

> patch, so there may be undiscovered unpleasant interactions with other

> xlog related features. I can report that it passes the regression

> tests successfully, and on an apparently consistently basis - I

> battled with intermittent failures for a time.

I think it might be simpler if it wasn't the background writer that's

responsible for "driving" the group commit queue, but the backends

themselves. When a flush request comes in, you join the queue, and if

someone else is already doing the flush, sleep until the driver wakes

you up. If no-one is doing the flush yet (ie. the queue is empty), start

doing it yourself. You'll need a state variable to keep track who's

driving the queue, but otherwise I think it would be simpler as there

would be no dependency on WAL writer.

I tend think of the group commit facility as a bus. Passengers can hop

on board at any time, and they take turns on who drives the bus. When

the first passengers hops in, there is no driver so he takes the driver

seat. When the next passenger hops in, he sees that someone is driving

the bus already, so he sits down, and places a big sign on his forehead

stating the bus stop where he wants to get off, and goes to sleep. When

the driver has reached his own bus stop, he wakes up all the passengers

who wanted to get off at the same stop or any of the earlier stops [1].

He also wakes up the passenger whose bus stop is the farthest from the

current stop, and gets off the bus. The woken-up passengers who have

already reached their stops can immediately get off the bus, and the one

who has not notices that no-one is driving the bus anymore, so he takes

the driver seat.

[1] in a real bus, a passenger would not be happy if he's woken up too

late and finds himself at the next stop instead of the one where he

wanted to go, but for group commit, that is fine.

In this arrangement, you could use the per-process semaphore for the

sleep/wakeups, instead of latches. I'm not sure if there's any

difference, but semaphores are more tried and tested, at least.

> The benefits (and, admittedly, the controversies) of this patch go

> beyond mere batching of commits: it also largely, though not entirely,

> obviates the need for user backends to directly write/flush WAL, and

> the WAL Writer may never sleep if it continually finds work to do -

> wal_writer_delay is obsoleted, as are commit_siblings and

> commit_delay.

wal_writer_delay is still needed for controlling how often asynchronous

commits are flushed to disk.

> Auxiliary processes cannot use group commit. The changes made prevent

> them from availing of commit_siblings/commit_delay parallelism,

> because it doesn't exist anymore.

Auxiliary processes have PGPROC entries too. Why can't they participate?

> Group commit is sometimes throttled, which seems appropriate - if a

> backend requests that the WAL Writer flush an LSN deemed too far from

> the known flushed point, that request is rejected and the backend goes

> through another path, where XLogWrite() is called.

Hmm, if the backend doing the big flush gets the WALWriteLock before a

bunch of group committers, the group committers will have to wait until

the big flush is finished, anyway. I presume the idea of the throttling

is to avoid the situation where a bunch of small commits need to wait

for a huge flush to finish.

Perhaps the big flusher should always join the queue, but use some

heuristic to first flush up to the previous commit request, to wake up

others quickly, and do another flush to flush its own request after that.

--

Heikki Linnakangas

EnterpriseDB http://www.enterprisedb.com

| From: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-17 14:35:28 |

| Message-ID: | CAEYLb_UqTYVZWUCRgSkVS99pRFdNaORSXaXnLXaUbpsTz-pumg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 16 January 2012 08:11, Heikki Linnakangas

<heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

> Impressive results. How about uploading the PDF to the community wiki?

Sure. http://wiki.postgresql.org/wiki/Group_commit .

> I think it might be simpler if it wasn't the background writer that's

> responsible for "driving" the group commit queue, but the backends

> themselves. When a flush request comes in, you join the queue, and if

> someone else is already doing the flush, sleep until the driver wakes you

> up. If no-one is doing the flush yet (ie. the queue is empty), start doing

> it yourself. You'll need a state variable to keep track who's driving the

> queue, but otherwise I think it would be simpler as there would be no

> dependency on WAL writer.

I think this replaces one problem with another. You've now effectively

elevated a nominated backend to the status of an auxiliary process -

do you intend to have the postmaster look after it, as with any other

auxiliary process? I'm not sure that that is a more difficult problem

to solve, but I suspect so. At least my proposal can have any one of

the backends, both currently participating in group commit and yet to,

wake up the WAL Writer.

> I tend think of the group commit facility as a bus. Passengers can hop on

> board at any time, and they take turns on who drives the bus. When the first

> passengers hops in, there is no driver so he takes the driver seat. When the

> next passenger hops in, he sees that someone is driving the bus already, so

> he sits down, and places a big sign on his forehead stating the bus stop

> where he wants to get off, and goes to sleep. When the driver has reached

> his own bus stop, he wakes up all the passengers who wanted to get off at

> the same stop or any of the earlier stops [1]. He also wakes up the

> passenger whose bus stop is the farthest from the current stop, and gets off

> the bus. The woken-up passengers who have already reached their stops can

> immediately get off the bus, and the one who has not notices that no-one is

> driving the bus anymore, so he takes the driver seat.

>

> [1] in a real bus, a passenger would not be happy if he's woken up too late

> and finds himself at the next stop instead of the one where he wanted to go,

> but for group commit, that is fine.

>

> In this arrangement, you could use the per-process semaphore for the

> sleep/wakeups, instead of latches. I'm not sure if there's any difference,

> but semaphores are more tried and tested, at least.

Yes, and I expect that this won't be the last time someone uses a bus

analogy in relation to this!

The proposed patch is heavily based on sync rep, which I'd have

imagined was more tried and tested than any proposed completely

alternative implementation, as it is basically a generalisation of

exactly the same principle, WAL Writer changes notwithstanding. I

would have imagined that that aspect would be particularly approved

of.

> wal_writer_delay is still needed for controlling how often asynchronous

> commits are flushed to disk.

That had occurred to me of course, but has anyone ever actually

tweaked wal_writer_delay with adjusting the behaviour of asynchronous

commits in mind? I'm pretty sure that the answer is no. I have a

slight preference for obsoleting it as a consequence of introducing

group commit, but I don't think that it matters that much.

>> Auxiliary processes cannot use group commit. The changes made prevent

>> them from availing of commit_siblings/commit_delay parallelism,

>> because it doesn't exist anymore.

>

> Auxiliary processes have PGPROC entries too. Why can't they participate?

It was deemed to be a poor design decision to effectively create a

dependency on the WAL Writer among other auxiliary processes, as to do

so would perhaps compromise the way in which the postmaster notices

and corrects isolated failures. Maybe I'll revisit that assessment,

but I am not convinced that it's worth the very careful analysis of

the implications of such an unprecedented dependency, without there

being some obvious advantage. It it's a question of their being

deprived of commit_siblings "group commit", well, we know from

experience that people didn't tend to touch it a whole lot anyway.

>> Group commit is sometimes throttled, which seems appropriate - if a

>> backend requests that the WAL Writer flush an LSN deemed too far from

>> the known flushed point, that request is rejected and the backend goes

>> through another path, where XLogWrite() is called.

>

> Hmm, if the backend doing the big flush gets the WALWriteLock before a bunch

> of group committers, the group committers will have to wait until the big

> flush is finished, anyway. I presume the idea of the throttling is to avoid

> the situation where a bunch of small commits need to wait for a huge flush

> to finish.

Exactly. Of course, you're never going to see that situation with

pgbench. I don't have much data to inform exactly what the right

trade-off is here, or some generic approximation of it across

platforms and hardware - other people will know more about this than I

do. While I have a general sense that the cost of flushing a single

page of data is the same as flushing a relatively much larger amount

of data, I cannot speak to much of an understanding of what that trade

off might be for larger amounts of data, where the question of

modelling some trade-off between throughput and latency arises,

especially with all the baggage that the implementation carries such

as whether or not we're using full_page_writes, hardware and so on.

Something simple will probably work well.

> Perhaps the big flusher should always join the queue, but use some heuristic

> to first flush up to the previous commit request, to wake up others quickly,

> and do another flush to flush its own request after that.

Maybe, but we should decide what a big flusher looks like first. That

way, if we can't figure out a way to do what you describe with it in

time for 9.2, we can at least do what I'm already doing.

--

Peter Geoghegan http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training and Services

| From: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

|---|---|

| To: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

| Cc: | PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-17 17:37:16 |

| Message-ID: | 4F15B1CC.6050900@enterprisedb.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 17.01.2012 16:35, Peter Geoghegan wrote:

> On 16 January 2012 08:11, Heikki Linnakangas

> <heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

>> I think it might be simpler if it wasn't the background writer that's

>> responsible for "driving" the group commit queue, but the backends

>> themselves. When a flush request comes in, you join the queue, and if

>> someone else is already doing the flush, sleep until the driver wakes you

>> up. If no-one is doing the flush yet (ie. the queue is empty), start doing

>> it yourself. You'll need a state variable to keep track who's driving the

>> queue, but otherwise I think it would be simpler as there would be no

>> dependency on WAL writer.

>

> I think this replaces one problem with another. You've now effectively

> elevated a nominated backend to the status of an auxiliary process -

> do you intend to have the postmaster look after it, as with any other

> auxiliary process?

The GroupCommitWaitForLSN() call happens within a critical section. If

the process dies, you're screwed no matter what. It's not very different

from the current situation where if one backend flushes the WAL, another

backend will notice that once it gets the WALWriteLock, and returns quickly.

>> wal_writer_delay is still needed for controlling how often asynchronous

>> commits are flushed to disk.

>

> That had occurred to me of course, but has anyone ever actually

> tweaked wal_writer_delay with adjusting the behaviour of asynchronous

> commits in mind?

I found it very helpful to reduce wal_writer_delay in pgbench tests,

when running with synchronous_commit=off. The reason is that hint bits

don't get set until the commit record is flushed to disk, so making the

flushes more frequent reduces the contention on the clog. However, Simon

made async commits nudge WAL writer if the WAL page fills up, so I'm not

sure how relevant that experience is anymore.

--

Heikki Linnakangas

EnterpriseDB http://www.enterprisedb.com

| From: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-17 18:15:33 |

| Message-ID: | CAEYLb_UW6e0Xr4S4T=tTxr-XcQRuNu4PFi5Qja1fS5tKFi_56Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 17 January 2012 17:37, Heikki Linnakangas

<heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

> I found it very helpful to reduce wal_writer_delay in pgbench tests, when

> running with synchronous_commit=off. The reason is that hint bits don't get

> set until the commit record is flushed to disk, so making the flushes more

> frequent reduces the contention on the clog. However, Simon made async

> commits nudge WAL writer if the WAL page fills up, so I'm not sure how

> relevant that experience is anymore.

It's quite possible that the WAL Writer will spin continuously, if

given enough work to do, with this patch.

Although it's hard to tell from the graph I sent, there is a modest

improvement in TPS for even a single client. See the tables in the

PDF.

--

Peter Geoghegan http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training and Services

| From: | Jim Nasby <jim(at)nasby(dot)net> |

|---|---|

| To: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

| Cc: | PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-18 00:21:57 |

| Message-ID: | 07C6E9E8-9F4D-44CA-96DB-75B0219AC7DA@nasby.net |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Jan 15, 2012, at 4:42 PM, Peter Geoghegan wrote:

> Attached is a patch that myself and Simon Riggs collaborated on. I

> took the group commit patch that Simon posted to the list back in

> November, and partially rewrote it.

Forgive me if this is a dumb question, but I noticed a few places doing things like:

if (...)

Blah();

else

{

...

}

Is that allowed PG formatting? I thought that if braces were required on one branch of an if they were supposed to go on both sides.

Also, I didn't see any README changes in the patch... perhaps this is big enough to warrant them?

--

Jim C. Nasby, Database Architect jim(at)nasby(dot)net

512.569.9461 (cell) http://jim.nasby.net

| From: | Alvaro Herrera <alvherre(at)commandprompt(dot)com> |

|---|---|

| To: | Jim Nasby <jim(at)nasby(dot)net> |

| Cc: | Peter Geoghegan <peter(at)2ndquadrant(dot)com>, Pg Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-18 00:31:06 |

| Message-ID: | 1326846575-sup-4645@alvh.no-ip.org |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Excerpts from Jim Nasby's message of mar ene 17 21:21:57 -0300 2012:

> On Jan 15, 2012, at 4:42 PM, Peter Geoghegan wrote:

> > Attached is a patch that myself and Simon Riggs collaborated on. I

> > took the group commit patch that Simon posted to the list back in

> > November, and partially rewrote it.

>

> Forgive me if this is a dumb question, but I noticed a few places doing things like:

>

> if (...)

> Blah();

> else

> {

> ...

> }

>

> Is that allowed PG formatting? I thought that if braces were required on one branch of an if they were supposed to go on both sides.

Yeah, we even used to have pg_indent remove braces around single

statements, so if you had one statement in the "if" branch and more

around the other one, pg_indent would have left things like that anyway.

(This particular pg_indent behavior got removed because it messed up

PG_TRY blocks.)

--

Álvaro Herrera <alvherre(at)commandprompt(dot)com>

The PostgreSQL Company - Command Prompt, Inc.

PostgreSQL Replication, Consulting, Custom Development, 24x7 support

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | Peter Geoghegan <peter(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-18 01:23:23 |

| Message-ID: | CA+TgmoZ7XCR-ZAqJ_1aZyPsHcXnX7w-M1CbqWhpOO-Vu_e8DNQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Jan 17, 2012 at 12:37 PM, Heikki Linnakangas

<heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

> I found it very helpful to reduce wal_writer_delay in pgbench tests, when

> running with synchronous_commit=off. The reason is that hint bits don't get

> set until the commit record is flushed to disk, so making the flushes more

> frequent reduces the contention on the clog. However, Simon made async

> commits nudge WAL writer if the WAL page fills up, so I'm not sure how

> relevant that experience is anymore.

There's still a small but measurable effect there in master. I think

we might be able to make it fully auto-tuning, but I don't think we're

fully there yet (not sure how much this patch changes that equation).

I suggested a design similar to the one you just proposed to Simon

when he originally suggested this feature. It seems that if the WAL

writer is the only one doing WAL flushes, then there must be some IPC

overhead - and context switching - involved whenever WAL is flushed.

But clearly we're saving something somewhere else, on the basis of

Peter's results, so maybe it's not worth worrying about. It does seem

pretty odd to have all the regular backends going through the WAL

writer and the auxiliary processes using a different mechanism,

though. If we got rid of that, maybe WAL writer wouldn't even require

a lock, if there's only one process that can be doing it at a time.

What happens in standalone mode?

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com>, Peter Geoghegan <peter(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-18 22:38:30 |

| Message-ID: | CA+U5nM+Od94quNOsObo7gfiCRijwtJSWko8Gz9tyorHY5e96Sw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jan 18, 2012 at 1:23 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> On Tue, Jan 17, 2012 at 12:37 PM, Heikki Linnakangas

> <heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

>> I found it very helpful to reduce wal_writer_delay in pgbench tests, when

>> running with synchronous_commit=off. The reason is that hint bits don't get

>> set until the commit record is flushed to disk, so making the flushes more

>> frequent reduces the contention on the clog. However, Simon made async

>> commits nudge WAL writer if the WAL page fills up, so I'm not sure how

>> relevant that experience is anymore.

Still completely relevant and orthogonal to this discussion. The patch

retains multi-modal behaviour.

> There's still a small but measurable effect there in master. I think

> we might be able to make it fully auto-tuning, but I don't think we're

> fully there yet (not sure how much this patch changes that equation).

>

> I suggested a design similar to the one you just proposed to Simon

> when he originally suggested this feature. It seems that if the WAL

> writer is the only one doing WAL flushes, then there must be some IPC

> overhead - and context switching - involved whenever WAL is flushed.

> But clearly we're saving something somewhere else, on the basis of

> Peter's results, so maybe it's not worth worrying about. It does seem

> pretty odd to have all the regular backends going through the WAL

> writer and the auxiliary processes using a different mechanism,

> though. If we got rid of that, maybe WAL writer wouldn't even require

> a lock, if there's only one process that can be doing it at a time.

When we did sync rep it made sense to have the WALSender do the work

and for others to just wait. It would be quite strange to require a

different design for essentially the same thing for normal commits and

WAL flushes to local disk. I should mention the original proposal for

streaming replication had each backend send data to standby

independently and that was recognised as a bad idea after some time.

Same for sync rep also.

The gain is that previously there was measurable contention for the

WALWriteLock, now there is none. Plus the gang effect continues to

work even when the database gets busy, which isn't true of piggyback

commits as we use now.

Not sure why its odd to have backends do one thing and auxiliaries do

another. The whole point of auxiliary processes is that they do a

specific thing different to normal backends. Anyway, the important

thing is to have auxiliary processes be independent of each other as

much as possible, which simplifies error handling and state logic in

the postmaster.

With regard to context switching, we're making a kernel call to fsync,

so we'll get a context switch anyway. The whole process is similar to

the way lwlock wake up works.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

| Cc: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com>, Peter Geoghegan <peter(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-19 17:40:08 |

| Message-ID: | CA+Tgmoa5LRCrCevAbqjAZxnqX7Qgv0sK9GpxsOwm2Yg3=srZ5Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jan 18, 2012 at 5:38 PM, Simon Riggs <simon(at)2ndquadrant(dot)com> wrote:

> On Wed, Jan 18, 2012 at 1:23 AM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

>> On Tue, Jan 17, 2012 at 12:37 PM, Heikki Linnakangas

>> <heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

>>> I found it very helpful to reduce wal_writer_delay in pgbench tests, when

>>> running with synchronous_commit=off. The reason is that hint bits don't get

>>> set until the commit record is flushed to disk, so making the flushes more

>>> frequent reduces the contention on the clog. However, Simon made async

>>> commits nudge WAL writer if the WAL page fills up, so I'm not sure how

>>> relevant that experience is anymore.

>

> Still completely relevant and orthogonal to this discussion. The patch

> retains multi-modal behaviour.

I don't know what you mean by this. I think removing wal_writer_delay

is premature, because I think it still may have some utility, and the

patch removes it. That's a separate change that should be factored

out of this patch and discussed separately.

>> There's still a small but measurable effect there in master. I think

>> we might be able to make it fully auto-tuning, but I don't think we're

>> fully there yet (not sure how much this patch changes that equation).

>>

>> I suggested a design similar to the one you just proposed to Simon

>> when he originally suggested this feature. It seems that if the WAL

>> writer is the only one doing WAL flushes, then there must be some IPC

>> overhead - and context switching - involved whenever WAL is flushed.

>> But clearly we're saving something somewhere else, on the basis of

>> Peter's results, so maybe it's not worth worrying about. It does seem

>> pretty odd to have all the regular backends going through the WAL

>> writer and the auxiliary processes using a different mechanism,

>> though. If we got rid of that, maybe WAL writer wouldn't even require

>> a lock, if there's only one process that can be doing it at a time.

>

> When we did sync rep it made sense to have the WALSender do the work

> and for others to just wait. It would be quite strange to require a

> different design for essentially the same thing for normal commits and

> WAL flushes to local disk. I should mention the original proposal for

> streaming replication had each backend send data to standby

> independently and that was recognised as a bad idea after some time.

> Same for sync rep also.

I don't think those cases are directly comparable. SR is talking to

another machine, and I can't imagine that there is a terribly

convenient or portable way for every backend that needs one to get a

hold of the file descriptor for the socket. Even if it could, the

data is sent as a stream, so if multiple backends sent to the same

file descriptor you'd have to have some locking to prevent messages

from getting interleaved. Or else you could have multiple

connections, or use UDP, but that gets rather complex. Anyway, none

of this is an issue for file I/O: anybody can open the file, and if

two backends write data at different offsets at the same time - or the

same data at the same offset at the same time - there's no problem.

So the fact that it wasn't a good idea for SR doesn't convince me that

it's also a bad idea here.

On the other hand, I'm not saying we *should* do it that way, either -

i.e. I am not trying to "require a different design" just because it's

fun to make people change things. Rather, I am trying to figure out

whether the design you've chosen is in fact the best one, and part of

that involves reasoning about why it might or might not be. There are

obvious reasons to think that having process A kick process B and go

to sleep, then have process B do some work and wake up process A might

be less efficient than having process A just do the work itself, in

the uncontended case. The fact that that isn't happening seems

awfully strange to me; it's hard to understand why 3 system calls are

faster than one. That suggests that either the current system is

badly broken in some way that this patch fixes (in which case, it

would be nice to know what the broken thing is) or that there's an

opportunity for further optimization of the new patch (either now or

later, depending on how much work we're talking about). Just to be

clear, it makes perfect sense that the new system is faster in the

contended case, and the benchmark numbers are very impressive. It's a

lot harder to understand why it's not slower in the uncontended case.

> Not sure why its odd to have backends do one thing and auxiliaries do

> another. The whole point of auxiliary processes is that they do a

> specific thing different to normal backends. Anyway, the important

> thing is to have auxiliary processes be independent of each other as

> much as possible, which simplifies error handling and state logic in

> the postmaster.

Yeah, I guess the shutdown sequence could get a bit complex if we try

to make everyone go through the WAL writer all the time. But I wonder

if we could rejiggering things somehow so that everything goes through

WAL writer if its dead.

+ * Wait for group commit, and then return true, if group commit serviced the

+ * request (not necessarily successfully). Otherwise, return false

and fastpath

+ * out of here, allowing the backend to make alternative arrangements to flush

+ * its WAL in a more granular fashion. This can happen because the record that

+ * the backend requests to have flushed in so far into the future

that to group

+ * commit it would

This trails off in mid-sentence.

+ if (delta > XLOG_SEG_SIZE * CheckPointSegments ||

+ !ProcGlobal->groupCommitAvailable)

That seems like a gigantic value. I would think that we'd want to

forget about group commit any time we're behind by more than one

segment (maybe less).

+ if (ProcDiePending || QueryCancelPending)

+ {

+ GroupCommitCancelWait();

+

+ /*

+ * Let out-of-line interrupt handler take it

from here. Cannot raise

+ * an error here because we're in an enclosing

critical block.

+ */

+ break;

+ }

Presumably in this case we need to return false, not true. TBH, I

feel like this error handling is quite fragile, a fragility it

inherits from sync rep, on which it is based. I am not sure I have a

better idea, though.

+ /*

+ * Backends may still be waiting to have their transactions

committed in batch,

+ * but calling this function prevents any backend from using group commit, and

+ * thus from having a dependency on the WAL Writer.

+ *

+ * When the WAL Writer finishes servicing those remaining backends,

it will not

+ * have any additional work to do, and may shutdown.

+ */

+ void

+ GroupCommitDisable(void)

+ {

+ ProcGlobal->groupCommitAvailable = false;

+ }

I can't imagine that this is safe against memory ordering issues.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Simon Riggs <simon(at)2ndquadrant(dot)com>, Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-20 03:46:54 |

| Message-ID: | CAEYLb_UcWD+EOYkat=ZF-SyZP=bhHJ2ciZTeVn6=K3RWvje6RQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 19 January 2012 17:40, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> I don't know what you mean by this. I think removing wal_writer_delay

> is premature, because I think it still may have some utility, and the

> patch removes it. That's a separate change that should be factored

> out of this patch and discussed separately.

FWIW, I don't really care too much if we keep wal_writer_delay,

provided it is only used in place of the patch's

WALWRITER_NORMAL_TIMEOUT constant. I will note in passing that I doubt

that the effect with asynchronous commits and hint bits is pronounced

enough to have ever transferred through to someone making a practical

recommendation to reduce wal_writer_delay to ameliorate clog

contention.

>> When we did sync rep it made sense to have the WALSender do the work

>> and for others to just wait. It would be quite strange to require a

>> different design for essentially the same thing for normal commits and

>> WAL flushes to local disk. I should mention the original proposal for

>> streaming replication had each backend send data to standby

>> independently and that was recognised as a bad idea after some time.

>> Same for sync rep also.

>

> I don't think those cases are directly comparable. SR is talking to

> another machine, and I can't imagine that there is a terribly

> convenient or portable way for every backend that needs one to get a

> hold of the file descriptor for the socket. Even if it could, the

> data is sent as a stream, so if multiple backends sent to the same

> file descriptor you'd have to have some locking to prevent messages

> from getting interleaved. Or else you could have multiple

> connections, or use UDP, but that gets rather complex. Anyway, none

> of this is an issue for file I/O: anybody can open the file, and if

> two backends write data at different offsets at the same time - or the

> same data at the same offset at the same time - there's no problem.

> So the fact that it wasn't a good idea for SR doesn't convince me that

> it's also a bad idea here.

>

> On the other hand, I'm not saying we *should* do it that way, either -

> i.e. I am not trying to "require a different design" just because it's

> fun to make people change things. Rather, I am trying to figure out

> whether the design you've chosen is in fact the best one, and part of

> that involves reasoning about why it might or might not be. There are

> obvious reasons to think that having process A kick process B and go

> to sleep, then have process B do some work and wake up process A might

> be less efficient than having process A just do the work itself, in

> the uncontended case. The fact that that isn't happening seems

> awfully strange to me; it's hard to understand why 3 system calls are

> faster than one. That suggests that either the current system is

> badly broken in some way that this patch fixes (in which case, it

> would be nice to know what the broken thing is) or that there's an

> opportunity for further optimization of the new patch (either now or

> later, depending on how much work we're talking about). Just to be

> clear, it makes perfect sense that the new system is faster in the

> contended case, and the benchmark numbers are very impressive. It's a

> lot harder to understand why it's not slower in the uncontended case.

>

>> Not sure why its odd to have backends do one thing and auxiliaries do

>> another. The whole point of auxiliary processes is that they do a

>> specific thing different to normal backends. Anyway, the important

>> thing is to have auxiliary processes be independent of each other as

>> much as possible, which simplifies error handling and state logic in

>> the postmaster.

>

> Yeah, I guess the shutdown sequence could get a bit complex if we try

> to make everyone go through the WAL writer all the time. But I wonder

> if we could rejiggering things somehow so that everything goes through

> WAL writer if its dead.

I'm not sure what you mean by this last bit. It sounds a bit hazardous.

My problem with nominating a backend to the status of an auxiliary is

that no matter what way you cut it, it increases the failure surface

area, so to speak.

I'm not sure why Heikki thinks that it follows that having a

dependency on some backend is simpler than having one on an auxiliary

process. As to the question of IPC and context switch overhead, I'd

speculate that protecting access to a data structure with book keeping

information regarding which backend is currently the driver and so on

might imply considerably more overhead than IPC and context switching.

It might also be that having WAL Writer almost solely responsible for

this might facilitate more effective use of CPU cache.

On most modern architectures, system calls don't actually cause a full

context switch. The kernel can just enter a "mode switch" (go from

user mode to kernel mode, and then back to user mode). You can observe

this effect with vmstat. That's how 3 system calls might not look much

more expensive than 1.

> + if (delta > XLOG_SEG_SIZE * CheckPointSegments ||

> + !ProcGlobal->groupCommitAvailable)

>

> That seems like a gigantic value. I would think that we'd want to

> forget about group commit any time we're behind by more than one

> segment (maybe less).

I'm sure that you're right - I myself described it as horrible in my

original mail. I think that whatever value we set this to ought to be

well reasoned. Right now, your magic number doesn't seem much better

than my bogus heuristic (which only ever served as a placeholder

implementation that hinted at a well-principled formula). What's your

basis for suggesting that that limit would always be close to optimal?

Once again, I ask the question: what does a big flusher look like?

> + if (ProcDiePending || QueryCancelPending)

> + {

> + GroupCommitCancelWait();

> +

> + /*

> + * Let out-of-line interrupt handler take it

> from here. Cannot raise

> + * an error here because we're in an enclosing

> critical block.

> + */

> + break;

> + }

>

> Presumably in this case we need to return false, not true.

No, that is not an error. As described in the comment above that

function, the return value indicates if group commit serviced the

request, not necessarily successfully. If we were to return false,

we'd be acting as if group commit just didn't want to flush that LSN,

necessitating making alternative arrangements (calling XLogWrite()).

However, we should just hurry up and get to the interrupt handler to

error and report failure to the client (though not before executing

the code immediately before "return true" at the end of the function).

Maybe you could argue that it would be better to do that anyway, but I

think that it could mask problems and unnecessarily complicate

matters.

> TBH, I feel like this error handling is quite fragile, a fragility it

> inherits from sync rep, on which it is based. I am not sure I have a

> better idea, though.

I agree with this analysis. However, since no better alternative

suggests itself, we may find that an XXX comment will go a long way.

> + void

> + GroupCommitDisable(void)

> + {

> + ProcGlobal->groupCommitAvailable = false;

> + }

>

> I can't imagine that this is safe against memory ordering issues.

That had occurred to me. Strictly speaking, it doesn't necessarily

have to be, provided that some architecture with weak memory ordering

is not inclined to take an inordinate amount of time, or even forever,

to notice that the variable has been set to false. WAL Writer calls

this to "turn the tap off" to allow it to finish its group commit

backlog (allowing those backends to shutdown) and shutdown itself, but

it doesn't actually assume that turning the tap off will be

immediately effective.

However, now that I think about it, even if my assumption about the

behaviour of all machines regarding memory ordering there doesn't fall

down, it does seem possible that GroupCommitShutdownReady() could

return true, but that status could immediately change because some

backend on Alpha or something didn't see that the flag was set, so

your point is well taken.

By the way, how goes the introduction of memory barriers to Postgres?

I'm aware that you committed an implementation back in September, but

am not sure what the plan is as regards using them in 9.2.

--

Peter Geoghegan http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training and Services

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

| Cc: | Simon Riggs <simon(at)2ndquadrant(dot)com>, Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-20 15:30:33 |

| Message-ID: | CA+Tgmoa+jrb_vXckcExZNOzJcLJBaXq-RVoJhMbRtyZdaQMSMg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Thu, Jan 19, 2012 at 10:46 PM, Peter Geoghegan <peter(at)2ndquadrant(dot)com> wrote:

> On 19 January 2012 17:40, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

>> I don't know what you mean by this. I think removing wal_writer_delay

>> is premature, because I think it still may have some utility, and the

>> patch removes it. That's a separate change that should be factored

>> out of this patch and discussed separately.

>

> FWIW, I don't really care too much if we keep wal_writer_delay,

> provided it is only used in place of the patch's

> WALWRITER_NORMAL_TIMEOUT constant. I will note in passing that I doubt

> that the effect with asynchronous commits and hint bits is pronounced

> enough to have ever transferred through to someone making a practical

> recommendation to reduce wal_writer_delay to ameliorate clog

> contention.

It was very visible in some benchmarking Heikki did, and I was able to

reproduce it.

>> Yeah, I guess the shutdown sequence could get a bit complex if we try

>> to make everyone go through the WAL writer all the time. But I wonder

>> if we could rejiggering things somehow so that everything goes through

>> WAL writer if its dead.

>

> I'm not sure what you mean by this last bit. It sounds a bit hazardous.

That last "if" was supposed to say "unless", which may contribute to

the confusion.

> My problem with nominating a backend to the status of an auxiliary is

> that no matter what way you cut it, it increases the failure surface

> area, so to speak.

I think that's the wrong way of thinking about it. Imagine this: we

maintain a queue of people who are waiting on the current WAL flush,

the current-flush-to LSN, and a queue of people who are waiting on the

next WAL flush, and a leader. All this data is protected by a

spinlock. When you want to flush WAL, you grab the spinlock. If the

current-flush-to LSN is greater than the LSN you need, you add

yourself to the waiting-on-current-flush queue, release the spinlock,

and go to sleep. Otherwise, if there's no leader, you become the

leader, enter your flush LSN as the current-flush-to-LSN, and release

the spinlock. If there is a leader, you add yourself to the

waiting-on-next-flush queue, release the spinlock, and sleep.

If you become the leader, you perform the flush. Then you retake the

spinlock, dequeue anyone waiting on the current flush, move all of the

next flush waiters (or those within a certain LSN distance) to the

current flush list, remember who is at the head of that new queue, and

release the spinlock. You then set a flag in the PGPROC of the

backend now at the head of the next-flush queue and wake him up; he

checks that flag on waking to know whether he is the leader or whether

he's just been woken because his flush is done. After waking him so

the next flush can get started, you wake all the people who were

waiting on the flush you already did.

This may or may not be a good design, but I don't think it has any

more failure surface area than what you have here. In particular,

whether or not the WAL writer is running doesn't matter; the system

can run just fine without it, and can even still do group commit. To

look at it another way, it's not a question of whether we're treating

a regular backend as an auxiliary process; there's no rule anywhere

that backends can't be dependent on the proper operation of other

backends for proper functioning - there are MANY places that have that

property, including LWLockAcquire() and LWLockRelease(). Nor is there

any rule that background processes are more reliable than foreground

processes, nor do I believe they are. Much of the existing patch's

failure surface seems to me to come from the coordination it requires

between ordinary backends and the background writer, and possible race

conditions appertaining thereto: WAL writer dies, backend dies,

postmaster dies, postmaster and WAL writer die together, etc.

>> + if (delta > XLOG_SEG_SIZE * CheckPointSegments ||

>> + !ProcGlobal->groupCommitAvailable)

>>

>> That seems like a gigantic value. I would think that we'd want to

>> forget about group commit any time we're behind by more than one

>> segment (maybe less).

>

> I'm sure that you're right - I myself described it as horrible in my

> original mail. I think that whatever value we set this to ought to be

> well reasoned. Right now, your magic number doesn't seem much better

> than my bogus heuristic (which only ever served as a placeholder

> implementation that hinted at a well-principled formula). What's your

> basis for suggesting that that limit would always be close to optimal?

It's probably not - I suspect even a single WAL segment still too

large. I'm pretty sure that it would be safe to always flush up to

the next block boundary, because we always write the whole block

anyway even if it's only partially filled. So there's no possible

regression there. Anything larger than "the end of the current 8kB

block" is going to be a trade-off between latency and throughput, and

it seems to me that there's probably only one way to figure out what's

reasonable: test a bunch of different values and see which ones

perform well in practice. Maybe we could answer part of the question

by writing a test program which does repeated sequential writes of

increasingly-large chunks of data. We might find out for example that

64kB is basically the same as 8kB because most of the overhead is in

the system call anyway, but beyond that the curve kinks. Or it may be

that 16kB is already twice as slow as 8kB, or that you can go up to

1MB without a problem. I don't see a way to know that without testing

it on a couple different pieces of hardware and seeing what happens.

>> + if (ProcDiePending || QueryCancelPending)

>> + {

>> + GroupCommitCancelWait();

>> +

>> + /*

>> + * Let out-of-line interrupt handler take it

>> from here. Cannot raise

>> + * an error here because we're in an enclosing

>> critical block.

>> + */

>> + break;

>> + }

>>

>> Presumably in this case we need to return false, not true.

>

> No, that is not an error. As described in the comment above that

> function, the return value indicates if group commit serviced the

> request, not necessarily successfully. If we were to return false,

> we'd be acting as if group commit just didn't want to flush that LSN,

> necessitating making alternative arrangements (calling XLogWrite()).

> However, we should just hurry up and get to the interrupt handler to

> error and report failure to the client (though not before executing

> the code immediately before "return true" at the end of the function).

> Maybe you could argue that it would be better to do that anyway, but I

> think that it could mask problems and unnecessarily complicate

> matters.

Ugh. Our current system doesn't even require this code to be

interruptible, I think: you can't receive cancel or die interrupts

either while waiting for an LWLock or while holding it.

> By the way, how goes the introduction of memory barriers to Postgres?

> I'm aware that you committed an implementation back in September, but

> am not sure what the plan is as regards using them in 9.2.

Well, I was expecting a few patches to go in that needed them, but so

far that hasn't happened. I think it would be a fine thing if we had

a good reason to commit some code that uses them, so that we can watch

the buildfarm turn pretty colors.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

|---|---|

| To: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Simon Riggs <simon(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-20 22:30:06 |

| Message-ID: | 4F19EAEE.2050501@enterprisedb.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I spent some time cleaning this up. Details below, but here are the

highlights:

* Reverted the removal of wal_writer_delay

* Doesn't rely on WAL writer. Any process can act as the "leader" now.

* Removed heuristic on big flushes

* Uses PGSemaphoreLock/Unlock instead of latches

On 20.01.2012 17:30, Robert Haas wrote:

> On Thu, Jan 19, 2012 at 10:46 PM, Peter Geoghegan<peter(at)2ndquadrant(dot)com> wrote:

>>> + if (delta> XLOG_SEG_SIZE * CheckPointSegments ||

>>> + !ProcGlobal->groupCommitAvailable)

>>>

>>> That seems like a gigantic value. I would think that we'd want to

>>> forget about group commit any time we're behind by more than one

>>> segment (maybe less).

>>

>> I'm sure that you're right - I myself described it as horrible in my

>> original mail. I think that whatever value we set this to ought to be

>> well reasoned. Right now, your magic number doesn't seem much better

>> than my bogus heuristic (which only ever served as a placeholder

>> implementation that hinted at a well-principled formula). What's your

>> basis for suggesting that that limit would always be close to optimal?

>

> It's probably not - I suspect even a single WAL segment still too

> large. I'm pretty sure that it would be safe to always flush up to

> the next block boundary, because we always write the whole block

> anyway even if it's only partially filled. So there's no possible

> regression there. Anything larger than "the end of the current 8kB

> block" is going to be a trade-off between latency and throughput, and

> it seems to me that there's probably only one way to figure out what's

> reasonable: test a bunch of different values and see which ones

> perform well in practice. Maybe we could answer part of the question

> by writing a test program which does repeated sequential writes of

> increasingly-large chunks of data. We might find out for example that

> 64kB is basically the same as 8kB because most of the overhead is in

> the system call anyway, but beyond that the curve kinks. Or it may be

> that 16kB is already twice as slow as 8kB, or that you can go up to

> 1MB without a problem. I don't see a way to know that without testing

> it on a couple different pieces of hardware and seeing what happens.

I ripped away that heuristic for a flush that's "too large". After

pondering it for a while, I came to the conclusion that as implemented

in the patch, it was pointless. The thing is, if the big flush doesn't

go through the group commit machinery, it's going to grab the

WALWriteLock straight away. Before any smaller commits can proceed, they

will need to grab that lock anyway, so the effect is the same as if the

big flush had just joined the queue.

Maybe we should have a heuristic to split a large flush into smaller

chunks. The WAL segment boundary would be a quite natural split point,

for example, because when crossing the file boundary you have to issue

separate fsync()s for the files anyway. But I'm happy with leaving that

out for now, it's not any worse than it used to be without group commit

anyway.

> Ugh. Our current system doesn't even require this code to be

> interruptible, I think: you can't receive cancel or die interrupts

> either while waiting for an LWLock or while holding it.

Right. Or within HOLD/RESUME_INTERRUPTS blocks.

The patch added some CHECK_FOR_INTERRUPTS() calls into various places in

the commit/abort codepaths, but that's all dead code; they're all within

a HOLD/RESUME_INTERRUPTS blocks.

I replaced the usage of latches with the more traditional

PGSemaphoreLock/Unlock. It semaphore model works just fine in this case,

where we have a lwlock to guard the wait queue, and when a process is

waiting we know it will be woken up or something messed up at a pretty

low level. We don't need a timeout or to wake up at other signals while

waiting. Furthermore, the WAL writer didn't have a latch before this

patch; it's not many lines of code to initialize the latch and set up

the signal handler for it, but it already has a semaphore that's ready

to use.

I wonder if we should rename the file into "xlogflush.c" or something

like that, to make it clear that this works with any XLOG flushes, not

just commits? Group commit is the usual term for this feature, so we

should definitely mention that in the comments, but it might be better

to rename the files/functions to emphasize that this is about WAL

flushing in general.

This probably needs some further cleanup to fix things I've broken, and

I haven't done any performance testing, but it's progress. Do you have a

shell script or something that you used for the performance tests that I

could run? Or would you like to re-run the tests you did earlier with

this patch?

--

Heikki Linnakangas

EnterpriseDB http://www.enterprisedb.com

| Attachment | Content-Type | Size |

|---|---|---|

| group_commit_2012_01_21.patch | text/x-diff | 42.1 KB |

| From: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Simon Riggs <simon(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-21 03:13:56 |

| Message-ID: | CAEYLb_XfjM2qQtdo9oRHJ+SnTaBteevTq=v6guFRRDffE1Fh6A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 20 January 2012 22:30, Heikki Linnakangas

<heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

> Maybe we should have a heuristic to split a large flush into smaller chunks.

> The WAL segment boundary would be a quite natural split point, for example,

> because when crossing the file boundary you have to issue separate fsync()s

> for the files anyway. But I'm happy with leaving that out for now, it's not

> any worse than it used to be without group commit anyway.

Let's quantify how much of a problem that is first.

> The patch added some CHECK_FOR_INTERRUPTS() calls into various places in the

> commit/abort codepaths, but that's all dead code; they're all within a

> HOLD/RESUME_INTERRUPTS blocks.

Fair enough, but do you think it's acceptable to say "well, we can't

have errors within critical blocks anyway, and nor can the driver, so

just assume that the driver will successfully service the request"?

> Furthermore, the WAL writer didn't have a latch before this patch; it's not

> many lines of code to initialize the latch and set up the signal handler for

> it, but it already has a semaphore that's ready to use.

Uh, yes it did - WalWriterDelay is passed to WaitLatch(). It didn't

use the process latch as I did (which is initialised anyway), though I

believe it should have (on general principal, to avoid invalidation

issues when generic handlers are registered, plus because the process

latch is already initialised and available), which is why I changed

it. Whatever you do with group commit, you're going to want to look at

the changes made to the WAL Writer in my original patch outside of the

main loop, because there are one or two fixes for it included

(registering a usr1 signal handler and saving errno in existing

handlers), and because we need an alternative way of power saving if

you're not going to include the mechanism originally proposed - maybe

something similar to what has been done for the BGWriter in my patch

for that. At 5 wake-ups per second by default, the process is by a

wide margin the biggest culprit (except BGWriter, which is also 5 by

default, but that is addressed by my other patch that you're

reviewing). I want to fix that problem too, and possibly investigate

if there's something to be done about the checkpointer (though that

only has a 5 second timeout, so it's not a major concern). In any

case, we should encourage the idea that auxiliary processes will use

the proc latch, unless perhaps they only use a local latch like the

avlauncher does, imho.

Why did you remove the new assertions in unix_latch.c/win32_latch.c? I

think you should keep them, as well as my additional comments on latch

timeout invalidation issues in latch.h which are untouched in your

revision (though this looks to be a rough revision, so I shouldn't

read anything into that either way I suppose). In general, we should

try and use the process latch whenever we can.

> I wonder if we should rename the file into "xlogflush.c" or something like

> that, to make it clear that this works with any XLOG flushes, not just

> commits? Group commit is the usual term for this feature, so we should

> definitely mention that in the comments, but it might be better to rename

> the files/functions to emphasize that this is about WAL flushing in general.

Okay.

> This probably needs some further cleanup to fix things I've broken, and I

> haven't done any performance testing, but it's progress. Do you have a shell

> script or something that you used for the performance tests that I could

> run? Or would you like to re-run the tests you did earlier with this patch?

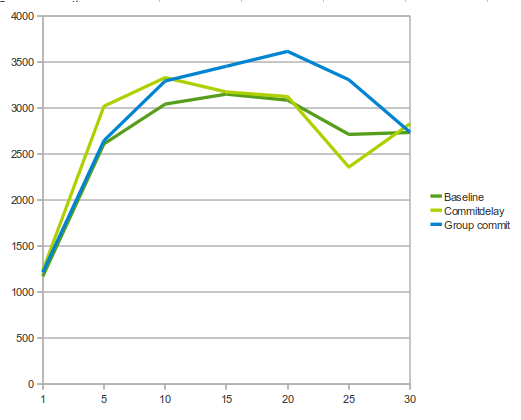

No, I'm using pgbench-tools, and there's no reason to think that you

couldn't get similar results on ordinary hardware, which is all I used

- obviously you'll want to make sure that you're using a file system

that supports granular fsyncs, like ext4. All of the details,

including the config for pgbench-tools, are in my original e-mail. I

have taken the time to re-run the benchmark and update the wiki with

that new information - I'd call it a draw.

--

Peter Geoghegan http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training and Services

| From: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Simon Riggs <simon(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-21 03:37:22 |

| Message-ID: | CAEYLb_UjzGO9axc7x-ONeCy8HdqfDCTo2bnAxCMQnGbPwb+Tsg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On 21 January 2012 03:13, Peter Geoghegan <peter(at)2ndquadrant(dot)com> wrote:

> I have taken the time to re-run the benchmark and update the wiki with

> that new information - I'd call it a draw.

On second though, the occasional latency spikes that we see with my

patch (which uses the poll() based latch in the run that is

benchmarked) might be significant - difficult to say. The averages are

about the same though.

--

Peter Geoghegan http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training and Services

| From: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | Peter Geoghegan <peter(at)2ndquadrant(dot)com>, Robert Haas <robertmhaas(at)gmail(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-25 03:50:22 |

| Message-ID: | CA+U5nMLG91oFao4=O_WoMSgQ8ELEGxqTqqmXLzADt5XV-rAUpw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Fri, Jan 20, 2012 at 10:30 PM, Heikki Linnakangas

<heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

> I spent some time cleaning this up. Details below, but here are the

> highlights:

>

> * Reverted the removal of wal_writer_delay

> * Removed heuristic on big flushes

No contested viewpoints on anything there.

> * Doesn't rely on WAL writer. Any process can act as the "leader" now.

> * Uses PGSemaphoreLock/Unlock instead of latches

Thanks for producing an alternate version, it will allow us to comment

on various approaches.

There is much yet to discuss so please don't think about committing

anything yet.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Training & Services

| From: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

|---|---|

| To: | Peter Geoghegan <peter(at)2ndquadrant(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Simon Riggs <simon(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-25 08:11:00 |

| Message-ID: | 4F1FB914.2060003@enterprisedb.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

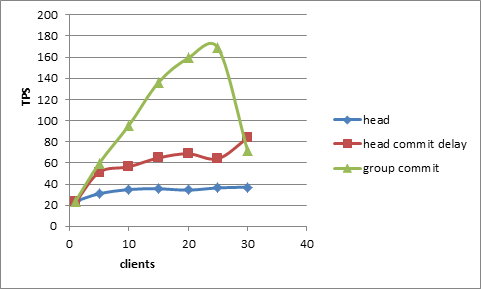

I've been thinking, what exactly is the important part of this group

commit patch that gives the benefit? Keeping the queue sorted isn't all

that important - XLogFlush() requests for commits will come in almost

the correct order anyway.

I also don't much like the division of labour between groupcommit.c and

xlog.c. XLogFlush() calls GroupCommitWaitForLSN(), which calls back

DoXLogFlush(), which is a bit hard to follow. (that's in my latest

version; the original patch had similar division but it also cut across

processes, with the wal writer actually doing the flush)

It occurs to me that the WALWriteLock already provides much of the same

machinery we're trying to build here. It provides FIFO-style queuing,

with the capability to wake up the next process or processes in the

queue. Perhaps all we need is some new primitive to LWLock, to make it

do exactly what we need.

Attached is a patch to do that. It adds a new mode to

LWLockConditionalAcquire(), LW_EXCLUSIVE_BUT_WAIT. If the lock is free,

it is acquired and the function returns immediately. However, unlike

normal LWLockConditionalAcquire(), if the lock is not free it goes to

sleep until it is released. But unlike normal LWLockAcquire(), when the

lock is released, the function returns *without* acquiring the lock.

I modified XLogFlush() to use that new function for WALWriteLock. It

tries to get WALWriteLock, but if it's not immediately free, it waits

until it is released. Then, before trying to acquire the lock again, it

rechecks LogwrtResult to see if the record was already flushed by the

process that released the lock.

This is a much smaller patch than the group commit patch, and in my

pgbench-tools tests on my laptop, it has the same effect on performance.

The downside is that it touches lwlock.c, which is a change at a lower

level. Robert's flexlocks patch probably would've been useful here.

--

Heikki Linnakangas

EnterpriseDB http://www.enterprisedb.com

| Attachment | Content-Type | Size |

|---|---|---|

| groupcommit-with-special-lwlockmode-1.patch | text/x-diff | 7.6 KB |

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Heikki Linnakangas <heikki(dot)linnakangas(at)enterprisedb(dot)com> |

| Cc: | Peter Geoghegan <peter(at)2ndquadrant(dot)com>, Simon Riggs <simon(at)2ndquadrant(dot)com>, PG Hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Group commit, revised |

| Date: | 2012-01-26 02:10:47 |

| Message-ID: | CA+TgmoaPyQKEaoFz8HkDGvRDbOmRpkGo69zjODB5=7Jh3hbPQA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Wed, Jan 25, 2012 at 3:11 AM, Heikki Linnakangas

<heikki(dot)linnakangas(at)enterprisedb(dot)com> wrote:

> I've been thinking, what exactly is the important part of this group commit

> patch that gives the benefit? Keeping the queue sorted isn't all that

> important - XLogFlush() requests for commits will come in almost the correct

> order anyway.

>

> I also don't much like the division of labour between groupcommit.c and

> xlog.c. XLogFlush() calls GroupCommitWaitForLSN(), which calls back

> DoXLogFlush(), which is a bit hard to follow. (that's in my latest version;

> the original patch had similar division but it also cut across processes,

> with the wal writer actually doing the flush)

>

> It occurs to me that the WALWriteLock already provides much of the same

> machinery we're trying to build here. It provides FIFO-style queuing, with

> the capability to wake up the next process or processes in the queue.

> Perhaps all we need is some new primitive to LWLock, to make it do exactly

> what we need.

>

> Attached is a patch to do that. It adds a new mode to

> LWLockConditionalAcquire(), LW_EXCLUSIVE_BUT_WAIT. If the lock is free, it

> is acquired and the function returns immediately. However, unlike normal

> LWLockConditionalAcquire(), if the lock is not free it goes to sleep until

> it is released. But unlike normal LWLockAcquire(), when the lock is

> released, the function returns *without* acquiring the lock.

>

> I modified XLogFlush() to use that new function for WALWriteLock. It tries

> to get WALWriteLock, but if it's not immediately free, it waits until it is

> released. Then, before trying to acquire the lock again, it rechecks

> LogwrtResult to see if the record was already flushed by the process that

> released the lock.

>

> This is a much smaller patch than the group commit patch, and in my

> pgbench-tools tests on my laptop, it has the same effect on performance. The

> downside is that it touches lwlock.c, which is a change at a lower level.

> Robert's flexlocks patch probably would've been useful here.

I think you should break this off into a new function,

LWLockWaitUntilFree(), rather than treating it as a new LWLockMode.

Also, instead of adding lwWaitOnly, I would suggest that we generalize

lwWaiting and lwExclusive into a field lwWaitRequest, which can be set

to 1 for exclusive, 2 for shared, 3 for wait-for-free, etc. That way

we don't have to add another boolean every time someone invents a new

type of wait - not that that should hopefully happen very often. A

side benefit of this is that you can simplify the test in

LWLockRelease(): keep releasing waiters until you come to an exclusive