Re: Speed up Clog Access by increasing CLOG buffers

| Lists: | pgsql-hackers |

|---|

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-01 04:49:19 |

| Message-ID: | CAA4eK1+8=X9mSNeVeHg_NqMsOR-XKsjuqrYzQf=iCsdh3U4EOA@mail.gmail.com |

After reducing ProcArrayLock contention in commit
0e141c0fbb211bdd23783afa731e3eef95c9ad7a, the other lock that seems to be
contended in read-write transactions is CLogControlLock. In my
investigation, I found that the contention comes mainly from two sources.
First, writing the transaction status to CLOG
(TransactionIdSetPageStatus()) acquires CLogControlLock in Exclusive mode,
which contends with every other transaction that tries to access the CLOG
to check transaction status; Simon has already proposed a patch [1] to
reduce this. Second, when a CLOG page is not found in the CLOG buffers,
the backend needs to acquire CLogControlLock in Exclusive mode, which
again contends with the shared lockers that try to read transaction
status.

Increasing CLOG buffers to 64 helps reduce the contention from the second
source. Experiments revealed that increasing CLOG buffers only helps
once the contention around ProcArrayLock is reduced.

Performance Data

-----------------------------

RAM - 500GB

8 sockets, 64 cores (hyperthreaded, 128 threads total)

Non-default parameters

------------------------------------

max_connections = 300

shared_buffers=8GB

min_wal_size=10GB

max_wal_size=15GB

checkpoint_timeout =35min

maintenance_work_mem = 1GB

checkpoint_completion_target = 0.9

wal_buffers = 256MB

pgbench setup

------------------------

scale factor - 300

Data is on magnetic disk and WAL on ssd.

pgbench -M prepared tpc-b

HEAD - commit 0e141c0f

Patch-1 - increase_clog_bufs_v1

| Client Count/Patch_ver | 1 | 8 | 16 | 32 | 64 | 128 | 256 |
|---|---|---|---|---|---|---|---|
| HEAD | 911 | 5695 | 9886 | 18028 | 27851 | 28654 | 25714 |
| Patch-1 | 954 | 5568 | 9898 | 18450 | 29313 | 31108 | 28213 |

This data shows an increase of ~5% at 64 clients and 8~10% at higher
client counts, without degradation at lower client counts. There is
some fluctuation at 8 clients, but I attribute that to run-to-run
variation; if anybody has doubts, I can re-verify the data at lower
client counts.

Now if we try to further increase the number of CLOG buffers to 128,
no improvement is seen.

I have also verified that this improvement can be seen only after the
contention around ProcArrayLock is reduced. Below is the data from the
commit just before the ProcArrayLock reduction patch. The setup and test
are the same as for the previous test.

HEAD - commit 253de7e1

Patch-1 - increase_clog_bufs_v1

| Client Count/Patch_ver | 128 | 256 |
|---|---|---|
| HEAD | 16657 | 10512 |
| Patch-1 | 16694 | 10477 |

I think the benefit of this patch would be more significant along
with the other patch to reduce CLogControlLock contention [1]
(I have not tested both patches together, as there are still a
few issues left in the other patch); however, it has its own independent
value, so it can be considered separately.

Thoughts?

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| Attachment | Content-Type | Size |

|---|---|---|

| increase_clog_bufs_v1.patch | application/octet-stream | 2.4 KB |

| From: | Andres Freund <andres(at)anarazel(dot)de> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-03 11:41:37 |

| Message-ID: | 20150903114137.GE27649@awork2.anarazel.de |

| Lists: | pgsql-hackers |

On 2015-09-01 10:19:19 +0530, Amit Kapila wrote:

> pgbench setup

> ------------------------

> scale factor - 300

> Data is on magnetic disk and WAL on ssd.

> pgbench -M prepared tpc-b

>

> HEAD - commit 0e141c0f

> Patch-1 - increase_clog_bufs_v1

>

> | Client Count/Patch_ver | 1 | 8 | 16 | 32 | 64 | 128 | 256 |
> |---|---|---|---|---|---|---|---|
> | HEAD | 911 | 5695 | 9886 | 18028 | 27851 | 28654 | 25714 |
> | Patch-1 | 954 | 5568 | 9898 | 18450 | 29313 | 31108 | 28213 |

>

>

> This data shows that there is an increase of ~5% at 64 client-count

> and 8~10% at more higher clients without degradation at lower client-

> count. In above data, there is some fluctuation seen at 8-client-count,

> but I attribute that to run-to-run variation, however if anybody has doubts

> I can again re-verify the data at lower client counts.

> Now if we try to further increase the number of CLOG buffers to 128,

> no improvement is seen.

>

> I have also verified that this improvement can be seen only after the

> contention around ProcArrayLock is reduced. Below is the data with

> Commit before the ProcArrayLock reduction patch. Setup and test

> is same as mentioned for previous test.

The buffer replacement algorithm for clog is rather stupid - I do wonder

where the cutoff is that it hurts.

Could you perhaps try to create a testcase where xids are accessed that

are so far apart on average that they're unlikely to be in memory? And

then test that across a number of client counts?

There's two reasons that I'd like to see that: First I'd like to avoid

regression, second I'd like to avoid having to bump the maximum number

of buffers by small buffers after every hardware generation...

> /*

> * Number of shared CLOG buffers.

> *

> - * Testing during the PostgreSQL 9.2 development cycle revealed that on a

> + * Testing during the PostgreSQL 9.6 development cycle revealed that on a

> * large multi-processor system, it was possible to have more CLOG page

> - * requests in flight at one time than the number of CLOG buffers which existed

> - * at that time, which was hardcoded to 8. Further testing revealed that

> - * performance dropped off with more than 32 CLOG buffers, possibly because

> - * the linear buffer search algorithm doesn't scale well.

> + * requests in flight at one time than the number of CLOG buffers which

> + * existed at that time, which was 32 assuming there are enough shared_buffers.

> + * Further testing revealed that either performance stayed same or dropped off

> + * with more than 64 CLOG buffers, possibly because the linear buffer search

> + * algorithm doesn't scale well or some other locking bottlenecks in the

> + * system mask the improvement.

> *

> - * Unconditionally increasing the number of CLOG buffers to 32 did not seem

> + * Unconditionally increasing the number of CLOG buffers to 64 did not seem

> * like a good idea, because it would increase the minimum amount of shared

> * memory required to start, which could be a problem for people running very

> * small configurations. The following formula seems to represent a reasonable

> * compromise: people with very low values for shared_buffers will get fewer

> - * CLOG buffers as well, and everyone else will get 32.

> + * CLOG buffers as well, and everyone else will get 64.

> *

> * It is likely that some further work will be needed here in future releases;

> * for example, on a 64-core server, the maximum number of CLOG requests that

> * can be simultaneously in flight will be even larger. But that will

> * apparently require more than just changing the formula, so for now we take

> - * the easy way out.

> + * the easy way out. It could also happen that after removing other locking

> + * bottlenecks, further increase in CLOG buffers can help, but that's not the

> + * case now.

> */

I think the comment should be more drastically rephrased to not

reference individual versions and numbers.

Greetings,

Andres Freund

| From: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)anarazel(dot)de> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-07 13:34:10 |

| Message-ID: | 20150907133410.GO2912@alvherre.pgsql |

| Lists: | pgsql-hackers |

Andres Freund wrote:

> The buffer replacement algorithm for clog is rather stupid - I do wonder

> where the cutoff is that it hurts.

>

> Could you perhaps try to create a testcase where xids are accessed that

> are so far apart on average that they're unlikely to be in memory? And

> then test that across a number of client counts?

>

> There's two reasons that I'd like to see that: First I'd like to avoid

> regression, second I'd like to avoid having to bump the maximum number

> of buffers by small buffers after every hardware generation...

I wonder if it would make sense to explore an idea that has been floated

for years now -- to have pg_clog pages be allocated as part of shared

buffers rather than have their own separate pool. That way, no separate

hardcoded allocation limit is needed. It's probably pretty tricky to

implement, though :-(

--

Álvaro Herrera http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Andres Freund <andres(at)anarazel(dot)de> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-07 16:49:08 |

| Message-ID: | 20150907164908.GB5084@alap3.anarazel.de |

| Lists: | pgsql-hackers |

Hi,

On 2015-09-07 10:34:10 -0300, Alvaro Herrera wrote:

> I wonder if it would make sense to explore an idea that has been floated

> for years now -- to have pg_clog pages be allocated as part of shared

> buffers rather than have their own separate pool. That way, no separate

> hardcoded allocation limit is needed. It's probably pretty tricky to

> implement, though :-(

I still think that'd be a good plan, especially as it'd also let us use

a lot of related infrastructure. I doubt we could just use the standard

cache replacement mechanism though - it's not particularly efficient

either... I also have my doubts that a hash table lookup at every clog

lookup is going to be ok performancewise.

The biggest problem will probably be that the buffer manager is pretty

directly tied to relations and breaking up that bond won't be all that

easy. My guess is that the best bet here is that the easiest way to at

least explore this is to define pg_clog/... as their own tablespaces

(akin to pg_global) and treat the files therein as plain relations.

Greetings,

Andres Freund

| From: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)anarazel(dot)de> |

| Cc: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-07 18:56:39 |

| Message-ID: | 20150907185639.GQ2912@alvherre.pgsql |

| Lists: | pgsql-hackers |

Andres Freund wrote:

> On 2015-09-07 10:34:10 -0300, Alvaro Herrera wrote:

> > I wonder if it would make sense to explore an idea that has been floated

> > for years now -- to have pg_clog pages be allocated as part of shared

> > buffers rather than have their own separate pool. That way, no separate

> > hardcoded allocation limit is needed. It's probably pretty tricky to

> > implement, though :-(

>

> I still think that'd be a good plan, especially as it'd also let us use

> a lot of related infrastructure. I doubt we could just use the standard

> cache replacement mechanism though - it's not particularly efficient

> either... I also have my doubts that a hash table lookup at every clog

> lookup is going to be ok performancewise.

Yeah. I guess we'd have to mark buffers as unusable for regular pages

("somehow"), and have a separate lookup mechanism. As I said, it is

likely to be tricky.

--

Álvaro Herrera http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-08 11:50:01 |

| Message-ID: | CAA4eK1KpXReQcFL-qKw6T7buYqQAmAEPwYgwCzmRtS+9J4dq0Q@mail.gmail.com |

| Lists: | pgsql-hackers |

On Mon, Sep 7, 2015 at 7:04 PM, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> wrote:

>

> Andres Freund wrote:

>

> > The buffer replacement algorithm for clog is rather stupid - I do wonder

> > where the cutoff is that it hurts.

> >

> > Could you perhaps try to create a testcase where xids are accessed that

> > are so far apart on average that they're unlikely to be in memory?

> >

Yes, I am working on it. What I have in mind is to create a table with a
large number of rows (say 50000000), each row with a different
transaction id. Each transaction should then update rows that are at
least 1048576 (the number of transactions whose status can be held in
32 CLOG buffers) apart, so that each update tries to access a
CLOG page that is not in memory. Let me know if you can think of any
better or simpler way.

> > There's two reasons that I'd like to see that: First I'd like to avoid

> > regression, second I'd like to avoid having to bump the maximum number

> > of buffers by small buffers after every hardware generation...

>

> I wonder if it would make sense to explore an idea that has been floated

> for years now -- to have pg_clog pages be allocated as part of shared

> buffers rather than have their own separate pool.

>

There could be some benefits to it, but I think we would still have to
acquire the lock in Exclusive mode while committing a transaction or while
extending CLOG, which are also major sources of contention in this area.
I think the benefits of moving it to shared_buffers could be that the upper
limit on the number of pages that can be retained in memory could be
increased, and even if we have to replace a page, the responsibility for
flushing it could be delegated to checkpoint. So yes, there could be
benefits with this idea, but I am not sure they are worth the
investigation. One thing we could try, if you think it is beneficial, is
to just skip the fsync during writes of clog pages, and if that helps, then
we can think of pushing it to checkpoint (something similar to what Andres
has mentioned on a nearby thread).

Yet another way could be to have a configuration variable for clog buffers
(Clog_Buffers).

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-08 18:30:19 |

| Message-ID: | CA+TgmoaDRa2ioA=Udku9bgJOAff743nb+phLLVyY46rmHeFa5A@mail.gmail.com |

| Lists: | pgsql-hackers |

On Mon, Sep 7, 2015 at 9:34 AM, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com> wrote:

> Andres Freund wrote:

>> The buffer replacement algorithm for clog is rather stupid - I do wonder

>> where the cutoff is that it hurts.

>>

>> Could you perhaps try to create a testcase where xids are accessed that

>> are so far apart on average that they're unlikely to be in memory? And

>> then test that across a number of client counts?

>>

>> There's two reasons that I'd like to see that: First I'd like to avoid

>> regression, second I'd like to avoid having to bump the maximum number

>> of buffers by small buffers after every hardware generation...

>

> I wonder if it would make sense to explore an idea that has been floated

> for years now -- to have pg_clog pages be allocated as part of shared

> buffers rather than have their own separate pool. That way, no separate

> hardcoded allocation limit is needed. It's probably pretty tricky to

> implement, though :-(

Yeah, I looked at that once and threw my hands up in despair pretty

quickly. I also considered another idea that looked simpler: instead

of giving every SLRU its own pool of pages, have one pool of pages for

all of them, separate from shared buffers but common to all SLRUs.

That looked easier, but still not easy.

I've also considered trying to replace the entire SLRU system with new

code and throwing away what exists today. The locking mode is just

really strange compared to what we do elsewhere. That, too, does not

look all that easy. :-(

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Andres Freund <andres(at)anarazel(dot)de> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-11 14:31:18 |

| Message-ID: | CAA4eK1LMMGNQ439BUm0LcS3p0sb8S9kc-cUGU_ThNqMwA8_Tug@mail.gmail.com |

| Lists: | pgsql-hackers |

On Thu, Sep 3, 2015 at 5:11 PM, Andres Freund <andres(at)anarazel(dot)de> wrote:

>

> On 2015-09-01 10:19:19 +0530, Amit Kapila wrote:

> > pgbench setup

> > ------------------------

> > scale factor - 300

> > Data is on magnetic disk and WAL on ssd.

> > pgbench -M prepared tpc-b

> >

> > HEAD - commit 0e141c0f

> > Patch-1 - increase_clog_bufs_v1

> >

>

> The buffer replacement algorithm for clog is rather stupid - I do wonder

> where the cutoff is that it hurts.

>

> Could you perhaps try to create a testcase where xids are accessed that

> are so far apart on average that they're unlikely to be in memory? And

> then test that across a number of client counts?

>

Okay, I have tried one such test, but the best I could come up with is one
where on average every 100th access is a disk access. I then tested it with
different numbers of clog buffers and client counts. Below is the result:

Non-default parameters

------------------------------------

max_connections = 300

shared_buffers=32GB

min_wal_size=10GB

max_wal_size=15GB

checkpoint_timeout =35min

maintenance_work_mem = 1GB

checkpoint_completion_target = 0.9

wal_buffers = 256MB

autovacuum=off

HEAD - commit 49124613

Patch-1 - Clog Buffers - 64

Patch-2 - Clog Buffers - 128

| Client Count/Patch_ver | 1 | 8 | 64 | 128 |
|---|---|---|---|---|
| HEAD | 1395 | 8336 | 37866 | 34463 |
| Patch-1 | 1615 | 8180 | 37799 | 35315 |
| Patch-2 | 1409 | 8219 | 37068 | 34729 |

So there is not much difference in test results with different values for
CLOG buffers, probably because I/O has dominated the test. It shows that
increasing the CLOG buffers won't regress the current behaviour even though
there are a lot more accesses for transaction status outside the CLOG buffers.

Now about the test: create a table with a large number of rows (say 11617457;
I tried to create a larger one, but it was taking too much time (more than a
day)) and have each row with a different transaction id. Each transaction
should then update rows that are at least 1048576 (the number of transactions
whose status can be held in 32 CLOG buffers) apart, so that ideally each
update tries to access a CLOG page that is not in memory. However, as the
value to update is selected randomly, only about every 100th access ends
up as a disk access.

Test

-------

1. Attached file clog_prep.sh should create and populate the required
table and create the function used to access the CLOG pages. You
might want to update no_of_rows based on the rows you want to
create in the table.

2. Attached file access_clog_disk.sql is used to execute the function
with random values. You might want to update the nrows variable based
on the rows created in the previous step.

3. Use pgbench as follows with different client counts:

./pgbench -c 4 -j 4 -n -M prepared -f "access_clog_disk.sql" -T 300 postgres

4. To ensure that the clog access function always accesses the same data
during each run, the test copies the data_directory created by
step 1 before each run.

I have checked by adding some instrumentation that approximately

every 100th access is disk access, attached patch clog_info-v1.patch

adds the necessary instrumentation in code.

As an example, pgbench test yields below results:

./pgbench -c 4 -j 4 -n -M prepared -f "access_clog_disk.sql" -T 180 postgres

LOG: trans_status(3169396)

LOG: trans_status_disk(29546)

LOG: trans_status(3054952)

LOG: trans_status_disk(28291)

LOG: trans_status(3131242)

LOG: trans_status_disk(28989)

LOG: trans_status(3155449)

LOG: trans_status_disk(29347)

Here 'trans_status' is the number of times the process went for accessing

the CLOG status and 'trans_status_disk' is the number of times it went

to disk for accessing CLOG page.

>

> > /*

> > * Number of shared CLOG buffers.

> > *

>

>

>

> I think the comment should be more drastically rephrased to not

> reference individual versions and numbers.

>

Updated comments and the patch (increase_clog_bufs_v2.patch)
containing the same is attached.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| Attachment | Content-Type | Size |

|---|---|---|

| clog_prep.sh | application/x-sh | 2.6 KB |

| access_clog_disk.sql | application/octet-stream | 154 bytes |

| clog_info-v1.patch | application/octet-stream | 1.9 KB |

| increase_clog_bufs_v2.patch | application/octet-stream | 2.1 KB |

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-11 15:51:36 |

| Message-ID: | CA+TgmoYE4kj=fRNwPPL6+Qm-oD-JYX+RnxFjVaGGgOjT1aj70Q@mail.gmail.com |

| Lists: | pgsql-hackers |

On Fri, Sep 11, 2015 at 10:31 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> > Could you perhaps try to create a testcase where xids are accessed that

> > are so far apart on average that they're unlikely to be in memory? And

> > then test that across a number of client counts?

> >

>

> Now about the test, create a table with large number of rows (say 11617457,

> I have tried to create larger, but it was taking too much time (more than a day))

> and have each row with different transaction id. Now each transaction should

> update rows that are at least 1048576 (number of transactions whose status can

> be held in 32 CLog buffers) distance apart, that way ideally for each update it will

> try to access Clog page that is not in-memory, however as the value to update

> is getting selected randomly and that leads to every 100th access as disk access.

What about just running a regular pgbench test, but hacking the

XID-assignment code so that we increment the XID counter by 100 each

time instead of 1?

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-12 03:01:51 |

| Message-ID: | CAA4eK1JxL0zfqNxX=a-bRyNbCfXeL9Pq8v5oeoPb8Z_u2sjL+Q@mail.gmail.com |

| Lists: | pgsql-hackers |

On Fri, Sep 11, 2015 at 9:21 PM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

>

> On Fri, Sep 11, 2015 at 10:31 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:
> > > Could you perhaps try to create a testcase where xids are accessed that
> > > are so far apart on average that they're unlikely to be in memory? And
> > > then test that across a number of client counts?
> > >
> >
> > Now about the test, create a table with large number of rows (say 11617457,
> > I have tried to create larger, but it was taking too much time (more than a day))
> > and have each row with different transaction id. Now each transaction should
> > update rows that are at least 1048576 (number of transactions whose status can
> > be held in 32 CLog buffers) distance apart, that way ideally for each update it will
> > try to access Clog page that is not in-memory, however as the value to update
> > is getting selected randomly and that leads to every 100th access as disk access.

>

> What about just running a regular pgbench test, but hacking the

> XID-assignment code so that we increment the XID counter by 100 each

> time instead of 1?

>

If I am not wrong, we need a difference of 1048576 transactions between
records to make each CLOG access a disk access, so if we increment the XID
counter by 100, then probably only every 10000th (or some multiple
of 10000) transaction would go for disk access.

The number 1048576 is derived by below calc:

#define CLOG_XACTS_PER_BYTE 4

#define CLOG_XACTS_PER_PAGE (BLCKSZ * CLOG_XACTS_PER_BYTE)

Transaction difference required for each transaction to go for disk access:

CLOG_XACTS_PER_PAGE * num_clog_buffers.

I think reducing it to every 100th transaction-status access being a disk
access is sufficient to prove that there is no regression with the patch for
the scenario asked about by Andres, or do you think it is not?

Now another possibility here could be that we try commenting out fsync
in the CLOG path to see how much it impacts the performance of this test and
then of the pgbench test. I am not sure there will be any impact, because even
if every 100th transaction goes to disk, that is still small compared to the
WAL fsync which we have to perform for each transaction.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-14 11:23:27 |

| Message-ID: | CA+TgmobJCGdyZkdbD8cSMh7NfPvEbo2_-BL_TVGapH_xX7YtiA@mail.gmail.com |

| Lists: | pgsql-hackers |

On Fri, Sep 11, 2015 at 11:01 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> If I am not wrong we need 1048576 number of transactions difference

> for each record to make each CLOG access a disk access, so if we

> increment XID counter by 100, then probably every 10000th (or multiplier

> of 10000) transaction would go for disk access.

>

> The number 1048576 is derived by below calc:

> #define CLOG_XACTS_PER_BYTE 4

> #define CLOG_XACTS_PER_PAGE (BLCKSZ * CLOG_XACTS_PER_BYTE)

>

> Transaction difference required for each transaction to go for disk access:

> CLOG_XACTS_PER_PAGE * num_clog_buffers.

>

> I think reducing to every 100th access for transaction status as disk access
> is sufficient to prove that there is no regression with the patch for the
> scenario asked by Andres or do you think it is not?

I have no idea. I was just suggesting that hacking the server somehow

might be an easier way of creating the scenario Andres was interested

in than the process you described. But feel free to ignore me, I

haven't taken much time to think about this.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com> |

|---|---|

| To: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-18 17:38:59 |

| Message-ID: | 55FC4C33.1050903@redhat.com |

| Lists: | pgsql-hackers |

On 09/11/2015 10:31 AM, Amit Kapila wrote:

> Updated comments and the patch (increase_clog_bufs_v2.patch)

> containing the same is attached.

>

I have done various runs on an Intel Xeon 28C/56T w/ 256Gb mem and 2 x

RAID10 SSD (data + xlog) with Min(64,).

Kept the shared_buffers=64GB and effective_cache_size=160GB settings

across all runs, but did runs with both synchronous_commit on and off

and different scale factors for pgbench.

The results fluctuate within -2% to +2% for all client counts,
depending on the average latency.

So no real conclusion from here other than that the patch neither helps nor
hurts performance on this setup; it likely depends on further
CLogControlLock-related changes to show a real benefit.

Best regards,

Jesper

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-19 03:11:44 |

| Message-ID: | CAA4eK1LKw4zR6Mb5EQWsmRCyhxpeUakRrDyB1Gth6nw4ktw3iw@mail.gmail.com |

| Lists: | pgsql-hackers |

On Fri, Sep 18, 2015 at 11:08 PM, Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com> wrote:

> On 09/11/2015 10:31 AM, Amit Kapila wrote:

>

>> Updated comments and the patch (increase_clog_bufs_v2.patch)

>> containing the same is attached.

>>

>>

> I have done various runs on an Intel Xeon 28C/56T w/ 256Gb mem and 2 x

> RAID10 SSD (data + xlog) with Min(64,).

>

>

The benefit of this patch shows up at somewhat higher client counts,
as you can see in my initial mail; could you please try once
with client count > 64?

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Peter Geoghegan <pg(at)heroku(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-21 01:04:28 |

| Message-ID: | CAM3SWZRWJp05QvwPtuDL4xmixuYcBeq2ChVZpgwJZdZ_ncGDYQ@mail.gmail.com |

| Lists: | pgsql-hackers |

On Mon, Aug 31, 2015 at 9:49 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> Increasing CLOG buffers to 64 helps in reducing the contention due to second

> reason. Experiments revealed that increasing CLOG buffers only helps

> once the contention around ProcArrayLock is reduced.

There has been a lot of research on bitmap compression, more or less

for the benefit of bitmap index access methods.

Simple techniques like run length encoding are effective for some

things. If the need to map the bitmap into memory to access the status

of transactions is a concern, there has been work done on that, too.

Byte-aligned bitmap compression is a technique that might offer a good
trade-off between compressing clog and decompression overhead -- I
think that there basically is no decompression overhead, because set
operations can be performed on the "compressed" representation
directly. There are other techniques, too.

Something to consider. There could be multiple benefits to compressing

clog, even beyond simply avoiding managing clog buffers.
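As a rough illustration of the run-length idea mentioned above, here is a minimal Python sketch (not from the thread). It assumes the four 2-bit status codes used by clog and a page-sized run of 32768 statuses; the function names `rle_encode` and `rle_lookup` are made up for this example.

```python
from itertools import groupby

# 2-bit commit-status codes (values assumed here for illustration).
IN_PROGRESS, COMMITTED, ABORTED, SUB_COMMITTED = 0, 1, 2, 3

def rle_encode(statuses):
    """Collapse consecutive equal statuses into (status, run_length) pairs."""
    return [(status, len(list(run))) for status, run in groupby(statuses)]

def rle_lookup(runs, index):
    """Return the status at position `index` without expanding the runs."""
    for status, length in runs:
        if index < length:
            return status
        index -= length
    raise IndexError("index beyond encoded range")

# One page's worth of statuses: mostly committed, with three aborts.
statuses = [COMMITTED] * 30000 + [ABORTED] * 3 + [COMMITTED] * 2765
runs = rle_encode(statuses)
```

In this sketch a long stretch of committed transactions collapses into a handful of runs, but a lookup has to walk the runs instead of indexing directly by position, which is the kind of trade-off any compressed representation would need to weigh.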

--

Peter Geoghegan

| From: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-09-21 12:55:45 |

| Message-ID: | 55FFFE51.1060308@redhat.com |

| Lists: | pgsql-hackers |

On 09/18/2015 11:11 PM, Amit Kapila wrote:

>> I have done various runs on an Intel Xeon 28C/56T w/ 256Gb mem and 2 x

>> RAID10 SSD (data + xlog) with Min(64,).

>>

>>

> The benefit with this patch could be seen at somewhat higher

> client-count as you can see in my initial mail, can you please

> once try with client count > 64?

>

Client counts were from 1 to 80.

I did do one run with Min(128,) like you, but didn't see any difference

in the result compared to Min(64,), so I focused instead on the

sync_commit on/off testing case.

Best regards,

Jesper

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-10-05 01:04:17 |

| Message-ID: | CAMkU=1yLzEBi3w-zsAMzyYvDs-FM1p_AiUu9=0d67u0fULWgqw@mail.gmail.com |

| Lists: | pgsql-hackers |

On Fri, Sep 11, 2015 at 8:01 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

wrote:

> On Fri, Sep 11, 2015 at 9:21 PM, Robert Haas <robertmhaas(at)gmail(dot)com>

> wrote:

> >

> > On Fri, Sep 11, 2015 at 10:31 AM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

> wrote:

> > > > Could you perhaps try to create a testcase where xids are accessed

> that

> > > > are so far apart on average that they're unlikely to be in memory?

> And

> > > > then test that across a number of client counts?

> > > >

> > >

> > > Now about the test, create a table with large number of rows (say

> 11617457,

> > > I have tried to create larger, but it was taking too much time (more

> than a day))

> > > and have each row with different transaction id. Now each transaction

> should

> > > update rows that are at least 1048576 (number of transactions whose

> status can

> > > be held in 32 CLog buffers) distance apart, that way ideally for each

> update it will

> > > try to access Clog page that is not in-memory, however as the value to

> update

> > > is getting selected randomly and that leads to every 100th access as

> disk access.

> >

> > What about just running a regular pgbench test, but hacking the

> > XID-assignment code so that we increment the XID counter by 100 each

> > time instead of 1?

> >

>

> If I am not wrong we need 1048576 number of transactions difference

> for each record to make each CLOG access a disk access, so if we

> increment XID counter by 100, then probably every 10000th (or multiplier

> of 10000) transaction would go for disk access.

>

> The number 1048576 is derived by below calc:

> #define CLOG_XACTS_PER_BYTE 4

> #define CLOG_XACTS_PER_PAGE (BLCKSZ * CLOG_XACTS_PER_BYTE)

>

> Transaction difference required for each transaction to go for disk access:

> CLOG_XACTS_PER_PAGE * num_clog_buffers.

>

That guarantees that every xid occupies its own 32-contiguous-pages chunk

of clog.

But clog pages are not pulled in and out in 32-page chunks, but one page

chunks. So you would only need 32,768 differences to get every real

transaction to live on its own clog page, which means every look up of a

different real transaction would have to do a page replacement. (I think

your references to disk access here are misleading. Isn't the issue here

the contention on the lock that controls the page replacement, not the

actual IO?)
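The two figures in this exchange can be checked with a few lines of Python. This is only a sketch: it assumes the default BLCKSZ of 8192 (the block size is a build-time option) and the 32 clog buffers mentioned in the thread; `clog_page` is a hypothetical helper name.

```python
BLCKSZ = 8192                  # assumed default block size
CLOG_XACTS_PER_BYTE = 4        # 2 status bits per transaction
CLOG_XACTS_PER_PAGE = BLCKSZ * CLOG_XACTS_PER_BYTE
NUM_CLOG_BUFFERS = 32          # buffer count discussed in the thread

# Gap at which two xids are guaranteed to fall on different clog pages.
one_page_gap = CLOG_XACTS_PER_PAGE
# Gap spanning the whole buffer pool (the larger figure quoted above).
whole_pool_gap = CLOG_XACTS_PER_PAGE * NUM_CLOG_BUFFERS

def clog_page(xid):
    """Page number that holds the status bits for `xid` (hypothetical helper)."""
    return xid // CLOG_XACTS_PER_PAGE

# If every real transaction burns 100 xids, this many real transactions
# still share one clog page.
real_xacts_per_page_at_stride_100 = CLOG_XACTS_PER_PAGE // 100
```

Under these assumptions a gap of 32768 xids is already enough to put each transaction on its own page, while 1048576 corresponds to 32 whole pages.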

I've attached a patch that allows you to set the GUC "JJ_xid", which makes it

burn the given number of xids every time a new one is asked for. (The

patch introduces lots of other stuff as well, but I didn't feel like

ripping the irrelevant parts out--if you don't set any of the other gucs it

introduces from their defaults, they shouldn't cause you trouble.) I think

there are other tools around that do the same thing, but this is the one I

know about. It is easy to drive the system into wrap-around shutdown with

this, so lowering autovacuum_vacuum_cost_delay is a good idea.

Actually I haven't attached it, because then the commitfest app will list

it as the patch needing review, instead I've put it here

https://drive.google.com/file/d/0Bzqrh1SO9FcERV9EUThtT3pacmM/view?usp=sharing

> I think reducing to every 100th access for transaction status as disk access

> is sufficient to prove that there is no regression with the patch for the

> scenario asked by Andres or do you think it is not?

>

> Now another possibility here could be that we try by commenting out fsync

> in CLOG path to see how much it impact the performance of this test and

> then for pgbench test. I am not sure there will be any impact because even

> every 100th transaction goes to disk access that is still less as compare

> WAL fsync which we have to perform for each transaction.

>

You mentioned that your clog is not on ssd, but surely at this scale of

hardware, the hdd the clog is on has a bbu in front of it, no?

But I thought Andres' concern was not about fsync, but about the fact that

the SLRU does linear scans (repeatedly) of the buffers while holding the

control lock? At some point, scanning more and more buffers under the lock

is going to cause more contention than scanning fewer buffers and just

evicting a page will.
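A toy model may help show the trade-off being described. The Python sketch below is not PostgreSQL's SLRU code; it assumes a fixed array of slots that is searched linearly for a hit and then searched again for a least-recently-used victim, and it counts every slot visited as work done while the control lock would be held. The class name, counters, and workload are all invented for the example.

```python
class SlruSketch:
    """Toy SLRU: fixed slots, linear search, LRU replacement."""

    def __init__(self, num_slots):
        self.page_in_slot = [None] * num_slots   # page held by each slot
        self.last_used = [0] * num_slots         # logical time of last access
        self.clock = 0
        self.slots_scanned = 0                   # proxy for lock-held work
        self.replacements = 0

    def access(self, page):
        self.clock += 1
        # First pass: look for the page in every slot.
        for slot, held in enumerate(self.page_in_slot):
            self.slots_scanned += 1
            if held == page:
                self.last_used[slot] = self.clock
                return slot
        # Miss: second pass over all slots to find the LRU victim.
        num_slots = len(self.page_in_slot)
        victim = min(range(num_slots), key=self.last_used.__getitem__)
        self.slots_scanned += num_slots
        self.page_in_slot[victim] = page
        self.last_used[victim] = self.clock
        self.replacements += 1
        return victim

def run(num_slots, pages):
    slru = SlruSketch(num_slots)
    for page in pages:
        slru.access(page)
    return slru.slots_scanned, slru.replacements

# Contrived worst case: cycling over 200 pages defeats LRU for any pool
# smaller than 200 slots, so every access is a miss in both runs.
workload = [i % 200 for i in range(2000)]
scanned_32, misses_32 = run(32, workload)
scanned_128, misses_128 = run(128, workload)
```

With this deliberately hostile workload both pool sizes miss on every access, so the larger pool does four times the scanning for no gain. Real workloads have locality, so this only illustrates where the cost would come from, not how large it is in practice.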

Cheers,

Jeff

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-10-05 03:27:00 |

| Message-ID: | CAA4eK1Jh0BLZZycQWU8whLr4Drmw=o_iENDeHw8tJUGrP320bw@mail.gmail.com |

| Lists: | pgsql-hackers |

On Mon, Oct 5, 2015 at 6:34 AM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

> On Fri, Sep 11, 2015 at 8:01 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

> wrote:

>>

>>

>> If I am not wrong we need 1048576 number of transactions difference

>> for each record to make each CLOG access a disk access, so if we

>> increment XID counter by 100, then probably every 10000th (or multiplier

>> of 10000) transaction would go for disk access.

>>

>> The number 1048576 is derived by below calc:

>> #define CLOG_XACTS_PER_BYTE 4

>> #define CLOG_XACTS_PER_PAGE (BLCKSZ * CLOG_XACTS_PER_BYTE)

>>

>

>> Transaction difference required for each transaction to go for disk

>> access:

>> CLOG_XACTS_PER_PAGE * num_clog_buffers.

>>

>

>

> That guarantees that every xid occupies its own 32-contiguous-pages chunk

> of clog.

>

> But clog pages are not pulled in and out in 32-page chunks, but one page

> chunks. So you would only need 32,768 differences to get every real

> transaction to live on its own clog page, which means every look up of a

> different real transaction would have to do a page replacement.

>

Agreed, but that doesn't affect the result of the test done above.

> (I think your references to disk access here are misleading. Isn't the

> issue here the contention on the lock that controls the page replacement,

> not the actual IO?)

>

>

The point is that if there is no I/O needed, then all the read-access for

transaction status will just use Shared locks, however if there is an I/O,

then it would need an Exclusive lock.
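The distinction can be sketched in Python as a simple counter of lock modes. This is a simplified model, not the server code: it assumes a lookup first probes the buffers under a shared lock and, only when the page is not resident, retries under an exclusive lock to bring the page in. Eviction is ignored, and `count_lock_modes` is a made-up name.

```python
def count_lock_modes(resident_pages, lookups):
    """Return (shared, exclusive) lock acquisitions for a series of lookups."""
    resident = set(resident_pages)
    shared = 0
    exclusive = 0
    for page in lookups:
        shared += 1                 # every lookup starts with a shared probe
        if page not in resident:
            exclusive += 1          # miss: page must be read in exclusively
            resident.add(page)      # eviction is ignored in this sketch
    return shared, exclusive

# Pages 1 and 2 are already in memory; page 3 is not.
shared, exclusive = count_lock_modes({1, 2}, [1, 2, 1, 3, 3])
```

In this model only the first lookup of page 3 needs the exclusive lock; everything else proceeds under shared locks, which is why the miss rate matters for contention even when the I/O itself is cheap.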

> I've attached a patch that allows you set the guc "JJ_xid",which makes it

> burn the given number of xids every time one new one is asked for. (The

> patch introduces lots of other stuff as well, but I didn't feel like

> ripping the irrelevant parts out--if you don't set any of the other gucs it

> introduces from their defaults, they shouldn't cause you trouble.) I think

> there are other tools around that do the same thing, but this is the one I

> know about. It is easy to drive the system into wrap-around shutdown with

> this, so lowering autovacuum_vacuum_cost_delay is a good idea.

>

> Actually I haven't attached it, because then the commitfest app will list

> it as the patch needing review, instead I've put it here

> https://drive.google.com/file/d/0Bzqrh1SO9FcERV9EUThtT3pacmM/view?usp=sharing

>

>

Thanks, I think probably this could also be used for testing.

>> I think reducing to every 100th access for transaction status as disk access

>> is sufficient to prove that there is no regression with the patch for the

>> scenario asked by Andres or do you think it is not?

>>

>> Now another possibility here could be that we try by commenting out fsync

>> in CLOG path to see how much it impact the performance of this test and

>> then for pgbench test. I am not sure there will be any impact because

>> even

>> every 100th transaction goes to disk access that is still less as compare

>> WAL fsync which we have to perform for each transaction.

>>

>

> You mentioned that your clog is not on ssd, but surely at this scale of

> hardware, the hdd the clog is on has a bbu in front of it, no?

>

>

Yes.

> But I thought Andres' concern was not about fsync, but about the fact that

> the SLRU does linear scans (repeatedly) of the buffers while holding the

> control lock? At some point, scanning more and more buffers under the lock

> is going to cause more contention than scanning fewer buffers and just

> evicting a page will.

>

>

Yes, at some point that could matter, but I could not see any impact

with 64 or 128 CLOG buffers.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 06:50:36 |

| Message-ID: | CAA4eK1L_snxM_JcrzEstNq9P66++F4kKFce=1r5+D1vzPofdtg@mail.gmail.com |

| Lists: | pgsql-hackers |

On Mon, Sep 21, 2015 at 6:25 PM, Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com> wrote:

> On 09/18/2015 11:11 PM, Amit Kapila wrote:

>

>>> I have done various runs on an Intel Xeon 28C/56T w/ 256Gb mem and 2 x

>>> RAID10 SSD (data + xlog) with Min(64,).

>>>

>> The benefit with this patch could be seen at somewhat higher

>> client-count as you can see in my initial mail, can you please

>> once try with client count > 64?

>>

> Client count were from 1 to 80.

>

> I did do one run with Min(128,) like you, but didn't see any difference in

> the result compared to Min(64,), so focused instead in the sync_commit

> on/off testing case.

>

I think the main focus for tests in this area should be on higher client

counts. At what scale factors have you taken the data, and what other

non-default settings have you used? By the way, have you tried dropping

and recreating the database and restarting the server after each run?

Can you share the exact steps you used to perform the tests? I am not

sure why it is not showing the benefit in your testing. Maybe the benefit

appears only on a somewhat higher-end machine, or some of the settings

used for the test are not the same as mine, or the way the read-write

workload of pgbench was tested is different.

In any case, I went ahead and tried further reducing the CLogControlLock

contention by grouping the transaction status updates. The basic idea

is the same as the one used to reduce the ProcArrayLock contention [1], which

is to allow one of the procs to become the leader and update the transaction

status for other active transactions in the system. This has helped to reduce

the contention around CLogControlLock. The attached patch

group_update_clog_v1.patch implements this idea.
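The leader/follower idea can be sketched in a few lines of Python. This is a single-threaded toy, not the patch itself: it assumes that the first backend to queue a request while the queue is empty becomes the leader, and that the leader then takes the exclusive lock once and applies every queued update. The class, method names, and the dictionary standing in for clog pages are all hypothetical.

```python
class GroupClogUpdateSketch:
    """Toy model of grouped transaction-status updates."""

    def __init__(self):
        self.pending = []                # (xid, status) requests awaiting a leader
        self.clog = {}                   # xid -> status, stands in for clog pages
        self.exclusive_acquisitions = 0  # how often the lock was taken

    def request_update(self, xid, status):
        """Queue an update; return True if the caller became the leader."""
        becomes_leader = not self.pending
        self.pending.append((xid, status))
        return becomes_leader

    def leader_flush(self):
        """Leader takes the lock once and applies all queued updates."""
        self.exclusive_acquisitions += 1
        batch, self.pending = self.pending, []
        for xid, status in batch:
            self.clog[xid] = status
        return len(batch)

group = GroupClogUpdateSketch()
roles = [group.request_update(xid, "committed") for xid in (101, 102, 103, 104)]
applied = group.leader_flush()
```

Here four status updates cost one exclusive acquisition instead of four. How followers wait and how the queue is built without taking the lock are the hard parts, and this sketch does not model them.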

I have taken performance data with this patch to see the impact at

various scale-factors. All the data is for cases when data fits in shared

buffers and is taken against commit - 5c90a2ff on server with below

configuration and non-default postgresql.conf settings.

Performance Data

-----------------------------

RAM - 500GB

8 sockets, 64 cores (hyperthreaded, 128 threads total)

Non-default parameters

------------------------------------

max_connections = 300

shared_buffers=8GB

min_wal_size=10GB

max_wal_size=15GB

checkpoint_timeout =35min

maintenance_work_mem = 1GB

checkpoint_completion_target = 0.9

wal_buffers = 256MB

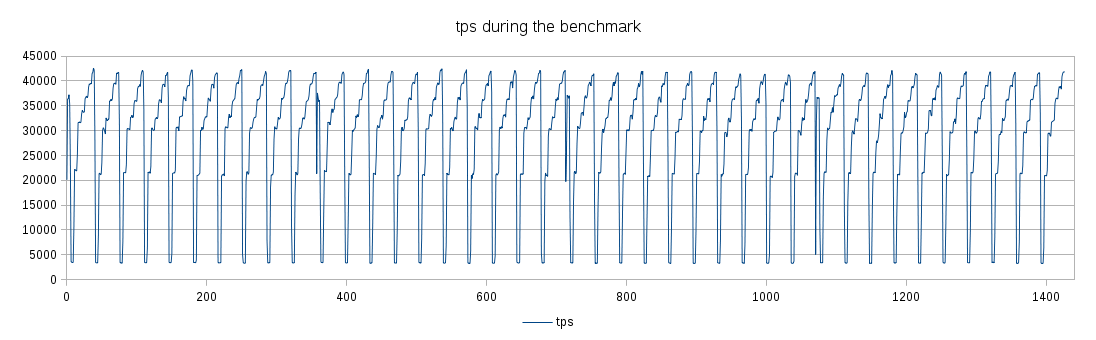

Refer to the attached files for performance data.

sc_300_perf.png - This data indicates that at scale_factor 300, there is a

gain of ~15% at higher client counts, without degradation at lower client

count.

different_sc_perf.png - At various scale factors, there is a gain from

~15% to 41% at higher client counts and in some cases we see gain

of ~5% at somewhat moderate client count (64) as well.

perf_write_clogcontrollock_data_v1.ods - Detailed performance data at

various client counts and scale factors.

Feel free to ask for more details if the data in attached files is not

clear.

Below is the LWLock_Stats information with and without patch:

Stats Data

---------

A. scale_factor = 300; shared_buffers=32GB; client_connections - 128

HEAD - 5c90a2ff

----------------

CLogControlLock Data

------------------------

PID 94100 lwlock main 11: shacq 678672 exacq 326477 blk 204427 spindelay 8532 dequeue self 93192

PID 94129 lwlock main 11: shacq 757047 exacq 363176 blk 207840 spindelay 8866 dequeue self 96601

PID 94115 lwlock main 11: shacq 721632 exacq 345967 blk 207665 spindelay 8595 dequeue self 96185

PID 94011 lwlock main 11: shacq 501900 exacq 241346 blk 173295 spindelay 7882 dequeue self 78134

PID 94087 lwlock main 11: shacq 653701 exacq 314311 blk 201733 spindelay 8419 dequeue self 92190

After Patch group_update_clog_v1

----------------

CLogControlLock Data

------------------------

PID 100205 lwlock main 11: shacq 836897 exacq 176007 blk 116328 spindelay 1206 dequeue self 54485

PID 100034 lwlock main 11: shacq 437610 exacq 91419 blk 77523 spindelay 994 dequeue self 35419

PID 100175 lwlock main 11: shacq 748948 exacq 158970 blk 114027 spindelay 1277 dequeue self 53486

PID 100162 lwlock main 11: shacq 717262 exacq 152807 blk 115268 spindelay 1227 dequeue self 51643

PID 100214 lwlock main 11: shacq 856044 exacq 180422 blk 113695 spindelay 1202 dequeue self 54435

The above data indicates that contention due to CLogControlLock is

reduced by around 50% with this patch.
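The "around 50%" figure can be cross-checked against the ten sampled backends above with a short Python sketch. It simply totals the `exacq` and `blk` columns for each set of five lines; the variable and function names are invented, and five PIDs per side is only a sample of the 128 clients.

```python
import re

HEAD = """
PID 94100 lwlock main 11: shacq 678672 exacq 326477 blk 204427 spindelay 8532 dequeue self 93192
PID 94129 lwlock main 11: shacq 757047 exacq 363176 blk 207840 spindelay 8866 dequeue self 96601
PID 94115 lwlock main 11: shacq 721632 exacq 345967 blk 207665 spindelay 8595 dequeue self 96185
PID 94011 lwlock main 11: shacq 501900 exacq 241346 blk 173295 spindelay 7882 dequeue self 78134
PID 94087 lwlock main 11: shacq 653701 exacq 314311 blk 201733 spindelay 8419 dequeue self 92190
"""

PATCHED = """
PID 100205 lwlock main 11: shacq 836897 exacq 176007 blk 116328 spindelay 1206 dequeue self 54485
PID 100034 lwlock main 11: shacq 437610 exacq 91419 blk 77523 spindelay 994 dequeue self 35419
PID 100175 lwlock main 11: shacq 748948 exacq 158970 blk 114027 spindelay 1277 dequeue self 53486
PID 100162 lwlock main 11: shacq 717262 exacq 152807 blk 115268 spindelay 1227 dequeue self 51643
PID 100214 lwlock main 11: shacq 856044 exacq 180422 blk 113695 spindelay 1202 dequeue self 54435
"""

def totals(log_text):
    """Sum the exclusive-acquire and blocked counts over all lines."""
    rows = re.findall(r"exacq (\d+) blk (\d+)", log_text)
    exacq = sum(int(e) for e, _ in rows)
    blk = sum(int(b) for _, b in rows)
    return exacq, blk

head_exacq, head_blk = totals(HEAD)
patch_exacq, patch_blk = totals(PATCHED)

exacq_reduction = 1 - patch_exacq / head_exacq   # fraction fewer exclusive acquires
blk_reduction = 1 - patch_blk / head_blk         # fraction fewer blocked acquires
```

Over these samples, exclusive acquisitions drop by roughly 52% and blocked acquisitions by roughly 46%, which is consistent with the "around 50%" summary.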

The reasons for remaining contention could be:

1. Readers of clog data (checking transaction status) can take an

Exclusive CLogControlLock when reading a page from disk. This can

contend with other readers (shared lockers of CLogControlLock) and with

the exclusive locker that updates transaction status. One way to

mitigate this contention is to increase the number of CLOG buffers, for

which a patch has already been posted on this thread.

2. Readers of clog data (checking transaction status) take a shared

CLogControlLock, which can contend with the exclusive locker (group leader)

that updates transaction status. I tried to reduce the amount of work done

by the group leader by letting it read the CLOG page just once

for all the transactions in the group that updated the same CLOG page

(an idea similar to what we currently use for updating the status of

transactions that have a sub-transaction tree), but that did not give any

further performance boost, so I left it out.

I think we could also reduce the contention around CLogControlLock in

other ways, by doing somewhat major surgery on SLRU, such as using

buffer pools similar to shared buffers, but this idea gives us a moderate

improvement without much impact on the existing mechanism.

Thoughts?

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| Attachment | Content-Type | Size |

|---|---|---|

| group_update_clog_v1.patch | application/octet-stream | 12.5 KB |

| sc_300_perf.png | image/png | 66.2 KB |

| different_sc_perf.png | image/png | 82.0 KB |

| perf_write_clogcontrollock_data_v1.ods | application/vnd.oasis.opendocument.spreadsheet | 24.8 KB |

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Peter Geoghegan <pg(at)heroku(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 08:02:29 |

| Message-ID: | CAA4eK1KtyBowAQNUf9WfaOKeG3wqc46mt6FQZHROf8Jd+AM-aQ@mail.gmail.com |

| Lists: | pgsql-hackers |

On Mon, Sep 21, 2015 at 6:34 AM, Peter Geoghegan <pg(at)heroku(dot)com> wrote:

>

> On Mon, Aug 31, 2015 at 9:49 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> > Increasing CLOG buffers to 64 helps in reducing the contention due to second

> > reason. Experiments revealed that increasing CLOG buffers only helps

> > once the contention around ProcArrayLock is reduced.

>

> There has been a lot of research on bitmap compression, more or less

> for the benefit of bitmap index access methods.

>

> Simple techniques like run length encoding are effective for some

> things. If the need to map the bitmap into memory to access the status

> of transactions is a concern, there has been work done on that, too.

> Byte-aligned bitmap compression is a technique that might offer a good

> trade-off between compression clog, and decompression overhead -- I

> think that there basically is no decompression overhead, because set

> operations can be performed on the "compressed" representation

> directly. There are other techniques, too.

>

I can see the benefits of compressing CLOG, but I think it won't be

straightforward. Apart from handling compression and decompression,

the code currently relies on the transaction id to find the clog page; that

will not work after compression unless we change that mapping.

Also, I think it could avoid the need to increase clog buffers, which

can help readers, but it won't help much with contention around clog

updates for transaction status.
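The dependence on the transaction id can be made concrete with a small Python sketch of the arithmetic. It assumes the default BLCKSZ of 8192 and 2 status bits per transaction; `locate` is a hypothetical helper name, not a function from the source tree.

```python
BLCKSZ = 8192                  # assumed default block size
CLOG_BITS_PER_XACT = 2
CLOG_XACTS_PER_BYTE = 4
CLOG_XACTS_PER_PAGE = BLCKSZ * CLOG_XACTS_PER_BYTE

def locate(xid):
    """Map an xid to (page, byte offset within page, bit shift within byte)."""
    page = xid // CLOG_XACTS_PER_PAGE
    index_in_page = xid % CLOG_XACTS_PER_PAGE
    byte_offset = index_in_page // CLOG_XACTS_PER_BYTE
    bit_shift = (xid % CLOG_XACTS_PER_BYTE) * CLOG_BITS_PER_XACT
    return page, byte_offset, bit_shift
```

Because the position is pure arithmetic on the xid, any scheme that stores a variable number of transactions per page would need an extra index or a different mapping to find the right page.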

Overall this idea sounds promising, but I think the work involved is more

than the benefit I am expecting for the current optimization we are

discussing.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Peter Geoghegan <pg(at)heroku(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 08:06:47 |

| Message-ID: | CAA4eK1+9AcXMBAnWi5ntVwmQfd3pR4m7UMJtoEd1Lb6zPemgHQ@mail.gmail.com |

| Lists: | pgsql-hackers |

On Tue, Nov 17, 2015 at 1:32 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

wrote:

>

> On Mon, Sep 21, 2015 at 6:34 AM, Peter Geoghegan <pg(at)heroku(dot)com> wrote:

> >

> > On Mon, Aug 31, 2015 at 9:49 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> > > Increasing CLOG buffers to 64 helps in reducing the contention due to second

> > > reason. Experiments revealed that increasing CLOG buffers only helps

> > > once the contention around ProcArrayLock is reduced.

> >

>

> Overall this idea sounds promising, but I think the work involved is more

> than the benefit I am expecting for the current optimization we are

> discussing.

>

Sorry, I think the last line is slightly confusing; let me try to write it

again:

Overall this idea sounds promising, but I think the work involved is more

than the benefit expected from the current optimization we are

discussing.

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 09:15:35 |

| Message-ID: | CANP8+jKGcCypXg6cTsAx=vOze81wHrEmEUPu9qWj8BfwvB9Thw@mail.gmail.com |

| Lists: | pgsql-hackers |

On 17 November 2015 at 06:50, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> In anycase, I went ahead and tried further reducing the CLogControlLock

> contention by grouping the transaction status updates. The basic idea

> is same as is used to reduce the ProcArrayLock contention [1] which is to

> allow one of the proc to become leader and update the transaction status

> for

> other active transactions in system. This has helped to reduce the

> contention

> around CLOGControlLock.

>

Sounds good. The technique has proved effective with proc array and makes

sense to use here also.

> Attached patch group_update_clog_v1.patch

> implements this idea.

>

I don't think we should be doing this only for transactions that don't have

subtransactions. We are trying to speed up real cases, not just benchmarks.

So +1 for the concept; the patch is going in the right direction, though let's do the

full press-up.

> The above data indicates that contention due to CLogControlLock is

> reduced by around 50% with this patch.

>

> The reasons for remaining contention could be:

>

> 1. Readers of clog data (checking transaction status data) can take

> Exclusive CLOGControlLock when reading the page from disk, this can

> contend with other Readers (shared lockers of CLogControlLock) and with

> exclusive locker which updates transaction status. One of the ways to

> mitigate this contention is to increase the number of CLOG buffers for

> which

> patch has been already posted on this thread.

>

> 2. Readers of clog data (checking transaction status data) takes shared

> CLOGControlLock which can contend with exclusive locker (Group leader)

> which

> updates transaction status. I have tried to reduce the amount of work done

> by group leader, by allowing group leader to just read the Clog page once

> for all the transactions in the group which updated the same CLOG page

> (idea similar to what we currently we use for updating the status of

> transactions

> having sub-transaction tree), but that hasn't given any further

> performance boost,

> so I left it.

>

> I think we can use some other ways as well to reduce the contention around

> CLOGControlLock by doing somewhat major surgery around SLRU like using

> buffer pools similar to shared buffers, but this idea gives us moderate

> improvement without much impact on exiting mechanism.

>

My earlier patch to reduce contention by changing required lock level is

still valid here. Increasing the number of buffers doesn't do enough to

remove that.

I'm working on a patch to use a fast-update area like we use for GIN. If a

page is not available when we want to record commit, just store it in a

hash table, when not in crash recovery. I'm experimenting with writing WAL

for any xids earlier than last checkpoint, though we could also trickle

writes and/or flush them in batches at checkpoint time - your code would

help there.

The hash table can also be used for lookups. My thinking is that most reads

of older xids are caused by long running transactions, so they cause a page

fault at commit and then other page faults later when people read them back

in. The hash table works for both kinds of page fault.
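One way to picture the fast-update idea is the Python sketch below. It is purely illustrative and not based on any posted patch: it assumes commits for non-resident pages are parked in a hash table, lookups consult that table first, and pending entries are applied in a batch later (for example at checkpoint time). WAL logging and crash recovery are not modeled, and every name here is hypothetical.

```python
class FastUpdateSketch:
    """Toy fast-update area in front of a set of resident clog pages."""

    def __init__(self, resident_pages, xacts_per_page=32768):
        self.xacts_per_page = xacts_per_page
        # page number -> {xid: status} for pages currently in memory
        self.resident = {page: {} for page in resident_pages}
        # xid -> status for commits whose page was not in memory
        self.pending = {}

    def record_commit(self, xid, status="committed"):
        page = xid // self.xacts_per_page
        if page in self.resident:
            self.resident[page][xid] = status
        else:
            self.pending[xid] = status   # defer instead of faulting the page in

    def lookup(self, xid):
        if xid in self.pending:
            return self.pending[xid]
        page = xid // self.xacts_per_page
        return self.resident.get(page, {}).get(xid)

    def flush(self):
        """Apply all pending entries in one batch; return how many were applied."""
        for xid, status in self.pending.items():
            page = xid // self.xacts_per_page
            self.resident.setdefault(page, {})[xid] = status
        flushed = len(self.pending)
        self.pending.clear()
        return flushed

area = FastUpdateSketch(resident_pages={10})
area.record_commit(10 * 32768 + 5)   # page 10 is resident: written directly
area.record_commit(7)                # page 0 is not resident: parked
```

In this toy, the commit of xid 7 avoids a page fault at commit time and is still visible to lookups through the hash table until it is flushed.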

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

| Cc: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 11:27:20 |

| Message-ID: | CAA4eK1LJmK=CfHf3xu+fPHMPzs6GYXON_Ni7LvncP7LddWaXWQ@mail.gmail.com |

| Lists: | pgsql-hackers |

On Tue, Nov 17, 2015 at 2:45 PM, Simon Riggs <simon(at)2ndquadrant(dot)com> wrote:

> On 17 November 2015 at 06:50, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>

>

>> In anycase, I went ahead and tried further reducing the CLogControlLock

>> contention by grouping the transaction status updates. The basic idea

>> is same as is used to reduce the ProcArrayLock contention [1] which is to

>> allow one of the proc to become leader and update the transaction status

>> for

>> other active transactions in system. This has helped to reduce the

>> contention

>> around CLOGControlLock.

>>

>

> Sounds good. The technique has proved effective with proc array and makes

> sense to use here also.

>

>

>> Attached patch group_update_clog_v1.patch

>> implements this idea.

>>

>

> I don't think we should be doing this only for transactions that don't

> have subtransactions.

>

The reason for not doing this optimization for subtransactions is that we

need to advertise the information the group leader needs for updating

the transaction status, and if we want to do it for subtransactions, then

all the subtransaction ids need to be advertised. The tricky part is

that the number of subtransactions whose status needs to be updated

is dynamic, so reserving memory for it would be difficult.

However, we can reserve some space in PGPROC like we do for XidCache

(cache of subtransaction ids) and then use that to advertise that many

xids at a time, or just allow this optimization if the number of

subtransactions is less than or equal to the size of this new XidCache.

I am not sure it is a good idea to use the existing XidCache for this

purpose, in which case we need separate space in PGPROC for it. I

don't see allocating space for 64 or so subxids as a problem; however,

doing it for a bigger number could be a cause for concern.
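The second option (use the group update only when the subxids fit in a fixed-size area) could look roughly like the Python sketch below. The limit of 64 is taken from the "64 or so" figure above and is not a settled value; the function names and the dictionary layout are hypothetical.

```python
MAX_ADVERTISED_SUBXIDS = 64   # hypothetical size of the reserved area

def can_use_group_update(subxids, limit=MAX_ADVERTISED_SUBXIDS):
    """Group update is possible only if every subxid fits in the fixed area."""
    return len(subxids) <= limit

def advertise(xid, subxids, limit=MAX_ADVERTISED_SUBXIDS):
    """Return the record a backend would publish, or None to fall back.

    None means the backend updates its own transaction status the
    ordinary way, taking the lock itself.
    """
    if not can_use_group_update(subxids, limit):
        return None
    return {"xid": xid, "nsubxids": len(subxids), "subxids": list(subxids)}
```

With this shape, a transaction with a handful of subtransactions still benefits from grouping, and one that exceeds the limit simply takes the existing path rather than failing.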

> We are trying to speed up real cases, not just benchmarks.

>

> So +1 for the concept, patch is going in right direction though lets do

> the full press-up.

>

>

I have mentioned above the reason for not doing it for subtransactions. If

you think it is viable to reserve space in shared memory for this purpose,

then I can include the optimization for subtransactions as well.

>> The above data indicates that contention due to CLogControlLock is

>> reduced by around 50% with this patch.

>>

>> The reasons for remaining contention could be:

>>

>> 1. Readers of clog data (checking transaction status data) can take

>> Exclusive CLOGControlLock when reading the page from disk, this can

>> contend with other Readers (shared lockers of CLogControlLock) and with

>> exclusive locker which updates transaction status. One of the ways to

>> mitigate this contention is to increase the number of CLOG buffers for

>> which

>> patch has been already posted on this thread.

>>

>> 2. Readers of clog data (checking transaction status data) takes shared

>> CLOGControlLock which can contend with exclusive locker (Group leader)

>> which

>> updates transaction status. I have tried to reduce the amount of work

>> done

>> by group leader, by allowing group leader to just read the Clog page once

>> for all the transactions in the group which updated the same CLOG page

>> (idea similar to what we currently we use for updating the status of

>> transactions

>> having sub-transaction tree), but that hasn't given any further

>> performance boost,

>> so I left it.

>>

>> I think we can use some other ways as well to reduce the contention around

>> CLOGControlLock by doing somewhat major surgery around SLRU like using

>> buffer pools similar to shared buffers, but this idea gives us moderate

>> improvement without much impact on exiting mechanism.

>>

>

> My earlier patch to reduce contention by changing required lock level is

> still valid here. Increasing the number of buffers doesn't do enough to

> remove that.

>

>

I understand that increasing the number of buffers alone is not

enough; that's why I tried this group leader idea. However,

doing something along the lines of what you describe below

(handling page faults) could avoid the need for increasing buffers.

> I'm working on a patch to use a fast-update area like we use for GIN. If a

> page is not available when we want to record commit, just store it in a

> hash table, when not in crash recovery. I'm experimenting with writing WAL

> for any xids earlier than last checkpoint, though we could also trickle

> writes and/or flush them in batches at checkpoint time - your code would

> help there.

>

> The hash table can also be used for lookups. My thinking is that most

> reads of older xids are caused by long running transactions, so they cause

> a page fault at commit and then other page faults later when people read

> them back in. The hash table works for both kinds of page fault.

>

>

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Simon Riggs <simon(at)2ndquadrant(dot)com>, Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 11:34:17 |

| Message-ID: | CANP8+j+q4+ZP0JExgvDRPBpW6cDjb15nvjBR3iWmyoHH--3dCg@mail.gmail.com |

| Lists: | pgsql-hackers |

On 17 November 2015 at 11:27, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>>> Attached patch group_update_clog_v1.patch

>>> implements this idea.

>>>

>>

>> I don't think we should be doing this only for transactions that don't

>> have subtransactions.

>>

>

> The reason for not doing this optimization for subtransactions is that we

> need to advertise the information that Group leader needs for updating

> the transaction status and if we want to do it for sub transactions, then

> all the subtransaction id's needs to be advertised. Now here the tricky

> part is that number of subtransactions for which the status needs to

> be updated is dynamic, so reserving memory for it would be difficult.

> However, we can reserve some space in Proc like we do for XidCache

> (cache of sub transaction ids) and then use that to advertise that many

> Xid's at-a-time or just allow this optimization if number of

> subtransactions

> is lesser than or equal to the size of this new XidCache. I am not sure

> if it is good idea to use the existing XidCache for this purpose in which

> case we need to have a separate space in PGProc for this purpose. I

> don't see allocating space for 64 or so subxid's as a problem, however

> doing it for bigger number could be cause of concern.

>

>

>> We are trying to speed up real cases, not just benchmarks.

>>

>> So +1 for the concept, patch is going in right direction though lets do

>> the full press-up.

>>

>>

> I have mentioned above the reason for not doing it for sub transactions, if

> you think it is viable to reserve space in shared memory for this purpose,

> then

> I can include the optimization for subtransactions as well.

>

The number of subxids is unbounded, so as you say, reserving shmem isn't

viable.

I'm interested in real-world cases, so allocating 65 xids per process isn't

needed, but what we can say is that the optimization shouldn't break down

abruptly in the presence of a small/reasonable number of subtransactions.

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

| Cc: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 11:48:20 |

| Message-ID: | CAA4eK1+pgGLNuumZo6swNZGd1_=Sfve0fuT58JQ-KpYKF4064A@mail.gmail.com |

| Lists: | pgsql-hackers |

On Tue, Nov 17, 2015 at 5:04 PM, Simon Riggs <simon(at)2ndquadrant(dot)com> wrote:

> On 17 November 2015 at 11:27, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

>

>>> We are trying to speed up real cases, not just benchmarks.

>>>

>>> So +1 for the concept, patch is going in right direction though lets do

>>> the full press-up.

>>>

>>>

>> I have mentioned above the reason for not doing it for sub transactions,

>> if

>> you think it is viable to reserve space in shared memory for this

>> purpose, then

>> I can include the optimization for subtransactions as well.

>>

>

> The number of subxids is unbounded, so as you say, reserving shmem isn't

> viable.

>

> I'm interested in real world cases, so allocating 65 xids per process

> isn't needed, but what we can say is that the optimization shouldn't break down

> abruptly in the presence of a small/reasonable number of subtransactions.

>

>

I think in that case what we can do is this: if the total number of

subtransactions is less than or equal to 64 (we can find that from the

overflowed flag in PGXact), then apply this optimisation, else use

the existing flow to update the transaction status. I think for that we

don't even need to reserve any additional memory. Does that sound

sensible to you?
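
To make the proposal above concrete, here is a minimal sketch of the check, assuming a simplified stand-in for the per-backend state. The names DemoXact, DEMO_MAX_CACHED_SUBXIDS and demo_can_use_group_update are hypothetical and are not taken from the patch; only the overflowed flag and the limit of 64 come from the discussion.

```c
#include <stdbool.h>

/* Hypothetical limit, mirroring the "64" mentioned in this thread. */
#define DEMO_MAX_CACHED_SUBXIDS 64

/* Simplified stand-in for the per-backend transaction state. */
typedef struct DemoXact
{
    bool overflowed;   /* true once the subxid cache could not hold every subxid */
    int  nsubxids;     /* number of cached subxids; meaningful when !overflowed */
} DemoXact;

/*
 * Return true when the commit could take the group-update path,
 * false when it should fall back to the existing flow.
 */
static bool
demo_can_use_group_update(const DemoXact *xact)
{
    if (xact->overflowed)
        return false;   /* unknown number of subxids: use the existing flow */

    return xact->nsubxids <= DEMO_MAX_CACHED_SUBXIDS;
}
```

The point of the sketch is only that the decision needs no new shared memory: both inputs are already advertised per backend.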

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

| Cc: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 12:20:16 |

| Message-ID: | CAA4eK1LjL6OrCKBubTtwPZgcYdZJ6ytW=wsGPv+w2JLjo6Zxjg@mail.gmail.com |

| Lists: | pgsql-hackers |

On Tue, Nov 17, 2015 at 5:18 PM, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

wrote:

> On Tue, Nov 17, 2015 at 5:04 PM, Simon Riggs <simon(at)2ndquadrant(dot)com>

> wrote:

>

>> On 17 November 2015 at 11:27, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

>> wrote:

>>

>>>> We are trying to speed up real cases, not just benchmarks.

>>>>

>>>> So +1 for the concept, patch is going in right direction though lets do

>>>> the full press-up.

>>>>

>>>>

>>> I have mentioned above the reason for not doing it for sub transactions,

>>> if

>>> you think it is viable to reserve space in shared memory for this

>>> purpose, then

>>> I can include the optimization for subtransactions as well.

>>>

>>

>> The number of subxids is unbounded, so as you say, reserving shmem isn't

>> viable.

>>

>> I'm interested in real world cases, so allocating 65 xids per process

>> isn't needed, but what we can say is that the optimization shouldn't break down

>> abruptly in the presence of a small/reasonable number of subtransactions.

>>

>>

> I think in that case what we can do is this: if the total number of

> subtransactions is less than or equal to 64 (we can find that from the

> overflowed flag in PGXact), then apply this optimisation, else use

> the existing flow to update the transaction status. I think for that we

> don't even need to reserve any additional memory.

>

I think this won't work as it is, because subxids in XidCache could be

on different pages, in which case we either need an additional flag

in the XidCache array or a separate array to indicate for which subxids

we want to update the status. I don't see any better way to do this

optimization for sub transactions, do you have something else in

mind?
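
To illustrate the page problem described above, here is a small sketch that maps xids to CLOG pages and checks whether a transaction and its subxids all land on one page. It assumes the default 8 kB block size and two status bits per transaction (so 32768 xids per page); the DEMO_ constants and demo_ function names are made up for this example and are not the ones used in clog.c.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed values: default 8 kB block, 2 status bits per transaction. */
#define DEMO_BLCKSZ          8192
#define DEMO_XACTS_PER_BYTE  4
#define DEMO_XACTS_PER_PAGE  (DEMO_BLCKSZ * DEMO_XACTS_PER_BYTE)

typedef uint32_t DemoXid;

/* Map a transaction id to the CLOG page holding its status bits. */
static uint32_t
demo_xid_to_page(DemoXid xid)
{
    return xid / DEMO_XACTS_PER_PAGE;
}

/*
 * Return true if the top-level xid and all of its subxids share one
 * CLOG page, i.e. a single page update would cover the whole set.
 */
static bool
demo_all_on_same_page(DemoXid top, const DemoXid *subxids, int nsubxids)
{
    uint32_t page = demo_xid_to_page(top);

    for (int i = 0; i < nsubxids; i++)
    {
        if (demo_xid_to_page(subxids[i]) != page)
            return false;   /* this subxid needs a different page */
    }
    return true;
}
```

When the check fails, a leader would need to know which subxids belong to the page it is updating, which is the extra flag or separate array mentioned above.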

With Regards,

Amit Kapila.

EnterpriseDB: http://www.enterprisedb.com

| From: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Simon Riggs <simon(at)2ndquadrant(dot)com>, Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 13:00:54 |

| Message-ID: | CANP8+j+hZ8ekC++eMGq33+MFiNrPNwc-GnBNfRdRjktDPd+G0g@mail.gmail.com |

| Lists: | pgsql-hackers |

On 17 November 2015 at 11:48, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> On Tue, Nov 17, 2015 at 5:04 PM, Simon Riggs <simon(at)2ndquadrant(dot)com>

> wrote:

>

>> On 17 November 2015 at 11:27, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com>

>> wrote:

>>

>>>> We are trying to speed up real cases, not just benchmarks.

>>>>

>>>> So +1 for the concept, patch is going in right direction though lets do

>>>> the full press-up.

>>>>

>>>>

>>> I have mentioned above the reason for not doing it for sub transactions,

>>> if

>>> you think it is viable to reserve space in shared memory for this

>>> purpose, then

>>> I can include the optimization for subtransactions as well.

>>>

>>

>> The number of subxids is unbounded, so as you say, reserving shmem isn't

>> viable.

>>

>> I'm interested in real world cases, so allocating 65 xids per process

>> isn't needed, but what we can say is that the optimization shouldn't break down

>> abruptly in the presence of a small/reasonable number of subtransactions.

>>

>>

> I think in that case what we can do is this: if the total number of

> subtransactions is less than or equal to 64 (we can find that from the

> overflowed flag in PGXact), then apply this optimisation, else use

> the existing flow to update the transaction status. I think for that we

> don't even need to reserve any additional memory. Does that sound

> sensible to you?

>

I understand you to mean that the leader should look backwards through the

queue collecting xids while !(PGXACT->overflowed).

No additional shmem is required.
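
One possible reading of that, as a rough sketch only: the leader walks the queue of waiting members and gathers xids until it meets a member whose cache has overflowed. The DemoMember list and demo_collect_xids below are hypothetical stand-ins, not the structures used by the patch, and stopping at the first overflowed member is an assumption about what "while" means here.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t DemoXid;

/* Hypothetical queue entry for one backend waiting on the group leader. */
typedef struct DemoMember
{
    DemoXid            xid;         /* xid whose status should be set */
    bool               overflowed;  /* subxid cache overflowed for this backend */
    struct DemoMember *next;        /* next member in the queue, or NULL */
} DemoMember;

/*
 * Walk the queue from head, copying xids into out[] until the queue ends,
 * an overflowed member is found, or out[] is full. Returns the count copied.
 */
static size_t
demo_collect_xids(const DemoMember *head, DemoXid *out, size_t cap)
{
    size_t n = 0;

    for (const DemoMember *m = head;
         m != NULL && !m->overflowed && n < cap;
         m = m->next)
    {
        out[n++] = m->xid;
    }
    return n;
}
```

Everything the leader reads in this sketch is state the members already publish, which matches the remark that no extra shared memory is needed.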

--

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| From: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

|---|---|

| To: | Simon Riggs <simon(at)2ndquadrant(dot)com> |

| Cc: | Jesper Pedersen <jesper(dot)pedersen(at)redhat(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Speed up Clog Access by increasing CLOG buffers |

| Date: | 2015-11-17 13:41:28 |

| Message-ID: | CAA4eK1Ksgc=-5oPq67KoZ0z1B8v+gRJ8UNWJGtsqou=WuFUdWA@mail.gmail.com |

| Lists: | pgsql-hackers |

On Tue, Nov 17, 2015 at 6:30 PM, Simon Riggs <simon(at)2ndquadrant(dot)com> wrote: