Re: spinlocks on HP-UX

| Lists: | pgsql-hackers |

|---|

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | spinlocks on HP-UX |

| Date: | 2011-08-28 00:09:08 |

| Message-ID: | CA+TgmoZvATZV+eLh3U35jaNnwwzLL5ewUU_-t0X=T0Qwas+ZdA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I was able to obtain access to a 32-core HP-UX server. I repeated the

pgbench -S testing that I have previously done on Linux, and found

that the results were not too good. Here are the results at scale

factor 100, on 9.2devel, with various numbers of clients. Five minute

runs, shared_buffers=8GB.

1:tps = 5590.070816 (including connections establishing)

8:tps = 37660.233932 (including connections establishing)

16:tps = 67366.099286 (including connections establishing)

32:tps = 82781.624665 (including connections establishing)

48:tps = 18589.995074 (including connections establishing)

64:tps = 16424.661371 (including connections establishing)

And just for comparison, here are the numbers at scale factor 1000:

1:tps = 4751.768608 (including connections establishing)

8:tps = 33621.474490 (including connections establishing)

16:tps = 58959.043171 (including connections establishing)

32:tps = 78801.265189 (including connections establishing)

48:tps = 21635.234969 (including connections establishing)

64:tps = 18611.863567 (including connections establishing)

After mulling over the vmstat output for a bit, I began to suspect

spinlock contention. I took a look at document called "Implementing

Spinlocks on the Intel Itanium Architecture and PA-RISC", by Tor

Ekqvist and David Graves and available via the HP web site, which

states that when spinning on a spinlock on these machines, you should

use a regular, unlocked test first and use the atomic test only when

the unlocked test looks OK. I tried implementing this in two ways,

and both produced results which are FAR superior to our current

implementation. First, I did this:

--- a/src/include/storage/s_lock.h

+++ b/src/include/storage/s_lock.h

@@ -726,7 +726,7 @@ tas(volatile slock_t *lock)

typedef unsigned int slock_t;

#include <ia64/sys/inline.h>

-#define TAS(lock) _Asm_xchg(_SZ_W, lock, 1, _LDHINT_NONE)

+#define TAS(lock) (*(lock) ? 1 : _Asm_xchg(_SZ_W, lock, 1, _LDHINT_NONE))

#endif /* HPUX on IA64, non gcc */

That resulted in these numbers. Scale factor 100:

1:tps = 5569.911714 (including connections establishing)

8:tps = 37365.364468 (including connections establishing)

16:tps = 63596.261875 (including connections establishing)

32:tps = 95948.157678 (including connections establishing)

48:tps = 90708.253920 (including connections establishing)

64:tps = 100109.065744 (including connections establishing)

Scale factor 1000:

1:tps = 4878.332996 (including connections establishing)

8:tps = 33245.469907 (including connections establishing)

16:tps = 56708.424880 (including connections establishing)

48:tps = 69652.232635 (including connections establishing)

64:tps = 70593.208637 (including connections establishing)

Then, I did this:

--- a/src/backend/storage/lmgr/s_lock.c

+++ b/src/backend/storage/lmgr/s_lock.c

@@ -96,7 +96,7 @@ s_lock(volatile slock_t *lock, const char *file, int line)

int delays = 0;

int cur_delay = 0;

- while (TAS(lock))

+ while (*lock ? 1 : TAS(lock))

{

/* CPU-specific delay each time through the loop */

SPIN_DELAY();

That resulted in these numbers, at scale factor 100:

1:tps = 5564.059494 (including connections establishing)

8:tps = 37487.090798 (including connections establishing)

16:tps = 66061.524760 (including connections establishing)

32:tps = 96535.523905 (including connections establishing)

48:tps = 92031.618360 (including connections establishing)

64:tps = 106813.631701 (including connections establishing)

And at scale factor 1000:

1:tps = 4980.338246 (including connections establishing)

8:tps = 33576.680072 (including connections establishing)

16:tps = 55618.677975 (including connections establishing)

32:tps = 73589.442746 (including connections establishing)

48:tps = 70987.026228 (including connections establishing)

Note sure why I am missing the 64-client results for that last set of

tests, but no matter.

Of course, we can't apply the second patch as it stands, because I

tested it on x86 and it loses. But it seems pretty clear we need to

do it at least for this architecture...

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-28 15:35:33 |

| Message-ID: | 29042.1314545733@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> First, I did this:

> -#define TAS(lock) _Asm_xchg(_SZ_W, lock, 1, _LDHINT_NONE)

> +#define TAS(lock) (*(lock) ? 1 : _Asm_xchg(_SZ_W, lock, 1, _LDHINT_NONE))

Seems reasonable, and similar to x86 logic.

> Then, I did this:

> - while (TAS(lock))

> + while (*lock ? 1 : TAS(lock))

Er, what? That sure looks like a manual application of what you'd

already done in the TAS macro.

> Of course, we can't apply the second patch as it stands, because I

> tested it on x86 and it loses. But it seems pretty clear we need to

> do it at least for this architecture...

Please clarify: when you say "this architecture", are you talking about

IA64 or PA-RISC? Is there any reason to think that this is specific to

HP-UX rather than any other system on the same architecture? (I'm sure

I can get access to some IA64 clusters at Red Hat, though maybe not

64-core ones.)

I don't have an objection to the TAS macro change, but I do object to

fooling with the hardware-independent code in s_lock.c ... especially

when the additional improvement seems barely above the noise threshold.

You ought to be able to do whatever you need inside the TAS macro.

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-28 21:51:44 |

| Message-ID: | CA+Tgmobr9-s3D4fR8PDk=XR_LaoZ-Lb5LiK-BL5H-1Cyy8uO8g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sun, Aug 28, 2011 at 11:35 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>> First, I did this:

>

>> -#define TAS(lock) _Asm_xchg(_SZ_W, lock, 1, _LDHINT_NONE)

>> +#define TAS(lock) (*(lock) ? 1 : _Asm_xchg(_SZ_W, lock, 1, _LDHINT_NONE))

>

> Seems reasonable, and similar to x86 logic.

>

>> Then, I did this:

>

>> - while (TAS(lock))

>> + while (*lock ? 1 : TAS(lock))

>

> Er, what? That sure looks like a manual application of what you'd

> already done in the TAS macro.

Sorry, I blew through that a little too blithely. If you change TAS()

itself, then even the very first attempt to acquire the lock will try

the unlocked instruction first, whereas changing s_lock() allows you

to do something different in the contended case than you do in the

uncontended case. We COULD just change the TAS() macro since, in this

case, it seems to make only a minor difference, but what I was

thinking is that we could change s_lock.h to define two macros, TAS()

and TAS_SPIN(). If a particular architecture defines TAS() but not

TAS_SPIN(), then we define TAS_SPIN(x) to be TAS(x). Then, S_LOCK()

can stay as-is - calling TAS() - but s_lock() can call TAS_SPIN(),

which will normally be the same as TAS() but can be made different on

any architecture where the retry loop should do something different

than the initial attempt.

> Please clarify: when you say "this architecture", are you talking about

> IA64 or PA-RISC? Is there any reason to think that this is specific to

> HP-UX rather than any other system on the same architecture? (I'm sure

> I can get access to some IA64 clusters at Red Hat, though maybe not

> 64-core ones.)

I tested on IA64; I don't currently have access to a PA-RISC box. The

documentation I'm looking at implies that the same approach would be

desirable there, but that's just an unsubstantiated rumor at this

point....

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-28 22:15:51 |

| Message-ID: | 20331.1314569751@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> On Sun, Aug 28, 2011 at 11:35 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>>> Then, I did this:

>>>

>>> - while (TAS(lock))

>>> + while (*lock ? 1 : TAS(lock))

>> Er, what? That sure looks like a manual application of what you'd

>> already done in the TAS macro.

> Sorry, I blew through that a little too blithely. If you change TAS()

> itself, then even the very first attempt to acquire the lock will try

> the unlocked instruction first, whereas changing s_lock() allows you

> to do something different in the contended case than you do in the

> uncontended case.

Yeah, I figured out that was probably what you meant a little while

later. I found a 64-CPU IA64 machine in Red Hat's test labs and am

currently trying to replicate your results; report to follow.

> We COULD just change the TAS() macro since, in this

> case, it seems to make only a minor difference, but what I was

> thinking is that we could change s_lock.h to define two macros, TAS()

> and TAS_SPIN().

Yeah, I was thinking along the same lines, though perhaps the name of

the new macro could use a little bikeshedding.

The comments in s_lock.h note that the unlocked test in x86 TAS is of

uncertain usefulness. It seems entirely possible to me that we ought

to use a similar design on x86, ie, use the unlocked test only once

we've entered the delay loop.

>> Please clarify: when you say "this architecture", are you talking about

>> IA64 or PA-RISC? Is there any reason to think that this is specific to

>> HP-UX rather than any other system on the same architecture? (I'm sure

>> I can get access to some IA64 clusters at Red Hat, though maybe not

>> 64-core ones.)

> I tested on IA64; I don't currently have access to a PA-RISC box. The

> documentation I'm looking at implies that the same approach would be

> desirable there, but that's just an unsubstantiated rumor at this

> point....

Well, I've got a PA-RISC box, but it's only a single processor so it's

not gonna prove much. Anybody?

regards, tom lane

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-28 23:19:57 |

| Message-ID: | 22039.1314573597@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I wrote:

> Yeah, I figured out that was probably what you meant a little while

> later. I found a 64-CPU IA64 machine in Red Hat's test labs and am

> currently trying to replicate your results; report to follow.

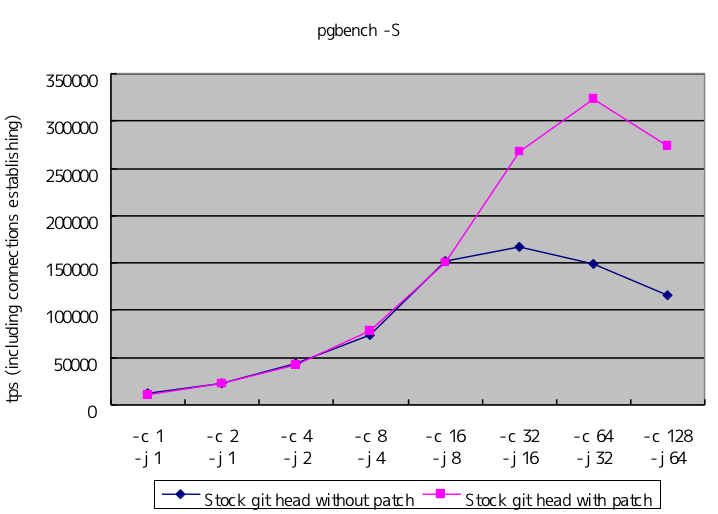

OK, these results are on a 64-processor SGI IA64 machine (AFAICT, 64

independent sockets, no hyperthreading or any funny business); 124GB

in 32 NUMA nodes; running RHEL5.7, gcc 4.1.2. I built today's git

head with --enable-debug (but not --enable-cassert) and ran with all

default configuration settings except shared_buffers = 8GB and

max_connections = 200. The test database is initialized at -s 100.

I did not change the database between runs, but restarted the postmaster

and then did this to warm the caches a tad:

pgbench -c 1 -j 1 -S -T 30 bench

Per-run pgbench parameters are as shown below --- note in particular

that I assigned one pgbench thread per 8 backends.

The numbers are fairly variable even with 5-minute runs; I did each

series twice so you could get a feeling for how much.

Today's git head:

pgbench -c 1 -j 1 -S -T 300 bench tps = 5835.213934 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8499.223161 (including ...

pgbench -c 8 -j 1 -S -T 300 bench tps = 15197.126952 (including ...

pgbench -c 16 -j 2 -S -T 300 bench tps = 30803.255561 (including ...

pgbench -c 32 -j 4 -S -T 300 bench tps = 65795.356797 (including ...

pgbench -c 64 -j 8 -S -T 300 bench tps = 81644.914241 (including ...

pgbench -c 96 -j 12 -S -T 300 bench tps = 40059.202836 (including ...

pgbench -c 128 -j 16 -S -T 300 bench tps = 21309.615001 (including ...

run 2:

pgbench -c 1 -j 1 -S -T 300 bench tps = 5787.310115 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8747.104236 (including ...

pgbench -c 8 -j 1 -S -T 300 bench tps = 14655.369995 (including ...

pgbench -c 16 -j 2 -S -T 300 bench tps = 28287.254924 (including ...

pgbench -c 32 -j 4 -S -T 300 bench tps = 61614.715187 (including ...

pgbench -c 64 -j 8 -S -T 300 bench tps = 79754.640518 (including ...

pgbench -c 96 -j 12 -S -T 300 bench tps = 40334.994324 (including ...

pgbench -c 128 -j 16 -S -T 300 bench tps = 23285.271257 (including ...

With modified TAS macro (see patch 1 below):

pgbench -c 1 -j 1 -S -T 300 bench tps = 6171.454468 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8709.003728 (including ...

pgbench -c 8 -j 1 -S -T 300 bench tps = 14902.731035 (including ...

pgbench -c 16 -j 2 -S -T 300 bench tps = 29789.744482 (including ...

pgbench -c 32 -j 4 -S -T 300 bench tps = 59991.549128 (including ...

pgbench -c 64 -j 8 -S -T 300 bench tps = 117369.287466 (including ...

pgbench -c 96 -j 12 -S -T 300 bench tps = 112583.144495 (including ...

pgbench -c 128 -j 16 -S -T 300 bench tps = 110231.305282 (including ...

run 2:

pgbench -c 1 -j 1 -S -T 300 bench tps = 5670.097936 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8230.786940 (including ...

pgbench -c 8 -j 1 -S -T 300 bench tps = 14785.952481 (including ...

pgbench -c 16 -j 2 -S -T 300 bench tps = 29335.875139 (including ...

pgbench -c 32 -j 4 -S -T 300 bench tps = 59605.433837 (including ...

pgbench -c 64 -j 8 -S -T 300 bench tps = 108884.294519 (including ...

pgbench -c 96 -j 12 -S -T 300 bench tps = 110387.439978 (including ...

pgbench -c 128 -j 16 -S -T 300 bench tps = 109046.121191 (including ...

With unlocked test in s_lock.c delay loop only (patch 2 below):

pgbench -c 1 -j 1 -S -T 300 bench tps = 5426.491088 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8787.939425 (including ...

pgbench -c 8 -j 1 -S -T 300 bench tps = 15720.801359 (including ...

pgbench -c 16 -j 2 -S -T 300 bench tps = 33711.102718 (including ...

pgbench -c 32 -j 4 -S -T 300 bench tps = 61829.180234 (including ...

pgbench -c 64 -j 8 -S -T 300 bench tps = 109781.655020 (including ...

pgbench -c 96 -j 12 -S -T 300 bench tps = 107132.848280 (including ...

pgbench -c 128 -j 16 -S -T 300 bench tps = 106533.630986 (including ...

run 2:

pgbench -c 1 -j 1 -S -T 300 bench tps = 5705.283316 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8442.798662 (including ...

pgbench -c 8 -j 1 -S -T 300 bench tps = 14423.723837 (including ...

pgbench -c 16 -j 2 -S -T 300 bench tps = 29112.751995 (including ...

pgbench -c 32 -j 4 -S -T 300 bench tps = 62258.984033 (including ...

pgbench -c 64 -j 8 -S -T 300 bench tps = 107741.988800 (including ...

pgbench -c 96 -j 12 -S -T 300 bench tps = 107138.968981 (including ...

pgbench -c 128 -j 16 -S -T 300 bench tps = 106110.215138 (including ...

So this pretty well confirms Robert's results, in particular that all of

the win from an unlocked test comes from using it in the delay loop.

Given the lack of evidence that a general change in TAS() is beneficial,

I'm inclined to vote against it, on the grounds that the extra test is

surely a loss at some level when there is not contention.

(IOW, +1 for inventing a second macro to use in the delay loop only.)

We ought to do similar tests on other architectures. I found some

lots-o-processors x86_64 machines at Red Hat, but they don't seem to

own any PPC systems with more than 8 processors. Anybody have big

iron with other non-Intel chips?

regards, tom lane

Patch 1: change TAS globally, non-HPUX code:

*** src/include/storage/s_lock.h.orig Sat Jan 1 13:27:24 2011

--- src/include/storage/s_lock.h Sun Aug 28 13:32:47 2011

***************

*** 228,233 ****

--- 228,240 ----

{

long int ret;

+ /*

+ * Use a non-locking test before the locking instruction proper. This

+ * appears to be a very significant win on many-core IA64.

+ */

+ if (*lock)

+ return 1;

+

__asm__ __volatile__(

" xchg4 %0=%1,%2 \n"

: "=r"(ret), "+m"(*lock)

***************

*** 243,248 ****

--- 250,262 ----

{

int ret;

+ /*

+ * Use a non-locking test before the locking instruction proper. This

+ * appears to be a very significant win on many-core IA64.

+ */

+ if (*lock)

+ return 1;

+

ret = _InterlockedExchange(lock,1); /* this is a xchg asm macro */

return ret;

Patch 2: change s_lock only (same as Robert's quick hack):

*** src/backend/storage/lmgr/s_lock.c.orig Sat Jan 1 13:27:09 2011

--- src/backend/storage/lmgr/s_lock.c Sun Aug 28 14:02:29 2011

***************

*** 96,102 ****

int delays = 0;

int cur_delay = 0;

! while (TAS(lock))

{

/* CPU-specific delay each time through the loop */

SPIN_DELAY();

--- 96,102 ----

int delays = 0;

int cur_delay = 0;

! while (*lock ? 1 : TAS(lock))

{

/* CPU-specific delay each time through the loop */

SPIN_DELAY();

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-28 23:49:18 |

| Message-ID: | CA+Tgmoa4Eh0_7JBevcLhN8ETYoL8uzx21JsVd2dURCd=Q=K0fA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sun, Aug 28, 2011 at 7:19 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> So this pretty well confirms Robert's results, in particular that all of

> the win from an unlocked test comes from using it in the delay loop.

> Given the lack of evidence that a general change in TAS() is beneficial,

> I'm inclined to vote against it, on the grounds that the extra test is

> surely a loss at some level when there is not contention.

> (IOW, +1 for inventing a second macro to use in the delay loop only.)

Beautiful. Got a naming preference for that second macro? I

suggested TAS_SPIN() because it's what you use when you spin, as

opposed to what you use in the uncontended case, but I'm not attached

to that.

> We ought to do similar tests on other architectures. I found some

> lots-o-processors x86_64 machines at Red Hat, but they don't seem to

> own any PPC systems with more than 8 processors. Anybody have big

> iron with other non-Intel chips?

Aside from PPC, it would probably be worth testing SPARC and ARM if we

can find machines. Anything else is probably too old or too marginal

to get excited about. AFAIK these effects don't manifest with <32

cores, so I suspect that a lot of what's in s_lock.h is irrelevant

just because many of those architectures are too old to exist in

32-core versions.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 00:00:05 |

| Message-ID: | 22599.1314576005@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> On Sun, Aug 28, 2011 at 7:19 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>> (IOW, +1 for inventing a second macro to use in the delay loop only.)

> Beautiful. Got a naming preference for that second macro? I

> suggested TAS_SPIN() because it's what you use when you spin, as

> opposed to what you use in the uncontended case, but I'm not attached

> to that.

I had been thinking TAS_CONTENDED, but on reflection there's not a

strong argument for that over TAS_SPIN. Do what you will.

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 14:20:18 |

| Message-ID: | CA+TgmobUPXzREgDUOB6+4+Jy1n1R1gkAym_Pj5__G+BhBUn40g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Sun, Aug 28, 2011 at 8:00 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>> On Sun, Aug 28, 2011 at 7:19 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>>> (IOW, +1 for inventing a second macro to use in the delay loop only.)

>

>> Beautiful. Got a naming preference for that second macro? I

>> suggested TAS_SPIN() because it's what you use when you spin, as

>> opposed to what you use in the uncontended case, but I'm not attached

>> to that.

>

> I had been thinking TAS_CONTENDED, but on reflection there's not a

> strong argument for that over TAS_SPIN. Do what you will.

OK, done. I think while we're tidying up here we ought to do

something about this comment:

* ANOTHER CAUTION: be sure that TAS(), TAS_SPIN(), and

S_UNLOCK() represent

* sequence points, ie, loads and stores of other values must not be moved

* across a lock or unlock. In most cases it suffices to make

the operation

* be done through a "volatile" pointer.

IIUC, this is basically total nonsense. volatile only constrains the

optimizer, not the CPU; and only with regard to the ordering of one

volatile access vs. another, NOT the ordering of volatile accesses

with any non-volatile accesses that may be floating around. So its

practical utility for synchronization purposes seems to be nearly

zero. I think what this should say is that we expect these operations

to act as a full memory fence. Note that in some other worlds (e.g.

Linux) a spinlock acquisition or release is only required to act as a

half-fence; that is, other loads and stores are allowed to bleed into

the critical section but not out. However, I think we have been

assuming full-fence behavior. In either case, claiming that the use

of a volatile pointer is all that's needed here strikes me as pretty

misleading.

In the department of loose ends, there are a bunch of other things

that maybe need cleanup here: (1) the gcc/intel compiler cases on

ia64, (2) PA-RISC, (3) ARM, (4) PowerPC... and we should also perhaps

reconsider the 32-bit x86 case. Right now TAS() says:

/*

* Use a non-locking test before asserting the bus lock. Note that the

* extra test appears to be a small loss on some x86 platforms

and a small

* win on others; it's by no means clear that we should keep it.

*/

I can't get too excited about spending a lot of effort optimizing

32-bit PostgreSQL on any architecture at this point, but if someone

else is, we might want to check whether it makes more sense to do the

non-locking test only in TAS_SPIN().

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 15:07:48 |

| Message-ID: | 21426.1314630468@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> OK, done. I think while we're tidying up here we ought to do

> something about this comment:

> * ANOTHER CAUTION: be sure that TAS(), TAS_SPIN(), and

> S_UNLOCK() represent

> * sequence points, ie, loads and stores of other values must not be moved

> * across a lock or unlock. In most cases it suffices to make

> the operation

> * be done through a "volatile" pointer.

> IIUC, this is basically total nonsense.

It could maybe be rewritten for more clarity, but it's far from being

nonsense. The responsibility for having an actual hardware memory fence

instruction lies with the author of the TAS macro. But the

responsibility for keeping the compiler from reordering stuff around the

TAS call is that of the *user* of the TAS macro (or spinlock), and in

most cases the way to do that is to make sure that both the spinlock and

the shared data structure are referenced through volatile pointers.

This isn't academic; we've seen bugs from failure to do that. (BTW,

the reason for not being equivalently tense about LWLock-protected

structures is that the compiler generally won't try to move operations

around an out-of-line function call. It's the fact that spinlocks are

inline-able that creates the risk here.)

> In the department of loose ends, there are a bunch of other things

> that maybe need cleanup here: (1) the gcc/intel compiler cases on

> ia64, (2) PA-RISC, (3) ARM, (4) PowerPC... and we should also perhaps

> reconsider the 32-bit x86 case.

The results I got yesterday seem sufficient basis to change the

gcc/intel cases for IA64, so I will go do that if you didn't already.

I am also currently running tests on x86_64 and PPC using Red Hat test

machines --- expect results later today. Red Hat doesn't seem to own

any many-CPU machines that are 32-bit-only, and I rather wonder if there

are any. It might be that it only makes sense to optimize the x86 paths

for a few CPUs, in which case this test methodology may not be very

helpful.

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 15:29:16 |

| Message-ID: | CA+TgmobzhPvmQxnv2DbUFHCnPabWHAuhb4ktyG34nogvepoO-g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 11:07 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>> OK, done. I think while we're tidying up here we ought to do

>> something about this comment:

>

>> * ANOTHER CAUTION: be sure that TAS(), TAS_SPIN(), and

>> S_UNLOCK() represent

>> * sequence points, ie, loads and stores of other values must not be moved

>> * across a lock or unlock. In most cases it suffices to make

>> the operation

>> * be done through a "volatile" pointer.

>

>> IIUC, this is basically total nonsense.

>

> It could maybe be rewritten for more clarity, but it's far from being

> nonsense. The responsibility for having an actual hardware memory fence

> instruction lies with the author of the TAS macro.

Right... but the comment implies that you probably don't need one, and

doesn't even mention that you MIGHT need one.

>> In the department of loose ends, there are a bunch of other things

>> that maybe need cleanup here: (1) the gcc/intel compiler cases on

>> ia64, (2) PA-RISC, (3) ARM, (4) PowerPC... and we should also perhaps

>> reconsider the 32-bit x86 case.

>

> The results I got yesterday seem sufficient basis to change the

> gcc/intel cases for IA64, so I will go do that if you didn't already.

I did not; please go ahead. I wasn't relishing the idea trying to

figure out how to install gcc to test that case.

> I am also currently running tests on x86_64 and PPC using Red Hat test

> machines --- expect results later today. Red Hat doesn't seem to own

> any many-CPU machines that are 32-bit-only, and I rather wonder if there

> are any. It might be that it only makes sense to optimize the x86 paths

> for a few CPUs, in which case this test methodology may not be very

> helpful.

FWIW, I tried spinning unlocked on x86_64 at 32 cores and got a

regression. Don't have any PPC gear at present.

I think optimizing spinlocks for machines with only a few CPUs is

probably pointless. Based on what I've seen so far, spinlock

contention even at 16 CPUs is negligible pretty much no matter what

you do. Whether your implementation is fast or slow isn't going to

matter, because even an inefficient implementation will account for

only a negligible percentage of the total CPU time - much less than 1%

- as opposed to a 64-core machine, where it's not that hard to find

cases where spin-waits consume the *majority* of available CPU time

(recall previous discussion of lseek). So I'm disinclined to spend

the time it would take to tinker with the 32-bit code, because it will

not matter; for that platform we're better off spending our time

installing a hash table in ScanKeywordLookup(). And there's always a

possibility that AMD and Intel chips could be different, or there

could even be differences between different chip generations from the

same manufacturer, so all in all it seems like a pretty unrewarding

exercise.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 15:42:28 |

| Message-ID: | 22142.1314632548@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> On Mon, Aug 29, 2011 at 11:07 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>>> IIUC, this is basically total nonsense.

>> It could maybe be rewritten for more clarity, but it's far from being

>> nonsense. The responsibility for having an actual hardware memory fence

>> instruction lies with the author of the TAS macro.

> Right... but the comment implies that you probably don't need one, and

> doesn't even mention that you MIGHT need one.

I think maybe we need to split it into two paragraphs, one addressing

the TAS author and the other for the TAS user. I'll have a go at that.

> I think optimizing spinlocks for machines with only a few CPUs is

> probably pointless. Based on what I've seen so far, spinlock

> contention even at 16 CPUs is negligible pretty much no matter what

> you do.

We did find significant differences several years ago, testing on

machines that probably had no more than four cores; that's where the

existing comments in s_lock.h came from. Whether those tests are

still relevant for today's source code is not obvious though.

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 15:47:48 |

| Message-ID: | CA+TgmoanMFiNbOTXA8TLqmy23Wa6whp9K3ccNd0s1M8GkrK5Qw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 11:42 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>> On Mon, Aug 29, 2011 at 11:07 AM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>>> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>>>> IIUC, this is basically total nonsense.

>

>>> It could maybe be rewritten for more clarity, but it's far from being

>>> nonsense. The responsibility for having an actual hardware memory fence

>>> instruction lies with the author of the TAS macro.

>

>> Right... but the comment implies that you probably don't need one, and

>> doesn't even mention that you MIGHT need one.

>

> I think maybe we need to split it into two paragraphs, one addressing

> the TAS author and the other for the TAS user. I'll have a go at that.

OK.

>> I think optimizing spinlocks for machines with only a few CPUs is

>> probably pointless. Based on what I've seen so far, spinlock

>> contention even at 16 CPUs is negligible pretty much no matter what

>> you do.

>

> We did find significant differences several years ago, testing on

> machines that probably had no more than four cores; that's where the

> existing comments in s_lock.h came from. Whether those tests are

> still relevant for today's source code is not obvious though.

Hmm, OK. I guess if you want to put energy into it, I'm not going to

complain too much... just not sure it's the best use of time.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Greg Stark <stark(at)mit(dot)edu> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 15:48:23 |

| Message-ID: | CAM-w4HNkj-Snn2jud-Au2vy-d+35YF1td7MFsXeD+M3Yahyq+g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 4:07 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>> * ANOTHER CAUTION: be sure that TAS(), TAS_SPIN(), and

>> S_UNLOCK() represent

>> * sequence points, ie, loads and stores of other values must not be moved

>> * across a lock or unlock. In most cases it suffices to make

>> the operation

>> * be done through a "volatile" pointer.

>

>> IIUC, this is basically total nonsense.

>

> It could maybe be rewritten for more clarity, but it's far from being

> nonsense.

The confusion for me is that it's talking about sequence points and

volatile pointers in the same breath as if one implies the other.

Making something a volatile pointer dose not create a sequence point.

It requires that the compiler not move the access or store across any

sequence points that are already there.

It might be helpful to include the actual bug that the comment is

trying to warn against because iirc it was a real case that caused you

to add the volatile modifiers.

--

greg

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Greg Stark <stark(at)mit(dot)edu> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 16:00:08 |

| Message-ID: | 22545.1314633608@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Greg Stark <stark(at)mit(dot)edu> writes:

> The confusion for me is that it's talking about sequence points and

> volatile pointers in the same breath as if one implies the other.

> Making something a volatile pointer dose not create a sequence point.

> It requires that the compiler not move the access or store across any

> sequence points that are already there.

Well, no, I don't think that's the useful way to think about it. Modern

compilers seem to only honor sequence points in terms of the semantics

seen by the executing program; they don't believe that they can't

reorder loads/stores freely. And as long as the memory in question is

only used by the given program, they're right. But for memory locations

shared with other threads of execution, you have to be careful about the

order of accesses to those locations. My understanding of "volatile"

is that the compiler is forbidden from altering the order of

volatile-qualified loads and stores *relative to each other*, or from

optimizing away a load or store that seems redundant in the context of

the given program. That's got nothing to do with sequence points per se.

> It might be helpful to include the actual bug that the comment is

> trying to warn against because iirc it was a real case that caused you

> to add the volatile modifiers.

Well, if there were one and only one bug involved here, it wouldn't be

such a critical problem ...

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | Greg Stark <stark(at)mit(dot)edu>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 16:53:51 |

| Message-ID: | CA+TgmoYnDkOdUQMyvPM9viJ3ewemdik1RK+tjtWKpv6N2xd6-Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 12:00 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Greg Stark <stark(at)mit(dot)edu> writes:

>> The confusion for me is that it's talking about sequence points and

>> volatile pointers in the same breath as if one implies the other.

>> Making something a volatile pointer dose not create a sequence point.

>> It requires that the compiler not move the access or store across any

>> sequence points that are already there.

>

> Well, no, I don't think that's the useful way to think about it. Modern

> compilers seem to only honor sequence points in terms of the semantics

> seen by the executing program; they don't believe that they can't

> reorder loads/stores freely.

This discussion seems to miss the fact that there are two levels of

reordering that can happen. First, the compiler can move things

around. Second, the CPU can move things around. Even on x86, for

example, a sequence like this can be reordered:

LOAD A

STORE A

LOAD B

Even though the compiler may emit those instructions in exactly that

order, an x86 CPU can, IIUC, decide to load B before it finishes

storing A, so that the actual apparent execution order as seen by

other CPUs will be either the above, or the above with the last two

instructions reversed. On a weakly ordered CPU, the load of B could

be moved back even further, before the LOAD of A.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Greg Stark <stark(at)mit(dot)edu>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 17:24:36 |

| Message-ID: | 7396.1314638676@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> This discussion seems to miss the fact that there are two levels of

> reordering that can happen. First, the compiler can move things

> around. Second, the CPU can move things around.

Right, I think that's exactly the problem with the previous wording of

that comment; it doesn't address the two logical levels involved.

I've rewritten it, see what you think.

* Another caution for users of these macros is that it is the caller's

* responsibility to ensure that the compiler doesn't re-order accesses

* to shared memory to precede the actual lock acquisition, or follow the

* lock release. Typically we handle this by using volatile-qualified

* pointers to refer to both the spinlock itself and the shared data

* structure being accessed within the spinlocked critical section.

* That fixes it because compilers are not allowed to re-order accesses

* to volatile objects relative to other such accesses.

*

* On platforms with weak memory ordering, the TAS(), TAS_SPIN(), and

* S_UNLOCK() macros must further include hardware-level memory fence

* instructions to prevent similar re-ordering at the hardware level.

* TAS() and TAS_SPIN() must guarantee that loads and stores issued after

* the macro are not executed until the lock has been obtained. Conversely,

* S_UNLOCK() must guarantee that loads and stores issued before the macro

* have been executed before the lock is released.

regards, tom lane

| From: | Greg Stark <stark(at)mit(dot)edu> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 17:34:12 |

| Message-ID: | CAM-w4HMrD+kp0Qh_DE9MtERw1BWHaXc8V-gfkHnAP=bA__QcOg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 5:53 PM, Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> Even though the compiler may emit those instructions in exactly that

> order, an x86 CPU can, IIUC, decide to load B before it finishes

> storing A, so that the actual apparent execution order as seen by

> other CPUs will be either the above, or the above with the last two

> instructions reversed. On a weakly ordered CPU, the load of B could

> be moved back even further, before the LOAD of A.

My understanding of what the comment meant is that there is already a

full memory barrier as far as the CPU is concerned due to the TAS or

whatever, but it's important that there also be a sequence point there

so that the volatile memory access isn't reordered by the compiler to

occur before the memory barrier.

I was going to say the same thing as Tom that sequence points and

volatile pointers have nothing at all to do with each other. However

my brief searching online actually seemed to indicate that in fact the

compiler isn't supposed to reorder volatile memory accesses across

sequence points. That seemed to make sense since I couldn't think of

any other way to rigorously describe the constraints the compiler

should operate under.

--

greg

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | Greg Stark <stark(at)mit(dot)edu>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 17:57:07 |

| Message-ID: | CA+TgmobhJtJsLaDQkjMb_5=EBi=NrP4aWL4X7CTjv-rr1r5mfA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 1:24 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Robert Haas <robertmhaas(at)gmail(dot)com> writes:

>> This discussion seems to miss the fact that there are two levels of

>> reordering that can happen. First, the compiler can move things

>> around. Second, the CPU can move things around.

>

> Right, I think that's exactly the problem with the previous wording of

> that comment; it doesn't address the two logical levels involved.

> I've rewritten it, see what you think.

>

> * Another caution for users of these macros is that it is the caller's

> * responsibility to ensure that the compiler doesn't re-order accesses

> * to shared memory to precede the actual lock acquisition, or follow the

> * lock release. Typically we handle this by using volatile-qualified

> * pointers to refer to both the spinlock itself and the shared data

> * structure being accessed within the spinlocked critical section.

> * That fixes it because compilers are not allowed to re-order accesses

> * to volatile objects relative to other such accesses.

> *

> * On platforms with weak memory ordering, the TAS(), TAS_SPIN(), and

> * S_UNLOCK() macros must further include hardware-level memory fence

> * instructions to prevent similar re-ordering at the hardware level.

> * TAS() and TAS_SPIN() must guarantee that loads and stores issued after

> * the macro are not executed until the lock has been obtained. Conversely,

> * S_UNLOCK() must guarantee that loads and stores issued before the macro

> * have been executed before the lock is released.

That's definitely an improvement.

I'm actually not convinced that we're entirely consistent here about

what we require the semantics of acquiring and releasing a spinlock to

be. For example, on x86 and x86_64, we acquire the lock using xchgb,

which acts a full memory barrier. But when we release the lock, we

just zero out the memory address, which is NOT a full memory barrier.

Stores can't cross it, but non-dependent loads of different locations

can back up over it. That's pretty close to a full barrier, but it

isn't, quite. Now, I don't see why that should really cause any

problem, at least for common cases like LWLockAcquire(). If the CPU

prefetches the data protected by the lwlock after we know we've got

the lock before we've actually released the spinlock and returned from

LWLockAcquire(), that should be fine, even good (for performance).

The real problem with being squiffy here is that it's not clear how

weak we can make the fence instruction on weakly ordered architectures

that support multiple types. Right now we're pretty conservative, but

I think that may be costing us. I might be wrong; more research is

needed here; but I think that we should at least start to get our head

about what semantics we actually need.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 18:15:21 |

| Message-ID: | 8292.1314641721@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I wrote:

> I am also currently running tests on x86_64 and PPC using Red Hat test

> machines --- expect results later today.

OK, I ran some more tests. These are not directly comparable to my

previous results with IA64, because (a) I used RHEL6.2 and gcc 4.4.6;

(b) I used half as many pgbench threads as backends, rather than one

thread per eight backends. Testing showed that pgbench cannot saturate

more than two backends per thread in this test environment, as shown

for example by this series:

pgbench -c 8 -j 1 -S -T 300 bench tps = 22091.461409 (including ...

pgbench -c 8 -j 2 -S -T 300 bench tps = 42587.661755 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 77515.057885 (including ...

pgbench -c 8 -j 8 -S -T 300 bench tps = 75830.463821 (including ...

I find this entirely astonishing, BTW; the backend is surely doing far

more than twice as much work per query as pgbench. We need to look into

why pgbench is apparently still such a dog. However, that's not

tremendously relevant to the question of whether we need an unlocked

test in spinlocks.

These tests were run on a 32-CPU Opteron machine (Sun Fire X4600 M2,

8 quad-core sockets). Test conditions the same as my IA64 set, except

for the OS and the -j switches:

Stock git head:

pgbench -c 1 -j 1 -S -T 300 bench tps = 9515.435401 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 20239.289880 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 78628.371372 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 143065.596555 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 227349.424654 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 269016.946095 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 253884.095190 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 269235.253012 (including ...

Non-locked test in TAS():

pgbench -c 1 -j 1 -S -T 300 bench tps = 9316.195621 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 19852.444846 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 77701.546927 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 138926.775553 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 188485.669320 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 253602.490286 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 251181.310600 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 260812.933702 (including ...

Non-locked test in TAS_SPIN() only:

pgbench -c 1 -j 1 -S -T 300 bench tps = 9283.944739 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 20213.208443 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 78824.247744 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 141027.072774 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 201658.416366 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 271035.843105 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 261337.324585 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 271272.921058 (including ...

So basically there is no benefit to the unlocked test on this hardware.

But it doesn't cost much either, which is odd because the last time we

did this type of testing, adding an unlocked test was a "huge loss" on

Opteron. Apparently AMD improved their handling of the case, and/or

the other changes we've made change the usage pattern completely.

I am hoping to do a similar test on another machine with $bignum Xeon

processors, to see if Intel hardware reacts any differently. But that

machine is in the Westford office which is currently without power,

so it will have to wait a few days. (I can no longer get at either

of the machines cited in this mail, either, so if you want to see

more test cases it'll have to wait.)

These tests were run on a 32-processor PPC64 machine (IBM 8406-71Y,

POWER7 architecture; I think it might be 16 cores with hyperthreading,

not sure). The machine has "only" 6GB of RAM so I set shared_buffers to

4GB, other test conditions the same:

Stock git head:

pgbench -c 1 -j 1 -S -T 300 bench tps = 8746.076443 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 12297.297308 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 48697.392492 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 94133.227472 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 126822.857978 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 129364.417801 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 125728.697772 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 131566.394880 (including ...

Non-locked test in TAS():

pgbench -c 1 -j 1 -S -T 300 bench tps = 8810.484890 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 12336.612804 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 49023.435650 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 96306.706556 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 131731.475778 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 133451.416612 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 110076.269474 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 111339.797242 (including ...

Non-locked test in TAS_SPIN() only:

pgbench -c 1 -j 1 -S -T 300 bench tps = 8726.269726 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 12228.415466 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 48227.623829 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 93302.510254 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 130661.097475 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 133009.181697 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 128710.757986 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 133063.460934 (including ...

So basically no value to an unlocked test on this platform either.

regards, tom lane

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Greg Stark <stark(at)mit(dot)edu>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 18:21:50 |

| Message-ID: | 8434.1314642110@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> I'm actually not convinced that we're entirely consistent here about

> what we require the semantics of acquiring and releasing a spinlock to

> be. For example, on x86 and x86_64, we acquire the lock using xchgb,

> which acts a full memory barrier. But when we release the lock, we

> just zero out the memory address, which is NOT a full memory barrier.

> Stores can't cross it, but non-dependent loads of different locations

> can back up over it. That's pretty close to a full barrier, but it

> isn't, quite.

Right. That's why I wrote the comment as I did; it says what the actual

requirement is. There probably are cases where our implementations are

more restrictive than necessary (I hope none where they are weaker).

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 18:32:49 |

| Message-ID: | CA+Tgmoaxzc_qFv6L04wM0wdkGVUkzuNT0pD-dGp-qznqZGO4Zw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Mon, Aug 29, 2011 at 2:15 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> These tests were run on a 32-CPU Opteron machine (Sun Fire X4600 M2,

> 8 quad-core sockets). Test conditions the same as my IA64 set, except

> for the OS and the -j switches:

>

> Stock git head:

>

> pgbench -c 1 -j 1 -S -T 300 bench tps = 9515.435401 (including ...

> pgbench -c 32 -j 16 -S -T 300 bench tps = 227349.424654 (including ...

>

> These tests were run on a 32-processor PPC64 machine (IBM 8406-71Y,

> POWER7 architecture; I think it might be 16 cores with hyperthreading,

> not sure). The machine has "only" 6GB of RAM so I set shared_buffers to

> 4GB, other test conditions the same:

>

> Stock git head:

>

> pgbench -c 1 -j 1 -S -T 300 bench tps = 8746.076443 (including ...

> pgbench -c 32 -j 16 -S -T 300 bench tps = 126822.857978 (including ...

Stepping beyond the immediate issue of whether we want an unlocked

test in there or not (and I agree that based on these numbers we

don't), there's a clear and puzzling difference between those sets of

numbers. The Opteron test is showing 32 clients getting about 23.9

times the throughput of a single client, which is not exactly linear

but is at least respectable, whereas the PPC64 test is showing 32

clients getting just 14.5 times the throughput of a single client,

which is pretty significantly less good. Moreover, cranking it up to

64 clients is squeezing a significant amount of additional work out on

Opteron, but not on PPC64. The HP-UX/Itanium numbers in my OP give a

ratio of 17.3x - a little better than your PPC64 numbers, but

certainly not great.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Greg Stark <stark(at)mit(dot)edu> |

| Cc: | Robert Haas <robertmhaas(at)gmail(dot)com>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 18:41:49 |

| Message-ID: | 8829.1314643309@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Greg Stark <stark(at)mit(dot)edu> writes:

> I was going to say the same thing as Tom that sequence points and

> volatile pointers have nothing at all to do with each other. However

> my brief searching online actually seemed to indicate that in fact the

> compiler isn't supposed to reorder volatile memory accesses across

> sequence points. That seemed to make sense since I couldn't think of

> any other way to rigorously describe the constraints the compiler

> should operate under.

It's a bit confusing. I agree that if the code is written such that

there are two volatile accesses with no intervening sequence point,

the compiler is at liberty to do them in either order; for instance in

foo(*x, *y);

there are no guarantees about which value is fetched first, even if x

and y are volatile-qualified. What's bothering me is that in, say,

*x = 0;

*y = 1;

*z = 2;

if x and z are volatile-qualified but y isn't, I believe the compilers

think they are only required to store into *x before *z, and can reorder

the store to *y around either of the others. So this makes the notion

of a sequence point pretty squishy in itself.

regards, tom lane

| From: | "Kevin Grittner" <Kevin(dot)Grittner(at)wicourts(dot)gov> |

|---|---|

| To: | "Robert Haas" <robertmhaas(at)gmail(dot)com>, "Tom Lane" <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 18:48:12 |

| Message-ID: | 4E5B989C0200002500040844@gw.wicourts.gov |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

> Stepping beyond the immediate issue of whether we want an unlocked

> test in there or not (and I agree that based on these numbers we

> don't), there's a clear and puzzling difference between those sets

> of numbers. The Opteron test is showing 32 clients getting about

> 23.9 times the throughput of a single client, which is not exactly

> linear but is at least respectable, whereas the PPC64 test is

> showing 32 clients getting just 14.5 times the throughput of a

> single client, which is pretty significantly less good. Moreover,

> cranking it up to 64 clients is squeezing a significant amount of

> additional work out on Opteron, but not on PPC64. The

> HP-UX/Itanium numbers in my OP give a ratio of 17.3x - a little

> better than your PPC64 numbers, but certainly not great.

I wouldn't make too much of that without comparing to a STREAM test

(properly configured -- the default array size is likely not to be

large enough for these machines). On a recently delivered 32 core

machine with 256 GB RAM, I saw numbers like this for just RAM

access:

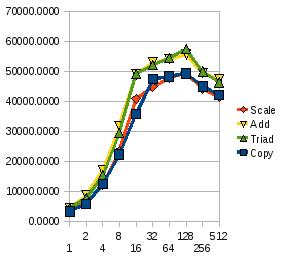

Threads Copy Scale Add Triad

1 3332.3721 3374.8146 4500.1954 4309.7392

2 5822.8107 6158.4621 8563.3236 7756.9050

4 12474.9646 12282.3401 16960.7216 15399.2406

8 22353.6013 23502.4389 31911.5206 29574.8124

16 35760.8782 40946.6710 49108.4386 49264.6576

32 47567.3882 44935.4608 52983.9355 52278.1373

64 48428.9939 47851.7320 54141.8830 54560.0520

128 49354.4303 49586.6092 55663.2606 57489.5798

256 45081.3601 44303.1032 49561.3815 50073.3530

512 42271.9688 41689.8609 47362.4190 46388.9720

Graph attached for those who are visually inclined and have support

for the display of JPEG files.

Note that this is a machine which is *not* configured to be

blazingly fast for a single connection, but to scale up well for a

large number of concurrent processes:

http://www.redbooks.ibm.com/redpapers/pdfs/redp4650.pdf

Unless your benchmarks are falling off a lot faster than the STREAM

test on that hardware, I wouldn't worry.

-Kevin

| Attachment | Content-Type | Size |

|---|---|---|

|

image/jpeg | 12.7 KB |

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | "Kevin Grittner" <Kevin(dot)Grittner(at)wicourts(dot)gov> |

| Cc: | "Robert Haas" <robertmhaas(at)gmail(dot)com>, pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-29 19:12:51 |

| Message-ID: | 10108.1314645171@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

"Kevin Grittner" <Kevin(dot)Grittner(at)wicourts(dot)gov> writes:

> Robert Haas <robertmhaas(at)gmail(dot)com> wrote:

>> Stepping beyond the immediate issue of whether we want an unlocked

>> test in there or not (and I agree that based on these numbers we

>> don't), there's a clear and puzzling difference between those sets

>> of numbers. The Opteron test is showing 32 clients getting about

>> 23.9 times the throughput of a single client, which is not exactly

>> linear but is at least respectable, whereas the PPC64 test is

>> showing 32 clients getting just 14.5 times the throughput of a

>> single client, which is pretty significantly less good.

> I wouldn't make too much of that without comparing to a STREAM test

> (properly configured -- the default array size is likely not to be

> large enough for these machines).

Yeah. One point I didn't mention is that the Opteron machine's memory

is split across 8 NUMA nodes, whereas the PPC machine's isn't. I would

bet there's a significant difference in aggregate available memory

bandwidth.

Also, if the PPC machine really is hyperthreaded (the internal webpage

for it says "Hyper? True" but /proc/cpuinfo doesn't provide any clear

indications), that might mean it's not going to scale too well past 16x

the single-thread case.

regards, tom lane

| From: | Ants Aasma <ants(dot)aasma(at)eesti(dot)ee> |

|---|---|

| To: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | spinlocks on HP-UX |

| Date: | 2011-08-29 21:07:18 |

| Message-ID: | CA+CSw_ufKHvpjfkcZMiFQgLnB_MkmJ_EictSn4+y6dnTdYGW_A@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Sorry, forgot to cc the list.

On Mon, Aug 29, 2011 at 10:12 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> Also, if the PPC machine really is hyperthreaded (the internal webpage

> for it says "Hyper? True" but /proc/cpuinfo doesn't provide any clear

> indications), that might mean it's not going to scale too well past 16x

> the single-thread case.

According to IBM docs [1], 8406-71Y contains one 8 core POWER7 chip

that is 4-way multi-threaded and has 4 memory channels. X4600M2 should

have 16 memory channels, although at 2/3 the transfer rate. 6GB of

memory is a strange amount for the IBM, according to specs it should take

4 or 8GB DIMMs in pairs. Sounds like the server is split into multiple

partitions.

--

Ants Aasma

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Ants Aasma <ants(dot)aasma(at)eesti(dot)ee> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-30 04:59:01 |

| Message-ID: | 7674.1314680341@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Ants Aasma <ants(dot)aasma(at)eesti(dot)ee> writes:

> On Mon, Aug 29, 2011 at 10:12 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

>> Also, if the PPC machine really is hyperthreaded (the internal webpage

>> for it says "Hyper? True" but /proc/cpuinfo doesn't provide any clear

>> indications), that might mean it's not going to scale too well past 16x

>> the single-thread case.

> According to IBM docs [1], 8406-71Y contains one 8 core POWER7 chip

> that is 4-way multi-threaded and has 4 memory channels.

Yeah, I looked at the docs. "Multi threading" is IBM's term for

hyperthreading, that is several instruction streams competing for use of

a single processor core's pipelines. So the 32 virtual processors on

the machine only really represent 8 physically independent cores,

squaring with the hardware designation. I found an IBM doc

http://www-03.ibm.com/systems/resources/pwrsysperf_SMT4OnP7.pdf

suggesting that the throughput benefit of 4-way SMT is typically 1.5 to

2X, that is you max out at 1.5 to 2X as much work as you'd get with just

8 virtual processors on the same 8 cores. So I'd say we're really doing

quite well to get the numbers I got. (The paper also implies that you

get more benefit from SMT with workloads that incur more memory-access

stalls, so the relatively large working set of this test case is helping

it look good.)

> 6GB of

> memory is a strange amount for the IBM, according to specs it should take

> 4 or 8GB DIMMs in pairs. Sounds like the server is split into multiple

> partitions.

I'm confused about that too. There definitely seemed to be only 6GB of

available RAM, but there's no way I can see that memory might be

partitioned across different blades. The blades look pretty independent

...

regards, tom lane

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-30 20:05:52 |

| Message-ID: | 25989.1314734752@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

I wrote:

> I am hoping to do a similar test on another machine with $bignum Xeon

> processors, to see if Intel hardware reacts any differently. But that

> machine is in the Westford office which is currently without power,

> so it will have to wait a few days.

OK, the lights are on again in Westford, so here are some results from

an 8-socket Fujitsu PRIMEQUEST 1800 with 10-core Xeon E7-8870 processors,

hyperthreading enabled for a total of 160 virtual processors.

All test conditions the same as from my Opteron runs yesterday,

except just for the heck of it I ran it up to 160 backends.

Stock git head (of a couple of days ago now):

pgbench -c 1 -j 1 -S -T 300 bench tps = 4401.589257 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8585.789827 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 36315.227334 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 73841.195884 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 155309.526039 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 77477.101725 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 41301.481915 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 30443.815506 (including ...

pgbench -c 160 -j 80 -S -T 300 bench tps = 24600.584202 (including ...

Non-locked test in TAS():

pgbench -c 1 -j 1 -S -T 300 bench tps = 4412.336573 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 8739.900806 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 32957.710818 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 71538.032629 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 153892.469308 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 127786.277182 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 92108.895423 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 75382.131814 (including ...

pgbench -c 160 -j 80 -S -T 300 bench tps = 67277.057981 (including ...

Non-locked test in TAS_SPIN() only:

pgbench -c 1 -j 1 -S -T 300 bench tps = 4006.626861 (including ...

pgbench -c 2 -j 1 -S -T 300 bench tps = 9020.124850 (including ...

pgbench -c 8 -j 4 -S -T 300 bench tps = 36507.582318 (including ...

pgbench -c 16 -j 8 -S -T 300 bench tps = 69668.921550 (including ...

pgbench -c 32 -j 16 -S -T 300 bench tps = 150886.395754 (including ...

pgbench -c 64 -j 32 -S -T 300 bench tps = 216697.745497 (including ...

pgbench -c 96 -j 48 -S -T 300 bench tps = 171013.266643 (including ...

pgbench -c 128 -j 64 -S -T 300 bench tps = 115205.718495 (including ...

pgbench -c 160 -j 80 -S -T 300 bench tps = 92073.704665 (including ...

This suggests that (1) an unlocked test in TAS_SPIN might be a good idea

on x86_64 after all, and (2) this test scenario may not be pushing the

system hard enough to expose limitations of the spinlock implementation.

I am now thinking that the reason we saw clear differences in spinlock

implementations years ago, and now are not seeing them except on insane

hardware, is mainly that we've managed to reduce contention at higher

levels of the system. That doesn't mean spinlocks have become

uninteresting, just that "pgbench -S" isn't the ideal test case for

stressing them. I'm thinking maybe we need a test scenario that

generates sinval traffic, for example, or forces snapshots to be taken

more often. Ideas anyone?

regards, tom lane

| From: | Robert Haas <robertmhaas(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-30 20:23:48 |

| Message-ID: | CA+TgmoZ4LFNYkpYz2tnYQjrM39nzXPRv+SUAOmj0bB-c+U=wYg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

On Tue, Aug 30, 2011 at 4:05 PM, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> wrote:

> This suggests that (1) an unlocked test in TAS_SPIN might be a good idea

> on x86_64 after all, and (2) this test scenario may not be pushing the

> system hard enough to expose limitations of the spinlock implementation.

>

> I am now thinking that the reason we saw clear differences in spinlock

> implementations years ago, and now are not seeing them except on insane

> hardware, is mainly that we've managed to reduce contention at higher

> levels of the system. That doesn't mean spinlocks have become

> uninteresting, just that "pgbench -S" isn't the ideal test case for

> stressing them. I'm thinking maybe we need a test scenario that

> generates sinval traffic, for example, or forces snapshots to be taken

> more often. Ideas anyone?

On current sources, with a workload that fits into shared_buffers,

pgbench -S hammers the spinlock protecting ProcArrayLock extremely

hard. I'm sure it's possible to come up with a test case that

hammers them harder, but using a real workload can expose issues (like

aggregate memory bandwidth) that you might not see otherwise.

I am a bit surprised by your test results, because I also tried x86_64

with an unlocked test, also on pgbench -S, and I am pretty sure I got

a regression. Maybe I'll try rerunning that. It seems possible that

the x86_64 results depend on the particular sub-architecture and/or

whether HT is in use, which would be kind of a nuisance.

Also, did you happen to measure the amount of user time vs. system

time that your test runs used? If this is on Linux, I am surprised

that you didn't get killed by the lseek() contention problem on a

machine with that many cores. I found it to be visible at 32 and

crippling at 64, so I can't even imagine what it would be like at 160.

--

Robert Haas

EnterpriseDB: http://www.enterprisedb.com

The Enterprise PostgreSQL Company

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | pgsql-hackers(at)postgresql(dot)org |

| Subject: | Re: spinlocks on HP-UX |

| Date: | 2011-08-30 20:37:07 |

| Message-ID: | 26609.1314736627@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers |

Robert Haas <robertmhaas(at)gmail(dot)com> writes:

> I am a bit surprised by your test results, because I also tried x86_64

> with an unlocked test, also on pgbench -S, and I am pretty sure I got

> a regression. Maybe I'll try rerunning that. It seems possible that

> the x86_64 results depend on the particular sub-architecture and/or

> whether HT is in use, which would be kind of a nuisance.

Well, if you consider Opteron as a sub-architecture of x86_64, that was

already true the last time we did this. So far there have not been

cases where something really good for one implementation was really bad

for another, but someday we'll probably hit that.

> Also, did you happen to measure the amount of user time vs. system

> time that your test runs used?

Did not think about that. I was considering how to measure the average

context swap rate over each run, so that we could keep an eye out for

the "context swap storm" behavior that's the usual visible-in-top

symptom of these sorts of problems. But it'd have to be automated;

I'm not going to keep my eyes glued to "top" output for several hours.

I'd be happy to re-run these tests with any RHEL-compatible measurement

scaffolding somebody else provides, but if I have to write it, it

probably won't happen very soon.

> If this is on Linux, I am surprised

> that you didn't get killed by the lseek() contention problem on a

> machine with that many cores.

Hm ... now that you mention it, all of these tests have been using

the latest-and-greatest unreleased RHEL kernels. Maybe Red Hat already