Re: [PERFORM] Slow query: bitmap scan troubles

| Lists: | pgsql-hackerspgsql-performance |

|---|

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Slow query: bitmap scan troubles |

| Date: | 2012-12-04 15:06:48 |

| Message-ID: | 092a01cdd230$ff6143c0$fe23cb40$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Hi guys (and girls)

I've been banging my head over this for a few days now so if any of you kind

souls could take a minute to take a look at this I would be eternally

grateful.

I have a pretty straightforward query that is very slow by default, and

about 70 times faster when I set enable_bitmapscan=off. I would like to

convince the planner to use my lovely indexes.

The scenario is this; I have two tables, trade and position_effect. A trade

is a deal we do with somebody to exchange something for something else. It

has a time it was done, and is associated with a particular book for

accounting purposes. A position effect records changes to our position (e.g.

how much we have) of an particular asset. One trade can many position

effects (usually only 1,2 or 3)

For example, I do a trade of USD/GBP and I get two position effects, +1000

GBP and -1200USD

SCHEMA:

-------

The actual schema is a bit more complicated but I will put the important

parts here (if you think it important, the full schema for the two tables is

here: http://pastebin.com/6Y52aDFL):

CREATE TABLE trade

(

id bigserial NOT NULL,

time_executed timestamp with time zone NOT NULL,

id_book integer NOT NULL,

CONSTRAINT cons_trade_primary_key PRIMARY KEY (id),

)

CREATE INDEX idx_trade_id_book

ON trade

USING btree

(id_book, time_executed, id);

CREATE TABLE position_effect

(

id bigserial NOT NULL,

id_trade bigint NOT NULL,

id_asset integer NOT NULL,

quantity double precision NOT NULL,

CONSTRAINT cons_pe_primary_key PRIMARY KEY (id_trade, id_asset),

)

SETUP:

------

These tables are relatively large (~100 million rows in position effect).

The box is a pretty beastly affair with 512Mb of ram and 4x10 2Ghz cores.

The postgres configuration is here:

I am using a 64bit postgresql 9.2.1, hand compiled on a RedHat 6.2 box.

QUERY:

------

What I want to do is sum all of the position effects, for a particular asset

while joined to the trade table to filter for the time it was executed and

the book it was traded into:

SELECT sum(position_effect.quantity)

FROM trade, position_effect

WHERE trade.id = position_effect.id_trade

AND position_effect.id_asset = 1837

AND trade.time_executed >= '2012-10-28 00:00:00'

AND trade.id_book = 41

In this case there are only 11 rows that need to be summed. If I just let

postgres do its thing, that query takes 5000ms (Which when multiplied over

many books and assets gets very slow). I think this is because it is

bitmapping the whole position_effect table which is very large. If I disable

bitmap scans:

set enable_bitmapscan = off;

The query takes 43ms, and properly uses the indexes I have set up.

Slow version with bitmapscan enabled: http://explain.depesz.com/s/6I7

Fast version with bitmapscan disabled: http://explain.depesz.com/s/4MWG

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 15:21:17 |

| Message-ID: | 093301cdd233$057a2b30$106e8190$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Bad form to reply to yourself I know but just check-reading that for the

third time I noticed two mistakes

- The box has 128Gb of ram, not 512Mb

- There is an additional constraint on the position_effect table (though I

don't think it matters for this discussion):

CONSTRAINT cons_pe_trade FOREIGN KEY (id_trade) REFERENCES trade (id)

Sorry to clog your inboxes further!

Regards,

Philip

-----Original Message-----

From: pgsql-performance-owner(at)postgresql(dot)org

[mailto:pgsql-performance-owner(at)postgresql(dot)org] On Behalf Of

postgresql(at)foo(dot)me(dot)uk

Sent: 04 December 2012 15:07

To: pgsql-performance(at)postgresql(dot)org

Subject: [PERFORM] Slow query: bitmap scan troubles

Hi guys (and girls)

I've been banging my head over this for a few days now so if any of you kind

souls could take a minute to take a look at this I would be eternally

grateful.

I have a pretty straightforward query that is very slow by default, and

about 70 times faster when I set enable_bitmapscan=off. I would like to

convince the planner to use my lovely indexes.

The scenario is this; I have two tables, trade and position_effect. A trade

is a deal we do with somebody to exchange something for something else. It

has a time it was done, and is associated with a particular book for

accounting purposes. A position effect records changes to our position (e.g.

how much we have) of an particular asset. One trade can many position

effects (usually only 1,2 or 3)

For example, I do a trade of USD/GBP and I get two position effects, +1000

GBP and -1200USD

SCHEMA:

-------

The actual schema is a bit more complicated but I will put the important

parts here (if you think it important, the full schema for the two tables is

here: http://pastebin.com/6Y52aDFL):

CREATE TABLE trade

(

id bigserial NOT NULL,

time_executed timestamp with time zone NOT NULL,

id_book integer NOT NULL,

CONSTRAINT cons_trade_primary_key PRIMARY KEY (id),

)

CREATE INDEX idx_trade_id_book

ON trade

USING btree

(id_book, time_executed, id);

CREATE TABLE position_effect

(

id bigserial NOT NULL,

id_trade bigint NOT NULL,

id_asset integer NOT NULL,

quantity double precision NOT NULL,

CONSTRAINT cons_pe_primary_key PRIMARY KEY (id_trade, id_asset),

)

SETUP:

------

These tables are relatively large (~100 million rows in position effect).

The box is a pretty beastly affair with 512Mb of ram and 4x10 2Ghz cores.

The postgres configuration is here:

I am using a 64bit postgresql 9.2.1, hand compiled on a RedHat 6.2 box.

QUERY:

------

What I want to do is sum all of the position effects, for a particular asset

while joined to the trade table to filter for the time it was executed and

the book it was traded into:

SELECT sum(position_effect.quantity)

FROM trade, position_effect

WHERE trade.id = position_effect.id_trade

AND position_effect.id_asset = 1837

AND trade.time_executed >= '2012-10-28 00:00:00'

AND trade.id_book = 41

In this case there are only 11 rows that need to be summed. If I just let

postgres do its thing, that query takes 5000ms (Which when multiplied over

many books and assets gets very slow). I think this is because it is

bitmapping the whole position_effect table which is very large. If I disable

bitmap scans:

set enable_bitmapscan = off;

The query takes 43ms, and properly uses the indexes I have set up.

Slow version with bitmapscan enabled: http://explain.depesz.com/s/6I7 Fast

version with bitmapscan disabled: http://explain.depesz.com/s/4MWG

--

Sent via pgsql-performance mailing list (pgsql-performance(at)postgresql(dot)org)

To make changes to your subscription:

http://www.postgresql.org/mailpref/pgsql-performance

| From: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 15:27:57 |

| Message-ID: | CAGTBQpaKOfZs+qy+S7CCPcTg7PYOrwgcQAXgWV88cQTn-BFstg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 12:06 PM, <postgresql(at)foo(dot)me(dot)uk> wrote:

> Slow version with bitmapscan enabled: http://explain.depesz.com/s/6I7

> Fast version with bitmapscan disabled: http://explain.depesz.com/s/4MWG

If you check the "fast" plan, it has a higher cost compared against

the "slow" plan.

The difference between cost estimation and actual cost of your

queries, under relatively precise row estimates, seems to suggest your

e_c_s or r_p_c aren't a reflection of your hardware's performance.

First, make sure caching isn't interfering with your results. Run each

query several times.

Then, if the difference persists, you may have to tweak

effective_cache_size first, maybe random_page_cost too, to better

match your I/O subsystem's actual performance

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 17:22:29 |

| Message-ID: | CAMkU=1wEc1cCeUdR1oZ3Q2fe2pGj6tvrwRcY2obbfEk8LcNypQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 7:27 AM, Claudio Freire <klaussfreire(at)gmail(dot)com> wrote:

> On Tue, Dec 4, 2012 at 12:06 PM, <postgresql(at)foo(dot)me(dot)uk> wrote:

>> Slow version with bitmapscan enabled: http://explain.depesz.com/s/6I7

>> Fast version with bitmapscan disabled: http://explain.depesz.com/s/4MWG

>

> If you check the "fast" plan, it has a higher cost compared against

> the "slow" plan.

>

> The difference between cost estimation and actual cost of your

> queries, under relatively precise row estimates, seems to suggest your

> e_c_s or r_p_c aren't a reflection of your hardware's performance.

But the row estimates are not precise at the top of the join/filter.

It thinks there will 2120 rows, but there are only 11.

So it seems like there is a negative correlation between the two

tables which is not recognized.

> First, make sure caching isn't interfering with your results. Run each

> query several times.

If that is not how the production system works (running the same query

over and over) then you want to model the cold cache, not the hot one.

But in any case, the posted explains indicates that all buffers were

cached.

Cheers,

Jeff

| From: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 17:25:56 |

| Message-ID: | CAGTBQpbqzBf5545-qxdLQHbLyBBOvxn3hEtjQmqEp+UJPKsSrA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 2:22 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

> On Tue, Dec 4, 2012 at 7:27 AM, Claudio Freire <klaussfreire(at)gmail(dot)com> wrote:

>> On Tue, Dec 4, 2012 at 12:06 PM, <postgresql(at)foo(dot)me(dot)uk> wrote:

>>> Slow version with bitmapscan enabled: http://explain.depesz.com/s/6I7

>>> Fast version with bitmapscan disabled: http://explain.depesz.com/s/4MWG

>>

>> If you check the "fast" plan, it has a higher cost compared against

>> the "slow" plan.

>>

>> The difference between cost estimation and actual cost of your

>> queries, under relatively precise row estimates, seems to suggest your

>> e_c_s or r_p_c aren't a reflection of your hardware's performance.

>

> But the row estimates are not precise at the top of the join/filter.

> It thinks there will 2120 rows, but there are only 11.

Ah... I didn't spot that one...

| From: | "Philip Scott" <pscott(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 17:35:32 |

| Message-ID: | 096a01cdd245$c4a49830$4dedc890$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

>> But the row estimates are not precise at the top of the join/filter.

>> It thinks there will 2120 rows, but there are only 11.

>Ah... I didn't spot that one...

Yes, you are right there - this is probably a slightly atypical query of

this sort actually, 2012 is a pretty good guess.

On Claudio's suggestion I have found lots more things to read up on and am

eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

effective_work_mem setting is going from 6Gb->88Gb which I think will make

quite a difference.

I still can't quite wrap around my head why accessing an index is expected

to use more disk access than doing a bitmap scan of the table itself, but I

guess it does make a bit of sense if postgres assumes the table is more

likely to be cached.

It's all quite, quite fascinating :)

I'll let you know how it goes.

- Phil

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 17:47:29 |

| Message-ID: | 096f01cdd247$722264a0$56672de0$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

>> But the row estimates are not precise at the top of the join/filter.

>> It thinks there will 2120 rows, but there are only 11.

>Ah... I didn't spot that one...

Yes, you are right there - this is probably a slightly atypical query of

this sort actually, 2012 is a pretty good guess.

On Claudio's suggestion I have found lots more things to read up on and am

eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

effective_work_mem setting is going from 6Gb->88Gb which I think will make

quite a difference.

I still can't quite wrap around my head why accessing an index is expected

to use more disk access than doing a bitmap scan of the table itself, but I

guess it does make a bit of sense if postgres assumes the table is more

likely to be cached.

It's all quite, quite fascinating :)

I'll let you know how it goes.

- Phil

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:03:29 |

| Message-ID: | 097601cdd249$ae338530$0a9a8f90$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

> But the row estimates are not precise at the top of the join/filter.

> It thinks there will 2120 rows, but there are only 11.

> So it seems like there is a negative correlation between the two tables

which is not recognized.

Yes, you are right there. I am only just beginning to understand how to

parse these explain reports.. As I mentioned above, I probably picked a bad

example to run that query on 11 is an unusually low number of results to get

back, a few thousand would be more normal.

Though that doesn't account for the 70x difference between the speed of the

two queries in actuality given a pretty similar expected speed (does it?).

It does go some way to explaining why a bad choice of plan was made.

Is there some nice bit of literature somewhere that explains what sort of

costs are associated with the different types of lookup? I have found bits

and bobs online but I still don't have a really clear idea in my head what

the difference is between a bitmap index scan and index only scan is, though

I can sort of guess I don't see why one would be considered more likely to

use the disk than the other.

On the 'slow' query (with the better predicted score)

>> First, make sure caching isn't interfering with your results. Run each

>> query several times.

> If that is not how the production system works (running the same query

over and over) then you want to model the cold cache, not the hot one.

> But in any case, the posted explains indicates that all buffers were

cached.

We are in the rather pleasant situation here in that we are willing to spend

money on the box (up to a point, but quite a large point) to get it up to

the spec so that it should hardly ever need to touch the disk, the trick is

figuring out how to let our favourite database server know that.

I've just discovered pgtune and am having some fun with that too.

Cheers,

Phil

| From: | "Philip Scott" <pscott(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'Claudio Freire'" <klaussfreire(at)gmail(dot)com>, <postgresql(at)foo(dot)me(dot)uk> |

| Cc: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:31:05 |

| Message-ID: | 098101cdd24d$892524c0$9b6f6e40$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

> The difference between cost estimation and actual cost of your queries,

under relatively precise row estimates, seems to suggest your e_c_s or r_p_c

aren't a reflection of your hardware's performance.

Wow, so tweaking these has fixed it and then some. It now picks a slightly

different plan than the 'fast' one previously:

New super fast version with e_c_s 6GB->88Gb and r_p_c 2-> 1 (s_p_c 1->0.5):

http://explain.depesz.com/s/ECk

For reference:

> Slow version with bitmapscan enabled: http://explain.depesz.com/s/6I7

> Fast version with bitmapscan disabled: http://explain.depesz.com/s/4MWG

| From: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:31:50 |

| Message-ID: | CAGTBQpaueZhezPjfM8Vaw1UExeYJh6jR6+6S0Qk_k59Bb5m=MQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 3:03 PM, <postgresql(at)foo(dot)me(dot)uk> wrote:

>

> Though that doesn't account for the 70x difference between the speed of the

> two queries in actuality given a pretty similar expected speed (does it?).

> It does go some way to explaining why a bad choice of plan was made.

I still don't think it does. I still think the problem is the GUC settings.

The slow plan joins in a way that processes all 3M rows in both sides

of the join, and pg knows it.

The fast plan only processes 5k of them. And pg knows it. Why is it

choosing to process 3M rows?

If there's negative correlation, it only means less rows will be

produced, but the nested loop and and the right-hand index scan still

happens.

| From: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

|---|---|

| To: | Philip Scott <pscott(at)foo(dot)me(dot)uk> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:32:57 |

| Message-ID: | CAGTBQpb2FZfbhLD_vwUJfNja-KHHypJ6zXrOPjsUwn0+prvtcA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 3:31 PM, Philip Scott <pscott(at)foo(dot)me(dot)uk> wrote:

> r_p_c 2-> 1 (s_p_c 1->0.5):

Is this really necessary?

(looks like a no-op, unless your CPU is slow)

| From: | Vitalii Tymchyshyn <tivv00(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:50:41 |

| Message-ID: | CABWW-d2gK6=AOBWxJij8GYS6CQC4+p7xWDsr4FSs2-BaXDQMsA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Well, you don't need to put anything down. Most settings that change

planner decisions can be tuned on per-quey basis by issuing set commands in

given session. This should not affect other queries more than it is needed

to run query in the way planner chooses.

Best regards, Vitalii Tymchyshyn

2012/12/4 <postgresql(at)foo(dot)me(dot)uk>

>

> >> But the row estimates are not precise at the top of the join/filter.

> >> It thinks there will 2120 rows, but there are only 11.

>

> >Ah... I didn't spot that one...

>

> Yes, you are right there - this is probably a slightly atypical query of

> this sort actually, 2012 is a pretty good guess.

>

> On Claudio's suggestion I have found lots more things to read up on and am

> eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

> effective_work_mem setting is going from 6Gb->88Gb which I think will make

> quite a difference.

>

> I still can't quite wrap around my head why accessing an index is expected

> to use more disk access than doing a bitmap scan of the table itself, but I

> guess it does make a bit of sense if postgres assumes the table is more

> likely to be cached.

>

> It's all quite, quite fascinating :)

>

> I'll let you know how it goes.

>

> - Phil

>

>

>

> --

> Sent via pgsql-performance mailing list (pgsql-performance(at)postgresql(dot)org)

> To make changes to your subscription:

> http://www.postgresql.org/mailpref/pgsql-performance

>

--

Best regards,

Vitalii Tymchyshyn

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:54:29 |

| Message-ID: | 098601cdd250$ce2f85d0$6a8e9170$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Ah, okay - my reasoning was there's a big fancy-pants raid array behind it

that makes disk operations faster relative to CPU ones.

I'll test it and see if it actually makes any difference.

-----Original Message-----

From: Claudio Freire [mailto:klaussfreire(at)gmail(dot)com]

Sent: 04 December 2012 18:33

To: Philip Scott

Cc: postgresql(at)foo(dot)me(dot)uk; postgres performance list

Subject: Re: [PERFORM] Slow query: bitmap scan troubles

On Tue, Dec 4, 2012 at 3:31 PM, Philip Scott <pscott(at)foo(dot)me(dot)uk> wrote:

> r_p_c 2-> 1 (s_p_c 1->0.5):

Is this really necessary?

(looks like a no-op, unless your CPU is slow)

| From: | "Philip Scott" <pscott(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'Vitalii Tymchyshyn'" <tivv00(at)gmail(dot)com>, <postgresql(at)foo(dot)me(dot)uk> |

| Cc: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:55:17 |

| Message-ID: | 098801cdd250$e70232b0$b5069810$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Ah okay, thanks. I knew I could set various things but not

effective_work_mem (I tried reloading the edited config file but it didn't

seem to pick it up)

From: Vitalii Tymchyshyn [mailto:tivv00(at)gmail(dot)com]

Sent: 04 December 2012 18:51

To: postgresql(at)foo(dot)me(dot)uk

Cc: postgres performance list

Subject: Re: [PERFORM] Slow query: bitmap scan troubles

Well, you don't need to put anything down. Most settings that change planner

decisions can be tuned on per-quey basis by issuing set commands in given

session. This should not affect other queries more than it is needed to run

query in the way planner chooses.

Best regards, Vitalii Tymchyshyn

2012/12/4 <postgresql(at)foo(dot)me(dot)uk>

>> But the row estimates are not precise at the top of the join/filter.

>> It thinks there will 2120 rows, but there are only 11.

>Ah... I didn't spot that one...

Yes, you are right there - this is probably a slightly atypical query of

this sort actually, 2012 is a pretty good guess.

On Claudio's suggestion I have found lots more things to read up on and am

eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

effective_work_mem setting is going from 6Gb->88Gb which I think will make

quite a difference.

I still can't quite wrap around my head why accessing an index is expected

to use more disk access than doing a bitmap scan of the table itself, but I

guess it does make a bit of sense if postgres assumes the table is more

likely to be cached.

It's all quite, quite fascinating :)

I'll let you know how it goes.

- Phil

--

Sent via pgsql-performance mailing list (pgsql-performance(at)postgresql(dot)org)

To make changes to your subscription:

http://www.postgresql.org/mailpref/pgsql-performance

--

Best regards,

Vitalii Tymchyshyn

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 18:56:04 |

| Message-ID: | 099801cdd251$02c7b9c0$08572d40$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Ah okay, thanks. I knew I could set various things but not

effective_work_mem (I tried reloading the edited config file but it didn't

seem to pick it up)

From: Vitalii Tymchyshyn [mailto:tivv00(at)gmail(dot)com]

Sent: 04 December 2012 18:51

To: postgresql(at)foo(dot)me(dot)uk

Cc: postgres performance list

Subject: Re: [PERFORM] Slow query: bitmap scan troubles

Well, you don't need to put anything down. Most settings that change planner

decisions can be tuned on per-quey basis by issuing set commands in given

session. This should not affect other queries more than it is needed to run

query in the way planner chooses.

Best regards, Vitalii Tymchyshyn

2012/12/4 <postgresql(at)foo(dot)me(dot)uk>

>> But the row estimates are not precise at the top of the join/filter.

>> It thinks there will 2120 rows, but there are only 11.

>Ah... I didn't spot that one...

Yes, you are right there - this is probably a slightly atypical query of

this sort actually, 2012 is a pretty good guess.

On Claudio's suggestion I have found lots more things to read up on and am

eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

effective_work_mem setting is going from 6Gb->88Gb which I think will make

quite a difference.

I still can't quite wrap around my head why accessing an index is expected

to use more disk access than doing a bitmap scan of the table itself, but I

guess it does make a bit of sense if postgres assumes the table is more

likely to be cached.

It's all quite, quite fascinating :)

I'll let you know how it goes.

- Phil

--

Sent via pgsql-performance mailing list (pgsql-performance(at)postgresql(dot)org)

To make changes to your subscription:

http://www.postgresql.org/mailpref/pgsql-performance

--

Best regards,

Vitalii Tymchyshyn

| From: | Sergey Konoplev <gray(dot)ru(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 20:11:53 |

| Message-ID: | CAL_0b1vkyXYYpCxbFvu-4Piem10Y-tPz50MRO-mp3QMbkF1mEQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 9:47 AM, <postgresql(at)foo(dot)me(dot)uk> wrote:

> eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

> effective_work_mem setting is going from 6Gb->88Gb which I think will make

> quite a difference.

I also wonder if increasing (say x10) of default_statistics_target or

just doing ALTER TABLE SET STATISTICS for particular tables will help.

It will make planned to produce more precise estimations. Do not

forget ANALYZE afer changing it.

>

> I still can't quite wrap around my head why accessing an index is expected

> to use more disk access than doing a bitmap scan of the table itself, but I

> guess it does make a bit of sense if postgres assumes the table is more

> likely to be cached.

>

> It's all quite, quite fascinating :)

>

> I'll let you know how it goes.

>

> - Phil

>

>

>

> --

> Sent via pgsql-performance mailing list (pgsql-performance(at)postgresql(dot)org)

> To make changes to your subscription:

> http://www.postgresql.org/mailpref/pgsql-performance

--

Sergey Konoplev

Database and Software Architect

http://www.linkedin.com/in/grayhemp

Phones:

USA +1 415 867 9984

Russia, Moscow +7 901 903 0499

Russia, Krasnodar +7 988 888 1979

Skype: gray-hemp

Jabber: gray(dot)ru(at)gmail(dot)com

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 22:34:42 |

| Message-ID: | CAMkU=1zCDqsnfQ-cE4jc_A8=WhJYkDGwMh1-V8vB4a8fQ60ysA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 9:47 AM, <postgresql(at)foo(dot)me(dot)uk> wrote:

>

>>> But the row estimates are not precise at the top of the join/filter.

>>> It thinks there will 2120 rows, but there are only 11.

>

>>Ah... I didn't spot that one...

>

> Yes, you are right there - this is probably a slightly atypical query of

> this sort actually, 2012 is a pretty good guess.

What do the timings look like on a more realistic example?

> On Claudio's suggestion I have found lots more things to read up on and am

> eagerly awaiting 6pm when I can bring the DB down and start tweaking. The

> effective_work_mem setting is going from 6Gb->88Gb which I think will make

> quite a difference.

You can change effective_cache_size just in your own session, or do it

globally with a "reload" or SIGHUP, no need to bring down the server.

However, I don't think it will make much difference. Even though it

thinks it is hitting the index 14,085 times, that is still small

compared to the overall size of the table.

> I still can't quite wrap around my head why accessing an index is expected

> to use more disk access than doing a bitmap scan of the table itself,

It is only doing an bitmap scan of those parts of the table which

contain relevant data, and it is doing them in physical order, so it

thinks that much of the IO which it thinks it is going to do is

largely sequential.

> but I

> guess it does make a bit of sense if postgres assumes the table is more

> likely to be cached.

Unfortunately, postgres's planner doesn't know anything about that.

From your "explain" I can see in hindsight that everything you needed

was cached, but that is not information that the planner can use

(currently). And I don't know if *everything* is cached, or if just

those particular blocks are because you already ran the same query

with the same parameters recently.

Also, your work_mem is pretty low given the amount of RAM you have.

work_mem = 1MB

I don't think the current planner attempts to take account of the fact

that a bitmap scan which overflows work_mem and so becomes "lossy" is

quite a performance set-back. Nor does it look like explain analyze

informs you of this happening. But maybe I'm just looking in the

wrong places.

Cheers,

Jeff

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-04 23:42:21 |

| Message-ID: | CAMkU=1wmxKifgUufbSffQ+VH3kFNczK65MxXetwVFJZd3zvkeA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 10:03 AM, <postgresql(at)foo(dot)me(dot)uk> wrote:

>

> Though that doesn't account for the 70x difference between the speed of the

> two queries in actuality given a pretty similar expected speed (does it?).

It kind of does. The expected speed is predicated on the number of

rows being 200 fold higher. If the number of rows actually was that

much higher, the two speeds might be closer together. That is why it

would be interesting to see a more typical case where the actual

number of rows is closer to the 2000 estimate.

But I am curious about how the cost estimate for the primary key look

up is arrived at:

Index Scan using cons_pe_primary_key on position_effect

(cost=0.00..42.96 rows=1 width=16)

There should be a random page for the index leaf page, and a random

page for the heap page. Since you set random_page_cost to 2, that

comes up to 4. Then there would be some almost negligible CPU costs.

Where the heck is the extra 38 cost coming from?

> It does go some way to explaining why a bad choice of plan was made.

>

> Is there some nice bit of literature somewhere that explains what sort of

> costs are associated with the different types of lookup?

I've heard good things about Greg Smith's book, but I don't know if it

covers this particular thing.

Otherwise, I don't know of a good single place which is a tutorial

rather than a reference (or the code itself)

>>> First, make sure caching isn't interfering with your results. Run each

>>> query several times.

>> If that is not how the production system works (running the same query

> over and over) then you want to model the cold cache, not the hot one.

>> But in any case, the posted explains indicates that all buffers were

> cached.

>

> We are in the rather pleasant situation here in that we are willing to spend

> money on the box (up to a point, but quite a large point) to get it up to

> the spec so that it should hardly ever need to touch the disk, the trick is

> figuring out how to let our favourite database server know that.

Well, that part is fairly easy. Make random_page_cost and

seq_page_cost much smaller than their defaults. Like, 0.04 and 0.03,

for example.

I think the *_page_cost should strictly an estimate of actually doing

IO, with a separate parameter to reflect likelihood of needing to do

the IO, like *_page_cachedness. But that isn't the way it is done

currently.

Cheers,

Jeff

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | postgresql(at)foo(dot)me(dot)uk |

| Cc: | postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-05 17:39:35 |

| Message-ID: | CAMkU=1w40aQt0twukCsvL2hPHUPujLqJBe8v90phuqBJ5wgw1Q@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 4, 2012 at 3:42 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

(Regarding http://explain.depesz.com/s/4MWG, wrote)

>

> But I am curious about how the cost estimate for the primary key look

> up is arrived at:

>

> Index Scan using cons_pe_primary_key on position_effect

> (cost=0.00..42.96 rows=1 width=16)

>

> There should be a random page for the index leaf page, and a random

> page for the heap page. Since you set random_page_cost to 2, that

> comes up to 4. Then there would be some almost negligible CPU costs.

> Where the heck is the extra 38 cost coming from?

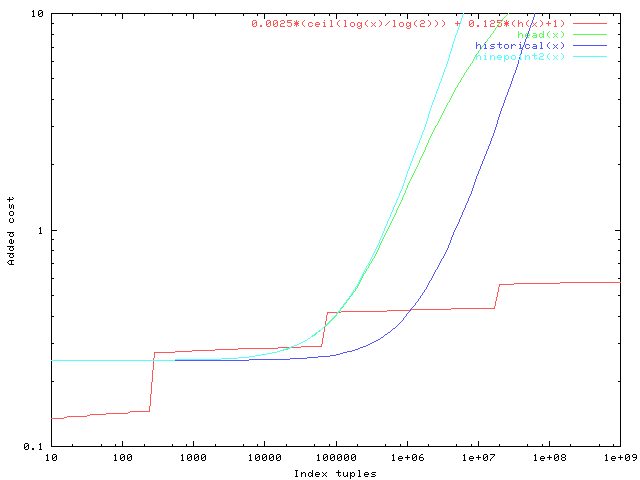

I now see where the cost is coming from. In commit 21a39de5809 (first

appearing in 9.2) the "fudge factor" cost estimate for large indexes

was increased by about 10 fold, which really hits this index hard.

This was fixed in commit bf01e34b556 "Tweak genericcostestimate's

fudge factor for index size", by changing it to use the log of the

index size. But that commit probably won't be shipped until 9.3.

I'm not sure that this change would fix your problem, because it might

also change the costs of the alternative plans in a way that

neutralizes things. But I suspect it would fix it. Of course, a

correct estimate of the join size would also fix it--you have kind of

a perfect storm here.

Cheers,

Jeff

| From: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-05 17:43:49 |

| Message-ID: | CAGTBQpZa4Ru68pu6GiewBZUNk1hw8OObXaks6=Lt+-0qBdhjsg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Wed, Dec 5, 2012 at 2:39 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

> I'm not sure that this change would fix your problem, because it might

> also change the costs of the alternative plans in a way that

> neutralizes things. But I suspect it would fix it. Of course, a

> correct estimate of the join size would also fix it--you have kind of

> a perfect storm here.

As far as I can see on the explain, the misestimation is 3x~4x not 200x.

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-05 18:05:10 |

| Message-ID: | 9022.1354730710@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Jeff Janes <jeff(dot)janes(at)gmail(dot)com> writes:

> I now see where the cost is coming from. In commit 21a39de5809 (first

> appearing in 9.2) the "fudge factor" cost estimate for large indexes

> was increased by about 10 fold, which really hits this index hard.

> This was fixed in commit bf01e34b556 "Tweak genericcostestimate's

> fudge factor for index size", by changing it to use the log of the

> index size. But that commit probably won't be shipped until 9.3.

Hm. To tell you the truth, in October I'd completely forgotten about

the January patch, and was thinking that the 1/10000 cost had a lot

of history behind it. But if we never shipped it before 9.2 then of

course that idea is false. Perhaps we should backpatch the log curve

into 9.2 --- that would reduce the amount of differential between what

9.2 does and what previous branches do for large indexes.

It would definitely be interesting to know if applying bf01e34b556

helps the OP's example.

regards, tom lane

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-06 12:52:07 |

| Message-ID: | 0b8901cdd3b0$83a55dd0$8af01970$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

That is very interesting indeed, these indexes are quite large!

I will apply that patch and try it out this evening and let you know.

Thank you very much everyone for your time, the support has been amazing.

PS: Just looked at this thread on the archives page and realised I don't

have my name in FROM: field, which is a misconfiguration of my email client,

but figured I would leave it to prevent confusion, sorry about that.

All the best,

Philip Scott

-----Original Message-----

From: Tom Lane [mailto:tgl(at)sss(dot)pgh(dot)pa(dot)us]

Sent: 05 December 2012 18:05

To: Jeff Janes

Cc: postgresql(at)foo(dot)me(dot)uk; postgres performance list

Subject: Re: [PERFORM] Slow query: bitmap scan troubles

Jeff Janes <jeff(dot)janes(at)gmail(dot)com> writes:

> I now see where the cost is coming from. In commit 21a39de5809 (first

> appearing in 9.2) the "fudge factor" cost estimate for large indexes

> was increased by about 10 fold, which really hits this index hard.

> This was fixed in commit bf01e34b556 "Tweak genericcostestimate's

> fudge factor for index size", by changing it to use the log of the

> index size. But that commit probably won't be shipped until 9.3.

Hm. To tell you the truth, in October I'd completely forgotten about the

January patch, and was thinking that the 1/10000 cost had a lot of history

behind it. But if we never shipped it before 9.2 then of course that idea

is false. Perhaps we should backpatch the log curve into 9.2 --- that would

reduce the amount of differential between what

9.2 does and what previous branches do for large indexes.

It would definitely be interesting to know if applying bf01e34b556 helps the

OP's example.

regards, tom lane

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-06 12:56:26 |

| Message-ID: | 0b8b01cdd3b1$1a55b8b0$4f012a10$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

> I also wonder if increasing (say x10) of default_statistics_target or just

doing ALTER TABLE SET STATISTICS for particular tables will help.

> It will make planned to produce more precise estimations. Do not forget

ANALYZE afer changing it.

Thanks Sergey, I will try this too.

I think the bother here is that this statistics are pretty good (we do

analyse regularly and default_statistics_target is already 1000), but once I

start filtering the two tables the correlations alter quite a bit. I don't

think there is that much that can be done about that :)

- Phil

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-06 14:10:29 |

| Message-ID: | 0b9001cdd3bb$760226d0$62067470$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Hi Jeff

> It kind of does. The expected speed is predicated on the number of rows

being 200 fold higher. If the number of rows actually was that much higher,

the two speeds might be closer together. That is why it would be

interesting to see a more typical case where the actual number of rows is

closer to the 2000 estimate.

Ah, I see of course. Makes a lot of sense when you think about it. This has

been quite an enlightening adventure into the guts of postgres for me :)

> But I am curious about how the cost estimate for the primary key look up

is arrived at:

( Delt with in your next reply, thanks for figuring that out! I will

certainly try the patch)

> I've heard good things about Greg Smith's book, but I don't know if it

covers this particular thing.

A copy is on its way, thank you.

>> We are in the rather pleasant situation here in that we are willing to

>> spend money on the box (up to a point, but quite a large point) to get

>> it up to the spec so that it should hardly ever need to touch the

>> disk, the trick is figuring out how to let our favourite database server

know that.

> Well, that part is fairly easy. Make random_page_cost and seq_page_cost

much smaller than their defaults. Like, 0.04 and 0.03, for example.

Yes, I have been playing a lot with that it makes a lot of difference. When

I tweak them down I end up getting a lot of nested loops instead of hash or

merge joins and they are much faster (presumably we might have gotten a

nested loop out of the planner if it could correctly estimate the low number

of rows returned).

I've got plenty of ammunition now to dig deeper, you guys have been

invaluable.

Cheers,

Phil

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-06 17:27:48 |

| Message-ID: | CAMkU=1xXdiB+chgqwzWoN+7qqag9DeMTtDA1HWQYvuot6yDyJg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Wed, Dec 5, 2012 at 9:43 AM, Claudio Freire <klaussfreire(at)gmail(dot)com> wrote:

> On Wed, Dec 5, 2012 at 2:39 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

>> I'm not sure that this change would fix your problem, because it might

>> also change the costs of the alternative plans in a way that

>> neutralizes things. But I suspect it would fix it. Of course, a

>> correct estimate of the join size would also fix it--you have kind of

>> a perfect storm here.

>

> As far as I can see on the explain, the misestimation is 3x~4x not 200x.

It is 3x (14085 vs 4588) for selectivity on one of the tables, "Index

Only Scan using idx_trade_id_book on trade".

But for the join of both tables it is estimate 2120 vs actual 11.

Cheers,

Jeff

| From: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-06 20:05:09 |

| Message-ID: | CAGTBQpYmSNjV5QsnuQdYqYjJnVGrphUVeFm9nxPOfi5oG5-9UQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Thu, Dec 6, 2012 at 2:27 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

> On Wed, Dec 5, 2012 at 9:43 AM, Claudio Freire <klaussfreire(at)gmail(dot)com> wrote:

>> On Wed, Dec 5, 2012 at 2:39 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

>>> I'm not sure that this change would fix your problem, because it might

>>> also change the costs of the alternative plans in a way that

>>> neutralizes things. But I suspect it would fix it. Of course, a

>>> correct estimate of the join size would also fix it--you have kind of

>>> a perfect storm here.

>>

>> As far as I can see on the explain, the misestimation is 3x~4x not 200x.

>

> It is 3x (14085 vs 4588) for selectivity on one of the tables, "Index

> Only Scan using idx_trade_id_book on trade".

>

> But for the join of both tables it is estimate 2120 vs actual 11.

But the final result set isn't further worked on (except for the

aggregate), which means it doesn't affect the cost by much.

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Claudio Freire <klaussfreire(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-06 21:09:54 |

| Message-ID: | CAMkU=1wpMU8JvDBkxZS0zb5=xqh=_xHRcwvY--YT=6uyOOfZ0g@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Thu, Dec 6, 2012 at 12:05 PM, Claudio Freire <klaussfreire(at)gmail(dot)com> wrote:

> On Thu, Dec 6, 2012 at 2:27 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

>> On Wed, Dec 5, 2012 at 9:43 AM, Claudio Freire <klaussfreire(at)gmail(dot)com> wrote:

>>> As far as I can see on the explain, the misestimation is 3x~4x not 200x.

>>

>> It is 3x (14085 vs 4588) for selectivity on one of the tables, "Index

>> Only Scan using idx_trade_id_book on trade".

>>

>> But for the join of both tables it is estimate 2120 vs actual 11.

>

> But the final result set isn't further worked on (except for the

> aggregate), which means it doesn't affect the cost by much.

Good point. Both the NL and hash join do about the same amount of

work probing for success whether the success is actually there or not.

So scratch what I said about the correlation being important, in this

case it is not.

The 3x error is enough to push it over the edge, but the fudge factor

is what gets it so close to that edge in the first place.

And I'm now pretty sure the fudge factor change would fix this. The

truly-fast NL plan is getting overcharged by the fudge-factor once per

each 14,085 of the loopings, while the truly-slow bitmap scan is

overcharged only once for the entire scan. So the change is by no

means neutralized between the two plans.

I don't know if my other theory that the bitmap scan is overflowing

work_mem (but not costed for doing so) is also contributing.

Cheers,

Jeff

| From: | Guillaume Lelarge <guillaume(at)lelarge(dot)info> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | postgresql(at)foo(dot)me(dot)uk, postgres performance list <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-08 15:15:42 |

| Message-ID: | 1354979742.1963.11.camel@localhost.localdomain |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, 2012-12-04 at 15:42 -0800, Jeff Janes wrote:

> On Tue, Dec 4, 2012 at 10:03 AM, <postgresql(at)foo(dot)me(dot)uk> wrote:

> >[...]

> >

> > Is there some nice bit of literature somewhere that explains what sort of

> > costs are associated with the different types of lookup?

>

> I've heard good things about Greg Smith's book, but I don't know if it

> covers this particular thing.

>

> Otherwise, I don't know of a good single place which is a tutorial

> rather than a reference (or the code itself)

>

Greg's book is awesome. It really gives a lot of

informations/tips/whatever on performances. I mostly remember all the

informations about hardware, OS, PostgreSQL configuration, and such. Not

much on the EXPLAIN part.

On the EXPLAIN part, you may have better luck with some slides available

here and there.

Robert Haas gave a talk on the query planner at pgCon 2010. The audio

feed of Robert Haas talk is available with this file:

http://www.pgcon.org/2010/audio/15%20The%20PostgreSQL%20Query%

20Planner.mp3

You can also find the slides on

https://sites.google.com/site/robertmhaas/presentations

You can also read the "Explaining the Postgres Query Optimizer" talk

written by Bruce Momjian. It's available there :

http://momjian.us/main/presentations/internals.html

And finally, you can grab my slides over here:

http://www.dalibo.org/_media/understanding_explain.pdf. You have more

than slides. I tried to put a lot of informations in there.

--

Guillaume

http://blog.guillaume.lelarge.info

http://www.dalibo.com

| From: | <postgresql(at)foo(dot)me(dot)uk> |

|---|---|

| To: | "'postgres performance list'" <pgsql-performance(at)postgresql(dot)org> |

| Subject: | Re: Slow query: bitmap scan troubles |

| Date: | 2012-12-10 09:52:42 |

| Message-ID: | 0dfe01cdd6bc$1986e900$4c94bb00$@foo.me.uk |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

> Greg's book is awesome. It really gives a lot of informations/tips/whatever on performances. I mostly remember all the informations about hardware, OS, PostgreSQL configuration, and such. Not much on the EXPLAIN part.

Arrived this morning :)

> http://www.pgcon.org/2010/audio/15%20The%20PostgreSQL%20Query%

> https://sites.google.com/site/robertmhaas/presentations

> http://momjian.us/main/presentations/internals.html

> http://www.dalibo.org/_media/understanding_explain.pdf

Well that is my evenings occupied for the next week. Thank you kindly.

- Phil

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PERFORM] Slow query: bitmap scan troubles |

| Date: | 2012-12-18 06:00:16 |

| Message-ID: | CAMkU=1ykyMgXccv2dAWKOXdDx5U0Qpc28aW6k659hvE7VZEMXQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

[moved to hackers]

On Wednesday, December 5, 2012, Tom Lane wrote:

> Jeff Janes <jeff(dot)janes(at)gmail(dot)com> writes:

> > I now see where the cost is coming from. In commit 21a39de5809 (first

> > appearing in 9.2) the "fudge factor" cost estimate for large indexes

> > was increased by about 10 fold, which really hits this index hard.

>

> > This was fixed in commit bf01e34b556 "Tweak genericcostestimate's

> > fudge factor for index size", by changing it to use the log of the

> > index size. But that commit probably won't be shipped until 9.3.

>

> Hm. To tell you the truth, in October I'd completely forgotten about

> the January patch, and was thinking that the 1/10000 cost had a lot

> of history behind it. But if we never shipped it before 9.2 then of

> course that idea is false. Perhaps we should backpatch the log curve

> into 9.2 --- that would reduce the amount of differential between what

> 9.2 does and what previous branches do for large indexes.

>

I think we should backpatch it for 9.2.3. I've seen another email which is

probably due to the same issue (nested loop vs hash join). And some

monitoring of a database I am responsible for suggests it might be heading

in that direction as well as the size grows.

But I am wondering if it should be present at all in 9.3. When it was

introduced, the argument seemed to be that smaller indexes might be easier

to keep in cache. And surely that is so. But if a larger index that

covers the same type of queries exists when a smaller one also exists, we

can assume the larger one also exists for a reason. While it may be easier

to keep a smaller index in cache, it is not easier to keep both a larger

and a smaller one in cache as the same time. So it seems to me that this

reasoning is a wash. (Countering this argument is that a partial index is

more esoteric, and so if one exists it is more likely to have been

well-thought out)

The argument for increasing the penalty by a factor of 10 was that the

smaller one could be "swamped by noise such as page-boundary-roundoff

behavior". I don't really know what that means, but it seems to me that if

it is so easily swamped by noise, then it probably isn't so important in

the first place which one it chooses. Whereas, I think that even the log

based penalty has the risk of being too much on large indexes. (For one

thing, it implicitly assumes the fan-out ratio at each level of btree is e,

when it will usually be much larger than e.)

One thing which depends on the index size which, as far as I can tell, is

not currently being counted is the cost of comparing the tuples all the way

down the index. This would be proportional to log2(indextuples) *

cpu_index_tuple_cost, or maybe log2(indextuples) *

(cpu_index_tuple_cost+cpu_operator_cost), or something like that. This

cost would depend on the number index tuples, not baserel tuples, and so

would penalize large indexes. It would be much smaller than the current

log(pages/10000) penalty, but it would be more principle-based rather than

heuristic-based.

The log(pages/10000) change is more suitable for back-patching because it

is more conservative, being asymptotic with the previous behavior at the

low end. But I don't think that the case for that previous behavior was

ever all that strong.

If we really want a heuristic to give a bonus to partial indexes, maybe we

should explicitly give them a bonus, rather than penalizing ordinary

indexes.

maybe something like bonus = 0.05 * (reltuples-indextuples)/reltuples

Cheers,

Jeff

>

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PERFORM] Slow query: bitmap scan troubles |

| Date: | 2012-12-19 01:05:05 |

| Message-ID: | CAMkU=1xKAGpT9-t3NkQO1=MRBaMudjZA2OKowSYJxACb7nvorQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

[moved to hackers]

On Wednesday, December 5, 2012, Tom Lane wrote:

> Jeff Janes <jeff(dot)janes(at)gmail(dot)com <javascript:;>> writes:

> > I now see where the cost is coming from. In commit 21a39de5809 (first

> > appearing in 9.2) the "fudge factor" cost estimate for large indexes

> > was increased by about 10 fold, which really hits this index hard.

>

> > This was fixed in commit bf01e34b556 "Tweak genericcostestimate's

> > fudge factor for index size", by changing it to use the log of the

> > index size. But that commit probably won't be shipped until 9.3.

>

> Hm. To tell you the truth, in October I'd completely forgotten about

> the January patch, and was thinking that the 1/10000 cost had a lot

> of history behind it. But if we never shipped it before 9.2 then of

> course that idea is false. Perhaps we should backpatch the log curve

> into 9.2 --- that would reduce the amount of differential between what

> 9.2 does and what previous branches do for large indexes.

>

I think we should backpatch it for 9.2.3. I've seen another email which is

probably due to the same issue (nested loop vs hash join). And some

monitoring of a database I am responsible for suggests it might be heading

in that direction as well as the size grows.

But I am wondering if it should be present at all in 9.3. When it was

introduced, the argument seemed to be that smaller indexes might be easier

to keep in cache. And surely that is so. But if a larger index that

covers the same type of queries exists when a smaller one also exists, we

can assume the larger one also exists for a reason. While it may be easier

to keep a smaller index in cache, it is not easier to keep both a larger

and a smaller one in cache as the same time. So it seems to me that this

reasoning is a wash. (Countering this argument is that a partial index is

more esoteric, and so if one exists it is more likely to have been

well-thought out)

The argument for increasing the penalty by a factor of 10 was that the

smaller one could be "swamped by noise such as page-boundary-roundoff

behavior". I don't really know what that means, but it seems to me that if

it is so easily swamped by noise, then it probably isn't so important in

the first place which one it chooses. Whereas, I think that even the log

based penalty has the risk of being too much on large indexes. (For one

thing, it implicitly assumes the fan-out ratio at each level of btree is e,

when it will usually be much larger than e.)

One thing which depends on the index size which, as far as I can tell, is

not currently being counted is the cost of comparing the tuples all the way

down the index. This would be proportional to log2(indextuples) *

cpu_index_tuple_cost, or maybe log2(indextuples) *

(cpu_index_tuple_cost+cpu_operator_cost), or something like that. This

cost would depend on the number index tuples, not baserel tuples, and so

would penalize large indexes. It would be much smaller than the current

log(pages/10000) penalty, but it would be more principle-based rather than

heuristic-based.

The log(pages/10000) change is more suitable for back-patching because it

is more conservative, being asymptotic with the previous behavior at the

low end. But I don't think that the case for that previous behavior was

ever all that strong.

If we really want a heuristic to give a bonus to partial indexes, maybe we

should explicitly give them a bonus, rather than penalizing ordinary

indexes (which penalty is then used in comparing them to hash joins and

such, not just partial indexes).

maybe something like bonus = 0.05 * (reltuples-indextuples)/reltuples

Cheers,

Jeff

>

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PERFORM] Slow query: bitmap scan troubles |

| Date: | 2012-12-19 14:40:54 |

| Message-ID: | CAMkU=1zOHs==DYLeqbOZFfjV=J0BroKmmJc9zgTJN2Aj4c5qYQ@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Tue, Dec 18, 2012 at 5:05 PM, Jeff Janes <jeff(dot)janes(at)gmail(dot)com> wrote:

Sorry for the malformed and duplicate post. I was not trying to be

emphatic; I was testing out gmail offline. Clearly the test didn't go

too well.

Jeff

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PERFORM] Slow query: bitmap scan troubles |

| Date: | 2013-01-05 22:18:16 |

| Message-ID: | 28944.1357424296@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

Jeff Janes <jeff(dot)janes(at)gmail(dot)com> writes:

> [moved to hackers]

> On Wednesday, December 5, 2012, Tom Lane wrote:

>> Hm. To tell you the truth, in October I'd completely forgotten about

>> the January patch, and was thinking that the 1/10000 cost had a lot

>> of history behind it. But if we never shipped it before 9.2 then of

>> course that idea is false. Perhaps we should backpatch the log curve

>> into 9.2 --- that would reduce the amount of differential between what

>> 9.2 does and what previous branches do for large indexes.

> I think we should backpatch it for 9.2.3. I've seen another email which is

> probably due to the same issue (nested loop vs hash join). And some

> monitoring of a database I am responsible for suggests it might be heading

> in that direction as well as the size grows.

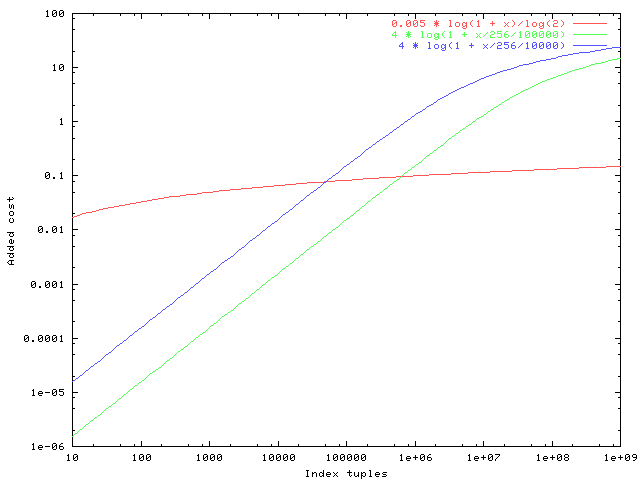

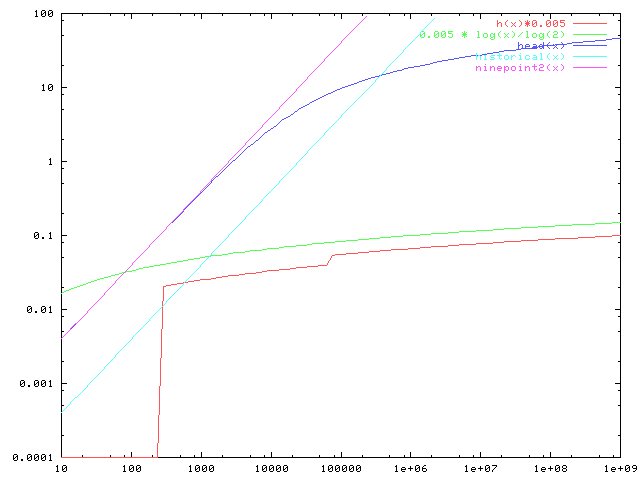

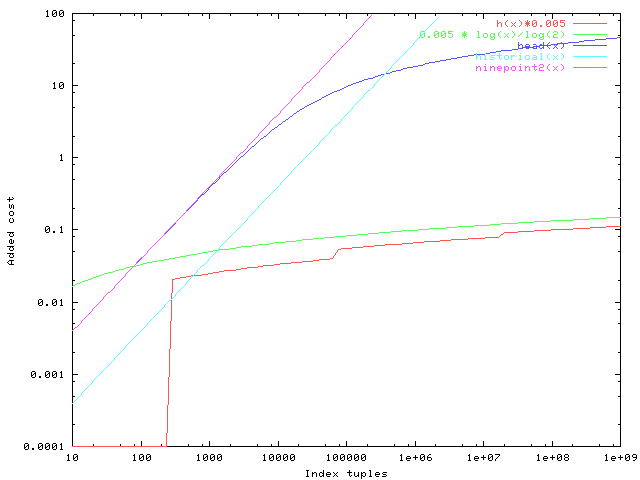

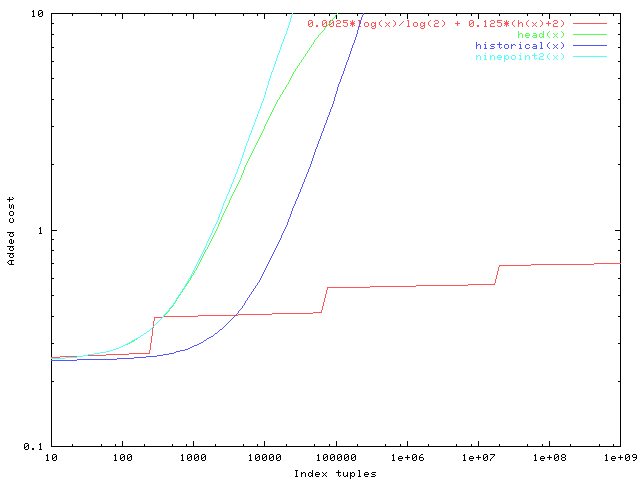

I received an off-list report of a case where not only did the 1/10000

factor cause a nestloop-vs-hashjoin decision to be made wrongly, but

even adding the ln() computation as in commit bf01e34b556 didn't fix it.

I believe the index in question was on the order of 20000 pages, so

it's not too hard to see why this might be the case:

* historical fudge factor 4 * 20000/100000 = 0.8

* 9.2 fudge factor 4 * 20000/10000 = 8.0

* with ln() correction 4 * ln(1 + 20000/10000) = 4.39 or so

At this point I'm about ready to not only revert the 100000-to-10000

change, but keep the ln() adjustment, ie make the calculation be

random_page_cost * ln(1 + index_pages/100000). This would give

essentially the pre-9.2 behavior for indexes up to some tens of

thousands of pages, and keep the fudge factor from getting out of

control even for very very large indexes.

> But I am wondering if it should be present at all in 9.3. When it was

> introduced, the argument seemed to be that smaller indexes might be easier

> to keep in cache.

No. The argument is that if we don't have some such correction, the

planner is liable to believe that different-sized indexes have *exactly

the same cost*, if a given query would fetch the same number of index

entries. This is quite easy to demonstrate when experimenting with

partial indexes, in particular - without the fudge factor the planner

sees no advantage of a partial index over a full index from which the

query would fetch the same number of entries. We do want the planner

to pick the partial index if it's usable, and a fudge factor is about

the least unprincipled way to make it do so.

> The argument for increasing the penalty by a factor of 10 was that the

> smaller one could be "swamped by noise such as page-boundary-roundoff

> behavior".

Yeah, I wrote that, but in hindsight it seems like a mistaken idea.

The noise problem is that because we round off page count and row count

estimates to integers at various places, it's fairly easy for small

changes in statistics to move a plan's estimated cost by significantly

more than this fudge factor will. However, the case that the fudge

factor is meant to fix is indexes that are otherwise identical for

the query's purposes --- and any roundoff effects will be the same.

(The fudge factor itself is *not* rounded off anywhere, it flows

directly to the bottom-line cost for the indexscan.)

> One thing which depends on the index size which, as far as I can tell, is

> not currently being counted is the cost of comparing the tuples all the way

> down the index. This would be proportional to log2(indextuples) *

> cpu_index_tuple_cost, or maybe log2(indextuples) *

> (cpu_index_tuple_cost+cpu_operator_cost), or something like that.

Yeah, I know. I've experimented repeatedly over the years with trying

to account explicitly for index descent costs. But every time, anything

that looks even remotely principled turns out to produce an overly large

correction that results in bad plan choices. I don't know exactly why

this is, but it's true.

One other point is that I think it is better for any such correction

to depend on the index's total page count, not total tuple count,

because otherwise two indexes that are identical except for bloat

effects will appear to have identical costs. So from that standpoint,

the ln() form of the fudge factor seems quite reasonable as a crude form

of index descent cost estimate. The fact that we're needing to dial

it down so much reinforces my feeling that descent costs are close to

negligible in practice.

regards, tom lane

| From: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com> |

|---|---|

| To: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Cc: | pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PERFORM] Slow query: bitmap scan troubles |

| Date: | 2013-01-06 16:29:17 |

| Message-ID: | CAMkU=1zYe4eujgrvBMsZLS8gjZg2hn+eWr_r8D17J-1FanzKog@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Lists: | pgsql-hackers pgsql-performance |

On Saturday, January 5, 2013, Tom Lane wrote:

> Jeff Janes <jeff(dot)janes(at)gmail(dot)com <javascript:;>> writes:

> > [moved to hackers]

> > On Wednesday, December 5, 2012, Tom Lane wrote:

> >> Hm. To tell you the truth, in October I'd completely forgotten about

> >> the January patch, and was thinking that the 1/10000 cost had a lot

> >> of history behind it. But if we never shipped it before 9.2 then of

> >> course that idea is false. Perhaps we should backpatch the log curve

> >> into 9.2 --- that would reduce the amount of differential between what

> >> 9.2 does and what previous branches do for large indexes.

>

> > I think we should backpatch it for 9.2.3. I've seen another email which

> is

> > probably due to the same issue (nested loop vs hash join). And some

> > monitoring of a database I am responsible for suggests it might be

> heading

> > in that direction as well as the size grows.

>

> I received an off-list report of a case where not only did the 1/10000

> factor cause a nestloop-vs-hashjoin decision to be made wrongly, but

> even adding the ln() computation as in commit bf01e34b556 didn't fix it.

> I believe the index in question was on the order of 20000 pages, so

> it's not too hard to see why this might be the case:

>

> * historical fudge factor 4 * 20000/100000 = 0.8

> * 9.2 fudge factor 4 * 20000/10000 = 8.0

> * with ln() correction 4 * ln(1 + 20000/10000) = 4.39 or so

>

> At this point I'm about ready to not only revert the 100000-to-10000

> change, but keep the ln() adjustment, ie make the calculation be

> random_page_cost * ln(1 + index_pages/100000). This would give

> essentially the pre-9.2 behavior for indexes up to some tens of

> thousands of pages, and keep the fudge factor from getting out of

> control even for very very large indexes.

>

Yeah, I agree that even the log function grows too rapidly, especially at

the early stages. I didn't know if a change that changes that asymptote

would be welcome in a backpatch, though.

>

> > But I am wondering if it should be present at all in 9.3. When it was

> > introduced, the argument seemed to be that smaller indexes might be

> easier

> > to keep in cache.

>

> No. The argument is that if we don't have some such correction, the

> planner is liable to believe that different-sized indexes have *exactly

> the same cost*, if a given query would fetch the same number of index

> entries.

But it seems like they very likely *do* have exactly the same cost, unless

you want to take either the CPU cost of descending the index into account,

or take cachebility into account. If they do have the same cost, why

shouldn't the estimate reflect that? Using cpu_index_tuple_cost * lg(#

index tuples) would break the tie, but by such a small amount that it would

easily get swamped by the stochastic nature of ANALYZE for nodes expected

to return more than one row.

> This is quite easy to demonstrate when experimenting with

> partial indexes, in particular - without the fudge factor the planner

> sees no advantage of a partial index over a full index from which the

> query would fetch the same number of entries. We do want the planner

> to pick the partial index if it's usable, and a fudge factor is about

> the least unprincipled way to make it do so.

>

I noticed a long time ago that ordinary index scans seemed to be preferred

over bitmap index scans with the same cost estimate, as best as I could

determine because they are tested first and the tie goes to the first one

(and there is something about it needs to be better by 1% to be counted as

better--although that part might only apply when the start-up cost and the

full cost disagree over which one is best). If I've reconstructed that

correctly, could something similar be done for partial indexes, where they

are just considered first? I guess the problem there is a index scan on a

partial index is not a separate node type from a index scan on a full

index, unlike index vs bitmap.

>

> > The argument for increasing the penalty by a factor of 10 was that the

> > smaller one could be "swamped by noise such as page-boundary-roundoff

> > behavior".

>

> Yeah, I wrote that, but in hindsight it seems like a mistaken idea.

> The noise problem is that because we round off page count and row count

> estimates to integers at various places, it's fairly easy for small

> changes in statistics to move a plan's estimated cost by significantly

> more than this fudge factor will. However, the case that the fudge

> factor is meant to fix is indexes that are otherwise identical for

> the query's purposes --- and any roundoff effects will be the same.

> (The fudge factor itself is *not* rounded off anywhere, it flows

> directly to the bottom-line cost for the indexscan.)

>

OK, and this agrees with my experience. It seemed like it was the

stochastic nature of analyze, not round off problems, that caused the plans

to go back and forth.

>

> > One thing which depends on the index size which, as far as I can tell, is

> > not currently being counted is the cost of comparing the tuples all the

> way

> > down the index. This would be proportional to log2(indextuples) *

> > cpu_index_tuple_cost, or maybe log2(indextuples) *

> > (cpu_index_tuple_cost+cpu_operator_cost), or something like that.

>

> Yeah, I know. I've experimented repeatedly over the years with trying

> to account explicitly for index descent costs. But every time, anything

> that looks even remotely principled turns out to produce an overly large

> correction that results in bad plan choices. I don't know exactly why

> this is, but it's true.

>

log2(indextuples) * cpu_index_tuple_cost should produce pretty darn small

corrections, at least if cost parameters are at the defaults. Do you

remember if that one of the ones you tried?

>

> One other point is that I think it is better for any such correction

> to depend on the index's total page count, not total tuple count,

> because otherwise two indexes that are identical except for bloat

> effects will appear to have identical costs.

This isn't so. A bloated index will be estimated to visit more pages than

an otherwise identical non-bloated index, and so have a higher cost.

jeff=# create table bar as select * from generate_series(1,1000000);

jeff=# create index foo1 on bar (generate_series);

jeff=# create index foo2 on bar (generate_series);

jeff=# delete from bar where generate_series %100 !=0;

jeff=# reindex index foo1;